-

How Does The LSTM Network Solve The Vanishing Gradient Problem?

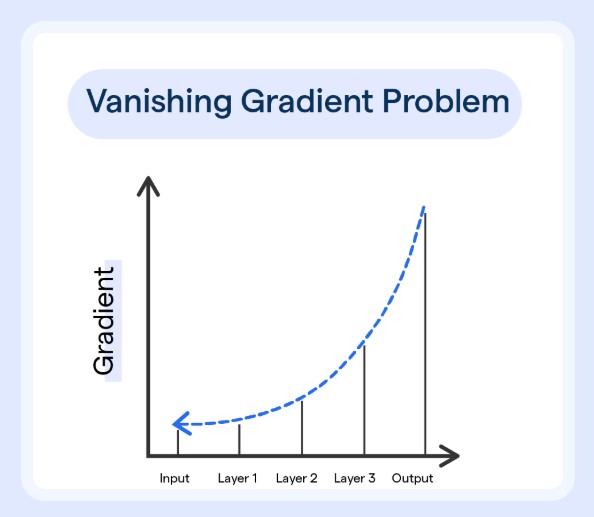

How Are Vanishing Gradient Problems Solved By The LSTM? Table Of Contents: What Is The Vanishing Gradient Problem? Why Does It Occur In RNNs? (1) What Is The Vanishing Gradient Problem? The vanishing gradient problem is a challenge that occurs during the training of deep neural networks, particularly networks with many layers. It arises when the gradients of the loss function, which are used to update the network’s weights, become extremely small as they are backpropagated through the layers of the network. This is the gradient calculation with only one layer. With only one layer you can see that three terms
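The shrinking effect described in this excerpt can be illustrated with a small numeric sketch. It is not taken from the original post: it assumes a chain of sigmoid layers where every layer has the same hypothetical weight and pre-activation value, so each layer contributes the same factor `weight * sigmoid'(z)` to the chain rule. The function name `backprop_factor` and its parameters are made up for illustration.

```python
import math


def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum value is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def backprop_factor(num_layers, weight=1.0, pre_activation=0.0):
    """Product of the per-layer chain-rule factors weight * sigmoid'(z).

    Assumption: every layer shares the same weight and pre-activation,
    which is a simplification chosen only to make the trend visible.
    """
    factor = 1.0
    for _ in range(num_layers):
        factor *= weight * sigmoid_grad(pre_activation)
    return factor


# The gradient reaching the first layer shrinks as layers are added.
for n in (1, 5, 10, 20):
    print(f"{n:2d} layers -> gradient factor {backprop_factor(n):.3e}")
```

Because each factor here is at most 0.25, the product falls off geometrically with depth, which is the behaviour the excerpt calls the vanishing gradient problem.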

-

Forward Pass Of LSTM Network.

Forward Pass Of LSTM Network Syllabus: Forward Pass In LSTM Neural Network. Answer: How The Forward Pass Works: Input Layer: 1st Hidden Layer: Each cell in the LSTM layer outputs two values: the cell state (Ct) and the hidden state (ht). These two values are passed to the next layer as a tuple. Example:
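As a rough illustration of a cell producing both a hidden state and a cell state, here is a minimal sketch of one LSTM step using the standard gate equations. It is not the original post's example: it assumes scalar inputs and states (hidden size 1) to avoid any dependencies, and the function name `lstm_cell_step`, the `params` layout, and the weight values are all hypothetical. The tuple is returned as `(h_t, c_t)`, an ordering chosen here by convention.

```python
import math


def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_cell_step(x_t, h_prev, c_prev, params):
    """One forward step of a scalar LSTM cell.

    params maps each gate name to a tuple (w, u, b): the input weight,
    the recurrent weight, and the bias. Returns the tuple (h_t, c_t).
    """
    def gate(name, activation):
        w, u, b = params[name]
        return activation(w * x_t + u * h_prev + b)

    f_t = gate("forget", sigmoid)        # how much of the old cell state to keep
    i_t = gate("input", sigmoid)         # how much of the candidate to write
    o_t = gate("output", sigmoid)        # how much of the cell state to expose
    g_t = gate("candidate", math.tanh)   # candidate cell state

    c_t = f_t * c_prev + i_t * g_t       # new cell state (Ct)
    h_t = o_t * math.tanh(c_t)           # new hidden state (ht)
    return h_t, c_t


# Hypothetical weights, chosen only for illustration.
params = {
    "forget": (0.5, 0.1, 0.0),
    "input": (0.4, 0.2, 0.0),
    "output": (0.3, 0.1, 0.0),
    "candidate": (0.6, 0.2, 0.0),
}

h, c = 0.0, 0.0  # initial hidden and cell state
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_cell_step(x, h, c, params)
    print(f"x={x:+.1f} -> h_t={h:+.4f}, c_t={c:+.4f}")
```

The cell state update is additive (`f_t * c_prev + i_t * g_t`), so when the forget gate is close to 1 the previous cell state passes through almost unchanged from one step to the next.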