Interview Questions on Linear Regression

Table Of Contents:

- What is linear regression?

- Explain the assumptions of a linear regression model.

- What is the difference between simple and multiple linear regression?

- What is multicollinearity, and how do you detect it?

- What are residuals in linear regression?

- What is the cost function used in linear regression?

- How do you find the optimal parameters in linear regression?

- Explain the formula for the regression line.

- What is R-squared?

- What is the adjusted R-squared, and why is it important?

- How do you handle categorical variables in linear regression?

- What would you do if your model shows heteroscedasticity?

- How do you deal with multicollinearity in multiple linear regression?

- What are the common metrics to evaluate a linear regression model?

- What is overfitting, and how can it be prevented in linear regression?

- What would you do if your model has a low R-squared value?

- How would you explain the results of a linear regression to a non-technical stakeholder?

- When would you not use linear regression?

- What is the role of the p-value in linear regression?

- What is the difference between Ridge and Lasso regression?

- What is the difference between correlation and regression?

- How does regularization help in linear regression?

- Can linear regression be used for classification problems?

- Why is Ordinary Least Squares (OLS) used for parameter estimation in linear regression?

- What are the limitations of using R-squared as a performance metric?

- What is the Gauss-Markov theorem?

- Why do we assume normality of residuals in linear regression?

- What happens if the residuals are not independent?

- What if the relationship between the predictors and the dependent variable is non-linear?

- What would you do if you detect heteroscedasticity in your residuals?

- How do you test for multicollinearity, and why is it a problem in linear regression?

- What would happen if you include irrelevant predictors in your model?

- What does it mean if your residuals show a pattern when plotted against fitted values?

- Explain the impact of outliers on linear regression. How would you handle them?

- How would you deal with missing values in your dataset before applying linear regression?

- When performing feature selection for a regression model, how do you decide which variables to include?

- How would you evaluate a linear regression model if the data has a time-series nature?

- What is the interpretation of coefficients in a multiple linear regression model?

- What does a negative coefficient in a regression model mean?

- What does it mean if your intercept is negative, and is it always meaningful?

- What happens if the number of predictors is greater than the number of observations?

- How would you handle categorical predictors with many levels in a linear regression model?

- How do you handle the situation where your independent variables are on vastly different scales?

- Can you use linear regression to model probabilities? Why or why not?

- Why is gradient descent not commonly used in linear regression?

- What is the impact of regularization on the coefficients of a linear regression model?

- How do you determine the optimal regularization parameter (e.g., α in Ridge or Lasso)?

- Explain the trade-off between bias and variance in linear regression.

- How would you apply linear regression in the presence of multicollinearity in real-world data?

- How would you explain linear regression to a non-technical stakeholder?

- What would you do if your regression model has high R-squared but performs poorly on unseen data?

(1) What is linear regression?

- Linear regression is a statistical method for modeling the relationship between a dependent (target) variable and one or more independent (predictor) variables.

- It finds the best-fitting linear equation for predicting the dependent variable from the independent variables, where "best-fitting" usually means minimizing the sum of squared differences between the observed and predicted values.
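As a minimal sketch of this idea, the snippet below fits a straight line to one predictor using the standard least-squares formulas (slope = covariance of x and y divided by the variance of x). The function name `fit_line` and the hours/scores data are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys):
    """Return the least-squares (slope, intercept) for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation of x and y, and variation of x, around their means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied (predictor) vs. exam score (target)
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

slope, intercept = fit_line(hours, scores)
predicted = intercept + slope * 6.0  # predicted score for 6 hours of study
print(slope, intercept, predicted)
```

Because the made-up data lie exactly on a line, the fitted equation reproduces it; with real, noisy data the line would only approximate the points.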

(2) Explain the assumptions of a linear regression model.

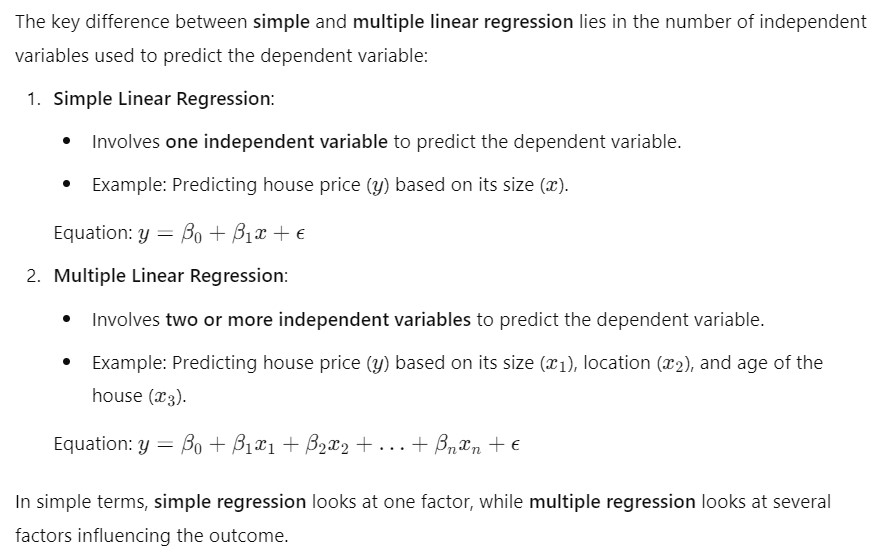

(3) What is the difference between simple and multiple linear regression?

(4) What is multicollinearity, and how do you detect it?

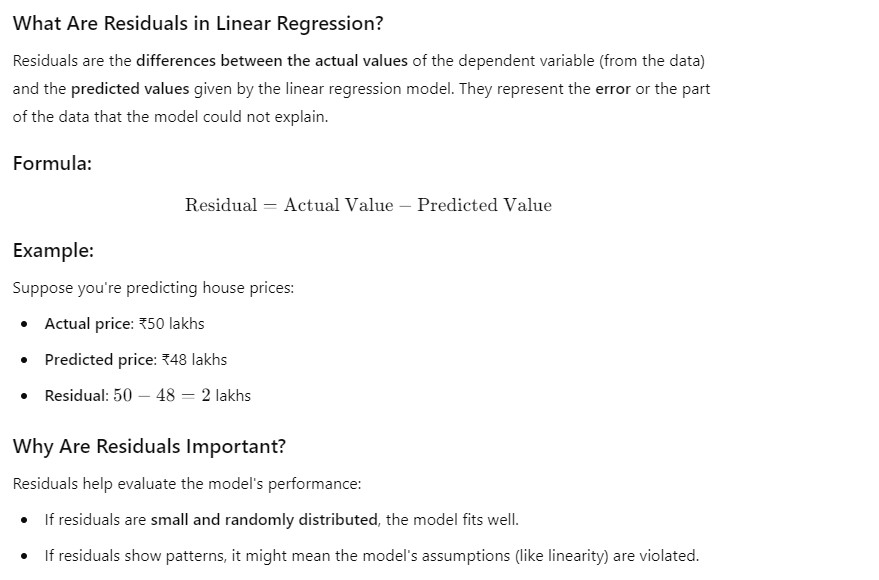

(5) What are residuals in linear regression?

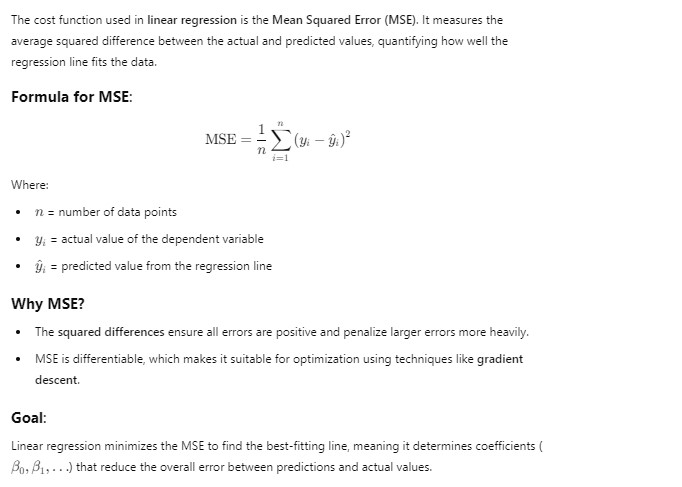

(6) What is the cost function used in linear regression?

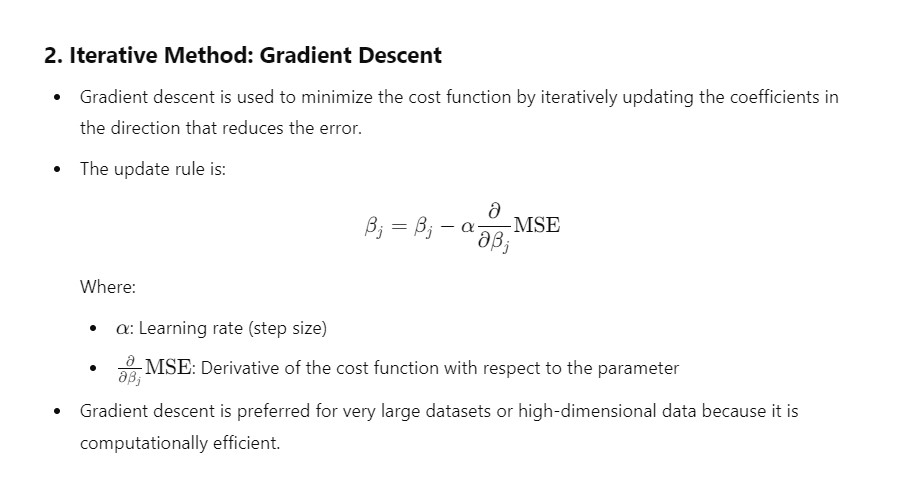

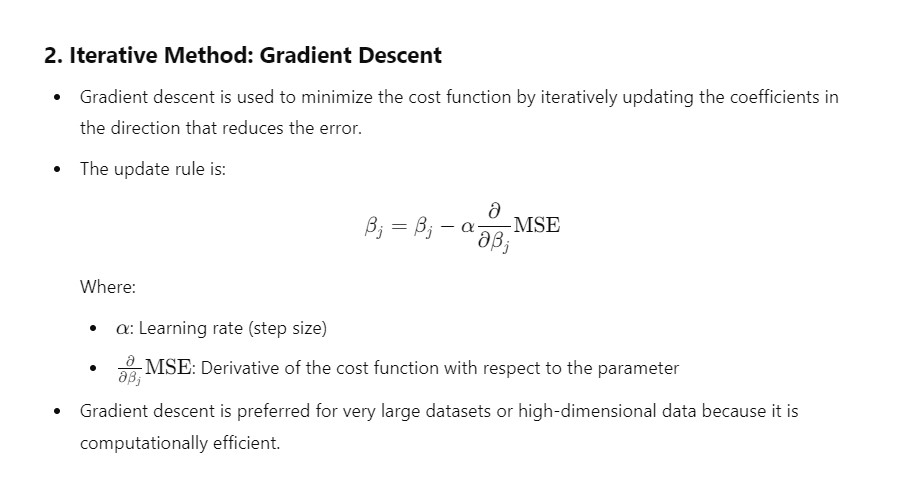
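The answer to this question is given only as images. As a hedged sketch, assuming the cost function meant is the mean squared error (MSE), the most common choice for linear regression, it can be computed as below. Some texts divide by 2n instead of n, which does not change the minimizing parameters. The function name and the data are illustrative.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between actual and predicted values."""
    n = len(y_true)
    return sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred)) / n


# Hypothetical actual and predicted values
actual = [3.0, 5.0, 7.0]
predicted = [2.0, 5.0, 9.0]

mse = mean_squared_error(actual, predicted)  # (1 + 0 + 4) / 3
print(mse)
```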

(7) How do you find the optimal parameters in linear regression?

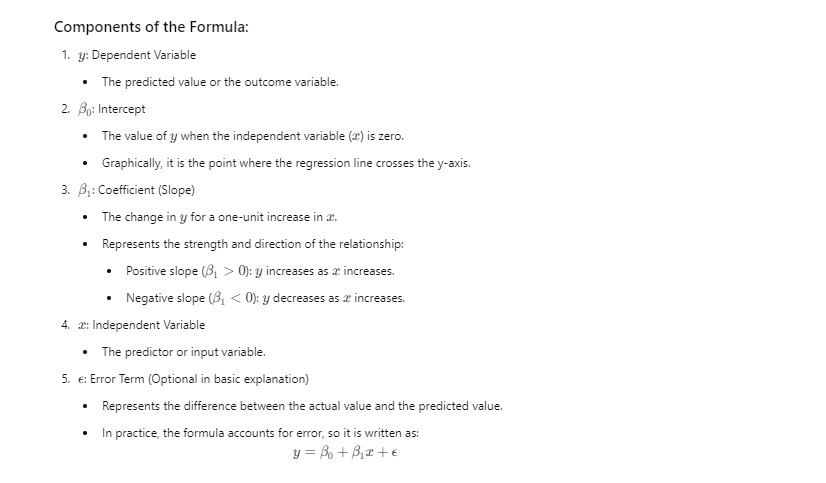
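The answer to this question is given only as an image. One common way to find the parameters is to minimize the mean squared error iteratively with gradient descent; the closed-form least-squares solution is the usual alternative. The sketch below assumes a single predictor, and the learning rate, step count, and data are illustrative choices, not values from the source.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=5000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    n = len(xs)
    w, b = 0.0, 0.0  # start from zero parameters
    for _ in range(steps):
        # Prediction error for every observation under the current w, b
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = gradient_descent(xs, ys)
print(round(w, 4), round(b, 4))
```

For small problems the closed-form solution is usually preferred; gradient descent becomes attractive when the number of observations or predictors is very large.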

(8) Explain the formula for the regression line.

(9) What is R-squared?

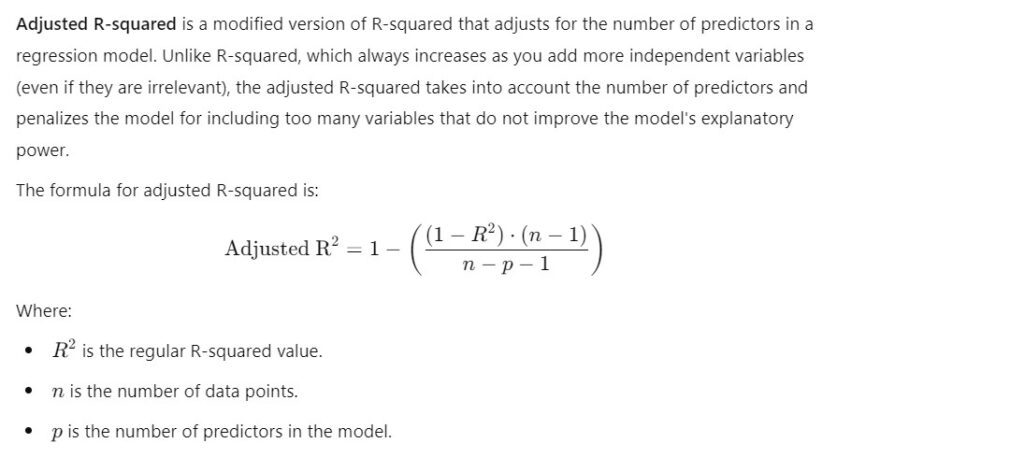
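The answer to this question is given only as an image. As a sketch under the standard definition, R-squared is one minus the ratio of the residual sum of squares to the total sum of squares. The function name and data below are illustrative.

```python
def r_squared(y_true, y_pred):
    """Proportion of the variance in y_true explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    # Variation left unexplained by the model
    ss_res = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))
    # Total variation of the target around its mean
    ss_tot = sum((yt - mean_y) ** 2 for yt in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical actual and predicted values
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 3.5]

r2 = r_squared(actual, predicted)
print(r2)
```

A value near 1 means the predictions track the target closely; a value near 0 means the model does little better than always predicting the mean.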

(10) What is the adjusted R-squared, and why is it important?