AWS – How To Deploy An ML Model To A SageMaker Endpoint Using A Prebuilt Container?

Table Of Contents:

- Set Up AWS & Install Dependencies.

- Train & Save The Model.

- Create The Inference.py File.

- Upload The Model To S3.

- Set Up The SageMaker Session & IAM Role.

- Create A SageMaker Model Using The Prebuilt Container.

- Deploy The Model To A SageMaker Endpoint.

- Make Predictions Using The Endpoint.

- Cleanup The Resources.

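(1) Set Up AWS & Install Dependencies.

AWS Dependencies:

- Ensure You Have The Following Dependencies Installed:

  - An AWS account with SageMaker, S3, and ECR permissions.

  - Docker installed (check with `docker --version`). It is only needed for local testing with SageMaker local mode; the prebuilt container is pulled by SageMaker itself.

  - AWS CLI configured (`aws configure`).

  - Boto3 and the SageMaker Python SDK installed.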

Python Libraries:

- Install the Python libraries used to build, package, and deploy the model:

```bash
pip install boto3 sagemaker scikit-learn pandas numpy flask gunicorn
```

(2) Train & Save The Model.

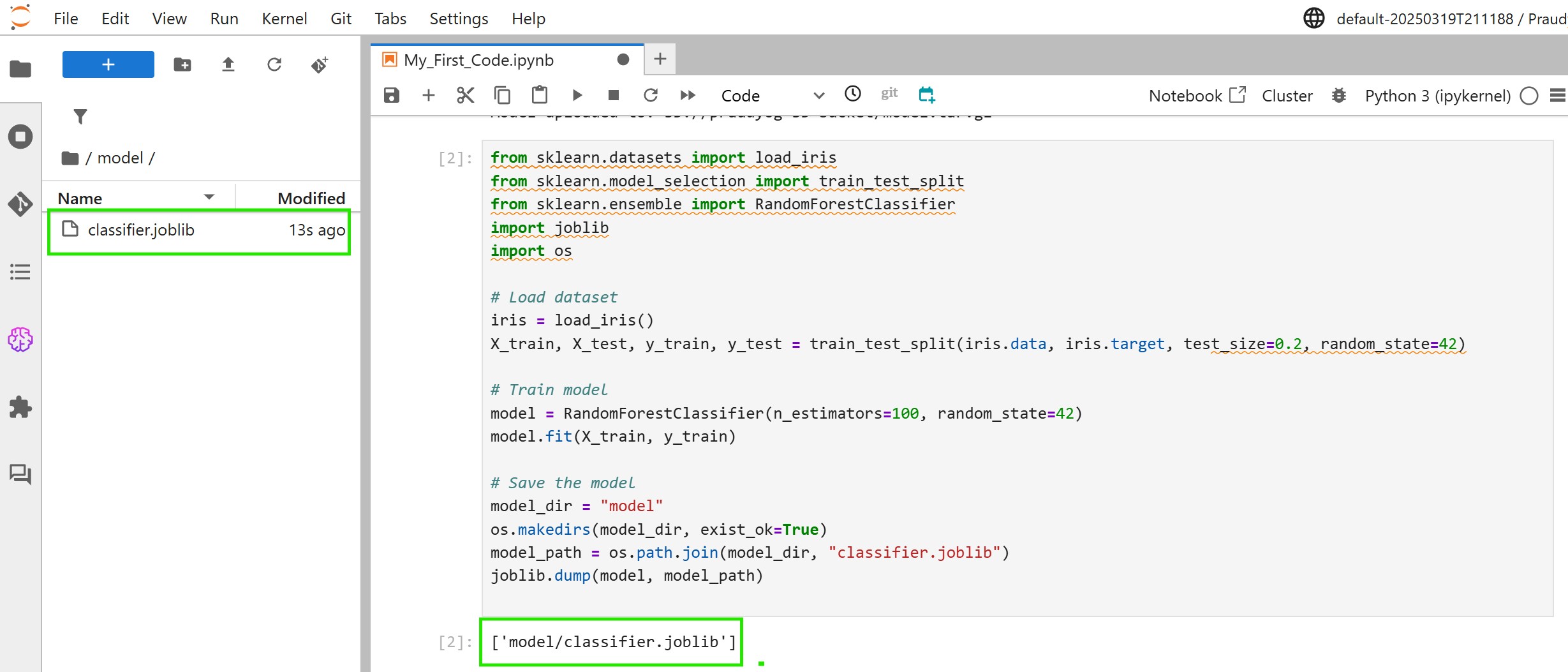

- For this example, we’ll use the Iris dataset to train a basic classifier.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
import joblib
import os

# Load dataset
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2, random_state=42)

# Train model
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Save the model
model_dir = "model"
os.makedirs(model_dir, exist_ok=True)
model_path = os.path.join(model_dir, "classifier.joblib")
joblib.dump(model, model_path)
```
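Before packaging the model, it is worth checking two things. First, confirm that it scores sensibly on the held-out split. Second, confirm that the saved file loads back into an identical model, because a `joblib.load` call on that file is what will run inside the endpoint. The sketch below repeats the training code so it runs on its own, and it writes to a temporary directory instead of `model/`:

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Same data split and model settings as above
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Accuracy on the 20% hold-out split
accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.3f}")

# Round-trip through joblib, as the endpoint will do when it loads the file
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "classifier.joblib")
    joblib.dump(model, path)
    reloaded = joblib.load(path)

# The reloaded model should make exactly the same predictions
same_predictions = np.array_equal(model.predict(X_test), reloaded.predict(X_test))
print("Reloaded model matches:", same_predictions)
```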

(3) Create Inference.py File

- The prebuilt Scikit-Learn container runs its own model server, which already answers SageMaker's /ping and /invocations requests, so inference.py does not need a Flask app of its own. The container imports the script and calls model_fn(model_dir) once at startup. Here model_dir is /opt/ml/model, the directory the model archive is extracted into. predict_fn is optional; without it, the container calls model.predict on the decoded request.

```python
import os

import joblib


def model_fn(model_dir):
    """Load the model once when the container starts.

    SageMaker extracts the model .tar.gz into model_dir (/opt/ml/model).
    """
    model_path = os.path.join(model_dir, "classifier.joblib")
    if not os.path.exists(model_path):
        raise FileNotFoundError(f"Model file not found at {model_path}")
    return joblib.load(model_path)


def predict_fn(input_data, model):
    """Run inference on the request, already decoded into a NumPy array
    by the container's default input handler (JSON, CSV or NPY payloads)."""
    return model.predict(input_data)
```

(4) Upload Model to S3

- SageMaker loads models from Amazon S3 as a .tar.gz archive, so compress the model file (together with inference.py) and upload the archive to your bucket.

import boto3

import tarfile

import os

# Define S3 bucket and model path

s3_bucket = "praudyog-s3-bucket" # Change this to your S3 bucket

s3_prefix = "iris-model"

local_model_path = "model/classifier.joblib"

tar_model_path = "classifier.tar.gz"

inference_script_path = "inference.py"

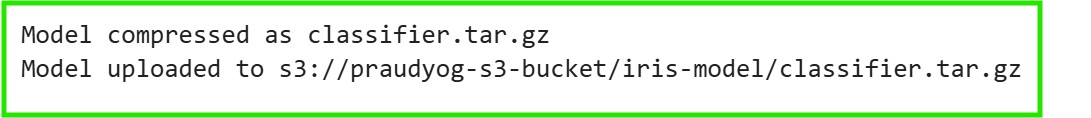

# Step 1: Compress the joblib model into a .tar.gz file

with tarfile.open(tar_model_path, "w:gz") as tar:

tar.add(local_model_path, arcname="classifier.joblib")

tar.add(inference_script_path, arcname="inference.py")

print(f"Model compressed as {tar_model_path}")

# Step 2: Upload the compressed model to S3

s3_client = boto3.client("s3")

s3_model_path = f"s3://{s3_bucket}/{s3_prefix}/classifier.tar.gz"

s3_client.upload_file(tar_model_path, s3_bucket, f"{s3_prefix}/classifier.tar.gz")

print(f"Model uploaded to {s3_model_path}")

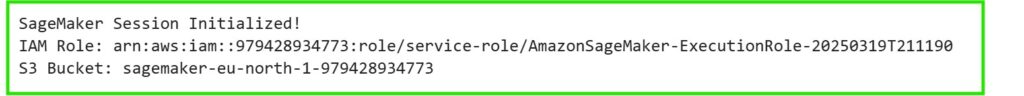
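SageMaker extracts the archive directly into /opt/ml/model. That means classifier.joblib has to sit at the top level of the tarball rather than inside a model/ folder. A small standard-library check can catch a wrong `arcname` before you deploy. The helper name `check_model_archive` is hypothetical and not part of any AWS library:

```python
import tarfile


def check_model_archive(tar_path, required=("classifier.joblib",)):
    """Return the member names of a .tar.gz model archive.

    Hypothetical helper: raises ValueError if any required file is not at
    the archive root (i.e. would not land directly in /opt/ml/model).
    """
    with tarfile.open(tar_path, "r:gz") as tar:
        names = [member.name for member in tar.getmembers()]
    # Treat "./classifier.joblib" the same as "classifier.joblib"
    root_names = {name[2:] if name.startswith("./") else name for name in names}
    missing = [name for name in required if name not in root_names]
    if missing:
        raise ValueError(f"{tar_path} is missing {missing} at the archive root; found {names}")
    return names
```

For the archive built above, `check_model_archive("classifier.tar.gz", required=("classifier.joblib", "inference.py"))` should return both file names.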

(5) Set Up SageMaker Session & IAM Role in Python

- Set up a SageMaker session in Python:

```python
import boto3
import sagemaker
from sagemaker import get_execution_role

# Start a SageMaker session
sagemaker_session = sagemaker.Session()

# Get the IAM execution role. get_execution_role() only works inside SageMaker
# (Studio or a notebook instance); elsewhere, set role to your IAM role ARN string.
role = get_execution_role()

# Get the default S3 bucket for SageMaker
bucket = sagemaker_session.default_bucket()

print("SageMaker Session Initialized!")
print("IAM Role:", role)
print("S3 Bucket:", bucket)
```
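Outside SageMaker (for example, on a laptop using `aws configure` credentials), `get_execution_role()` raises a `ValueError` because the current identity is not a role. One way to keep the same script working in both places is to fall back to a role ARN read from an environment variable. This is a sketch only: the helper `resolve_execution_role` and the variable name `SAGEMAKER_ROLE_ARN` are assumptions, not SageMaker conventions.

```python
import os


def resolve_execution_role(get_role, env_var="SAGEMAKER_ROLE_ARN"):
    """Return a SageMaker execution role ARN (hypothetical helper).

    get_role is a zero-argument callable such as sagemaker.get_execution_role.
    If it raises ValueError, fall back to the ARN in the (assumed) env_var.
    """
    try:
        return get_role()
    except ValueError:
        arn = os.environ.get(env_var)
        if not arn:
            raise  # no fallback configured: re-raise the original ValueError
        return arn
```

Usage: `role = resolve_execution_role(get_execution_role)`, after exporting `SAGEMAKER_ROLE_ARN` with your role's ARN when running outside SageMaker.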

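(6) Create a SageMaker Model Using the Prebuilt Container

- Since you’re using SageMaker’s prebuilt Scikit-Learn container, you only need to look up its image URI for your region. Choose a container version whose scikit-learn release is compatible with the one you trained with locally. The container unpickles classifier.joblib, and joblib files are not guaranteed to load across scikit-learn versions.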

```python
import sagemaker
from sagemaker.sklearn.model import SKLearnModel

# sagemaker_session and role come from the previous step

# Define the model location in S3 (uploaded in step 4)
model_s3_path = "s3://praudyog-s3-bucket/iris-model/classifier.tar.gz"

# Get the Scikit-Learn container URI for your AWS region.
# Match the version to your local scikit-learn (for example "1.2-1" for scikit-learn 1.2).
container = sagemaker.image_uris.retrieve("sklearn", sagemaker_session.boto_region_name, version="0.23-1")

# Create a SageMaker model
model = SKLearnModel(
    model_data=model_s3_path,
    role=role,
    image_uri=container,  # SageMaker's prebuilt container
    entry_point="inference.py",  # Script that defines model_fn for the container
    sagemaker_session=sagemaker_session,
)
```
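To pick the version passed to `image_uris.retrieve`, you need to know which scikit-learn (and joblib/NumPy) versions produced classifier.joblib. Here is a small sketch using only the standard library's `importlib.metadata`; the helper name `installed_version` is hypothetical:

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Distribution names as published on PyPI
for package in ("scikit-learn", "joblib", "numpy"):
    print(f"{package}: {installed_version(package) or 'not installed'}")
```

Compare the scikit-learn line with the scikit-learn versions of the available SageMaker Scikit-Learn container releases, and pass the matching release as `version`.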

(7) Deploy the Model as a SageMaker Endpoint

```python
predictor = model.deploy(
    instance_type="ml.c5.xlarge",  # Choose the instance type
    initial_instance_count=1,
)
```

(8) Make Predictions Using the Endpoint

```python
import numpy as np

# Sample input data (modify as needed)
test_data = np.array([[5.1, 3.5, 1.4, 0.2]])

# Make a prediction
response = predictor.predict(test_data)
print("Prediction:", response)
```
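`predictor.predict` only works where the SageMaker SDK and the `predictor` object are available. Other applications, such as a Lambda function or a web backend, can call the same endpoint through the boto3 `sagemaker-runtime` client's `invoke_endpoint`. The prebuilt container's default handlers accept a JSON list of rows and, with `Accept` set to `application/json`, return the predictions as JSON.

When you are finished, delete the model and the endpoint so the instance stops incurring charges. `predictor.delete_model()` looks up the model names through the endpoint's configuration, so it is called before `predictor.delete_endpoint()`. The helper names `predict_via_runtime` and `cleanup` are hypothetical; the client and predictor methods they call are the real boto3 and SageMaker SDK ones.

```python
import json


def predict_via_runtime(runtime_client, endpoint_name, rows):
    """Send rows (a list of feature lists) to the endpoint as JSON and
    return the decoded predictions.

    runtime_client is a boto3 "sagemaker-runtime" client.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(rows),
    )
    # response["Body"] is a streaming body; read it and decode the JSON payload
    return json.loads(response["Body"].read())


def cleanup(predictor):
    """Delete the SageMaker model(s) behind the endpoint, then the endpoint
    (and, by default, its endpoint configuration)."""
    predictor.delete_model()
    predictor.delete_endpoint()
```

For example, with `runtime = boto3.client("sagemaker-runtime")`, call `predict_via_runtime(runtime, predictor.endpoint_name, [[5.1, 3.5, 1.4, 0.2]])`, then run `cleanup(predictor)` when done. The archive uploaded in step (4) stays in S3 until you delete it, for example with `s3_client.delete_object(Bucket=s3_bucket, Key=f"{s3_prefix}/classifier.tar.gz")`.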