GenAI – How To Optimize The Query Preprocessing & Embedding Component?

Table Of Contents:

- What Is The Query Preprocessing & Embedding Layer?

- Where Can Latency Happen?

- How To Reduce Latency

(1) What Is The Query Preprocessing & Embedding Layer?

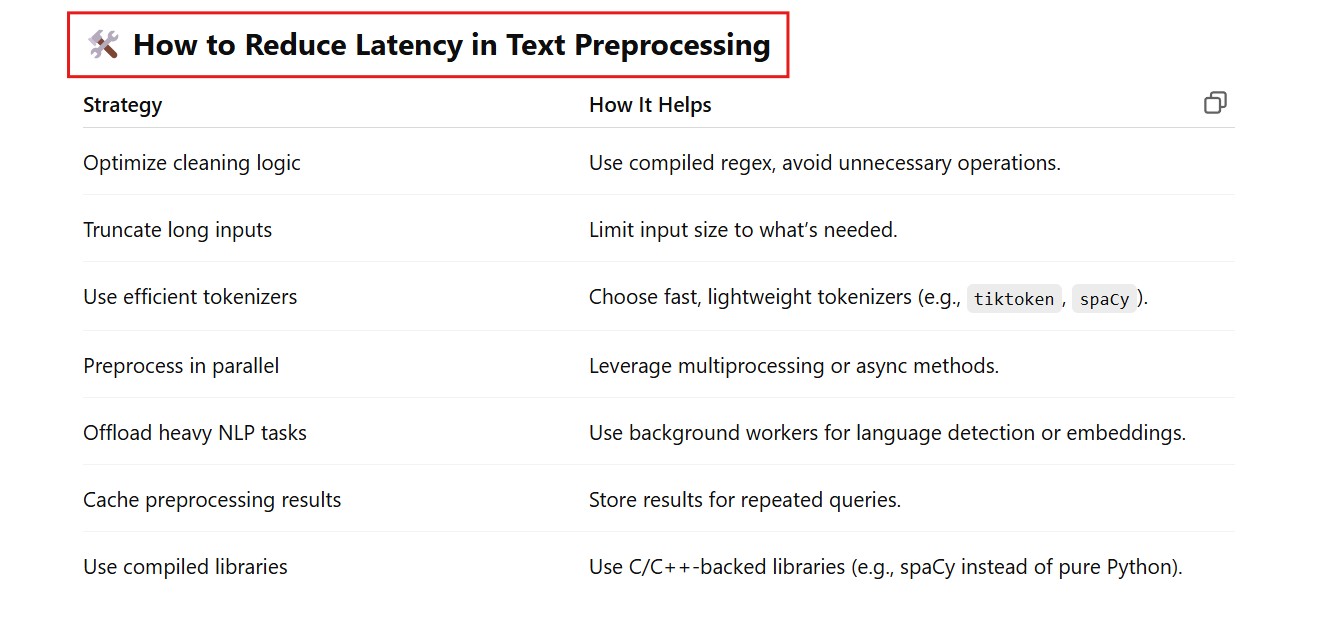

(2) How Can Text Preprocessing Add Latency In The Process?

What Is A Compiled Regex?

Example-1:

    import re

    # Compile the regex pattern once.
    pattern = re.compile(r'\W+')

    # Reuse the compiled pattern.
    clean_text = pattern.sub(' ', "This is @ a sample # text")
    print(clean_text)

Output:

    This is a sample text

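Example-2:

    import re

    # Compiled once at module load; matches any character
    # that is not an ASCII letter or whitespace.
    non_alpha_pattern = re.compile(r'[^a-zA-Z\s]')

    def preprocess_text(text):
        text = text.lower()
        text = non_alpha_pattern.sub('', text)
        return text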

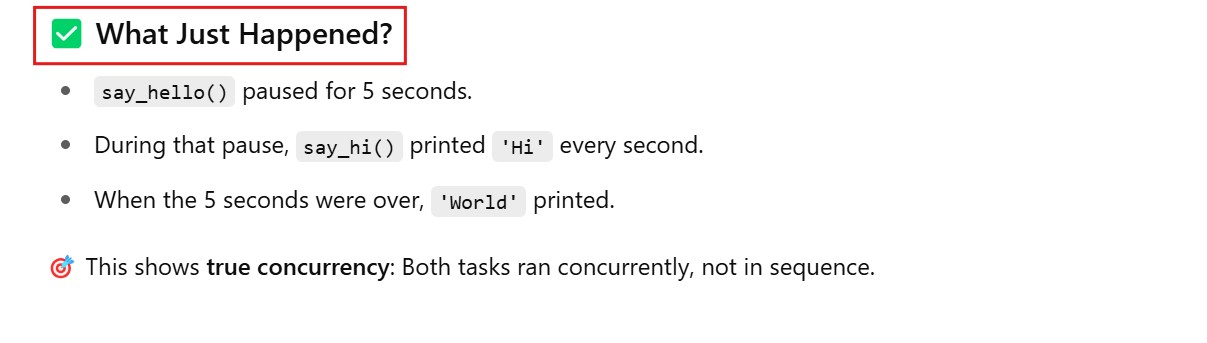
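To get a feel for how much a compiled pattern actually saves, the two approaches can be timed side by side. The sketch below is only an illustration: `SAMPLE`, `clean_uncompiled`, and `clean_compiled` are hypothetical names, not part of the original pipeline. Python's `re` module keeps an internal cache of recently compiled patterns, so the gain is often modest and depends on how many distinct patterns and calls the workload has.

```python
import re
import timeit

# Hypothetical sample query, used only for this illustration.
SAMPLE = "This is @ a sample # text"

# Compiled once at import time and reused on every call.
NON_WORD = re.compile(r"\W+")


def clean_uncompiled(text: str) -> str:
    # re.sub has to look the pattern string up in the re module's
    # internal cache on every call before it can run the substitution.
    return re.sub(r"\W+", " ", text)


def clean_compiled(text: str) -> str:
    # Calls the precompiled pattern object directly.
    return NON_WORD.sub(" ", text)


if __name__ == "__main__":
    n = 50_000
    t_uncompiled = timeit.timeit(lambda: clean_uncompiled(SAMPLE), number=n)
    t_compiled = timeit.timeit(lambda: clean_compiled(SAMPLE), number=n)
    print(f"re.sub on every call: {t_uncompiled:.3f}s")
    print(f"compiled pattern    : {t_compiled:.3f}s")
```

Both functions return the same cleaned string; only the per-call overhead differs, so it is worth measuring on real queries before assuming a large win.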

What Is An async Function?

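    import asyncio

    async def say_hello():
        print('Hello')
        await asyncio.sleep(5)
        print('World')

    async def say_hi():
        for i in range(5):
            print('Hi')
            await asyncio.sleep(1)

    async def main():
        # Run both coroutines concurrently on the same event loop.
        await asyncio.gather(say_hello(), say_hi())

    # In a script, start the event loop with asyncio.run().
    # In a Jupyter notebook, use `await main()` instead.
    asyncio.run(main())

Output:

    Hello
    Hi
    Hi
    Hi
    Hi
    Hi
    World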

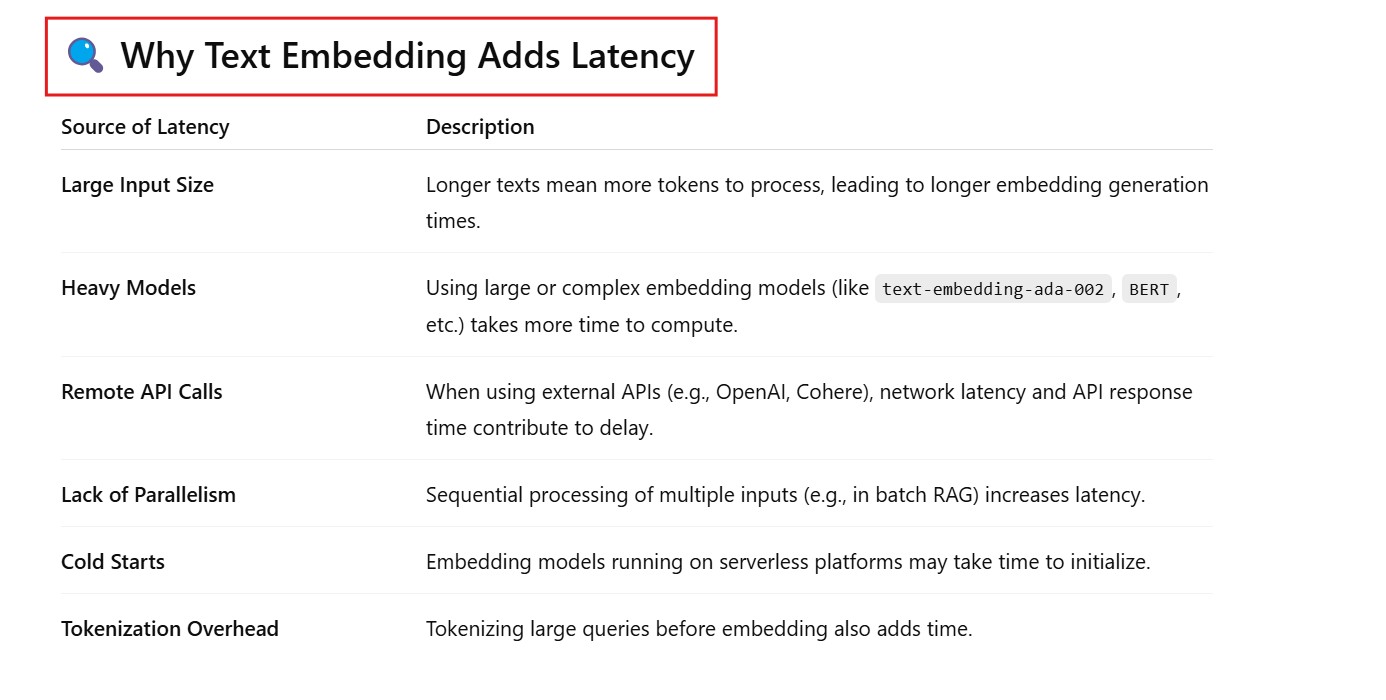

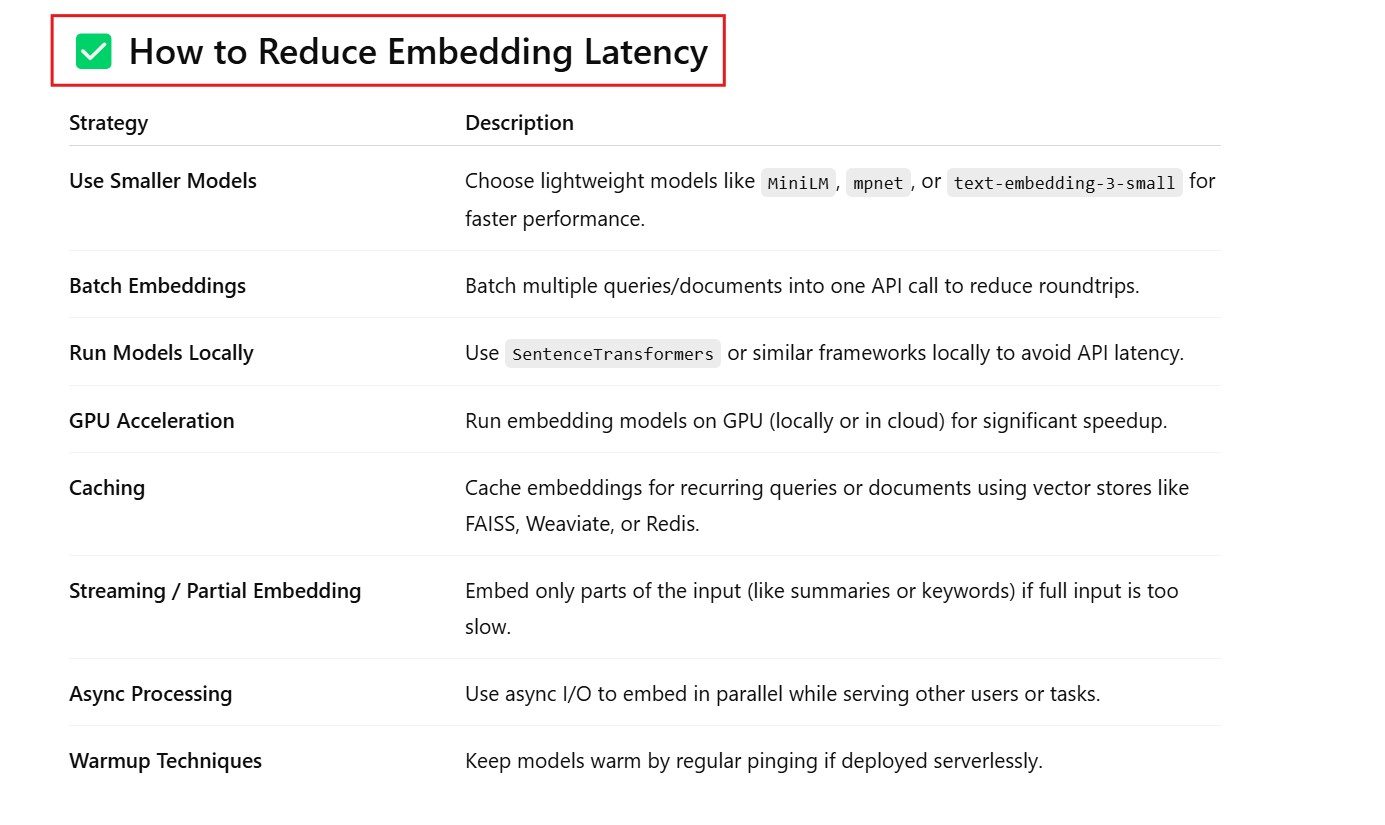
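The same idea can be applied to the query pipeline: while one query is waiting on an I/O-bound step, the event loop can start working on the next one. The sketch below is a minimal illustration under stated assumptions. `fake_embed` is a hypothetical stand-in that simulates a network call with `asyncio.sleep`, and `handle_query` / `handle_many` are illustrative names, not part of any library.

```python
import asyncio
import re

# Compiled once; strips everything except ASCII letters and whitespace.
NON_ALPHA = re.compile(r"[^a-zA-Z\s]")


def preprocess_text(text: str) -> str:
    return NON_ALPHA.sub("", text.lower())


async def fake_embed(text: str) -> list[float]:
    # Hypothetical stand-in for an I/O-bound embedding request.
    # A real implementation would await an async HTTP or SDK call here.
    await asyncio.sleep(0.1)
    return [float(len(text))]


async def handle_query(query: str) -> list[float]:
    cleaned = preprocess_text(query)  # fast, CPU-bound step
    return await fake_embed(cleaned)  # slow, I/O-bound step


async def handle_many(queries: list[str]) -> list[list[float]]:
    # gather runs the coroutines concurrently and returns the
    # results in the same order as the inputs.
    return await asyncio.gather(*(handle_query(q) for q in queries))


if __name__ == "__main__":
    queries = ["What is RAG?", "Explain embeddings!", "Hello, World"]
    print(asyncio.run(handle_many(queries)))
```

Note that asyncio only helps when the slow step actually yields to the event loop (network or disk I/O). Pure CPU work such as the regex cleanup still runs one query at a time.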

(3) How Can Text Embedding Add Latency In The Process?

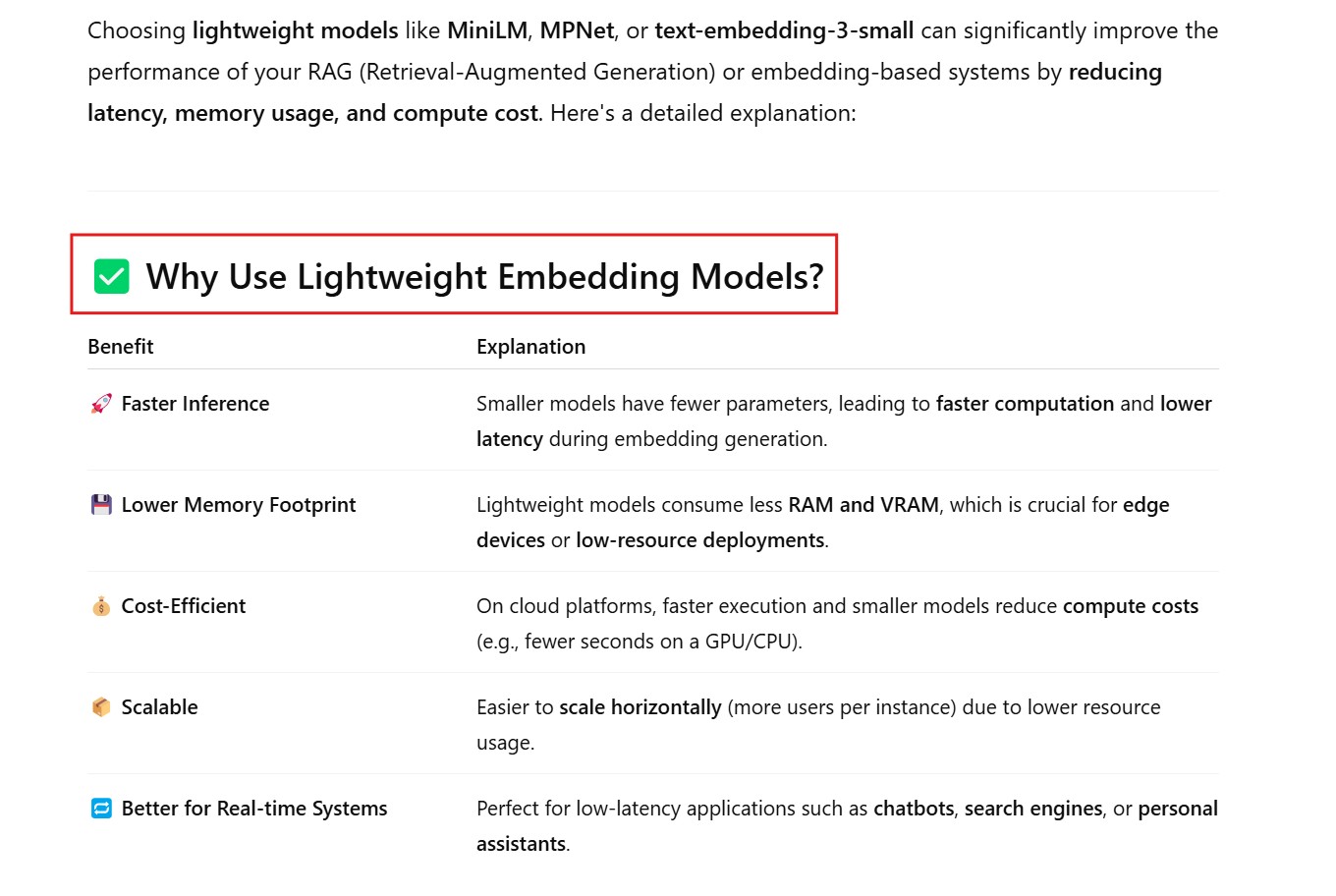

Use A Smaller Model.

Popular Lightweight Models.

    from sentence_transformers import SentenceTransformer
    from openai import OpenAI

    # Sentence-Transformers models (local)
    miniLM_L6_v2 = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')
    miniLM_L12_v2 = SentenceTransformer('sentence-transformers/all-MiniLM-L12-v2')
    multi_qa_miniLM = SentenceTransformer('sentence-transformers/multi-qa-MiniLM-L6-cos-v1')
    distilroberta = SentenceTransformer('sentence-transformers/all-distilroberta-v1')
    paraphrase_miniLM = SentenceTransformer('sentence-transformers/paraphrase-MiniLM-L6-v2')
    distiluse_multilingual = SentenceTransformer('sentence-transformers/distiluse-base-multilingual-cased-v1')

    # E5 models (local), published under the intfloat namespace on Hugging Face
    e5_small_v2 = SentenceTransformer('intfloat/e5-small-v2')
    multilingual_e5_small = SentenceTransformer('intfloat/multilingual-e5-small')

    # ✅ For OpenAI models: embedding via API (cloud-based)
    # Uses the client interface of the openai package (version 1.0 and later).
    client = OpenAI(api_key="YOUR_OPENAI_API_KEY")

    def get_openai_embedding(text, model="text-embedding-3-small"):
        response = client.embeddings.create(
            input=text,
            model=model
        )
        return response.data[0].embedding

    # Example usage:
    text = "Sample input text for embedding"
    embedding = get_openai_embedding(text, model="text-embedding-3-small")  # or use "text-embedding-ada-002"

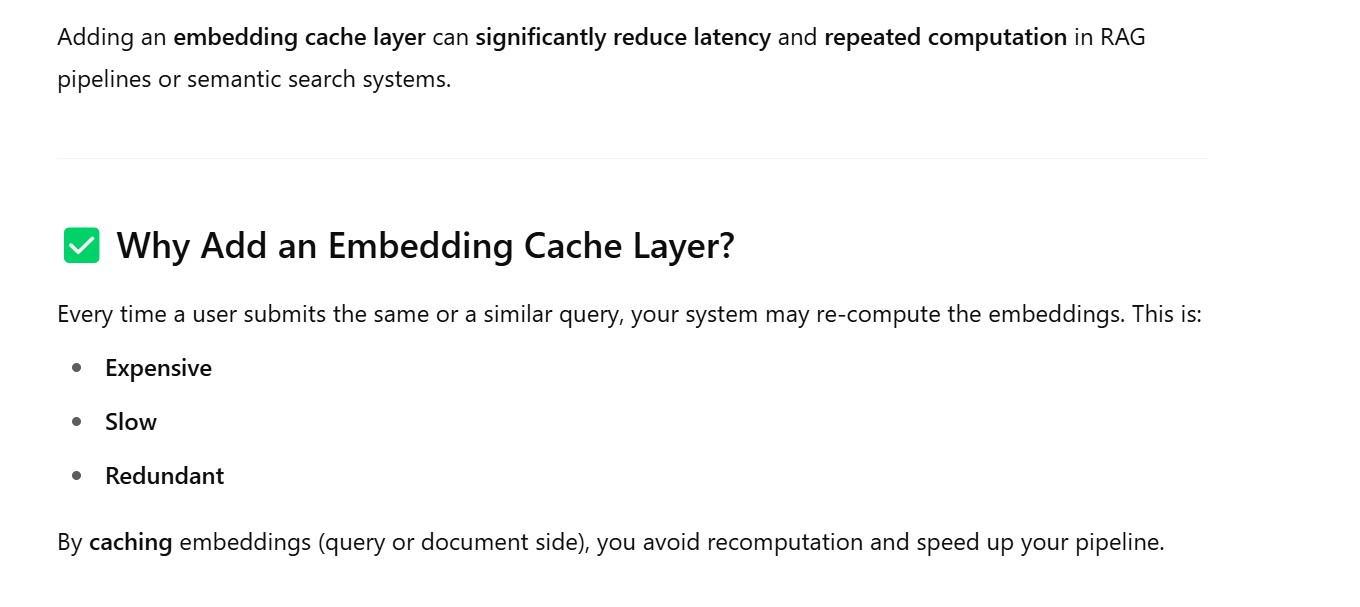

Add An Embedding Cache Layer
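A cache layer avoids recomputing the embedding for a query that has already been seen. The sketch below is one possible shape for such a layer, not a definitive implementation: `EmbeddingCache` and `toy_embed` are hypothetical names, and `toy_embed` is a placeholder for whatever real model or API call produces the vectors. The cache key is a hash of the normalized query, so queries that differ only in case or spacing share one entry.

```python
import hashlib
from typing import Callable


class EmbeddingCache:
    """In-memory cache keyed by a hash of the normalized query text."""

    def __init__(self, embed_fn: Callable[[str], list[float]]):
        self._embed_fn = embed_fn
        self._store: dict[str, list[float]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _normalize(text: str) -> str:
        # Lowercase and collapse runs of whitespace.
        return " ".join(text.lower().split())

    def get(self, text: str) -> list[float]:
        normalized = self._normalize(text)
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: embed the normalized text and remember the result.
        self.misses += 1
        vector = self._embed_fn(normalized)
        self._store[key] = vector
        return vector


def toy_embed(text: str) -> list[float]:
    # Hypothetical placeholder for a real embedding model or API call.
    return [float(len(text)), float(text.count(" "))]


if __name__ == "__main__":
    cache = EmbeddingCache(toy_embed)
    cache.get("What is RAG?")
    cache.get("  what is   rag? ")  # same query after normalization
    print(f"hits={cache.hits} misses={cache.misses}")
```

This version grows without bound and lives in a single process. A production setup would likely need an eviction policy or TTL, and possibly a shared store, depending on traffic.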