AWS – Docker Container

Table Of Contents:

- What Is A Docker Container?

- Problems Faced Without Docker Containers

- Does Docker Solve Build Once & Run Anywhere?

- How Does A Docker Container Work?

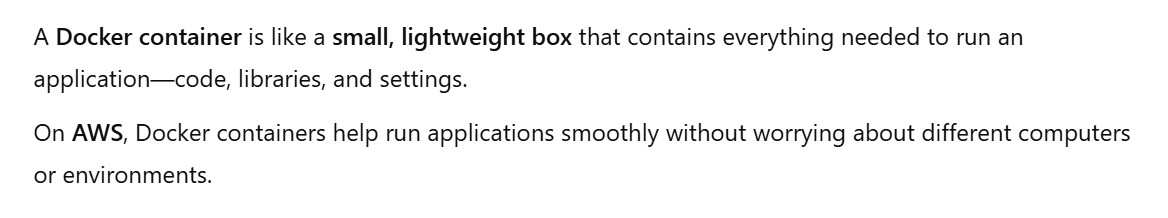

(1) What Is A Docker Container?

(2) Problems Faced Without Docker Containers

- If you run your application on different computers or environments without using Docker containers, you might face several issues, such as:

(3) Does Docker Solve Build Once & Run Anywhere?

(4) How Does A Docker Container Work?

- A Docker container allows you to package and run your application consistently across different environments.

- Here’s a step-by-step guide to creating a Docker container.

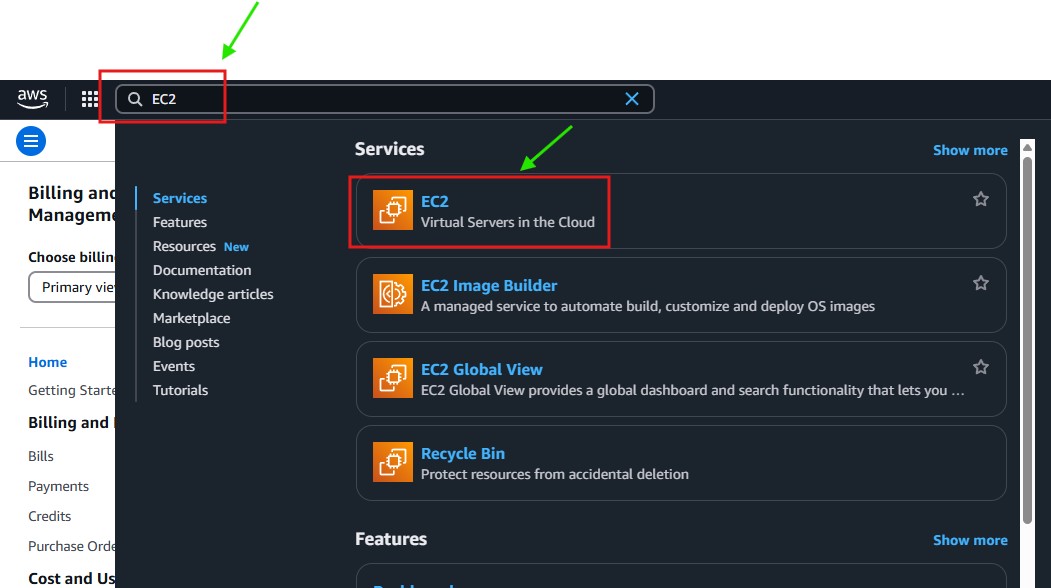

(1) Install Docker On EC2

- You can install Docker either on your local machine or on an EC2 instance.

- To run and containerize large LLMs, you need a GPU-based EC2 instance on which to run the Docker container.

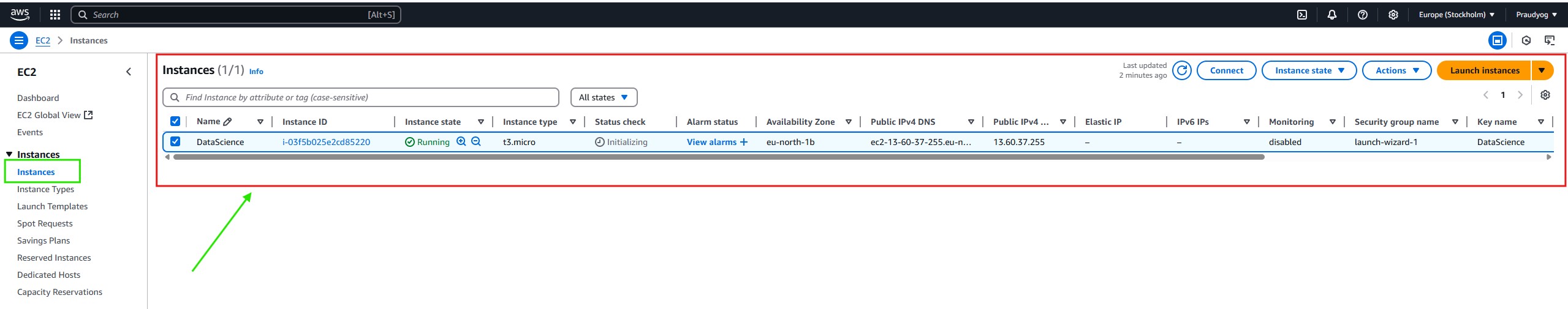

(1.1) Launch An EC2 Instance:

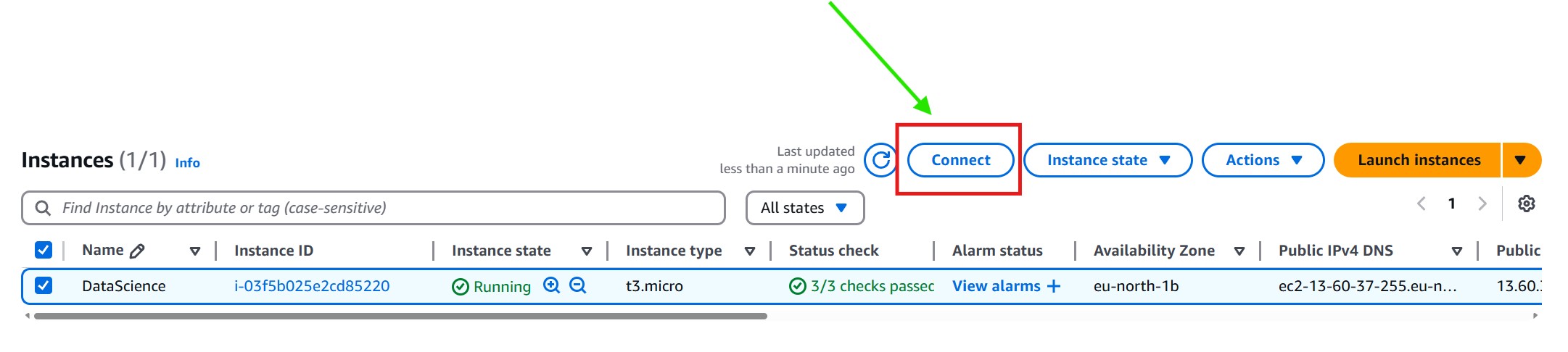

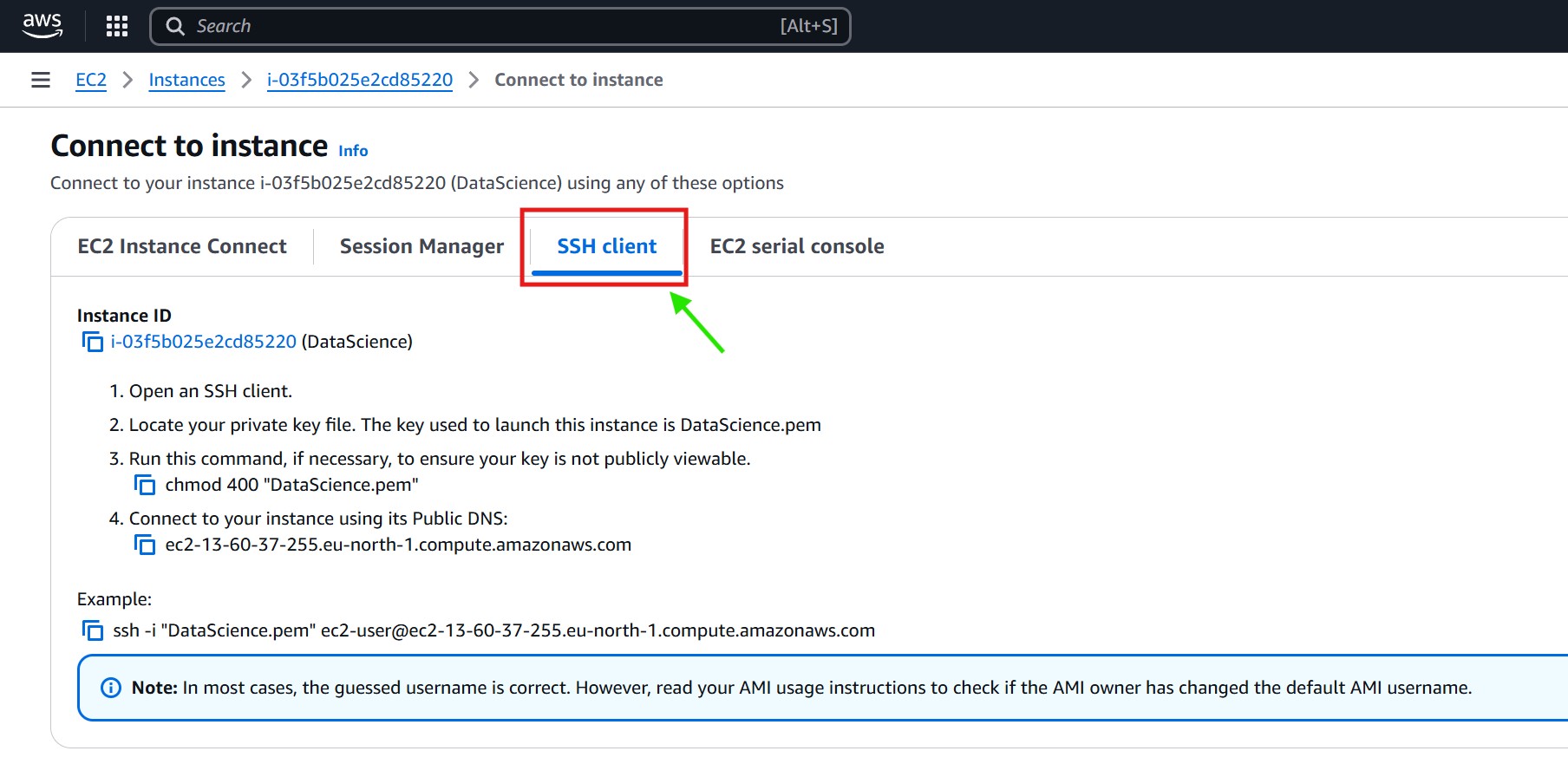

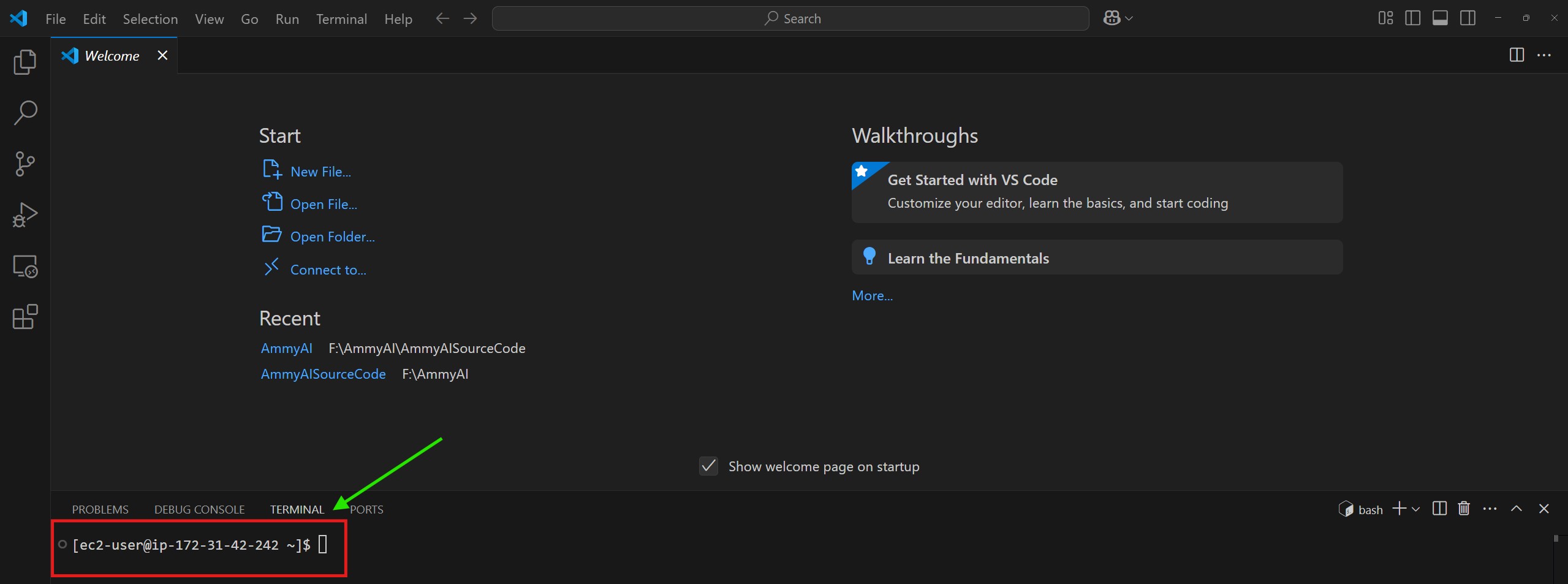

(1.2) Connect To The EC2 Instance

- Once you have launched the EC2 instance, you need to connect to it.

- Connect to the EC2 instance from the command prompt (terminal).

- Any operation you want to perform on the EC2 instance can then be done from the command prompt.

- Run the command below to connect to the EC2 instance.

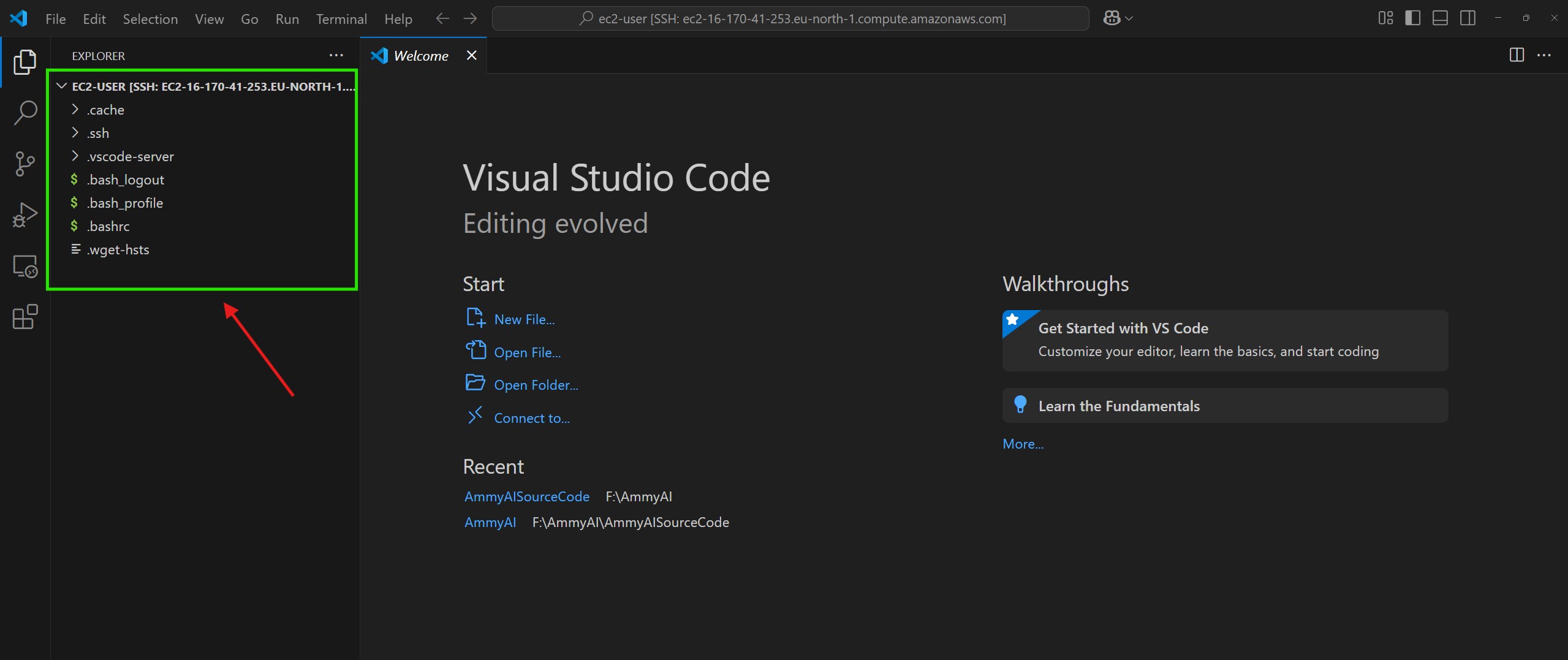

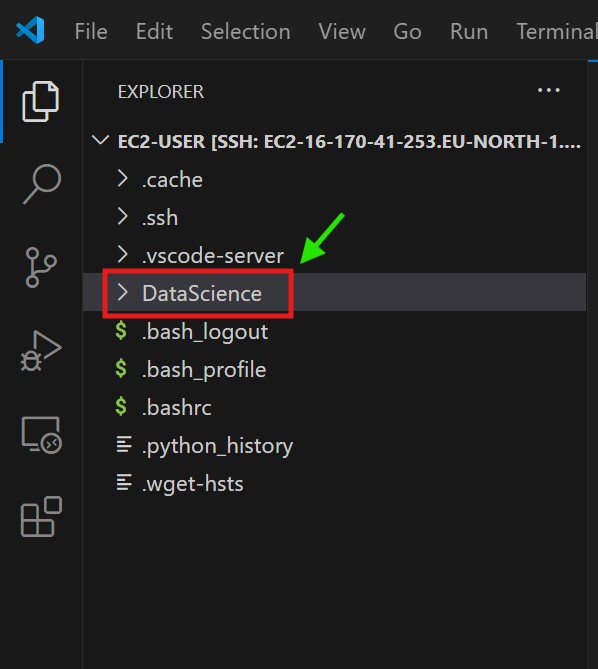

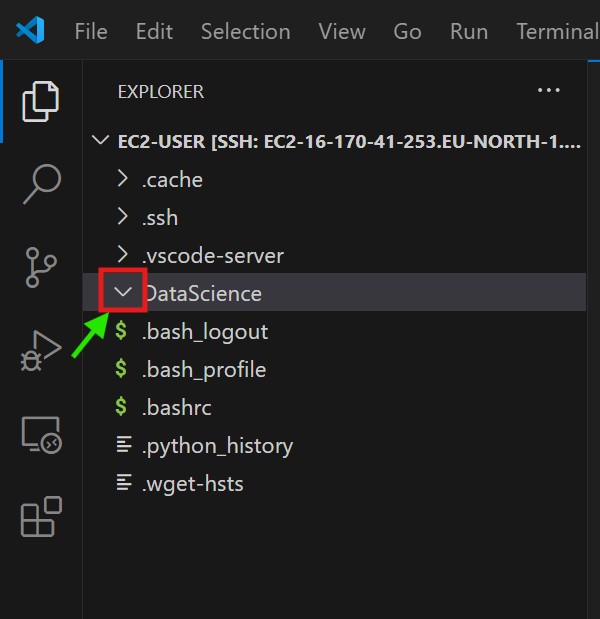

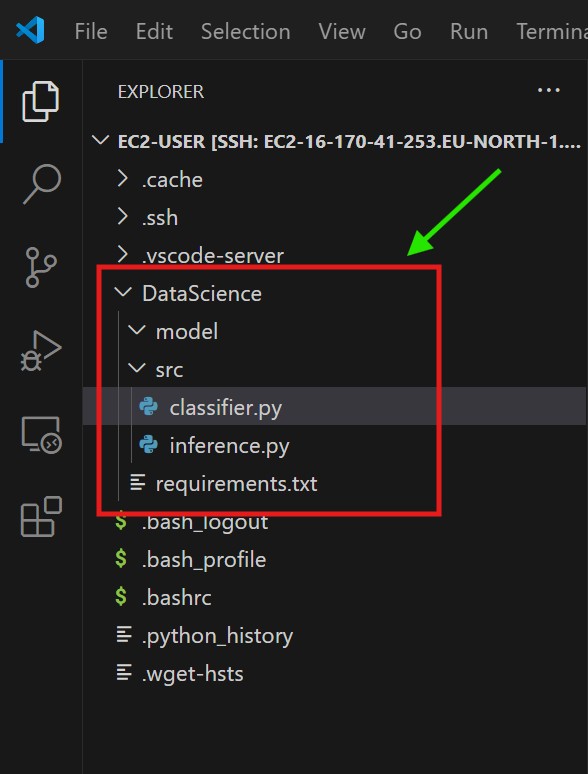

- You can also use Visual Studio to connect to the EC2 instance.

- After connecting, you can see all the files and folders on this DataScience instance.

- Important: the "DataScience.pem" key file must be present on your local machine to make this connection.

```bash
ssh -i "DataScience.pem" <user>@<ec2-public-dns>
```

- Replace `<user>` with the AMI's default user (`ec2-user` on Amazon Linux, `ubuntu` on Ubuntu) and `<ec2-public-dns>` with the instance's public DNS name or public IP address.
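OpenSSH refuses to use a private key file that other users can read. It reports "UNPROTECTED PRIVATE KEY FILE" and aborts. On macOS and Linux, the `.pem` file therefore usually needs `chmod 400`. The sketch below does the same thing from Python. The helper name `secure_key_file` is hypothetical, and the script assumes the key sits in the current directory.

```python
import os
import stat


def secure_key_file(path: str) -> int:
    """Restrict a private key to owner read-only (like `chmod 400 path`).

    Returns the resulting permission bits. `secure_key_file` is a
    hypothetical helper, not part of any AWS tooling.
    """
    os.chmod(path, stat.S_IRUSR)  # 0o400: only the owner may read the file
    return stat.S_IMODE(os.stat(path).st_mode)


if __name__ == "__main__":
    key = "DataScience.pem"  # assumed location of the key pair file
    if os.path.exists(key):
        print(oct(secure_key_file(key)))
    else:
        print(f"{key} not found in {os.getcwd()}")
```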

(1.3) Install Docker & Verify

For Amazon Linux 2:

```bash
sudo yum update -y
sudo yum install -y docker
```

For Ubuntu:

```bash
sudo apt update -y
sudo apt install -y docker.io
```

Start Docker and enable it on startup:

```bash
sudo systemctl start docker
sudo systemctl enable docker
```

Add your user to the `docker` group (so you don't need `sudo` for Docker commands):

```bash
sudo usermod -aG docker $USER
newgrp docker
```

Verify the installation:

```bash
docker --version
```
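You can also run this check from a script, for example as part of a setup script. The sketch below looks for the `docker` CLI on `PATH` with `shutil.which` and then runs `docker --version` via `subprocess`. The helper name `docker_version` is hypothetical. It returns `None` when Docker is not installed instead of raising an error.

```python
import shutil
import subprocess
from typing import Optional


def docker_version() -> Optional[str]:
    """Return the output of `docker --version`, or None if Docker is unavailable."""
    docker = shutil.which("docker")  # full path to the docker CLI, or None
    if docker is None:
        return None
    result = subprocess.run(
        [docker, "--version"], capture_output=True, text=True, check=False
    )
    if result.returncode != 0:
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    print(docker_version() or "Docker CLI not found on PATH")
```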

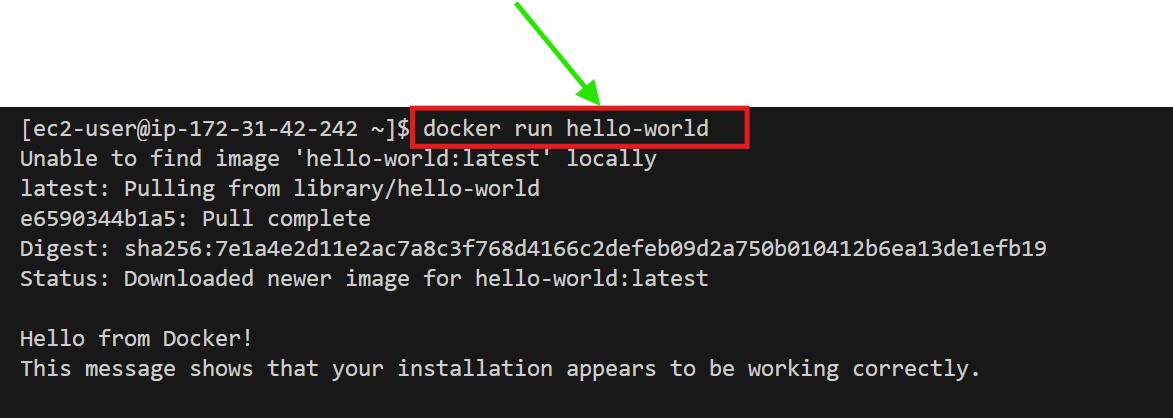

(1.4) Run & Test Docker Container

```bash
docker run hello-world
```

(5) Create A Docker Container For My Application

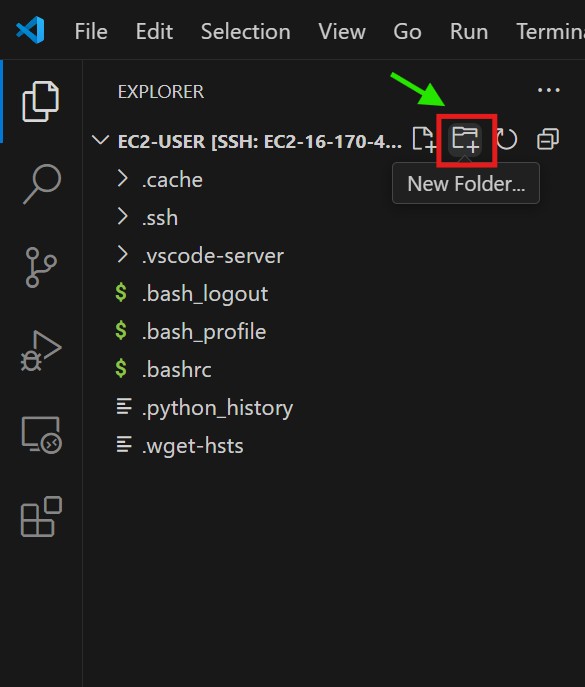

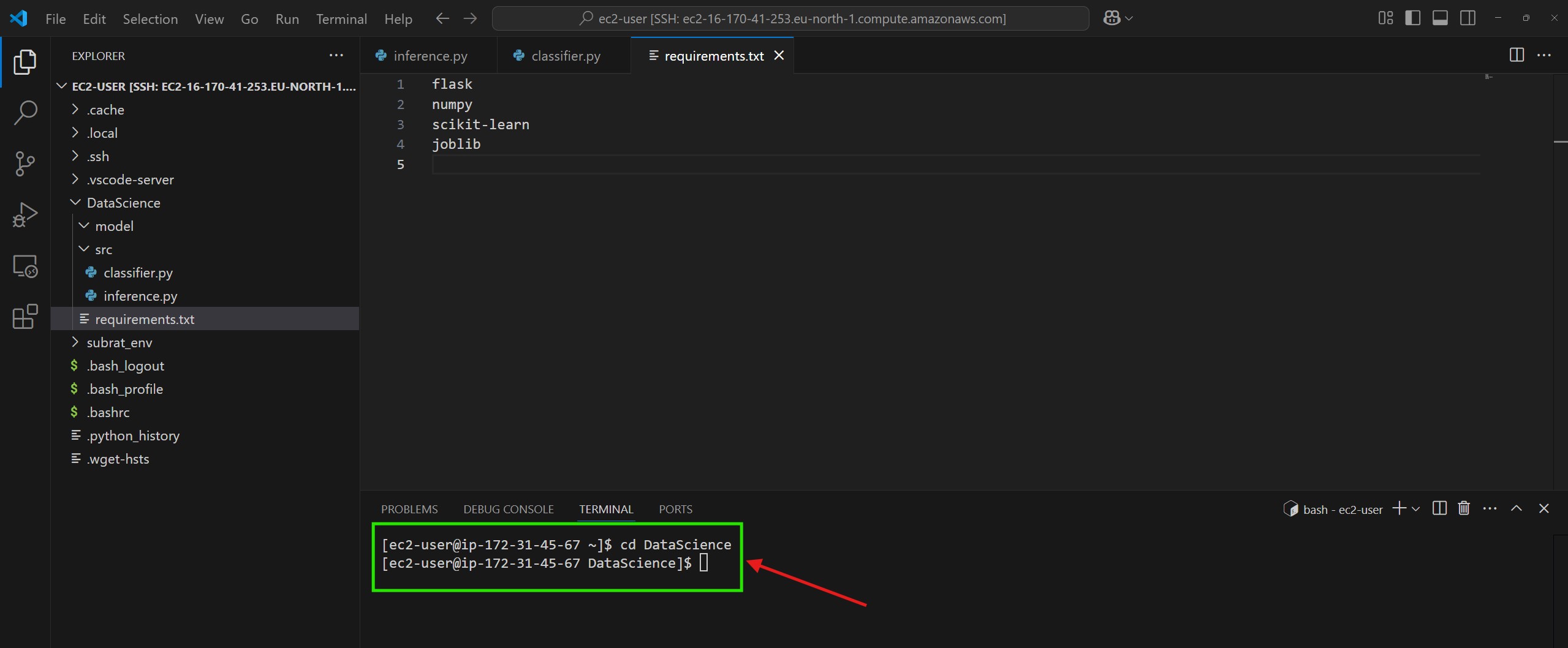

Step-1: Project Setup:

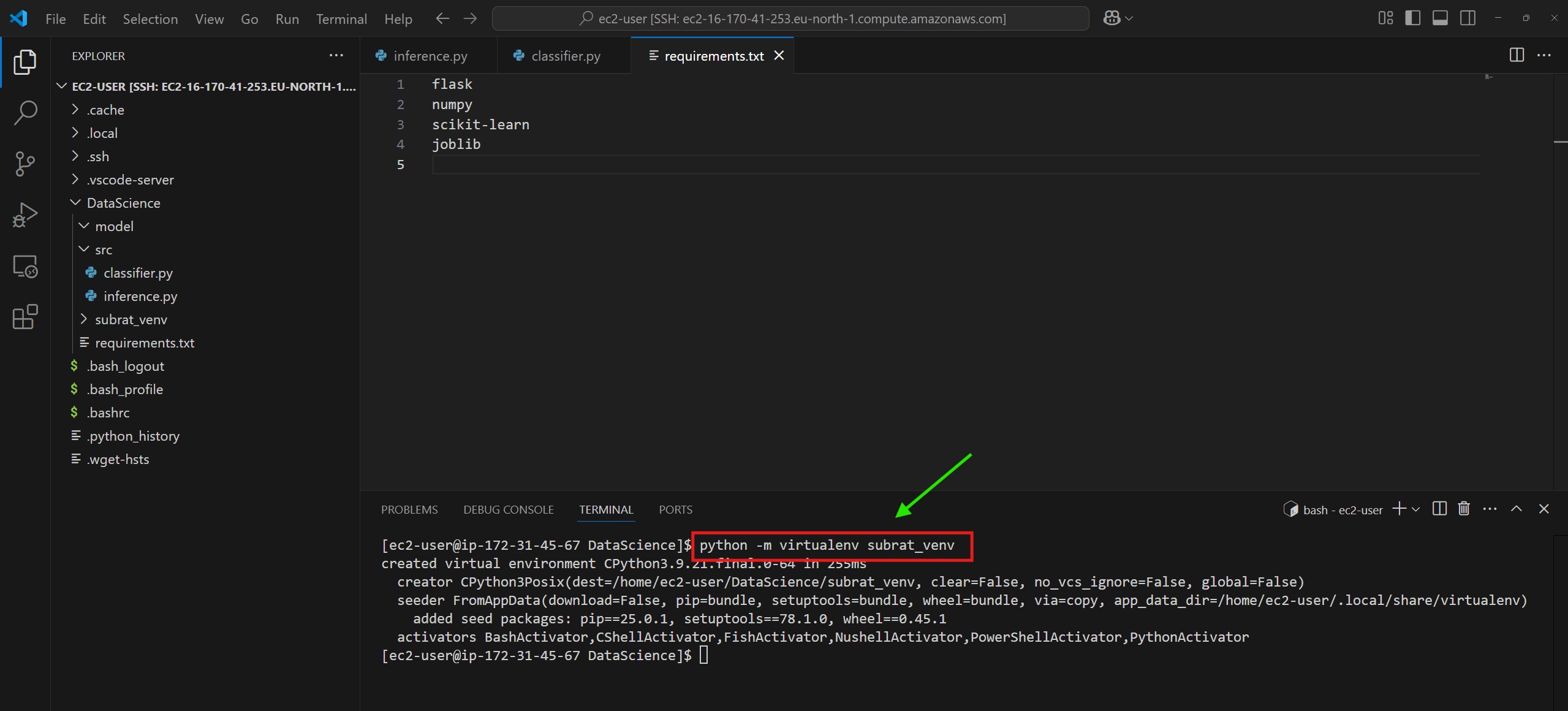

Step-2: Create An Environment Variable

- Create an environment variable and install the required library and run the model.

cd DataScience

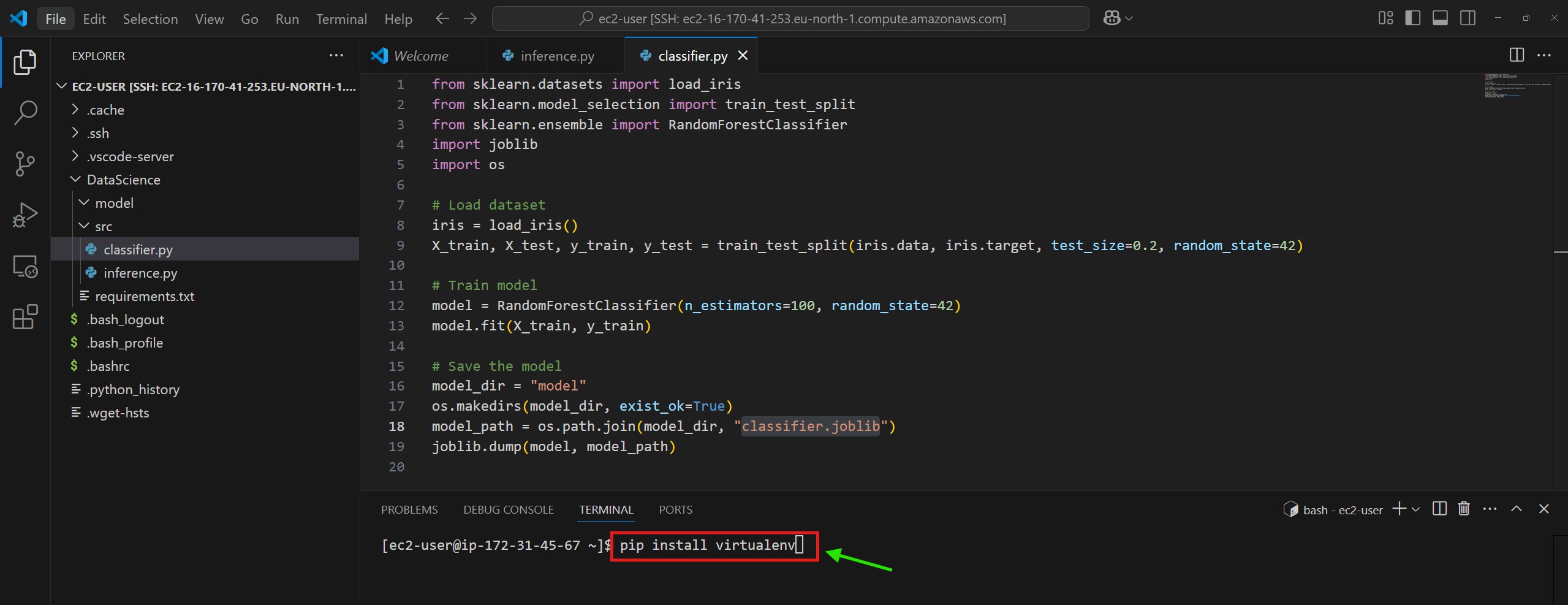

pip install virtualenv

python -m virtualenv subrat_venv

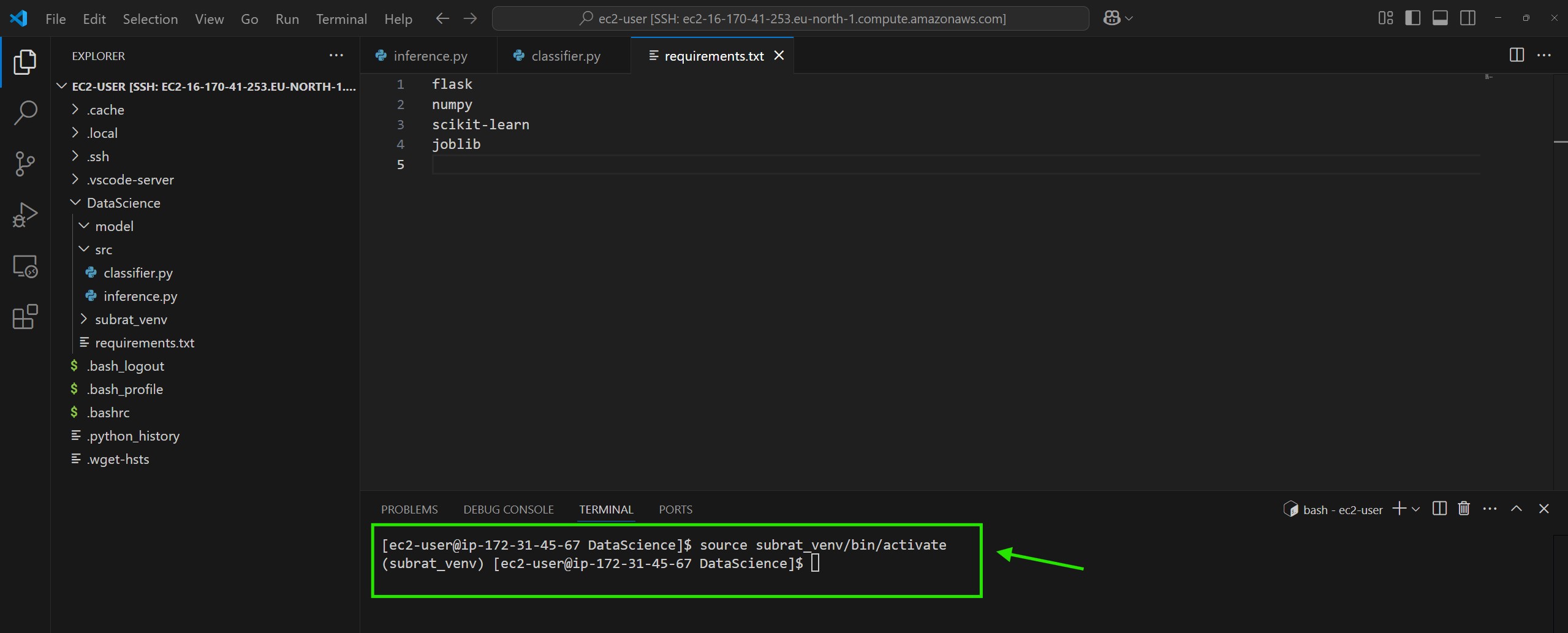

source subrat_venv/bin/activate

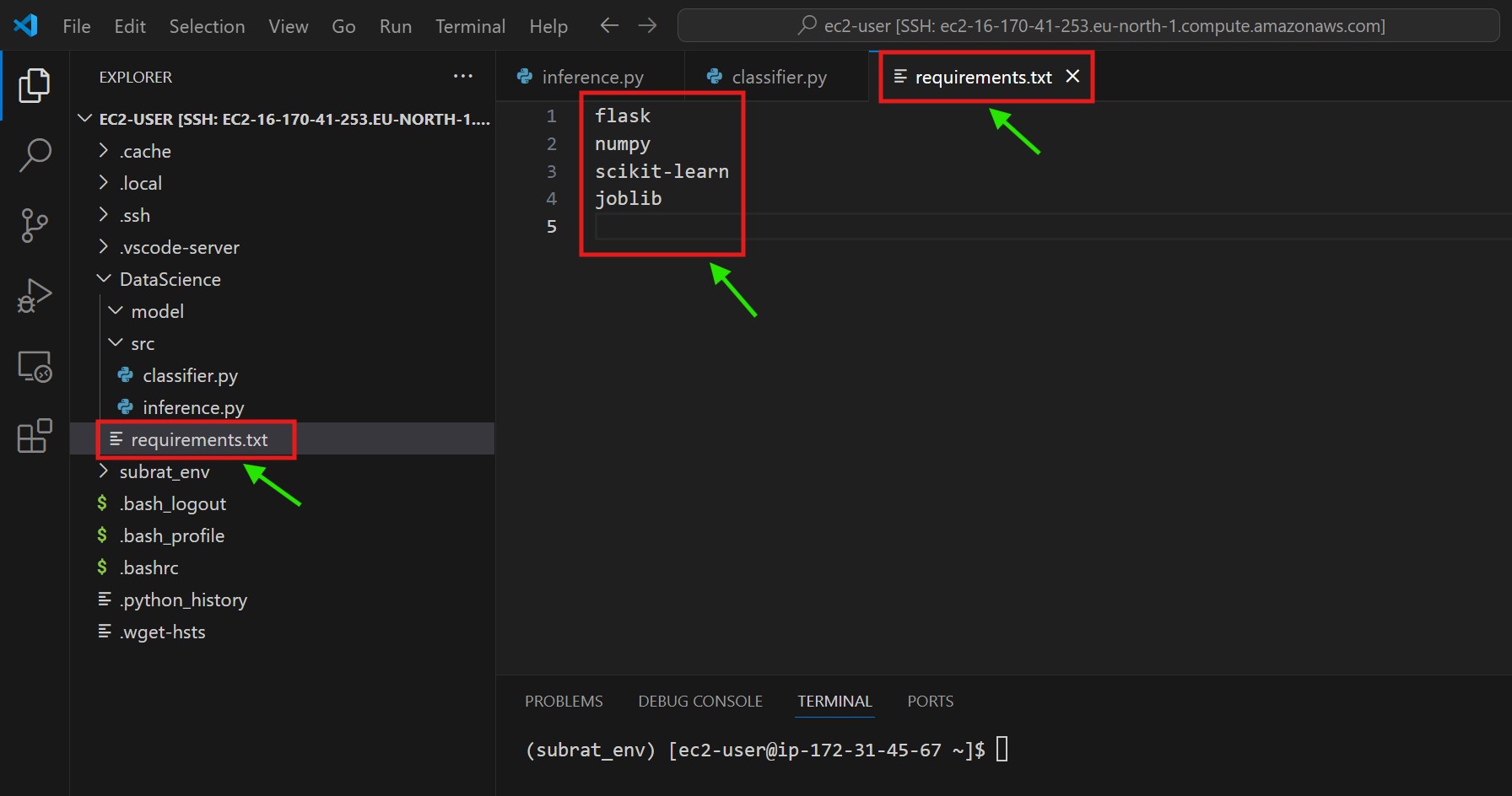

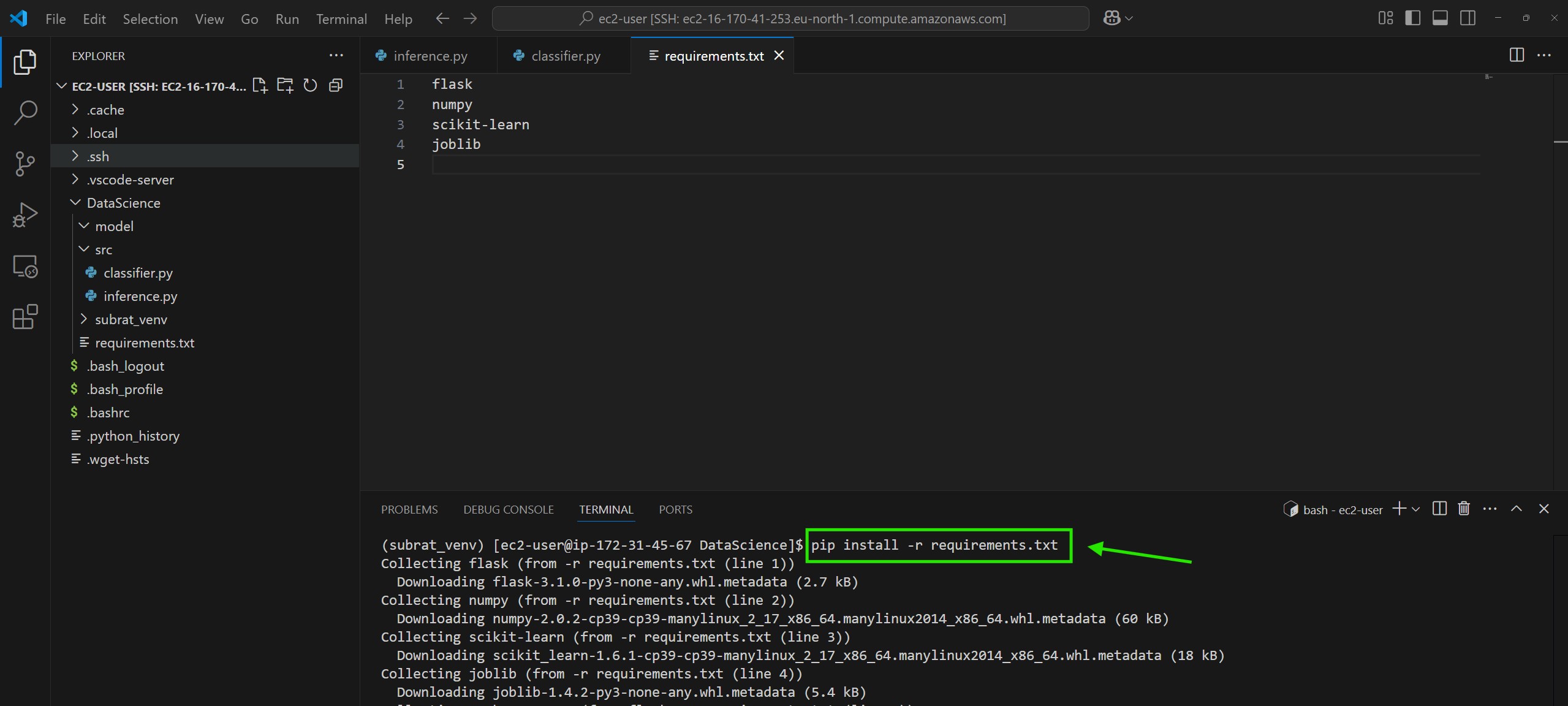
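You can confirm from Python that the virtual environment is actually active. A common check compares `sys.prefix` with `sys.base_prefix`. Inside a `venv` (or a recent `virtualenv`), `sys.prefix` points at the environment folder, while `sys.base_prefix` points at the base Python installation. This is a minimal sketch, and `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv() -> bool:
    """Return True if this interpreter runs inside a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and modern virtualenv
    # make sys.prefix differ from sys.base_prefix instead.
    return hasattr(sys, "real_prefix") or sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Active environment:", sys.prefix)
    print("Inside a virtual environment:", in_virtualenv())
```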

Step-3: Prepare The requirements.txt File

```text
flask
numpy
scikit-learn
joblib
```
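The file above leaves versions unpinned. As a result, `pip` inside the `python:3.8-slim` image may resolve different versions than the ones used to train the model. scikit-learn does not guarantee that a model pickled with one version loads correctly in another. One option is to pin the versions from the active virtual environment, keeping in mind that the pinned versions must also be installable on the image's Python version.

The sketch below uses the standard library's `importlib.metadata` (Python 3.8+). `pin_requirements` is a hypothetical helper. `pip freeze` is the usual one-line alternative, but it lists every installed package.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Iterable, List

# Distribution names as they appear in requirements.txt
PACKAGES = ["flask", "numpy", "scikit-learn", "joblib"]


def pin_requirements(packages: Iterable[str]) -> List[str]:
    """Return 'name==version' lines for the packages installed in this environment."""
    pinned = []
    for name in packages:
        try:
            pinned.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            # Not installed here: skip it rather than guessing a version
            print(f"warning: {name} is not installed; leaving it out")
    return pinned


if __name__ == "__main__":
    # Review the output before overwriting requirements.txt with it
    print("\n".join(pin_requirements(PACKAGES)))
```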

Step-4: Install The Libraries From Requirements.txt

```bash
pip install -r requirements.txt
```

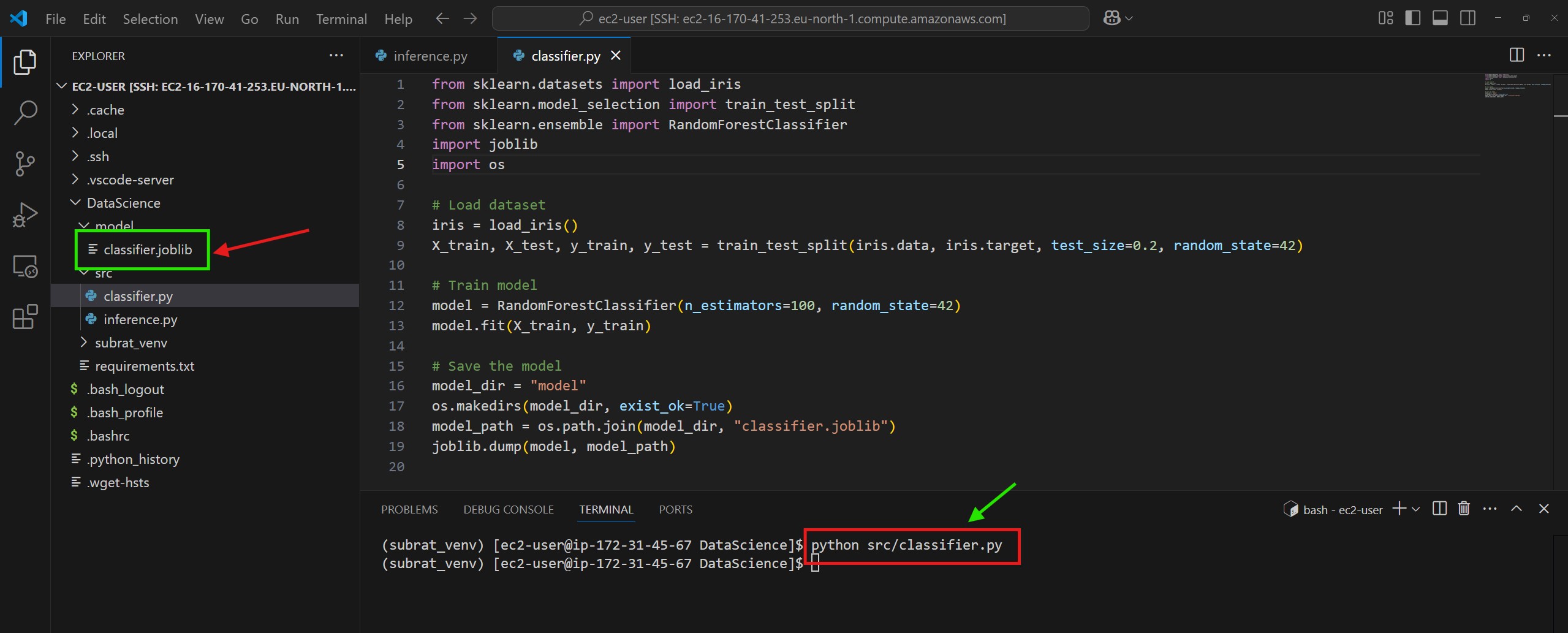

Step-5: Run The ‘classifier.py’ File

src/classifier.py

```python
# Train a RandomForest classifier on the Iris dataset and save it with joblib
import os

import joblib
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Load dataset and hold out 20% of it for testing
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

# Train model
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Save the model to model/classifier.joblib (relative to the current directory)
model_dir = "model"
os.makedirs(model_dir, exist_ok=True)
model_path = os.path.join(model_dir, "classifier.joblib")
joblib.dump(model, model_path)
```

Run the script from the project root, because the `model` folder is created relative to the current working directory:

```bash
python src/classifier.py
```

- This creates the "classifier.joblib" model file inside the "model" folder.

Step-6: Create The ‘inference.py’ File.

src/inference.py

```python
import os

import joblib
import numpy as np
from flask import Flask, request, jsonify

# Create Flask app
app = Flask(__name__)

# Define model path inside the container (relative to the working directory /app)
MODEL_PATH = "model/classifier.joblib"


# Load the model when the container starts
def load_model():
    global model
    if os.path.exists(MODEL_PATH):
        model = joblib.load(MODEL_PATH)
    else:
        raise FileNotFoundError(f"Model file not found at {MODEL_PATH}")


load_model()  # Load model at startup
```

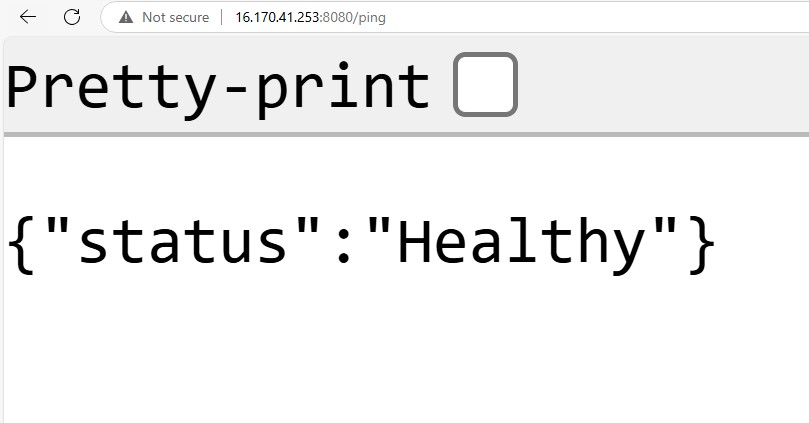

```python
@app.route('/ping', methods=['GET'])
def ping():
    """Health check endpoint"""
    return jsonify({"status": "Healthy"}), 200
```

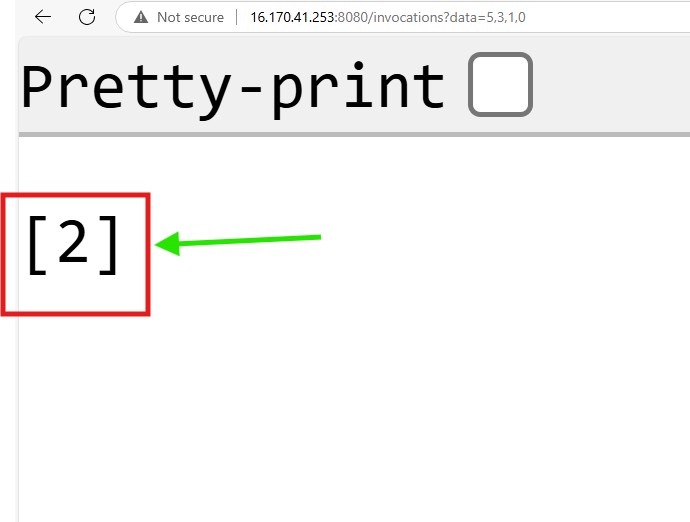

```python
@app.route('/invocations', methods=['GET'])
def predict():
    """Inference endpoint"""
    try:
        # Example: "5.1,3.5,1.4,0.2", http://16.170.41.253:8080/invocations?data=5.1,3.5,1.4,0.2
        data_str = request.args.get("data")
        if not data_str:
            return jsonify({"error": "Missing 'data' parameter"}), 400

        # Convert the comma-separated string into a 2D NumPy array (one row = one sample)
        input_data = np.array([list(map(float, data_str.split(",")))])

        # Make prediction
        predictions = model.predict(input_data).tolist()
        return jsonify(predictions)
    except Exception as e:
        return jsonify({"error": str(e)}), 400


# Run Flask app
if __name__ == '__main__':
    app.run(host='0.0.0.0', port=8080)
```

Step-7: Create A Dockerfile

- A Dockerfile is a text file of instructions that Docker uses to build an image. Containers are then started from that image.

- It contains step-by-step instructions to set up the environment, install dependencies, and run the application inside an isolated container.

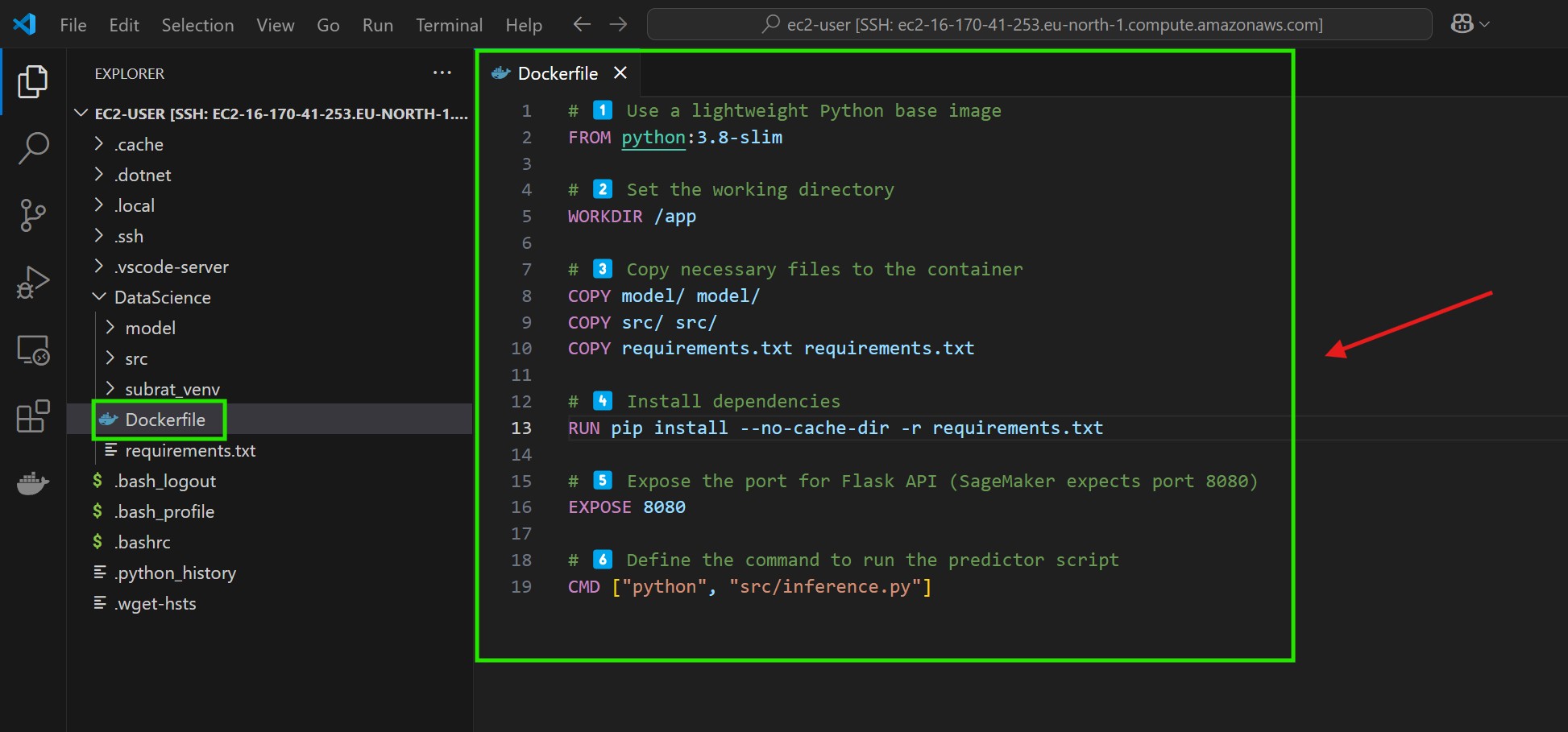

Dockerfile

```dockerfile
# 1️⃣ Use a lightweight Python base image
FROM python:3.8-slim

# 2️⃣ Set the working directory
WORKDIR /app

# 3️⃣ Copy necessary files to the container
COPY model/ model/
COPY src/ src/
COPY requirements.txt requirements.txt

# 4️⃣ Install dependencies
RUN pip install --no-cache-dir -r requirements.txt

# 5️⃣ Expose the port for Flask API (SageMaker expects port 8080)
EXPOSE 8080

# 6️⃣ Define the command to run the inference script
CMD ["python", "src/inference.py"]
```

Parts Of The Dockerfile:

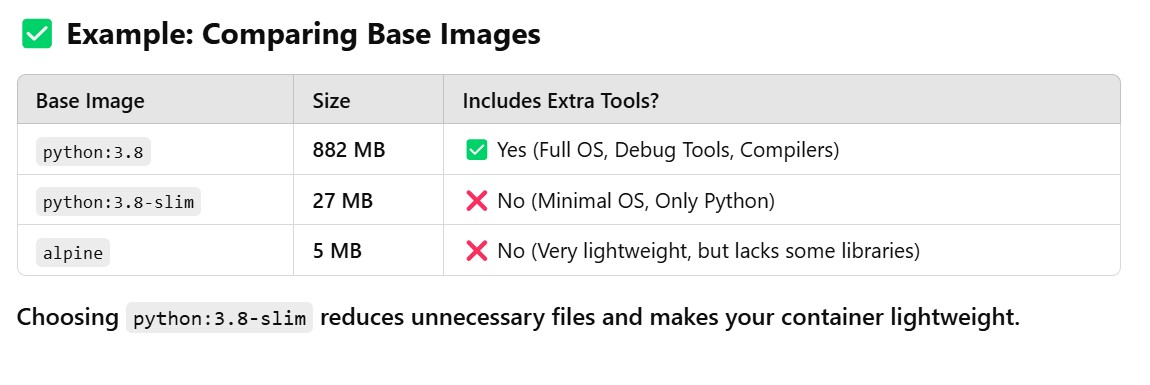

# 1️⃣ Base Image:

```dockerfile
FROM python:3.8-slim
```

- With this line you're not just pulling Python; you're pulling a pre-built image that has Python installed on a minimal Debian OS.

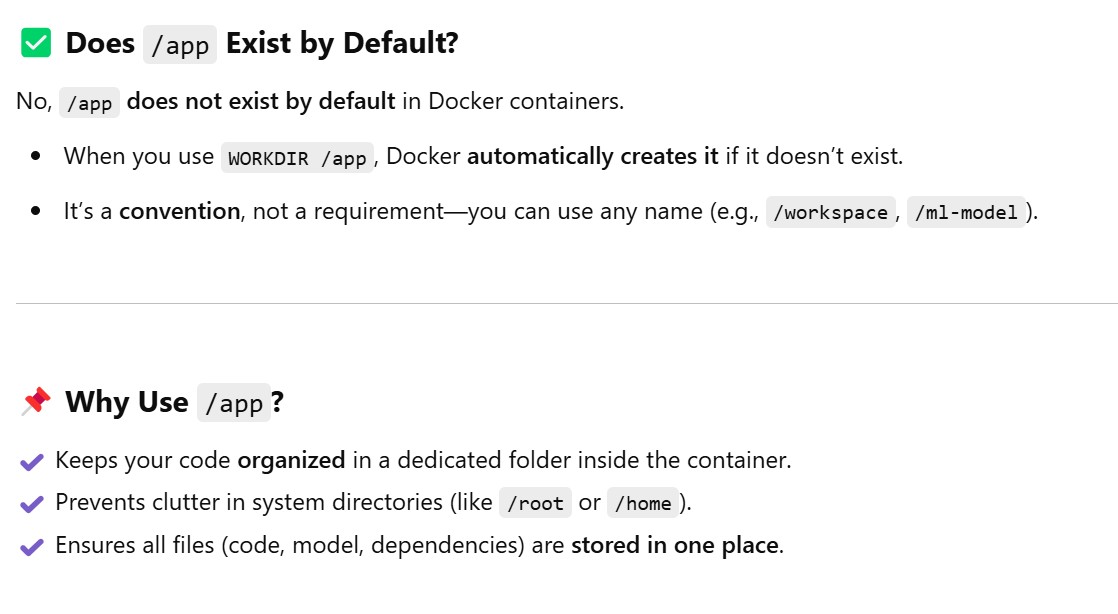

# 2️⃣ Why Use WORKDIR?

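```dockerfile
# 2️⃣ Set the working directory
WORKDIR /app
```

The `WORKDIR` instruction sets the working directory inside the container. It's like running `cd /app` before executing further commands: later `COPY`, `RUN`, and `CMD` instructions resolve relative paths against `/app`, and Docker creates the directory if it doesn't exist.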
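`inference.py` uses the relative path `model/classifier.joblib`, so it only finds the model when the working directory is `/app`. That is exactly what `WORKDIR` guarantees. You may also want the script to work when it is launched from another directory. One option is to resolve the path from the script's own location; this is an alternative, not something the Dockerfile above relies on. The sketch below uses a hypothetical `model_path_for` helper and assumes the `src/` and `model/` layout used in this project.

```python
from pathlib import PurePath


def model_path_for(script_path: str) -> PurePath:
    """Locate model/classifier.joblib relative to a script in <project>/src/."""
    # <project>/src/inference.py -> <project>/model/classifier.joblib
    script = PurePath(script_path)
    return script.parent.parent / "model" / "classifier.joblib"


if __name__ == "__main__":
    # Inside inference.py this could be called with str(Path(__file__).resolve())
    print(model_path_for("/app/src/inference.py"))
```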

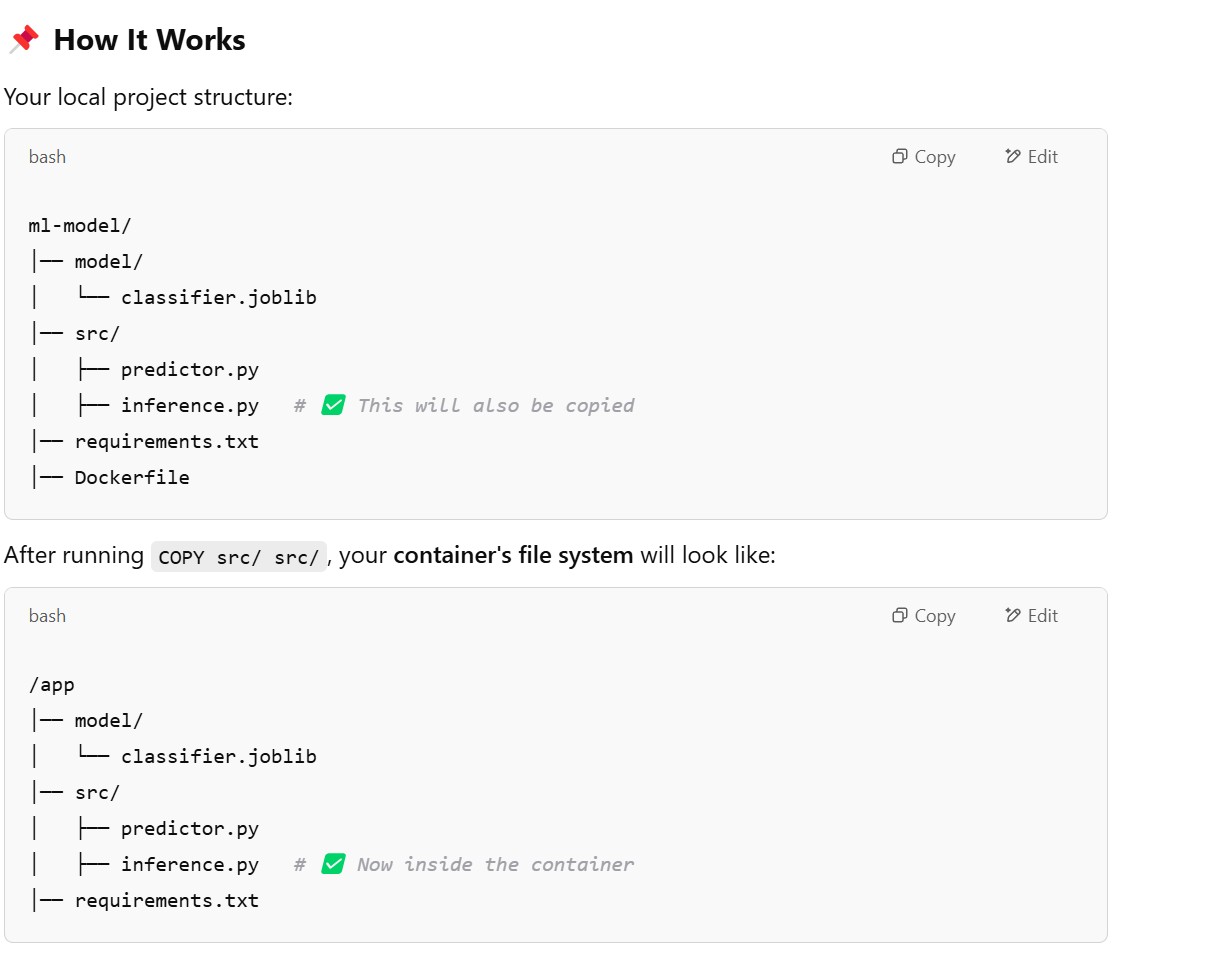

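# 3️⃣ Copy Necessary Files To The Container:

```dockerfile
COPY model/ model/
COPY src/ src/
COPY requirements.txt requirements.txt
```

- The `COPY` instruction copies files from your local machine (the build context on the host) into the image. Relative destinations such as `model/` end up under the working directory `/app`.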
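`docker build` stops with an error if a `COPY` source is missing from the build context. This happens, for example, if `src/classifier.py` was never run and `model/` doesn't exist yet. A small pre-build check can catch the problem earlier. In the sketch below, `missing_build_inputs` is a hypothetical helper, and the list of paths simply mirrors the `COPY` lines above.

```python
from pathlib import Path
from typing import List

# Sources referenced by the COPY instructions in the Dockerfile above
REQUIRED = ["model/classifier.joblib", "src/inference.py", "requirements.txt"]


def missing_build_inputs(context_dir: str = ".") -> List[str]:
    """Return the required paths that don't exist under the build context."""
    root = Path(context_dir)
    return [rel for rel in REQUIRED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_build_inputs(".")
    if missing:
        print("Missing before docker build:", ", ".join(missing))
    else:
        print("Build context looks complete.")
```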

# 4️⃣ Install Dependencies

```dockerfile
RUN pip install --no-cache-dir -r requirements.txt
```

- Installs all dependencies listed in `requirements.txt`.

- Uses `--no-cache-dir` so pip doesn't keep its download cache in the image, which reduces image size.

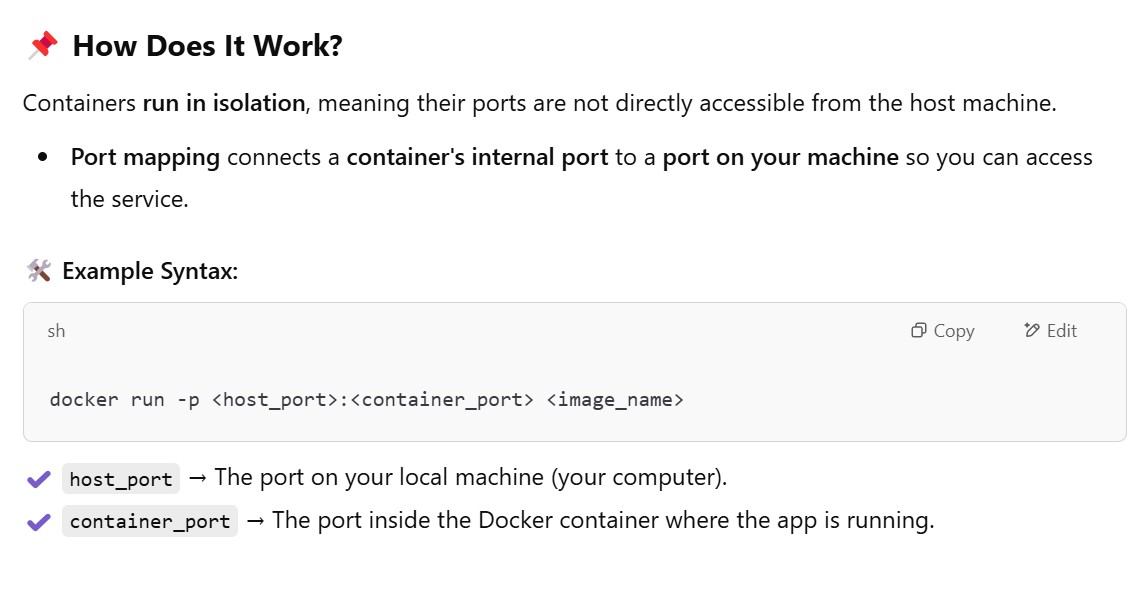

# 5️⃣ Expose the port for Flask API (SageMaker expects port 8080)

```dockerfile
EXPOSE 8080
```

- The `EXPOSE 8080` instruction documents that the container will listen on port 8080.

- It tells Docker (and anyone reading the Dockerfile) that the application inside the container serves requests on this port. However, it doesn't actually publish the port or make it accessible from outside the container.

- To make the port accessible, use the `-p` option when running the container with `docker run`, for example `-p 8080:8080` (host port:container port).
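A quick way to confirm that the `-p` mapping works is to open a TCP connection to the published port. The sketch below uses only the standard `socket` module. `port_is_open` is a hypothetical helper. The `localhost:8080` target assumes you run it on the EC2 instance itself after starting the container.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, host unreachable, ...
        return False


if __name__ == "__main__":
    print("8080 reachable:", port_is_open("localhost", 8080))
```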

# 6️⃣ Define the command to run the predictor script

```dockerfile
CMD ["python", "src/inference.py"]
```

- The `CMD` instruction specifies the command that runs when the container starts.

- In this case, it executes the `inference.py` script in the `src` directory of the container's filesystem using the `python` interpreter.

- `python`: starts the Python interpreter inside the container.

- `src/inference.py`: the path to the script to execute. The path is relative to the working directory set in the image. Here that is `/app`, so the full path is `/app/src/inference.py`; if no `WORKDIR` is set, it defaults to the root directory `/`.

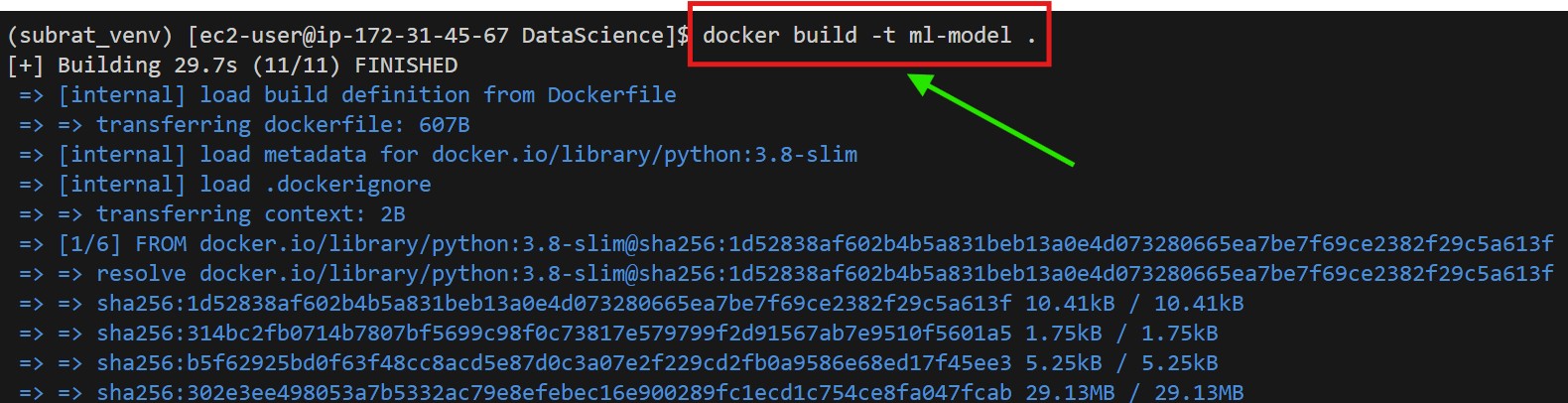

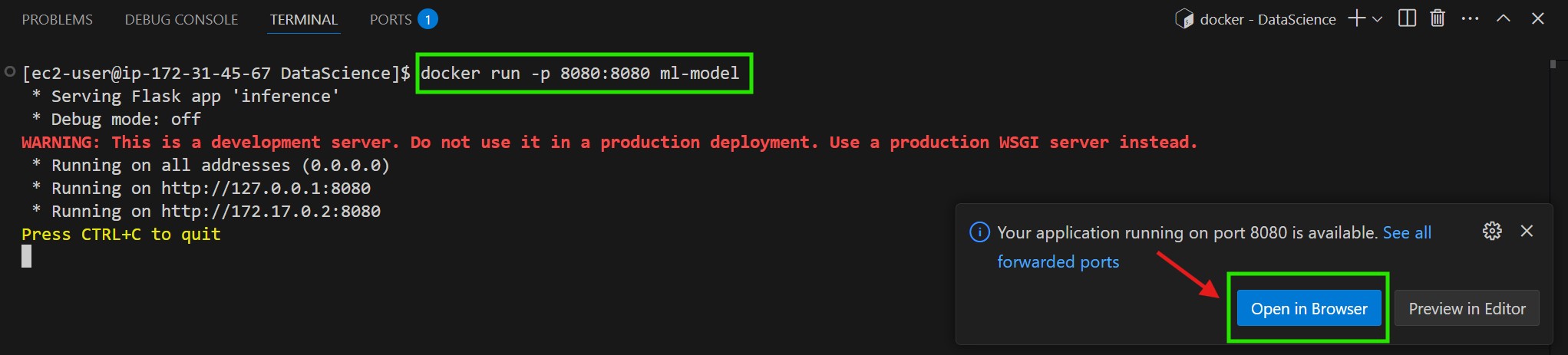

Step-8: Build and Run the Docker Image Locally

- Run the following commands in the directory where the `Dockerfile` is located.

```bash
# Build the Docker image
docker build -t ml-model .

# Run the container
docker run -p 8080:8080 ml-model
```

# 1️⃣ Build The Docker Image

```bash
docker build -t ml-model .
```

- `-t ml-model` tags the image with the name `ml-model`, and `.` uses the current directory as the build context.

# 2️⃣ Run The Container

```bash
docker run -p 8080:8080 ml-model
```

- This command runs a Docker container from the `ml-model` image and maps port 8080 on the host (here, the EC2 instance) to port 8080 inside the container.

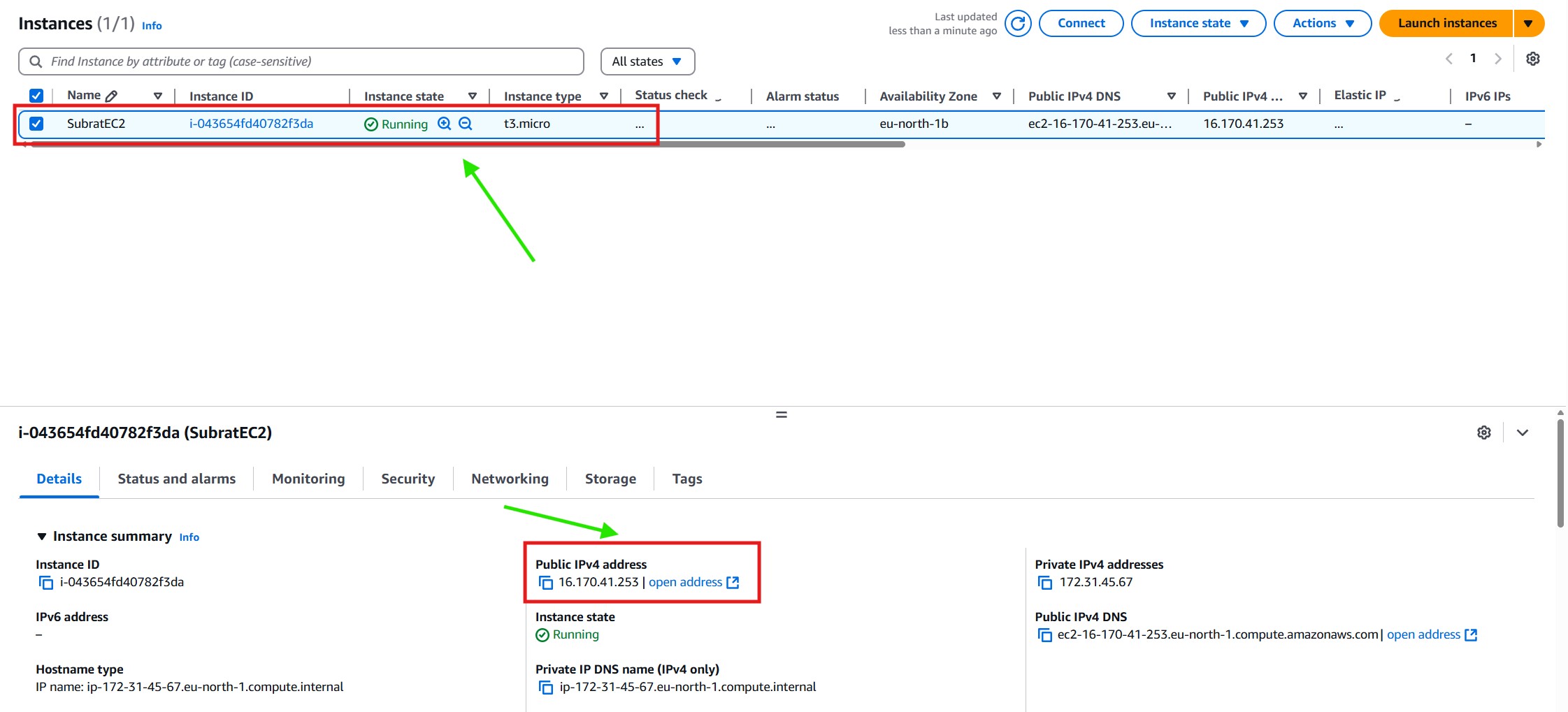

Step-9: Open The Browser

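# 1️⃣ Open The URL In The Browser

# 2️⃣ Get The Public IP Address Of The EC2 Instance

- The instance's security group must allow inbound TCP traffic on port 8080; otherwise, the browser request will not reach the container.

# 3️⃣ Open The EC2 Public URL & '/ping' Endpoint

http://16.170.41.253:8080/ping

# 4️⃣ Open The EC2 Public URL & '/invocations' Endpoint

http://16.170.41.253:8080/invocations?data=5,3,1,0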
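You can also call the same two endpoints from Python instead of the browser. The minimal sketch below uses only `urllib` from the standard library. The base URL is the example EC2 address above, so replace it with your instance's public IP. `build_invocation_url`, `ping`, and `predict` are hypothetical helper names. Note that `urlencode` percent-encodes the commas as `%2C`, and Flask decodes them again before the `/invocations` handler reads `data`.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Sequence

BASE_URL = "http://16.170.41.253:8080"  # example address from this article


def build_invocation_url(base_url: str, features: Sequence[float]) -> str:
    """Build the GET URL for /invocations with features as a comma-separated 'data' value."""
    query = urllib.parse.urlencode({"data": ",".join(str(x) for x in features)})
    return f"{base_url}/invocations?{query}"


def _get_json(url: str, timeout: float = 5.0) -> Any:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def ping(base_url: str = BASE_URL) -> Any:
    return _get_json(f"{base_url}/ping")


def predict(features: Sequence[float], base_url: str = BASE_URL) -> Any:
    return _get_json(build_invocation_url(base_url, features))


if __name__ == "__main__":
    try:
        print(ping())
        print(predict([5.1, 3.5, 1.4, 0.2]))
    except OSError as exc:  # URLError/HTTPError and timeouts all subclass OSError
        print(f"Request failed: {exc}")
```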

Note:

- The EC2 instance must stay up and running 24/7 to serve incoming requests.

- If you stop the instance, your service stops as well.

Step-10: Error Handling

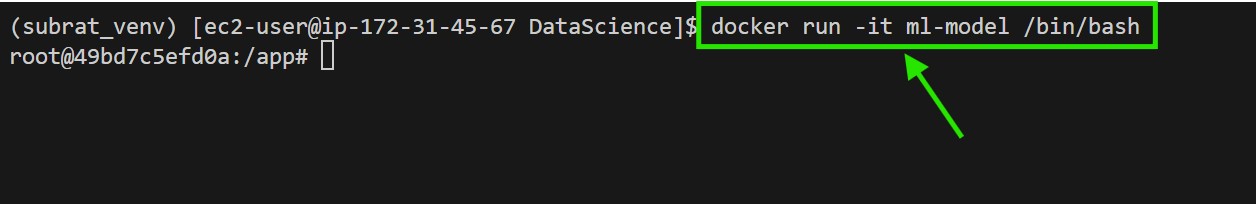

# 1️⃣ How To Login To A Container ?

docker run -it ml-model /bin/bash

# 2️⃣ How To Exit From A Container ?

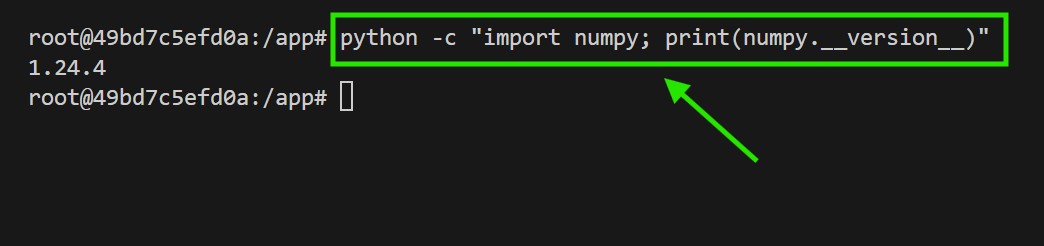

CTRL + D# 1️⃣ How To Check A Library Version Inside A Container ?

python -c "import numpy; print(numpy.__version__)"
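You may want to check several libraries at once, for example to confirm that the container has the versions pinned in `requirements.txt`. A short script can compare `name==version` lines against `importlib.metadata`, and you could run it from the shell opened above. In this sketch, `check_pins` is a hypothetical helper. It only understands exact `==` pins and compares version strings literally, so `1.3` and `1.3.0` count as different.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Iterable, List, Optional, Tuple


def check_pins(lines: Iterable[str]) -> List[Tuple[str, str, Optional[str]]]:
    """Return (name, pinned, installed) for every 'name==version' line that doesn't match."""
    mismatches = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # unpinned lines and other specifiers are ignored in this sketch
        name, pinned = (part.strip() for part in line.split("==", 1))
        try:
            installed: Optional[str] = version(name)
        except PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches.append((name, pinned, installed))
    return mismatches


if __name__ == "__main__":
    try:
        with open("requirements.txt") as f:
            problems = check_pins(f)
    except FileNotFoundError:
        print("requirements.txt not found in the current directory")
    else:
        for name, pinned, installed in problems:
            print(f"{name}: pinned {pinned}, installed {installed}")
        print(f"{len(problems)} mismatch(es)")
```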