-

Linear Regression

- Linear Regression Algorithm (admin, August 18, 2023; Data Science Interview Questions, Machine Learning)
- Assumptions In Linear Regression (admin, August 19, 2023; Data Science Interview Questions, Machine Learning)
- Linear Regression – Assumption – 1 (Linear Relationship) (admin, April 7, 2025; Machine Learning)
- Linear Regression – Assumption – 2 (No Multicollinearity) (admin, April 7, 2025; Machine Learning)
- Linear Regression – Assumption – 2 (What Is Standard Error?) (admin, December 16, 2024; Data Science Interview Questions, Machine Learning)

-

Linear Regression – (R-Squared and Adjusted R-Squared)

R-Squared and Adjusted R-Squared

Table Of Contents:
What Is R-Squared Value?
Formula For R-Squared Value.
Interpretation Of R-Squared Value.
Example Of R-Squared.
Key Points To Note.
Conclusion.

(1) What Is R-Squared Value?
R-squared, also known as the coefficient of determination, measures the proportion of the variability in the dependent variable that is explained by the independent variable(s) in a regression model. It indicates the model’s goodness of fit.

(2) Formula For R-Squared Value
(3) Interpretation Of R-Squared
(4) Example Of R-Squared
(5) Key Points To Note
(6) Conclusion
(7) Adjusted R-Squared
Adjusted R-squared is
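The usual formulas are R² = 1 - SS_res / SS_tot and, for n observations and p predictors, adjusted R² = 1 - (1 - R²)(n - 1) / (n - p - 1). Below is a minimal pure-Python sketch of both. The function names and the sample numbers are hypothetical and chosen only for illustration. They are not taken from the original post.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    # Residual sum of squares: variation the model fails to explain.
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred))
    # Total sum of squares: variation of y around its own mean.
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for n observations and p predictors (intercept not counted in p)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


y_true = [3, 5, 7, 9]
y_pred = [2.8, 5.1, 7.2, 8.9]  # hypothetical predictions from a one-predictor model
r2 = r_squared(y_true, y_pred)
adj = adjusted_r_squared(r2, n=len(y_true), p=1)
print(round(r2, 4), round(adj, 4))  # 0.995 0.9925
```

The adjusted value comes out slightly lower than R². That is because the formula charges a penalty for the predictor relative to only four observations.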

-

Linear Regression – Assumption – 5 (Autocorrelation In Regression)

Autocorrelation

Table Of Contents:
What Is Autocorrelation?
Assumption Of No Autocorrelation.
Why Is No Autocorrelation Important?
Common Causes Of Autocorrelation.
Detecting Autocorrelation.
Addressing Autocorrelation.
Examples Of Autocorrelation In Residuals.

(1) What Is Autocorrelation?
In linear regression, autocorrelation refers to the correlation of the model’s residuals (errors) with their own earlier values. It is most common in time-series data or other data with a sequential order. The assumption of no autocorrelation is one of the key assumptions for the validity of a linear regression model.

(2) Assumption Of No Autocorrelation.
(3) Why Is No Autocorrelation Important?
(4) Common Causes Of Autocorrelation.
Omitted Variables: Missing important predictors
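The sketch below makes the definition concrete. It computes the lag-1 autocorrelation of a residual series and the Durbin-Watson statistic, a widely used diagnostic for first-order autocorrelation. This excerpt does not show which detection method the full article uses, so treat Durbin-Watson as one possible check. The residual series are made-up examples.

```python
def lag1_autocorrelation(residuals):
    """Correlation between each residual and the one immediately before it."""
    n = len(residuals)
    mean_e = sum(residuals) / n
    num = sum((residuals[t] - mean_e) * (residuals[t - 1] - mean_e) for t in range(1, n))
    den = sum((e - mean_e) ** 2 for e in residuals)
    return num / den


def durbin_watson(residuals):
    """Durbin-Watson statistic: about 2 means no lag-1 autocorrelation;
    values toward 0 suggest positive, toward 4 negative autocorrelation."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den


alternating = [1, -1, 1, -1]  # sign flips every step: negative autocorrelation
drifting = [1, 1, -1, -1]     # neighbours tend to share a sign: positive autocorrelation
print(lag1_autocorrelation(alternating), durbin_watson(alternating))  # -0.75 3.0
print(lag1_autocorrelation(drifting), durbin_watson(drifting))        # 0.25 1.0
```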

-

Linear Regression – Assumption – 4 (Homoscedasticity In Detail)

Homoscedasticity

Table Of Contents:
What Is Homoscedasticity?
Why Is Homoscedasticity Important?
How To Identify Homoscedasticity?
Examples Of Homoscedasticity.
Consequences Of Violating Homoscedasticity.
How To Fix Heteroscedasticity?
In Summary.

(1) What Is Homoscedasticity?
Homoscedasticity is the linear regression assumption that the variance of the errors (residuals) is constant across all levels of the independent variables. In other words, the spread of the residuals should be roughly the same for all predicted values of the dependent variable.

(2) Why Is Homoscedasticity Important?
Homoscedasticity is a key assumption in linear regression because:
Accuracy of Predictions: When the variance of residuals is
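A crude way to see the "constant spread" idea is to sort residuals by fitted value and compare the variance in the upper and lower halves. This is the intuition behind the Goldfeld-Quandt test. The function below is a hypothetical, simplified sketch of that idea, not a formal test: it applies no F-test and drops no middle observations. The data are invented.

```python
from statistics import pvariance


def variance_ratio_by_fitted(fitted, residuals):
    """Rough heteroscedasticity check: residual variance for the upper half of
    fitted values divided by that for the lower half. Under constant variance
    the ratio should be near 1."""
    pairs = sorted(zip(fitted, residuals))  # order residuals by fitted value
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[-half:]]
    return pvariance(high) / pvariance(low)


fitted = [1, 2, 3, 4, 5, 6]
constant_spread = [1, -1, 1, -1, 1, -1]
funnel = [1, -1, 1, -5, 5, -5]  # spread grows with the fitted value
print(round(variance_ratio_by_fitted(fitted, constant_spread), 6))  # 1.0
print(round(variance_ratio_by_fitted(fitted, funnel), 6))           # 25.0
```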

-

Linear Regression – Assumption – 2 (Variance Inflation Factor)

Variance Inflation Factor

Table Of Contents:
What Is VIF?
How Does VIF Work?
Mathematical Formula.
Interpreting VIF Values.
Example Of VIF.
Why Is VIF Important?
How To Handle High VIF?
Summary.

(1) What Is VIF?
The Variance Inflation Factor (VIF) is a statistical measure used to detect multicollinearity in a regression model. It quantifies how much the variance of a regression coefficient is inflated due to multicollinearity among the independent variables. In simpler terms, VIF helps identify whether a predictor is strongly correlated with other predictors in the model.

(2) How Does VIF Work?
If an independent variable is highly correlated with
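The standard definition is VIF_j = 1 / (1 - R_j²). Here R_j² is the R-squared from regressing predictor x_j on all the other predictors. The minimal sketch below covers only the two-predictor case, where R_j² equals the squared Pearson correlation between the two predictors. The helper names and data are hypothetical.

For many predictors, statsmodels provides `variance_inflation_factor` in `statsmodels.stats.outliers_influence`. Common rules of thumb treat a VIF above 5 or 10 as a warning sign.

```python
def pearson_r(x, y):
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def vif_two_predictors(x1, x2):
    """VIF_j = 1 / (1 - R_j^2). With only two predictors, R_j^2 from regressing
    one on the other equals their squared correlation, so both share one VIF."""
    r2 = pearson_r(x1, x2) ** 2
    if r2 >= 1.0:
        return float("inf")  # perfect collinearity: VIF is unbounded
    return 1.0 / (1.0 - r2)


x1 = [1, 2, 3, 4]
x2_unrelated = [1, -1, -1, 1]  # zero sample correlation with x1
x2_related = [2, 4, 6, 9]      # almost a multiple of x1
print(vif_two_predictors(x1, x2_unrelated))            # 1.0
print(round(vif_two_predictors(x1, x2_related), 2))    # 89.17
```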

-

Linear Regression – Assumption – 2 (Effect Of Multicollinearity In Regression Model.)

Effect Of Multicollinearity In Regression Model.

Table Of Contents:
What Is Multicollinearity?
Effects Of Multicollinearity.

(1) What Is Multicollinearity?
Multicollinearity refers to a situation in multiple regression where two or more independent variables are highly correlated. These variables share much of the same information, which makes it difficult for the regression model to separate their individual effects on the dependent variable.

(2) Effects Of Multicollinearity.
Here’s how multicollinearity affects regression coefficients:
1. Instability of Coefficients
When multicollinearity exists, small changes in the data can lead to large changes in the estimated regression coefficients. This happens because
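The instability described above can be reproduced with a tiny example. The sketch fits y on two nearly identical predictors by solving the 2x2 normal equations directly on centered data. It then changes a single observation by 0.1 and refits. The data and the helper `ols_two_predictors` are hypothetical, made up for illustration.

```python
def ols_two_predictors(x1, x2, y):
    """OLS fit of y = b0 + b1*x1 + b2*x2 via the 2x2 normal equations on centered data."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    d1 = [a - m1 for a in x1]
    d2 = [b - m2 for b in x2]
    dy = [c - my for c in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 ** 2  # close to 0 when x1 and x2 are nearly collinear
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


x1 = [1, 2, 3, 4, 5]
x2 = [1.01, 1.99, 3.02, 3.98, 5.00]  # almost identical to x1
y = [3, 5, 7, 9, 11]                  # exactly 1 + 2*x1
y_nudged = [3, 5, 7, 9, 11.1]         # last observation changed by 0.1

_, b1, b2 = ols_two_predictors(x1, x2, y)
_, b1_n, b2_n = ols_two_predictors(x1, x2, y_nudged)
print(b1, b2)                          # approximately 2 and 0
print(round(b1_n, 3), round(b2_n, 3))  # 1.363 0.659
```

The individual coefficients swing by roughly 0.65. Their sum stays close to 2, which is the combined effect the data can actually pin down.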

-

Linear Regression – Assumption – 2 (What Is A Singular Matrix?)

What Is A Singular Matrix?

Table Of Contents:
What Is A Singular Matrix?

(1) What Is A Singular Matrix?
A singular matrix is a square matrix that does not have an inverse. This happens exactly when its determinant is equal to zero. In other words, a matrix is singular if it is not full rank: some of its rows or columns are linearly dependent, so at least one of them can be expressed as a linear combination of the others.

(2) Properties Of A Singular Matrix.
(3) Example Of A Singular Matrix.
Example-1:
Example-2:
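The definition can be checked numerically. The sketch computes determinants by cofactor expansion, which is adequate only for small matrices. It then shows that a design matrix with a perfectly collinear column produces a singular XᵀX, which is why OLS cannot be solved by inverting XᵀX in that case.

Integer entries are used so that the `== 0` test is exact. With floating-point data, a tolerance or a rank computation would be needed instead. The helper names are hypothetical.

```python
def determinant(m):
    """Determinant by cofactor expansion along the first row (fine for small matrices)."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0
    for j in range(n):
        # Minor: drop row 0 and column j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * determinant(minor)
    return total


def is_singular(m):
    """A square matrix is singular exactly when its determinant is zero."""
    return determinant(m) == 0


def gram(x_rows):
    """X^T X for a design matrix given as a list of rows."""
    k = len(x_rows[0])
    return [[sum(row[i] * row[j] for row in x_rows) for j in range(k)] for i in range(k)]


print(is_singular([[1, 2], [2, 4]]))                   # True: row 2 = 2 * row 1
print(is_singular([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # True: row 3 = 2 * row 2 - row 1
print(is_singular([[2, 1], [1, 3]]))                   # False: determinant is 5

# Design matrix with an intercept column and a third column equal to 2 * the second.
X = [[1, 1, 2], [1, 2, 4], [1, 3, 6]]
print(is_singular(gram(X)))  # True: X^T X cannot be inverted
```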

-

Linear Regression – Assumption – 2 (What Is Standard Error?)

What Is Standard Error?

Table Of Contents:
What Is Standard Error?
Why Is Standard Error Called "Standard"?
Factors Affecting Standard Error.
Theoretical Range Of Standard Error.
Key Concepts In Standard Error.
Types Of Standard Error.
Mathematical Example Of Standard Error.
Why Does Standard Error Matter?
Practical Example Of Standard Error.
Linear Regression Coefficients Standard Error.

(1) What Is Standard Error?
The Standard Error (SE) is a measure of the variability or uncertainty of a statistic, such as a mean, proportion, or regression coefficient, when calculated from a sample. It quantifies how much a sample statistic (e.g., the sample mean, x̄) is
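As a concrete instance, the standard error of the sample mean is s / √n. In simple linear regression, the standard error of the slope is √((SS_res / (n - 2)) / S_xx). The sketch below implements both with the standard library. The data are made-up examples, and the full article may present the formulas differently.

```python
import math
from statistics import stdev


def standard_error_of_mean(sample):
    """SE of the sample mean: s / sqrt(n), with s the sample standard deviation."""
    return stdev(sample) / math.sqrt(len(sample))


def slope_standard_error(x, y):
    """SE of the OLS slope in simple regression: sqrt((SS_res / (n - 2)) / S_xx)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    b0 = my - b1 * mx
    ss_res = sum((b - (b0 + b1 * a)) ** 2 for a, b in zip(x, y))
    return math.sqrt(ss_res / (n - 2) / sxx)


print(round(standard_error_of_mean([1, 2, 3, 4, 5]), 4))                 # 0.7071
print(round(slope_standard_error([1, 2, 3, 4, 5], [2, 4, 5, 4, 5]), 4))  # 0.2828
```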

-

Linear Regression – What Is a Biased Estimator In Linear Regression?

What Is a Biased Estimator In Linear Regression?
In linear regression, estimator bias refers to a systematic deviation of the estimated coefficients from their true population values. A biased estimator produces estimates whose average (expected value) differs from the true value. In the context of linear regression, an estimator can be biased for several reasons:
Omitted Variable Bias: If relevant variables are excluded from the regression model, the estimated coefficients may be biased. Omitted variable bias arises when the omitted variables are correlated with both the independent variables included in the model and the
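Omitted variable bias can be seen in a small simulation. In this hypothetical setup, y depends on x1 and x2 with true coefficients 2 and 3, and x2 = 0.5·x1 + noise. Regressing y on x1 alone then gives a slope that converges to 2 + 3 × 0.5 = 3.5 rather than 2. When x2 is generated independently of x1, the same short regression stays near 2. The coefficients, seed, and sample size are arbitrary choices for illustration.

```python
import random


def simple_slope(x, y):
    """OLS slope of y on a single predictor x (intercept included)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


rng = random.Random(0)
n = 20_000
x1 = [rng.gauss(0, 1) for _ in range(n)]

# Case 1: the omitted variable x2 is correlated with x1.
x2_corr = [0.5 * a + rng.gauss(0, 1) for a in x1]
y_corr = [1 + 2 * a + 3 * b + rng.gauss(0, 1) for a, b in zip(x1, x2_corr)]

# Case 2: the omitted variable x2 is independent of x1.
x2_indep = [rng.gauss(0, 1) for _ in range(n)]
y_indep = [1 + 2 * a + 3 * b + rng.gauss(0, 1) for a, b in zip(x1, x2_indep)]

# Both regressions leave x2 out; only the first is biased.
print(round(simple_slope(x1, y_corr), 2))   # near 3.5, not the true 2
print(round(simple_slope(x1, y_indep), 2))  # near 2
```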

-

Linear Regression – Assumption – 6 (What Is Endogeneity?)

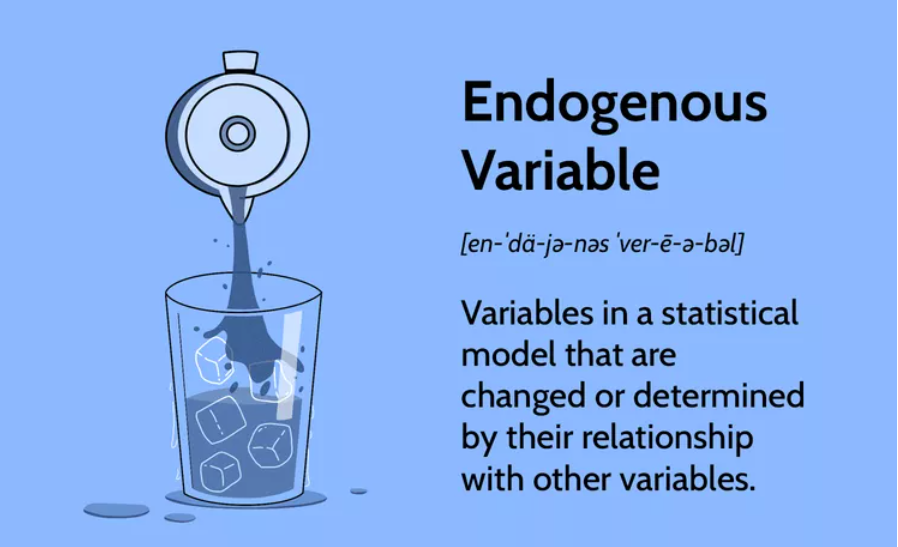

What Is Endogeneity?

Table Of Contents:
What Is Endogeneity?
Why Does Endogeneity Occur?
How To Avoid Endogeneity?

(1) What Is Endogeneity?
Endogeneity refers to a situation in which an explanatory variable is correlated with the error term in a statistical model. This correlation can arise when the explanatory variable is influenced by factors that are not accounted for in the model, and it leads to biased and inconsistent estimates of the model parameters.

(2) Why Does Endogeneity Occur?
In the context of regression analysis, endogeneity can occur when one or more of the following conditions are
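The sketch below simulates an endogenous regressor. Here x is built from an instrument z plus a share of the error term, so OLS on x is biased: its limit is 2 + 0.8 / 1.64 ≈ 2.49, not the true 2.

It also computes a simple instrumental-variable estimate, cov(z, y) / cov(z, x), as one standard remedy. The excerpt does not say which remedies the full article covers. The IV estimate is valid only if z affects y solely through x and is uncorrelated with the error. All numbers and names are arbitrary illustrations.

```python
import random


def cov(a, b):
    """Sample covariance (n - 1 denominator)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / (n - 1)


rng = random.Random(42)
n = 20_000
z = [rng.gauss(0, 1) for _ in range(n)]          # instrument: drives x, unrelated to the error
err = [rng.gauss(0, 1) for _ in range(n)]        # structural error term
x = [zi + 0.8 * ei for zi, ei in zip(z, err)]    # x is correlated with the error: endogenous
y = [1 + 2 * xi + ei for xi, ei in zip(x, err)]  # true slope is 2

ols_slope = cov(x, y) / cov(x, x)  # biased: converges to 2 + 0.8 / 1.64, about 2.49
iv_slope = cov(z, y) / cov(z, x)   # simple instrumental-variable estimate, converges to 2
print(round(ols_slope, 2), round(iv_slope, 2))
```

Increasing `n` does not remove the OLS bias here; it only shrinks the noise around the wrong value. That is what "inconsistent" means in the definition above.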