Autocorrelation

Table Of Contents:

- What Is Autocorrelation?

- Assumption Of No Autocorrelation.

- Why No Autocorrelation Is Important?

- Common Causes Of Autocorrelation.

- Detecting Autocorrelation.

- Addressing Autocorrelation.

- Examples Of Autocorrelation In Residuals.

(1) What Is Autocorrelation?

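- In linear regression, autocorrelation (also called serial correlation) refers to correlation between the residuals (errors) of the model and their own earlier values: the residual at one observation is correlated with the residuals at nearby observations. It arises most often in time-series data or other data with a sequential order.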

- The assumption of no autocorrelation is one of the key assumptions of a linear regression model; the usual standard errors, t-tests and confidence intervals rely on it.
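To make the definition concrete, here is a minimal numpy sketch that fits OLS and measures the correlation between the residuals and lagged copies of themselves. The data are simulated under an assumed AR(1) error process with coefficient 0.7, and `residual_autocorrelation` is a hypothetical helper, not part of any library. For such errors the lag-1, lag-2 and lag-3 values should come out close to 0.7, 0.7^2 ≈ 0.49 and 0.7^3 ≈ 0.34.

```python
import numpy as np


def residual_autocorrelation(resid, lag):
    """Sample autocorrelation of a residual series at the given lag (lag >= 1)."""
    resid = np.asarray(resid, dtype=float)
    centered = resid - resid.mean()
    # Cross-products of each residual with the one `lag` steps earlier, scaled by the total sum of squares
    return np.sum(centered[lag:] * centered[:-lag]) / np.sum(centered ** 2)


rng = np.random.default_rng(0)
n = 500
x = rng.normal(size=n)

# Assumed AR(1) errors: each error keeps 70% of the previous one plus fresh noise
errors = np.zeros(n)
for t in range(1, n):
    errors[t] = 0.7 * errors[t - 1] + rng.normal()
y = 1.0 + 2.0 * x + errors

# Ordinary least squares fit of y on an intercept and x
X = np.column_stack([np.ones(n), x])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ coef

acf = {lag: residual_autocorrelation(resid, lag) for lag in (1, 2, 3)}
for lag, r in acf.items():
    print(f"lag {lag}: {r:.2f}")
```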

(2) Assumption Of No Autocorrelation.
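In standard textbook notation (the symbols below are assumed, since these notes do not define any), the assumption says that the error terms of different observations are uncorrelated, so the error covariance matrix is diagonal. AR(1) errors, where u_t is white noise and the process is stationary, are a common example of how it fails:

```latex
y_t = \beta_0 + \beta_1 x_{t1} + \dots + \beta_p x_{tp} + \varepsilon_t, \qquad t = 1, \dots, n

\operatorname{Cov}(\varepsilon_t, \varepsilon_s \mid X) = 0 \quad \text{for all } t \neq s

\text{with constant variance: } \operatorname{Var}(\varepsilon \mid X) = \sigma^2 I_n

\text{violation, AR(1) errors: } \varepsilon_t = \rho\,\varepsilon_{t-1} + u_t,\ 0 < |\rho| < 1
\;\Longrightarrow\; \operatorname{Corr}(\varepsilon_t, \varepsilon_{t-k}) = \rho^{k} \neq 0
```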

(3) Why No Autocorrelation Is Important?
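A small Monte Carlo sketch of why the assumption matters, under an assumed setup: n = 100 observations, with both the regressor and the errors following AR(1) processes with coefficient 0.8 (`ar1` and `ols_slope_and_se` are hypothetical helpers). Because the regressor is independent of the errors, the OLS slope stays centred on the true value across repeated samples, but the conventional OLS standard error understates how much the slope actually varies, so t-tests and confidence intervals become overconfident.

```python
import numpy as np


def ar1(n, rho, rng):
    """Simulate a stationary AR(1) series: z_t = rho * z_{t-1} + standard normal noise."""
    z = np.zeros(n)
    z[0] = rng.normal() / np.sqrt(1 - rho ** 2)  # start from the stationary distribution
    for t in range(1, n):
        z[t] = rho * z[t - 1] + rng.normal()
    return z


def ols_slope_and_se(x, y):
    """OLS slope and its conventional standard error, which assumes uncorrelated errors."""
    X = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return coef[1], np.sqrt(cov[1, 1])


rng = np.random.default_rng(42)
n, reps, true_slope = 100, 500, 2.0
slopes, reported_se = [], []
for _ in range(reps):
    x = ar1(n, 0.8, rng)                          # assumed persistent regressor
    y = 1.0 + true_slope * x + ar1(n, 0.8, rng)   # assumed persistent errors
    b, se = ols_slope_and_se(x, y)
    slopes.append(b)
    reported_se.append(se)

slopes = np.array(slopes)
actual_sd = slopes.std(ddof=1)     # how much the slope really varies across samples
typical_se = np.mean(reported_se)  # how much OLS claims it varies
print(f"mean slope:     {slopes.mean():.3f}")
print(f"actual SD:      {actual_sd:.3f}")
print(f"average OLS SE: {typical_se:.3f}")
```

With these settings the actual spread should come out at roughly twice the average reported standard error; the exact figures depend on the seed.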

(4) Common Causes Of Autocorrelation.

- Omitted Variables:

- Leaving out an important predictor, especially one that changes gradually over time or sequence, pushes its effect into the residuals, which then follow the same pattern.

- Misspecified Model:

- Using an incorrect functional form or missing time-lagged relationships in time-series data.

- Measurement Errors:

- Systematic errors in data collection (for example, a measuring instrument that drifts over time) can introduce patterns in the residuals.

- Time-Series or Sequential Data:

- Observations close in time or sequence are often correlated.

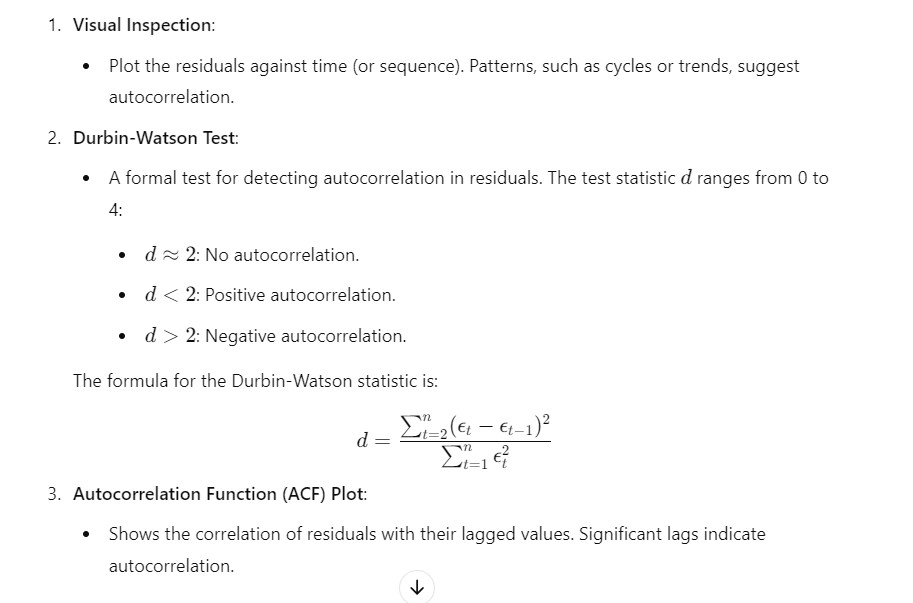
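A minimal sketch of the "Misspecified Model" cause, on assumed data: the true relationship is quadratic with independent noise, and the observations are ordered by x, much like a time index. Fitting a straight line leaves the unmodelled curvature in the residuals, so neighbouring residuals share the same sign and the lag-1 autocorrelation is high, while fitting the correct quadratic form should bring it close to zero. `lag1_autocorrelation` and `ols_residuals` are hypothetical helpers.

```python
import numpy as np


def lag1_autocorrelation(resid):
    """Correlation between each residual and the one before it."""
    centered = resid - resid.mean()
    return np.sum(centered[1:] * centered[:-1]) / np.sum(centered ** 2)


def ols_residuals(X, y):
    """Residuals from an ordinary least squares fit of y on the columns of X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ coef


rng = np.random.default_rng(1)
n = 200
x = np.linspace(0, 10, n)  # observations ordered by x, much like a time index
# Assumed truth: a quadratic relationship with independent noise
y = 0.5 * x ** 2 + rng.normal(scale=2.0, size=n)

ones = np.ones(n)
r_straight = lag1_autocorrelation(ols_residuals(np.column_stack([ones, x]), y))
r_quadratic = lag1_autocorrelation(ols_residuals(np.column_stack([ones, x, x ** 2]), y))

print(f"straight line (misspecified): {r_straight:.2f}")
print(f"quadratic (correct form):     {r_quadratic:.2f}")
```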

(5) Detecting Autocorrelation.
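One standard check for first-order autocorrelation is the Durbin-Watson statistic, d = Σ(e_t - e_{t-1})^2 / Σ e_t^2, computed below with plain numpy. `durbin_watson` and `simulate_residuals` are hypothetical helpers, and the AR(1) simulation settings are assumptions. Since d ≈ 2(1 - r1), where r1 is the lag-1 residual autocorrelation, values near 2 suggest no first-order autocorrelation, values toward 0 suggest positive autocorrelation and values toward 4 suggest negative autocorrelation. A formal test compares d against tabulated critical bounds, which this sketch does not do.

```python
import numpy as np


def durbin_watson(resid):
    """Durbin-Watson d: squared successive differences over the residual sum of squares."""
    resid = np.asarray(resid, dtype=float)
    return np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2)


def simulate_residuals(rho, n, rng):
    """OLS residuals from y = 1 + 2x + e, where e follows an assumed AR(1) with coefficient rho."""
    x = rng.normal(size=n)
    e = np.zeros(n)
    for t in range(1, n):
        e[t] = rho * e[t - 1] + rng.normal()
    y = 1.0 + 2.0 * x + e
    X = np.column_stack([np.ones(n), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ coef


rng = np.random.default_rng(7)
dw = {rho: durbin_watson(simulate_residuals(rho, 500, rng)) for rho in (0.7, 0.0, -0.5)}
for rho, d in dw.items():
    print(f"error AR(1) coefficient {rho:+.1f}: d = {d:.2f}")
```

With positively correlated errors d should land well below 2, with independent errors near 2, and with negatively correlated errors above 2.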

(6) Addressing Autocorrelation.

Add Lagged Variables:

- Include lagged dependent or independent variables to account for temporal effects.
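A sketch of the lagged-variable fix, assuming a hypothetical dynamic process y_t = 0.7 y_{t-1} + 2 x_t + u_t with independent noise u_t. Regressing y_t on x_t alone leaves the omitted y_{t-1} in the residuals, which become strongly autocorrelated; adding y_{t-1} as a regressor should bring the residual lag-1 autocorrelation close to zero and recover a lag coefficient near 0.7. `lag1_autocorrelation` and `ols` are hypothetical helpers.

```python
import numpy as np


def lag1_autocorrelation(resid):
    """Correlation between each residual and the one before it."""
    centered = resid - resid.mean()
    return np.sum(centered[1:] * centered[:-1]) / np.sum(centered ** 2)


def ols(X, y):
    """OLS coefficients and residuals."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, y - X @ coef


rng = np.random.default_rng(3)
n = 500
x = rng.normal(size=n)
y = np.zeros(n)
for t in range(1, n):
    # Assumed dynamic process: this period's y carries over 70% of last period's y
    y[t] = 0.7 * y[t - 1] + 2.0 * x[t] + rng.normal()

# Both models use observations t = 1..n-1 so they are fitted on the same sample
ones = np.ones(n - 1)
_, resid_static = ols(np.column_stack([ones, x[1:]]), y[1:])                   # y_t on x_t only
coef_dyn, resid_dynamic = ols(np.column_stack([ones, x[1:], y[:-1]]), y[1:])  # adds y_{t-1}

print(f"static model residual lag-1 autocorrelation:  {lag1_autocorrelation(resid_static):.2f}")
print(f"dynamic model residual lag-1 autocorrelation: {lag1_autocorrelation(resid_dynamic):.2f}")
print(f"estimated coefficient on y_(t-1): {coef_dyn[2]:.2f}")
```

When a lagged dependent variable is a regressor, the Durbin-Watson statistic is biased toward 2, so tests such as Durbin's h or Breusch-Godfrey are usually preferred for checking the remaining residuals.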

Transform Variables:

- Use differencing or other transformations to remove trends or cycles.
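A sketch of first differencing, assuming both the regressor and the errors are random walks (a strong stochastic trend). In levels the residuals are almost perfectly autocorrelated; regressing Δy_t = y_t - y_{t-1} on Δx_t should leave roughly independent residuals and a slope near the assumed true value of 2. Differencing cancels the intercept, so the differenced model is fitted without one. `lag1_autocorrelation` is a hypothetical helper.

```python
import numpy as np


def lag1_autocorrelation(resid):
    """Correlation between each residual and the one before it."""
    centered = resid - resid.mean()
    return np.sum(centered[1:] * centered[:-1]) / np.sum(centered ** 2)


rng = np.random.default_rng(5)
n = 500
x = np.cumsum(rng.normal(size=n))       # assumed trending regressor (random walk)
errors = np.cumsum(rng.normal(size=n))  # assumed random-walk errors: every shock persists
y = 3.0 + 2.0 * x + errors

# Levels: y_t on an intercept and x_t
X = np.column_stack([np.ones(n), x])
coef_levels, *_ = np.linalg.lstsq(X, y, rcond=None)
resid_levels = y - X @ coef_levels

# First differences: the intercept cancels, so fit dy_t = b * dx_t without one
dy, dx = np.diff(y), np.diff(x)
slope_diff = np.sum(dx * dy) / np.sum(dx ** 2)
resid_diff = dy - slope_diff * dx

print(f"levels:      lag-1 autocorrelation {lag1_autocorrelation(resid_levels):.2f}")
print(f"differences: lag-1 autocorrelation {lag1_autocorrelation(resid_diff):.2f}, slope {slope_diff:.2f}")
```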

Generalized Least Squares (GLS):

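- Estimate the regression with an explicit model of how the residuals are correlated (for example, AR(1) errors) and transform the data so that the transformed errors are uncorrelated; feasible GLS procedures such as Cochrane-Orcutt estimate this correlation from the OLS residuals.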
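A minimal sketch of feasible GLS using the iterated Cochrane-Orcutt procedure, one specific GLS method for AR(1) errors. The simulated data (an AR(1) regressor with coefficient 0.5 and AR(1) errors with coefficient 0.7) are assumptions, and `ols` and `cochrane_orcutt` are hypothetical helpers rather than a library API. Each iteration estimates rho from the residuals, quasi-differences every variable as z_t - rho * z_{t-1}, and refits OLS on the transformed data. Because the column of ones becomes a column of (1 - rho), the fitted coefficients are the original intercept and slope.

```python
import numpy as np


def ols(X, y):
    """OLS coefficients and residuals."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, y - X @ coef


def cochrane_orcutt(X, y, n_iter=10):
    """Iterated Cochrane-Orcutt feasible GLS for a regression with AR(1) errors.

    X must already contain a column of ones if an intercept is wanted.
    Returns the coefficients and the estimated AR(1) coefficient rho.
    """
    coef, resid = ols(X, y)  # step 0: ordinary least squares
    rho = 0.0
    for _ in range(n_iter):
        # Estimate rho by regressing each residual on the previous one (no intercept)
        rho = np.sum(resid[1:] * resid[:-1]) / np.sum(resid[:-1] ** 2)
        # Quasi-difference every variable: z_t - rho * z_{t-1} (the first observation is dropped)
        y_star = y[1:] - rho * y[:-1]
        X_star = X[1:] - rho * X[:-1]
        coef, _ = ols(X_star, y_star)
        # Residuals of the original equation under the updated coefficients
        resid = y - X @ coef
    return coef, rho


rng = np.random.default_rng(9)
n = 500
x = np.zeros(n)
e = np.zeros(n)
for t in range(1, n):
    x[t] = 0.5 * x[t - 1] + rng.normal()  # assumed persistent regressor
    e[t] = 0.7 * e[t - 1] + rng.normal()  # assumed AR(1) errors, rho = 0.7
y = 1.0 + 2.0 * x + e

X = np.column_stack([np.ones(n), x])
coef_gls, rho_hat = cochrane_orcutt(X, y)
print(f"estimated rho: {rho_hat:.2f}, intercept: {coef_gls[0]:.2f}, slope: {coef_gls[1]:.2f}")
```

Dropping the first observation is the Cochrane-Orcutt convention; the Prais-Winsten variant keeps it by scaling it with sqrt(1 - rho^2).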

Use Time-Series Models:

- For strongly autocorrelated data, consider models like ARIMA (Auto-Regressive Integrated Moving Average) or similar time-series-specific models.
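A sketch of the smallest ARIMA model that uses the "integrated" part, ARIMA(1,1,0): difference the series once, then fit an AR(1) to the differences. It is estimated here by conditional least squares with numpy only, on a hypothetical series whose differences follow an AR(1) with coefficient 0.5; `fit_arima_110` and `forecast_next` are hypothetical helpers. Dedicated libraries (for example the `ARIMA` model class in statsmodels) use exact maximum likelihood and also handle moving-average terms, which this sketch does not.

```python
import numpy as np

rng = np.random.default_rng(11)
n, phi_true = 1000, 0.5

# Hypothetical series whose first differences follow an AR(1): an ARIMA(1,1,0) process
diffs = np.zeros(n)
for t in range(1, n):
    diffs[t] = phi_true * diffs[t - 1] + rng.normal()
y = 100.0 + np.cumsum(diffs)


def fit_arima_110(y):
    """Conditional least squares for ARIMA(1,1,0) with a constant."""
    d = np.diff(y)                                       # "I": difference once (d = 1)
    X = np.column_stack([np.ones(len(d) - 1), d[:-1]])   # "AR": regress on the previous difference
    (const, phi), *_ = np.linalg.lstsq(X, d[1:], rcond=None)
    return const, phi


def forecast_next(y, const, phi):
    """One-step-ahead forecast: last level plus the predicted next difference."""
    last_diff = y[-1] - y[-2]
    return y[-1] + const + phi * last_diff


const, phi = fit_arima_110(y)
print(f"estimated phi: {phi:.2f}")
print(f"next-value forecast: {forecast_next(y, const, phi):.2f}")
```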

Clustered Standard Errors:

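- Keep the OLS coefficients but adjust their standard errors so they remain valid when residuals are correlated: cluster-robust standard errors allow arbitrary correlation within each group (for example, within each entity over time in panel data), while HAC (Newey-West) standard errors serve the same purpose for a single time series.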
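A minimal sketch of cluster-robust standard errors, using the basic CR0 sandwich estimator (X'X)^-1 [Σ_g X_g' e_g e_g' X_g] (X'X)^-1. The simulated panel is an assumption: 100 entities observed for 20 periods, with both the regressor and the errors following AR(1) processes (coefficient 0.7) within each entity. `cluster_robust_se` and `ar1_path` are hypothetical helpers. Because the within-entity correlation is ignored by the naive formula, the clustered slope standard error should come out noticeably larger. Statistical packages often multiply the sandwich by a small-sample correction factor, which this sketch omits.

```python
import numpy as np


def cluster_robust_se(X, y, groups):
    """OLS coefficients with naive and cluster-robust (CR0 sandwich) standard errors."""
    XtX_inv = np.linalg.inv(X.T @ X)
    coef = XtX_inv @ X.T @ y
    resid = y - X @ coef
    n, k = X.shape
    # Naive SEs assume every residual is uncorrelated with every other
    naive = np.sqrt(np.diag(XtX_inv) * (resid @ resid) / (n - k))
    # "Meat": sum over clusters of (X_g' e_g)(X_g' e_g)', allowing any correlation within a cluster
    meat = np.zeros((k, k))
    for g in np.unique(groups):
        score = X[groups == g].T @ resid[groups == g]
        meat += np.outer(score, score)
    robust = np.sqrt(np.diag(XtX_inv @ meat @ XtX_inv))
    return coef, naive, robust


rng = np.random.default_rng(2024)
n_entities, n_periods, rho = 100, 20, 0.7


def ar1_path(length):
    """One stationary AR(1) path for a single entity (assumed data-generating process)."""
    z = np.zeros(length)
    z[0] = rng.normal() / np.sqrt(1 - rho ** 2)
    for t in range(1, length):
        z[t] = rho * z[t - 1] + rng.normal()
    return z


x = np.concatenate([ar1_path(n_periods) for _ in range(n_entities)])
e = np.concatenate([ar1_path(n_periods) for _ in range(n_entities)])
groups = np.repeat(np.arange(n_entities), n_periods)  # entity label for each row
y = 1.0 + 2.0 * x + e
X = np.column_stack([np.ones(len(y)), x])

coef, naive_se, cluster_se = cluster_robust_se(X, y, groups)
print(f"slope: {coef[1]:.3f}")
print(f"naive SE: {naive_se[1]:.4f}, clustered SE: {cluster_se[1]:.4f}")
```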