Linear Regression – Interview Q & A.

Table Of Contents:

- Beginner-Level (Fundamentals)

- What is Linear Regression?

- What is the equation of a simple linear regression model?

- What are the assumptions of linear regression?

- What is the difference between simple and multiple linear regression?

- What do the coefficients in a linear regression model represent?

- How do you interpret the intercept and slope in a regression line?

- What is the cost function used in linear regression?

- What is the difference between correlation and regression?

- What is Mean Squared Error (MSE)?

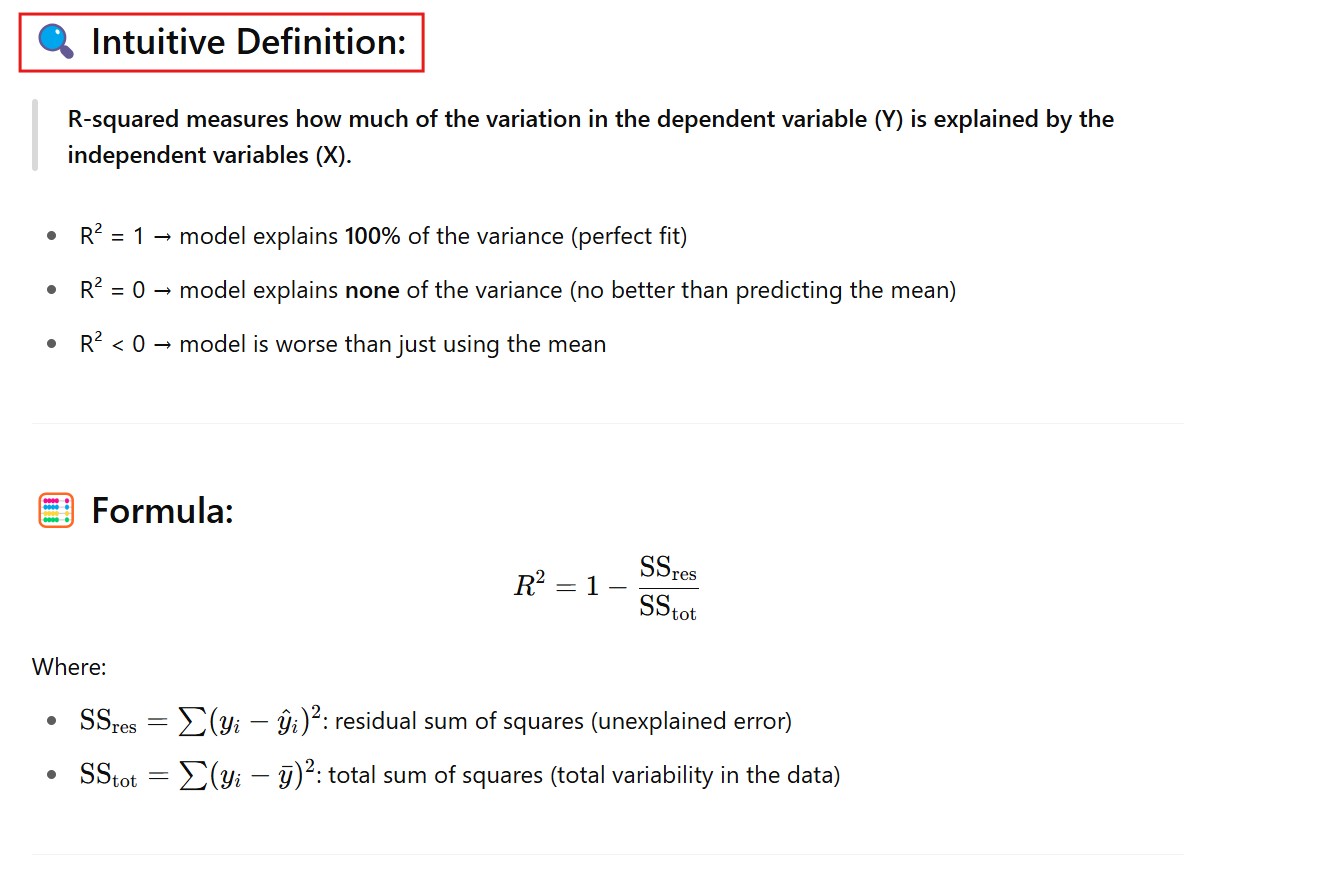

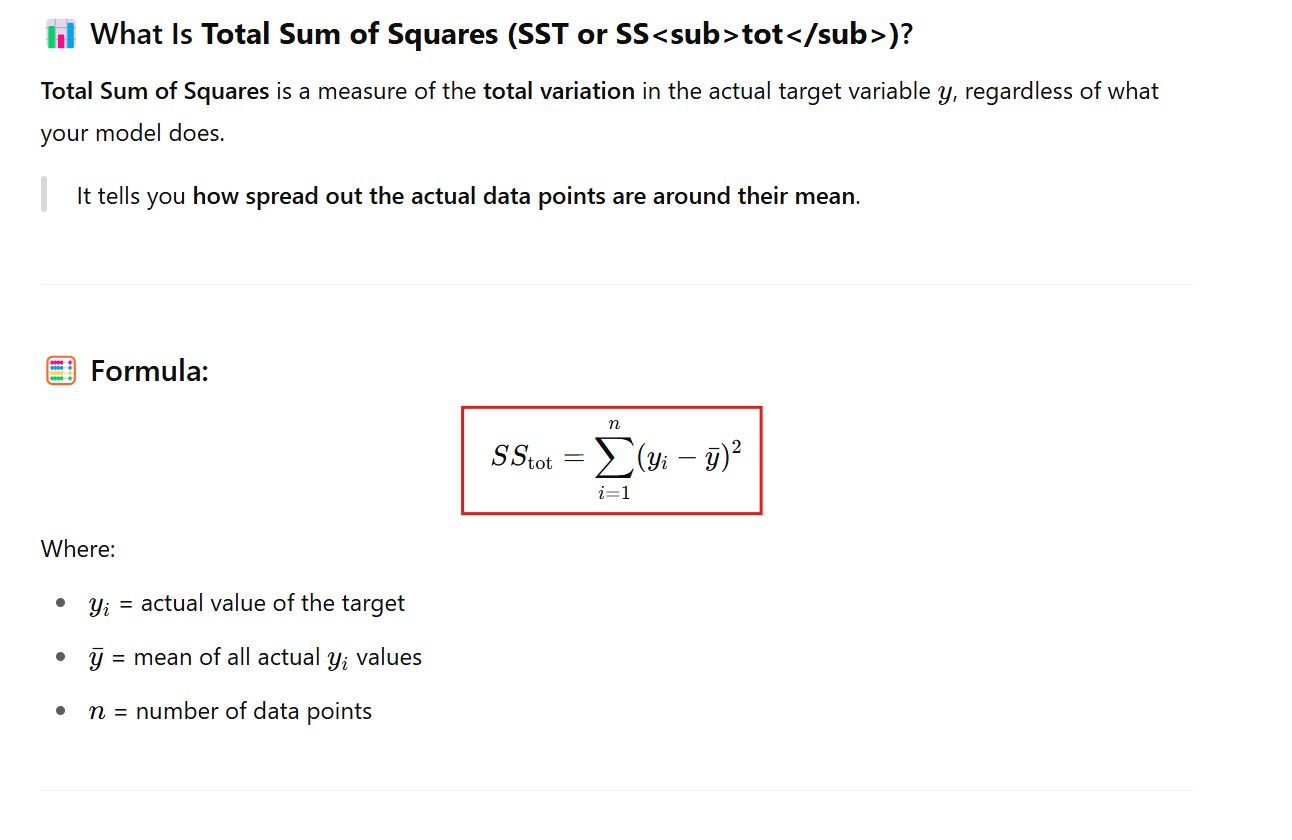

- How is R-squared interpreted?

- What does an R-squared of 0.85 mean?

- What is adjusted R-squared? Why is it used?

- What is multicollinearity? How do you detect it?

- What is the role of the p-value in linear regression?

- Intermediate-Level

- What is the difference between MSE, MAE, and RMSE?

- What happens if the linear regression assumptions are violated?

- How do you check the linearity assumption?

- What is heteroscedasticity and how can you detect it?

- How do you handle categorical variables in linear regression?

- Why do we use dummy variables?

- What is the Variance Inflation Factor (VIF)?

- How would you handle missing data before fitting a linear regression model?

- How do you regularize a linear model? (L1, L2)

- When would you prefer Ridge over Lasso regression?

- How do outliers affect a linear regression model?

- How do you detect and deal with outliers?

- Can linear regression be used for classification?

- Advanced-Level (Experienced)

- How does gradient descent work in linear regression?

- Why might you prefer gradient descent over the normal equation?

- What is the time complexity of fitting a linear regression model using the normal equation?

- Explain the bias-variance tradeoff in the context of linear regression.

- Why does adding more features reduce bias but increase variance?

- What is model overfitting and how can regularization help?

- How would you evaluate a linear regression model’s performance?

- How would you do feature selection for linear regression?

- How would you validate a linear regression model?

- Give an example of a real-world use case for linear regression.

- What are the limitations of linear regression in practice?

- What steps would you take to deploy a linear regression model?

- How would you interpret a regression model to a non-technical stakeholder?

- Bonus: Tricky & Scenario-Based

- What happens if you fit a linear regression model to non-linear data?

- How do you handle high-dimensional data with linear regression?

- Suppose your model has high R² but performs poorly on test data. Why?

- Can you explain what regularization does geometrically?

- What if two features are highly correlated — what would you do?

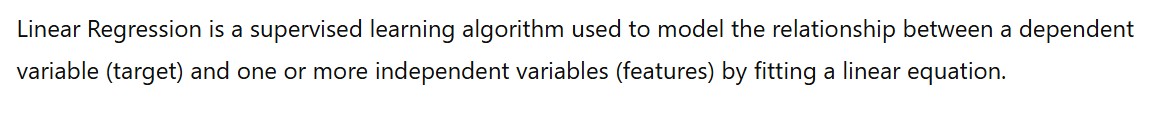

(1) What Is Linear Regression ?

(2) What Is The Equation Of The Simple Linear Regression Model ?
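In standard notation (the symbols below are the conventional ones, assumed here rather than copied from the original figures), the simple linear regression model for observation $i$ is:

$$
y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \qquad i = 1, \dots, n
$$

Here $\beta_0$ is the intercept, $\beta_1$ is the slope and $\varepsilon_i$ is the error term. The fitted line is $\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$.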

(3) Assumptions Of The Linear Regression Model

- A Linear Relationship Should Be Present Between The Independent And Dependent Variables.

- No Multicollinearity.

- Normality Of Error Term.

- Homoscedasticity.

- No Autocorrelation In Error Term.

(4) Simple Vs Multiple Linear Regression.

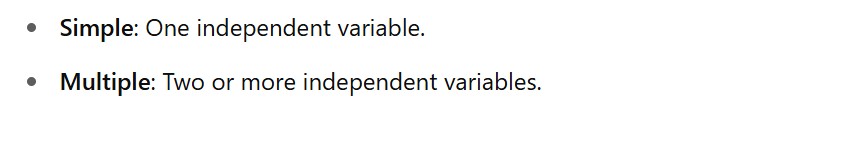
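A minimal NumPy sketch of the difference, assuming synthetic data: simple regression uses one predictor, while multiple regression estimates one coefficient per predictor from the same least-squares fit. The helper `fit_ols` is a hypothetical name, not a library function.

```python
import numpy as np


def fit_ols(X, y):
    """Ordinary least squares with an intercept; returns (intercept, coefficient array)."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:  # simple regression: a single feature passed as a 1-D array
        X = X.reshape(-1, 1)
    # Prepend a column of ones so that the first fitted parameter is the intercept
    design = np.column_stack([np.ones(len(X)), X])
    params, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return params[0], params[1:]


rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = rng.normal(size=200)
y = 3.0 + 2.0 * x1 - 1.5 * x2  # synthetic, noise-free target

# Simple linear regression: one predictor (x2's effect ends up in the errors)
b0_simple, b_simple = fit_ols(x1, y)

# Multiple linear regression: both predictors, one coefficient each
b0_multi, b_multi = fit_ols(np.column_stack([x1, x2]), y)
```

Because the synthetic target has no noise, the multiple-regression fit recovers the intercept 3.0 and coefficients 2.0 and -1.5 up to floating-point error.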

(5) What Do The Coefficients Of Linear Regression Represent ?

- Each coefficient represents the expected change in the dependent variable for a one-unit increase in its independent variable, holding all other variables constant.

- The coefficients have no fixed range; they can take any value, and their magnitude depends on the units of the feature.

- The sign of a coefficient (positive or negative) shows the direction of the relationship.

- If input features are standardized (mean = 0, std = 1), the coefficients are on a common scale, so their magnitudes reflect relative importance more clearly.

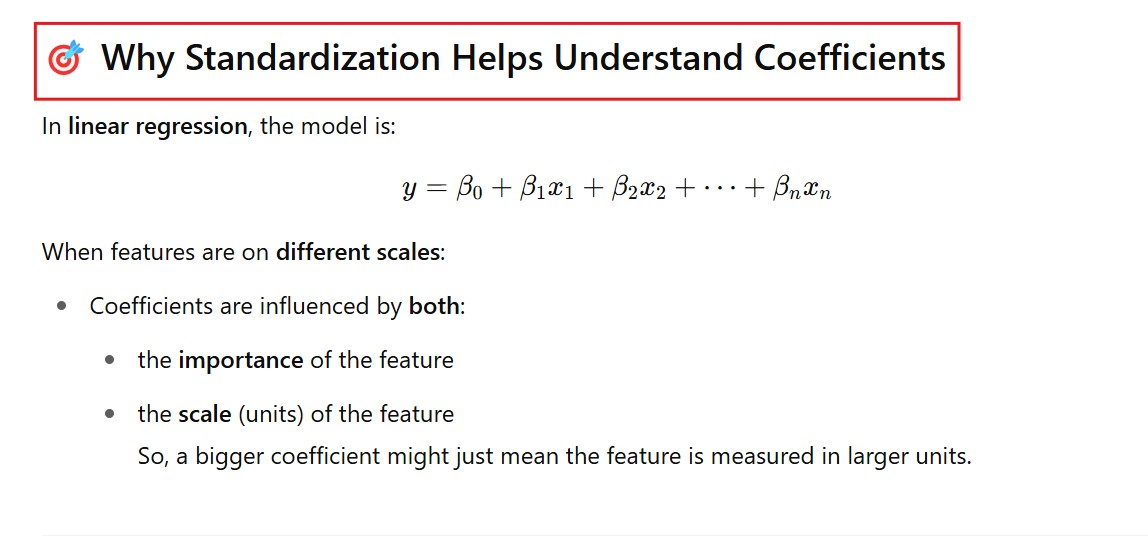
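The "change per unit, holding the others fixed" reading can be checked directly. This sketch uses synthetic data, and the feature names `size_sqm` and `age_years` are hypothetical: raising one feature by 1 while keeping the other constant moves the prediction by exactly that feature's coefficient, and the signs show the direction of each effect.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
size_sqm = rng.uniform(40, 200, n)   # hypothetical feature: floor area
age_years = rng.uniform(0, 50, n)    # hypothetical feature: building age
price = 20 + 1.2 * size_sqm - 0.8 * age_years + rng.normal(0, 5, n)  # synthetic target

# Fit price = b0 + b_size * size + b_age * age by least squares
X = np.column_stack([np.ones(n), size_sqm, age_years])
(intercept, b_size, b_age), *_ = np.linalg.lstsq(X, price, rcond=None)


def predict(size, age):
    return intercept + b_size * size + b_age * age


# One extra unit of size, same age: the prediction changes by b_size
delta = predict(101.0, 10.0) - predict(100.0, 10.0)
```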

(6) How Can We Compare Coefficients Of Different Features ?

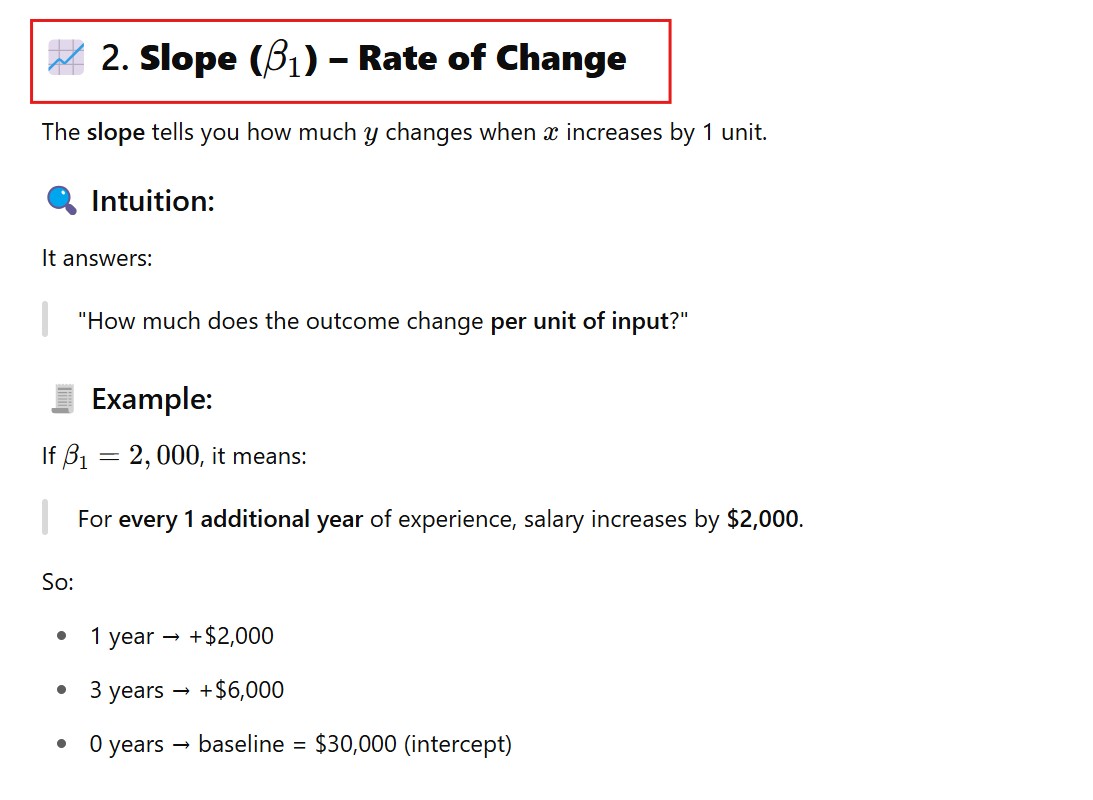
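One common way to compare coefficients is to standardize the features first. This sketch assumes synthetic data with two hypothetical features measured in very different units. The raw coefficients suggest experience matters far more, but after z-scoring, income has the larger effect per standard deviation. Each standardized coefficient equals the raw coefficient multiplied by that feature's standard deviation.

```python
import numpy as np


def standardized_coefficients(X, y):
    """OLS coefficients after z-scoring each feature (mean 0, std 1)."""
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    design = np.column_stack([np.ones(len(Z)), Z])
    beta, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return beta[1:]  # drop the intercept


rng = np.random.default_rng(2)
n = 1000
income_dollars = rng.normal(50_000, 10_000, n)  # hypothetical feature, large units
years_exp = rng.normal(10, 3, n)                # hypothetical feature, small units
y = 0.001 * income_dollars + 2.0 * years_exp + rng.normal(0, 1, n)
features = np.column_stack([income_dollars, years_exp])

# Raw coefficients depend on units: roughly 0.001 for income vs 2.0 for experience
raw_beta, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), features]), y, rcond=None)

# Standardized coefficients: roughly 10 for income vs 6 for experience
std_beta = standardized_coefficients(features, y)
```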

(7) What Do The Slope & Intercept Represent ?
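For a single predictor, the least-squares slope and intercept have a closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means. The hours/score data below is made up purely for illustration.

```python
import numpy as np


def simple_ols(x, y):
    """Closed-form least-squares fit of y = b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: covariance of x and y divided by the variance of x
    b1 = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # Intercept: the fitted line passes through (x_mean, y_mean)
    b0 = y_mean - b1 * x_mean
    return b0, b1


hours = np.array([1, 2, 3, 4, 5], dtype=float)       # hypothetical study hours
score = np.array([52, 55, 61, 64, 68], dtype=float)  # hypothetical exam scores
b0, b1 = simple_ols(hours, score)
```

Here the slope is 4.1, meaning each extra hour of study is associated with about 4.1 more points. The intercept is about 47.7, which is the predicted score at 0 hours. The intercept is only meaningful if x = 0 is a sensible value for the data.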

(8) Cost Function Of The Linear Regression Model.

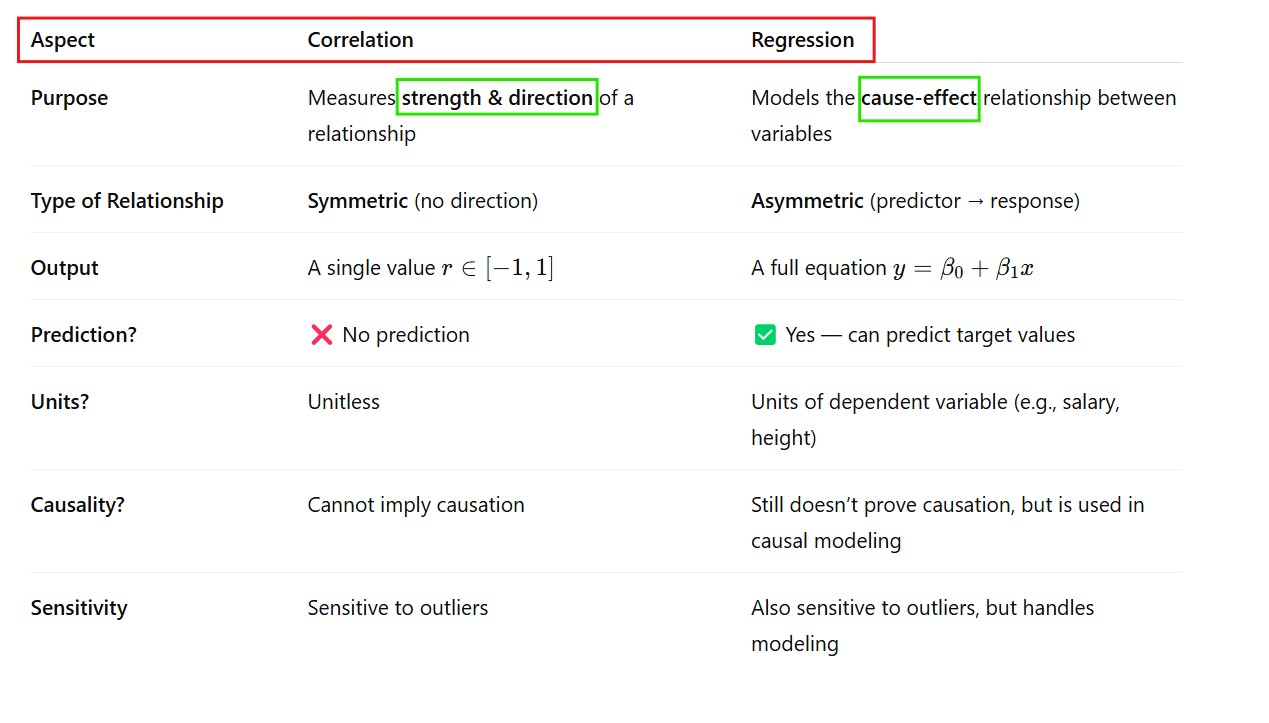
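A sketch of the mean-squared-error cost and its gradient in matrix form. It assumes the common $J(\beta) = \frac{1}{2n}\sum (X\beta - y)^2$ scaling; some texts use $\frac{1}{n}$ instead, which rescales the cost but does not change its minimizer. At the least-squares solution the gradient is (numerically) zero, and moving away from it increases the cost.

```python
import numpy as np


def mse_cost(beta, X, y):
    """J(beta) = (1 / (2n)) * sum((X @ beta - y) ** 2); the 1/2 only simplifies the gradient."""
    residuals = X @ beta - y
    return residuals @ residuals / (2 * len(y))


def mse_gradient(beta, X, y):
    """Gradient of J with respect to beta: (1 / n) * X^T (X beta - y)."""
    return X.T @ (X @ beta - y) / len(y)


rng = np.random.default_rng(3)
x = rng.uniform(0, 10, 100)
X = np.column_stack([np.ones_like(x), x])  # column of ones gives the intercept
y = 4.0 + 2.5 * x + rng.normal(0, 1, 100)  # synthetic data

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)  # minimizer of the cost
cost_at_min = mse_cost(beta_hat, X, y)
cost_nearby = mse_cost(beta_hat + 0.1, X, y)       # any step away costs more
```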

(9) Difference Between Correlation & Regression.

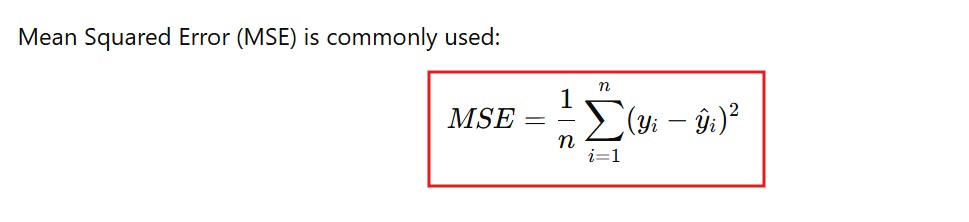

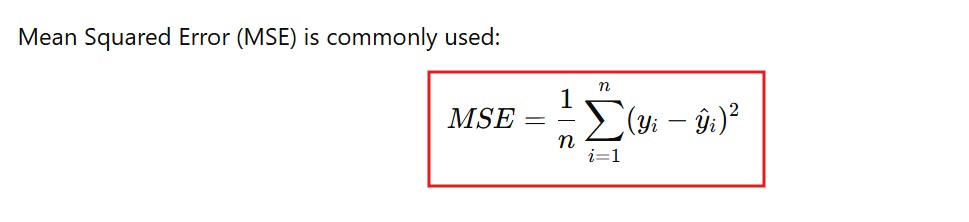
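The two ideas are linked but not interchangeable. Correlation is unitless and symmetric in x and y. The regression slope has units and depends on which variable is the response, and it equals r times the ratio of standard deviations. This sketch uses synthetic height/weight data; the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.normal(170, 10, 300)                # hypothetical heights (cm)
y = 0.9 * x - 90 + rng.normal(0, 8, 300)    # hypothetical weights (kg)

r = np.corrcoef(x, y)[0, 1]                 # correlation: same value for (x, y) or (y, x)
slope_y_on_x = np.polyfit(x, y, 1)[0]       # regress y on x (kg per cm)
slope_x_on_y = np.polyfit(y, x, 1)[0]       # regress x on y: a different line (cm per kg)

# Link between them: slope = r * std(response) / std(predictor)
implied_slope = r * y.std() / x.std()
```

The two slopes are not reciprocals of each other. Their product equals r², which is 1 only when the points lie exactly on a line.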

(10) What Is Mean Squared Error ?

- The average of the squared differences between the actual and predicted values.

- Because each error is squared, large errors dominate the MSE, so when minimizing it the optimizer works hardest to reduce the errors of the data points with the largest residuals.
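A small worked example with made-up numbers shows why the point with the largest error dominates. One prediction is off by 6 and the rest are off by 1, yet that single point contributes about 92% of the total squared error.

```python
import numpy as np

y_true = np.array([10.0, 12.0, 14.0, 16.0])
y_pred = np.array([11.0, 11.0, 15.0, 10.0])  # the last prediction is off by 6

errors = y_true - y_pred                      # [-1, 1, -1, 6]
mse = np.mean(errors ** 2)                    # (1 + 1 + 1 + 36) / 4 = 9.75
share_of_worst = errors[-1] ** 2 / np.sum(errors ** 2)  # 36 / 39, about 0.92
```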

(11) What Is R-Squared ?

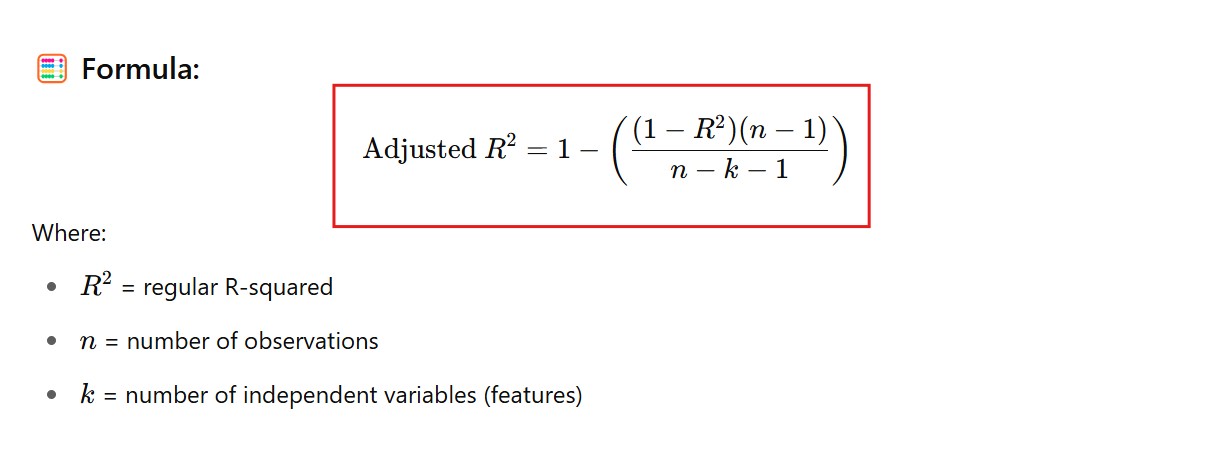

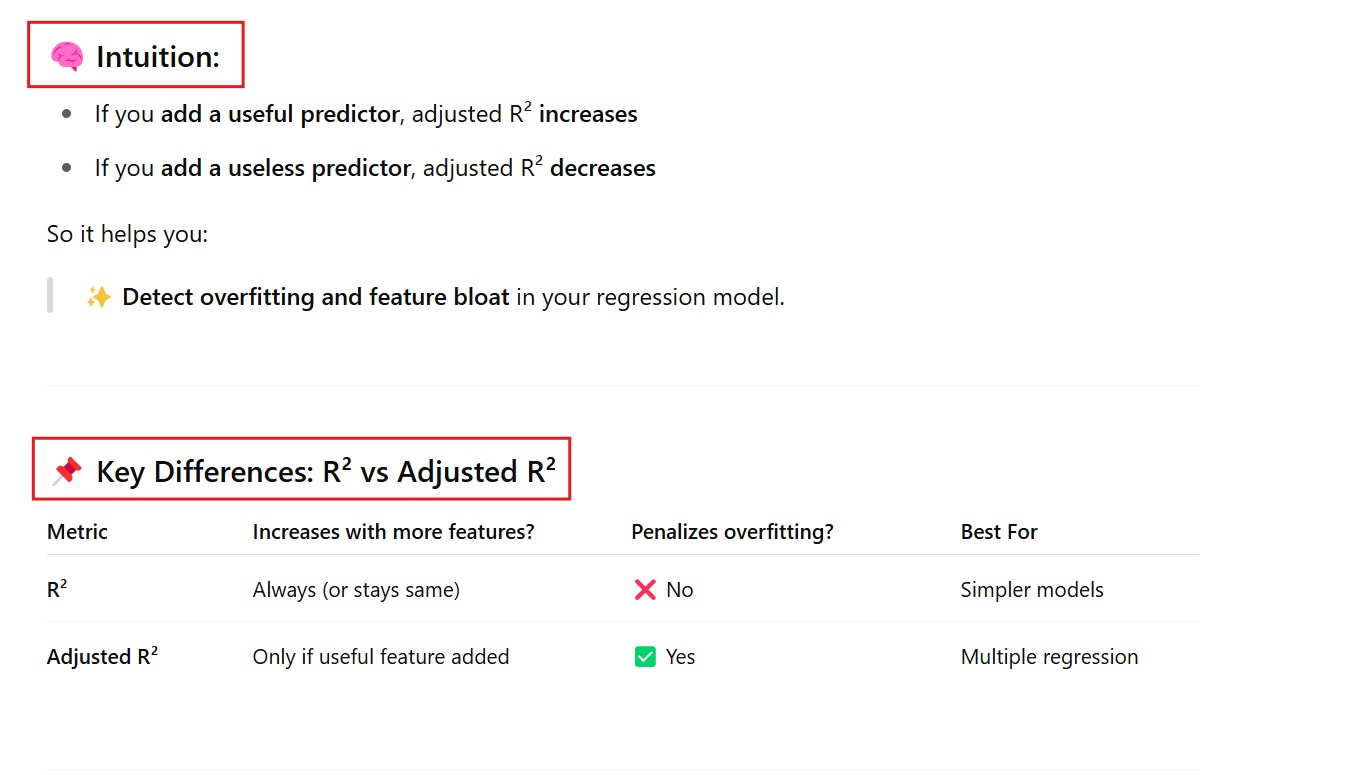

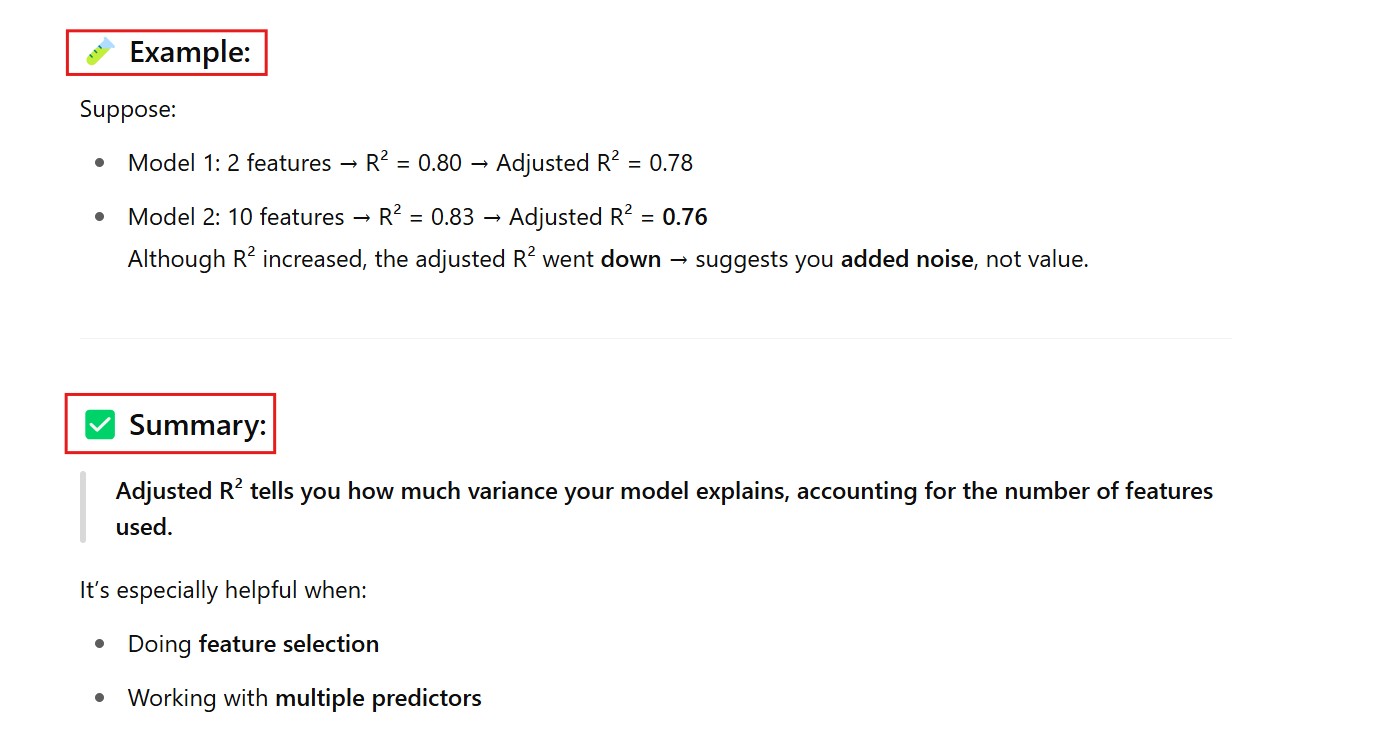
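R² compares the model's squared error with that of a baseline that always predicts the mean: $R^2 = 1 - SS_{res} / SS_{tot}$. This sketch uses made-up values. Predicting the mean gives exactly 0, and a model worse than the mean gives a negative value.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # variation the model leaves unexplained
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total variation around the mean
    return 1.0 - ss_res / ss_tot


y_true = [3.0, 5.0, 7.0, 9.0]
good = r_squared(y_true, [2.5, 5.5, 7.0, 9.0])  # 1 - 0.5 / 20 = 0.975
baseline = r_squared(y_true, [6.0] * 4)         # always predicting the mean: 0
bad = r_squared(y_true, [9.0, 7.0, 5.0, 3.0])   # 1 - 80 / 20 = -3
```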

(12) What Is Adjusted R-Squared ?

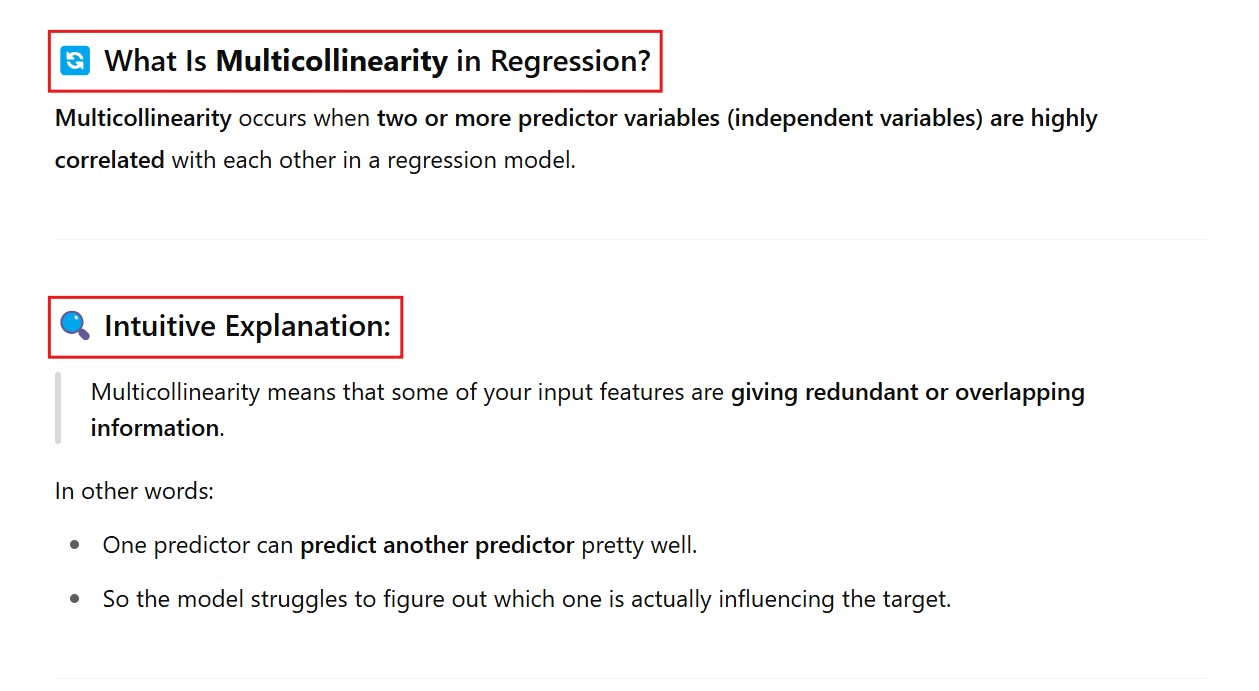

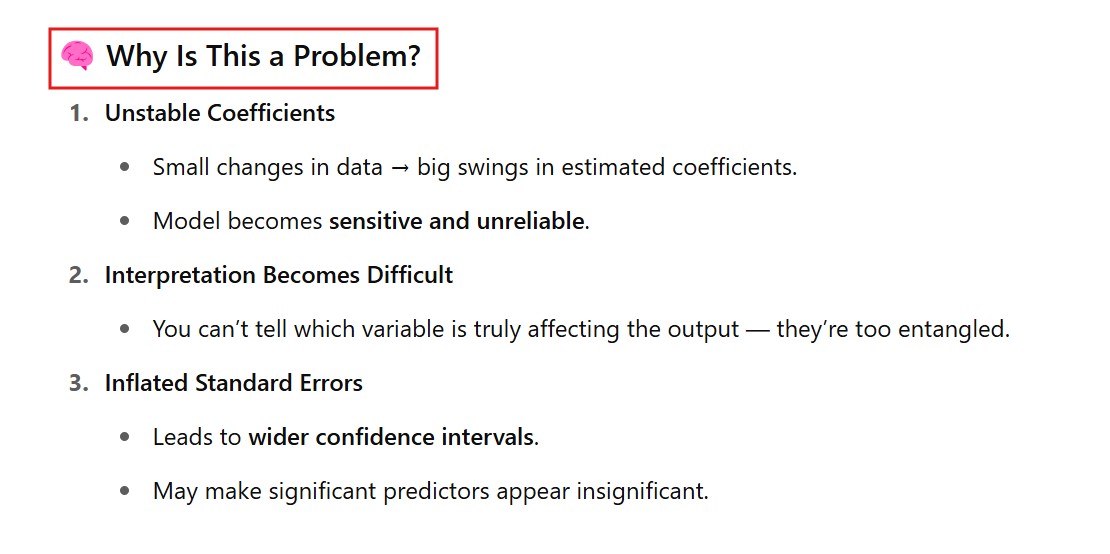

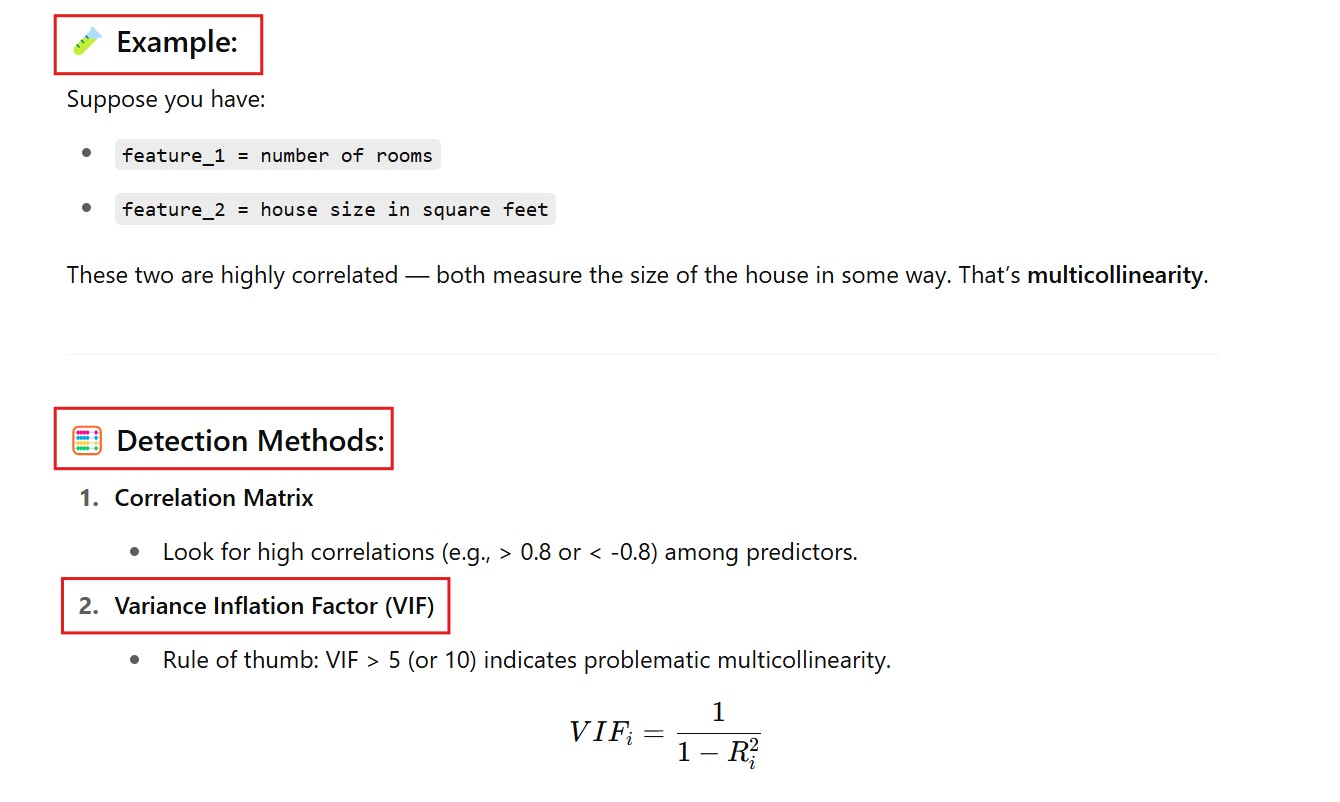

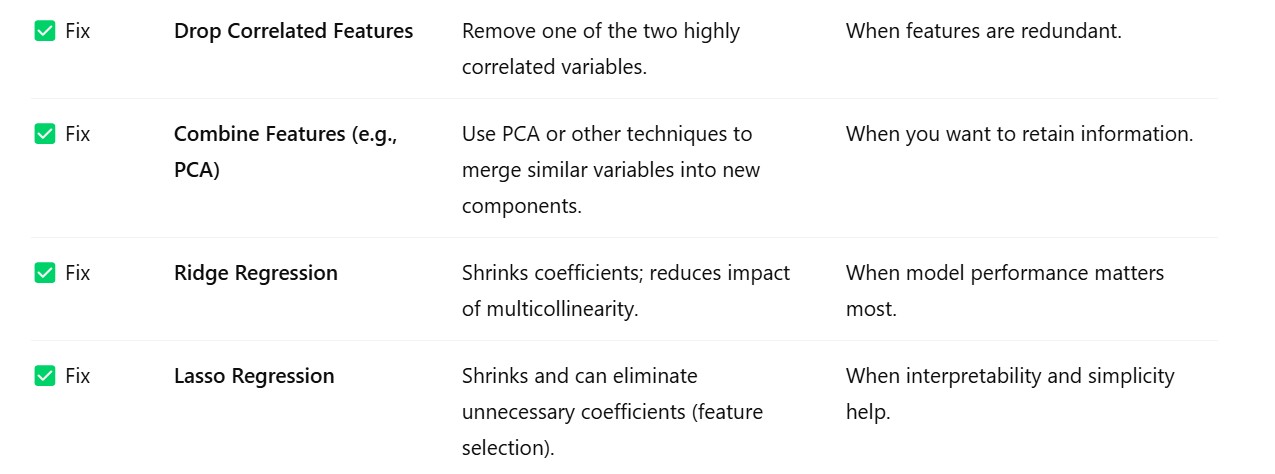
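A sketch of the usual adjusted R² formula, $1 - (1 - R^2)\frac{n - 1}{n - p - 1}$, with n samples and p predictors, on synthetic data. Adding ten pure-noise features can never lower plain R², but adjusted R² charges a penalty for every extra predictor, so it can fall. The helper names are hypothetical.

```python
import numpy as np


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1) for n samples and p predictors."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def ols_r_squared(X, y):
    """Plain R^2 of an OLS fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


rng = np.random.default_rng(5)
n = 50
signal = rng.normal(size=(n, 1))
y = 2.0 * signal[:, 0] + rng.normal(size=n)
junk = rng.normal(size=(n, 10))  # 10 features that are pure noise

r2_small = ols_r_squared(signal, y)
r2_big = ols_r_squared(np.hstack([signal, junk]), y)  # never lower than r2_small
adj_small = adjusted_r_squared(r2_small, n, p=1)
adj_big = adjusted_r_squared(r2_big, n, p=11)         # penalized for 10 extra predictors
```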

(13) What Is Multicollinearity ?

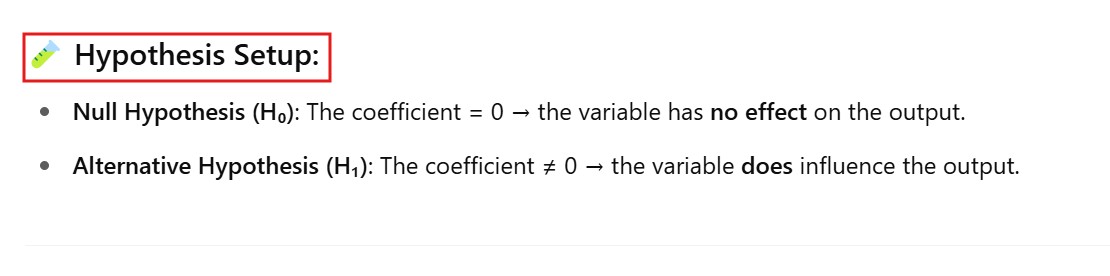

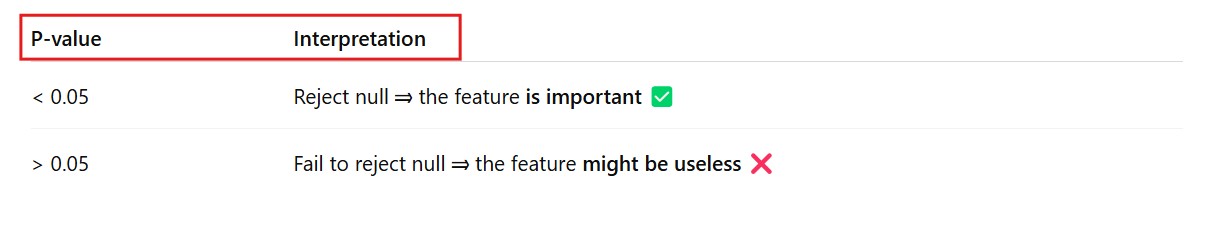
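One standard way to detect multicollinearity is the Variance Inflation Factor. To compute it, regress each feature on all the others and take $VIF_j = 1 / (1 - R_j^2)$. This NumPy sketch uses synthetic data in which `b` is almost a copy of `a`. A common rule of thumb treats VIF above roughly 5 or 10 as a warning sign.

```python
import numpy as np


def vif(X):
    """VIF for each column: 1 / (1 - R^2) from regressing that column on the other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ beta
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out


rng = np.random.default_rng(6)
a = rng.normal(size=500)
b = a + rng.normal(scale=0.1, size=500)  # nearly a copy of a: strongly collinear
c = rng.normal(size=500)                 # independent of a and b
v = vif(np.column_stack([a, b, c]))      # a and b around 100, c close to 1
```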

(14) What Is The Role Of The P-Value In Linear Regression ?

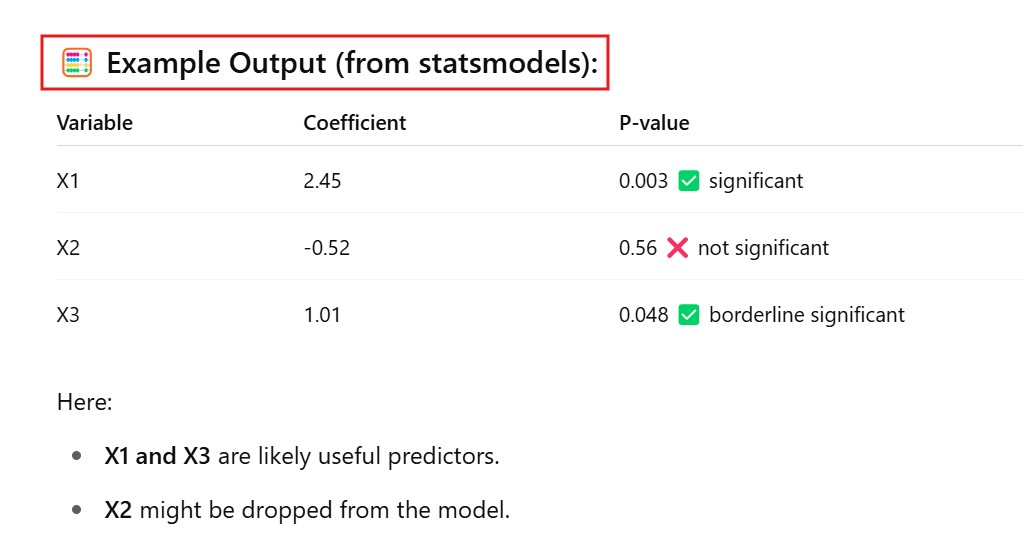
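Under the classical OLS assumptions, each coefficient's p-value comes from a t-test of $H_0: \beta_j = 0$. The steps are: compute the standard error, form $t = \hat{\beta}_j / SE_j$, and look up the two-sided tail probability in a t-distribution with $n - k$ degrees of freedom. This is a sketch on synthetic data using NumPy and `scipy.stats.t`; `ols_p_values` is a hypothetical helper, not a library function.

```python
import numpy as np
from scipy import stats


def ols_p_values(X, y):
    """Coefficients, standard errors, t-statistics and two-sided p-values for OLS with intercept."""
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    n, k = X.shape                            # k includes the intercept column
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)          # unbiased estimate of the error variance
    cov = sigma2 * np.linalg.inv(X.T @ X)     # covariance matrix of the estimates
    se = np.sqrt(np.diag(cov))
    t = beta / se
    p = 2 * stats.t.sf(np.abs(t), df=n - k)   # two-sided tail probability
    return beta, se, t, p


rng = np.random.default_rng(7)
n = 200
x_useful = rng.normal(size=n)
x_noise = rng.normal(size=n)
y = 1.0 + 0.5 * x_useful + rng.normal(size=n)  # x_noise has no real effect
beta, se, t, p = ols_p_values(np.column_stack([x_useful, x_noise]), y)
```

The p-value for `x_useful` comes out tiny. The one for `x_noise` is simply a draw from a roughly uniform distribution, which is why a small p-value on a single irrelevant feature can still happen by chance.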

(15) What Is The Difference Between MSE, MAE, and RMSE ?
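The three metrics on the same small set of made-up predictions: MAE weights every error linearly, MSE squares errors (and units), and RMSE takes the square root to return to the units of y. RMSE is always at least as large as MAE, and the gap grows when a few errors are much larger than the rest.

```python
import numpy as np

y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])
errors = y_true - y_pred         # [0.5, -0.5, 0.0, -1.0]

mae = np.mean(np.abs(errors))    # (0.5 + 0.5 + 0 + 1) / 4 = 0.5, units of y
mse = np.mean(errors ** 2)       # (0.25 + 0.25 + 0 + 1) / 4 = 0.375, squared units
rmse = np.sqrt(mse)              # about 0.612, back in the units of y
```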

(16) What Happens If The Linear Regression Assumptions Are Violated?

(17) What Is The Linearity Assumption ?

(18) What Is The Homoscedasticity Assumption ?

(19) What Is The Error Normality Assumption ?

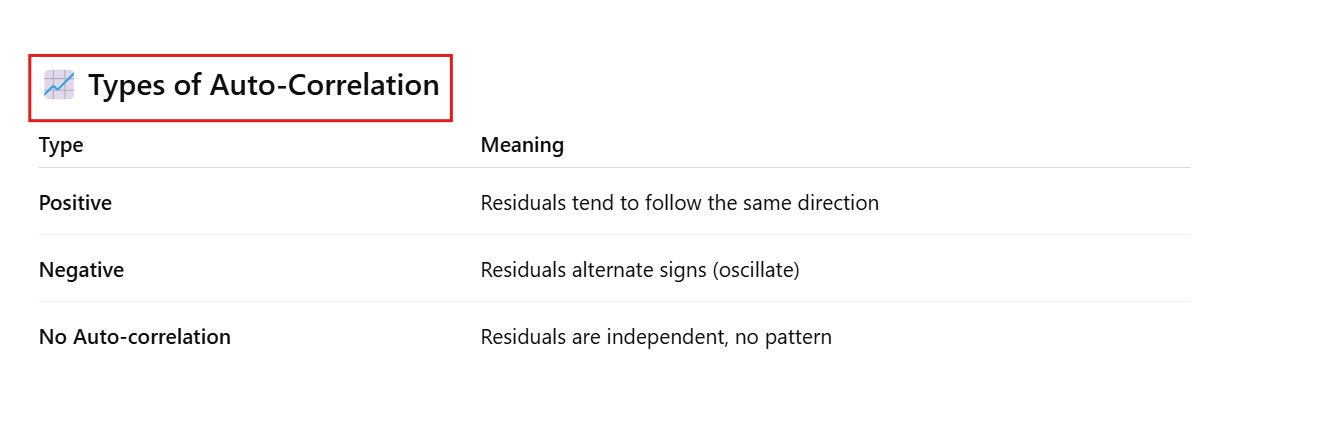

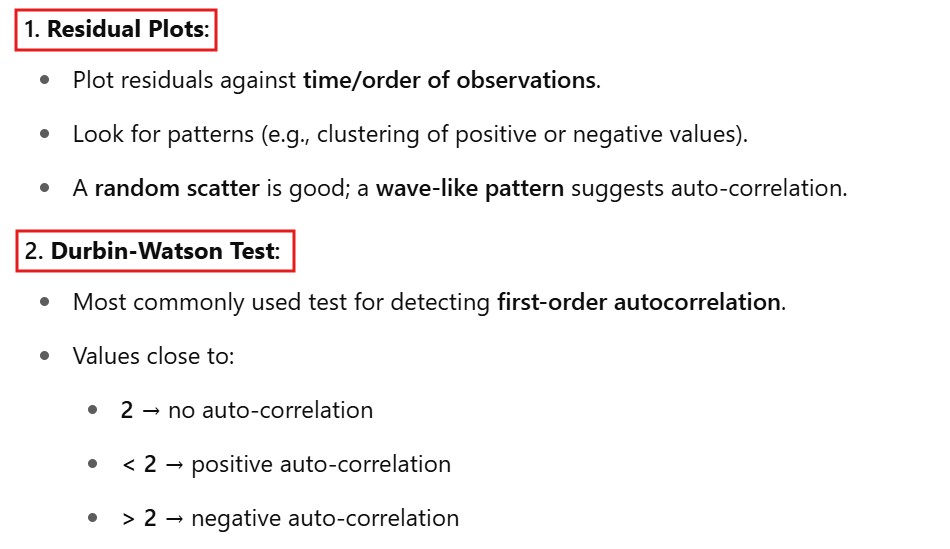

(20) What Is The No Autocorrelation Assumption ?

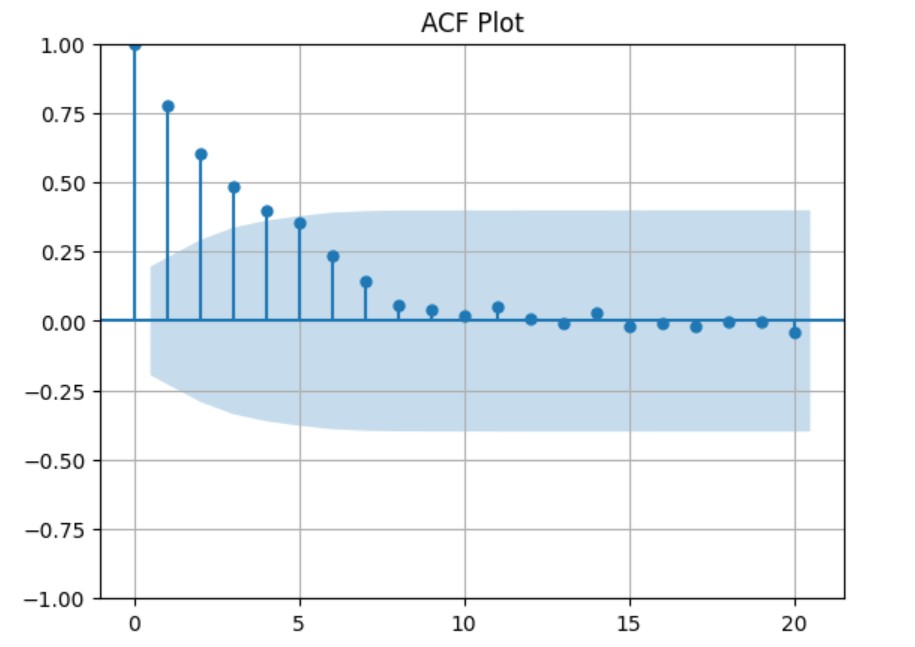

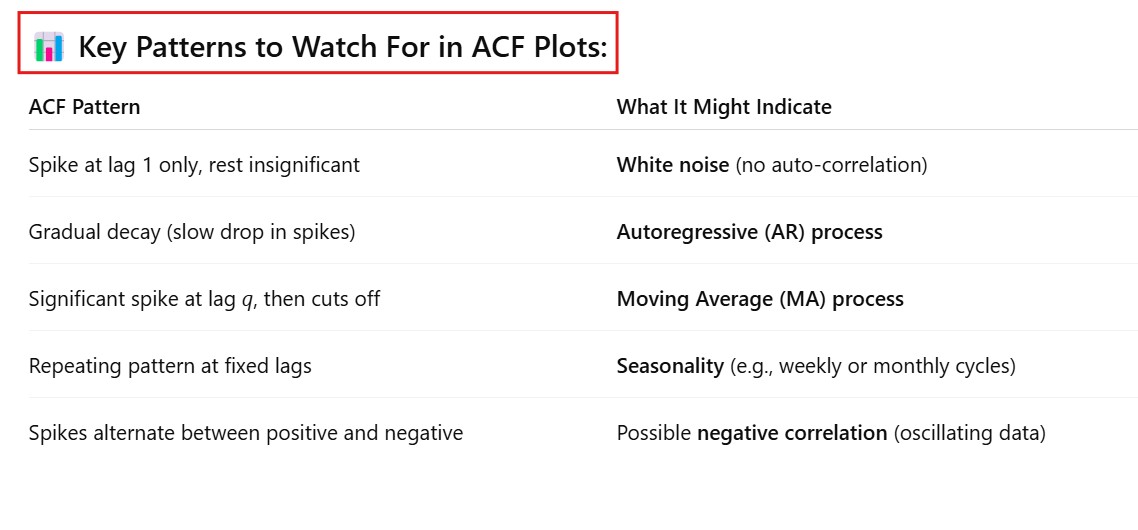

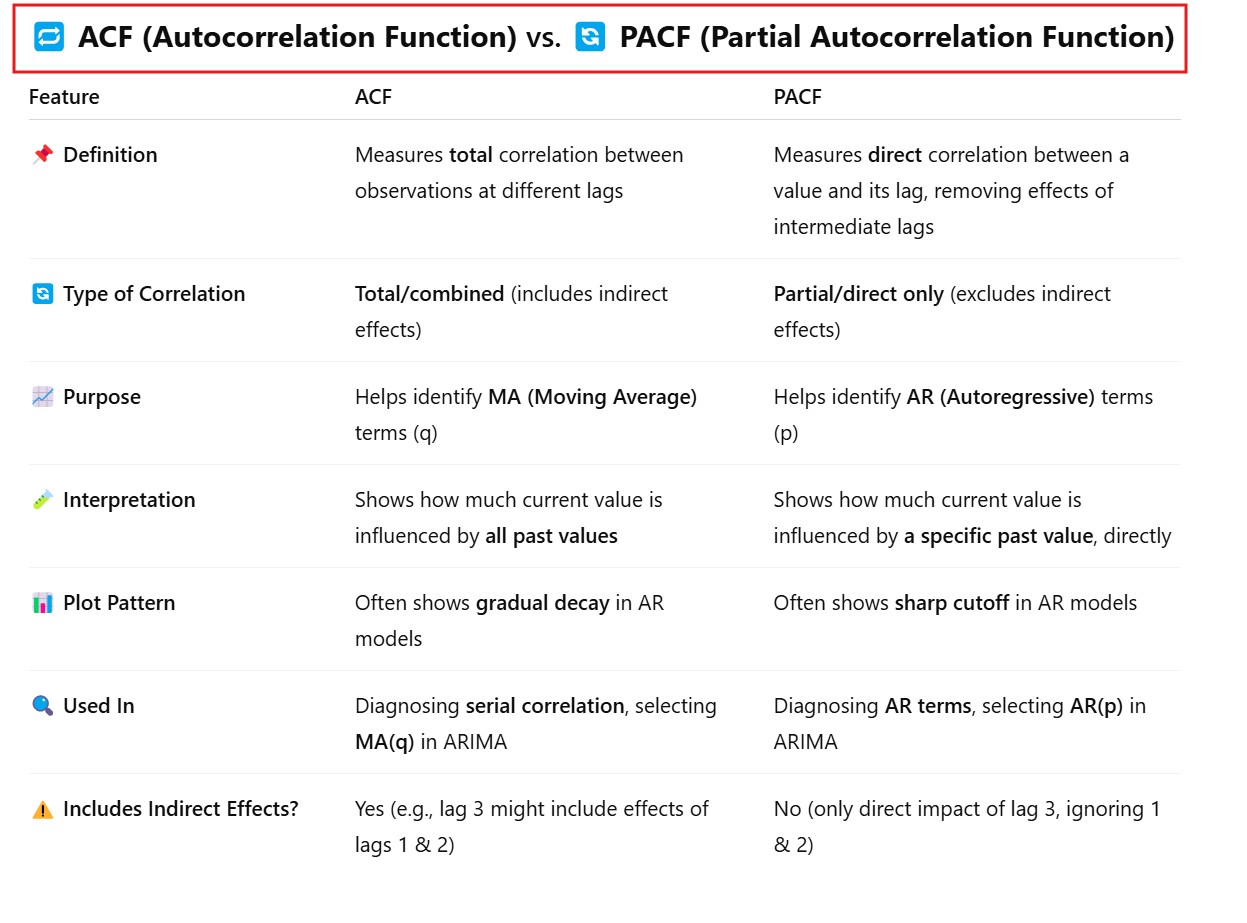
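One common numeric check for lag-1 autocorrelation in residuals is the Durbin-Watson statistic, $DW = \sum_t (e_t - e_{t-1})^2 / \sum_t e_t^2$. It is not necessarily the method shown in the figures above. Values near 2 suggest no first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation. This sketch compares independent errors with synthetic AR(1) errors; statsmodels also ships a ready-made `statsmodels.stats.stattools.durbin_watson`.

```python
import numpy as np


def durbin_watson(residuals):
    """DW = sum((e_t - e_{t-1})^2) / sum(e_t^2)."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)


rng = np.random.default_rng(8)
n = 2000
independent = rng.normal(size=n)  # residuals with no autocorrelation

# Synthetic AR(1) residuals: each error carries over 80% of the previous one
correlated = np.empty(n)
correlated[0] = rng.normal()
for t in range(1, n):
    correlated[t] = 0.8 * correlated[t - 1] + rng.normal()

dw_independent = durbin_watson(independent)  # close to 2
dw_correlated = durbin_watson(correlated)    # close to 2 * (1 - 0.8) = 0.4
```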

(21) What Is An ACF Plot ?

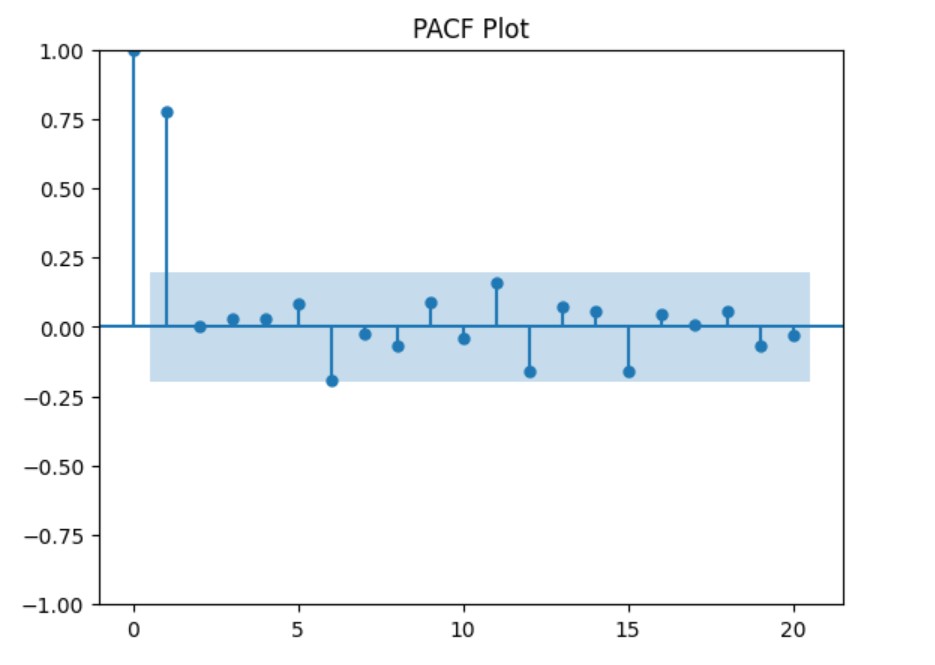

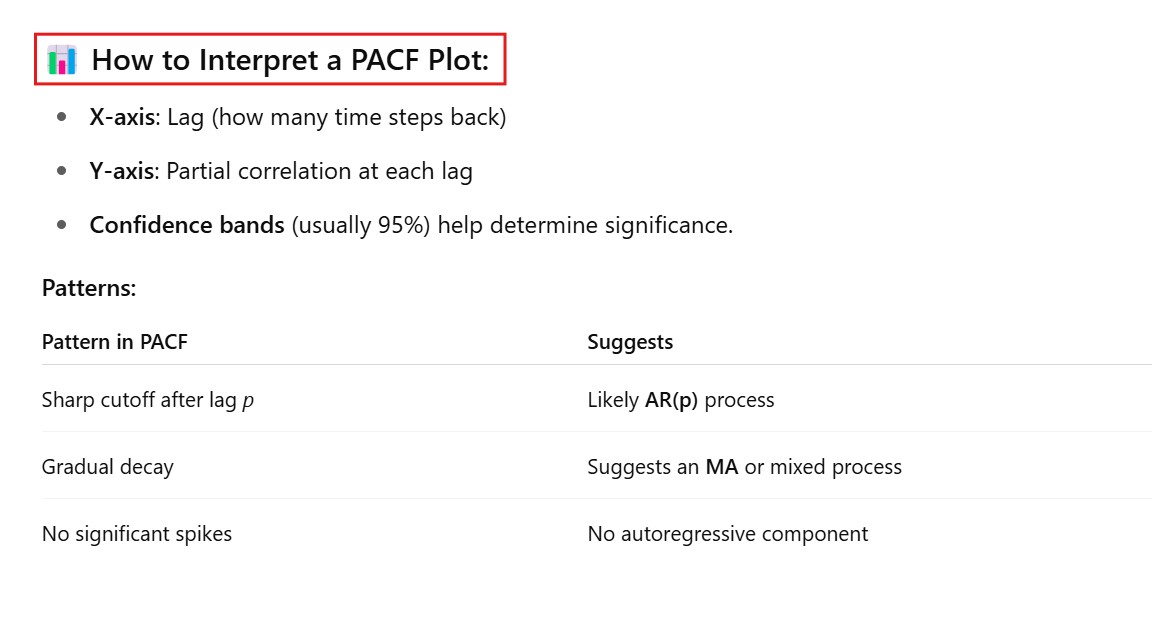
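An ACF plot shows the sample autocorrelation $r_k$ at each lag k, usually with approximate significance bands at $\pm 1.96 / \sqrt{n}$. This NumPy sketch computes the values that such a plot would display, using a synthetic AR(1) series for which $r_k$ should decay roughly like $0.7^k$. For actual plotting, statsmodels provides `statsmodels.graphics.tsaplots.plot_acf`.

```python
import numpy as np


def acf(x, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag, normalized by the lag-0 sum of squares."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = d @ d
    return np.array([d[: len(d) - k] @ d[k:] / denom for k in range(max_lag + 1)])


rng = np.random.default_rng(9)
n = 5000
series = np.empty(n)
series[0] = rng.normal()
for t in range(1, n):
    series[t] = 0.7 * series[t - 1] + rng.normal()  # synthetic AR(1), phi = 0.7

r = acf(series, 5)         # r[0] is always 1; then about 0.7, 0.49, ...
band = 1.96 / np.sqrt(n)   # bars outside +/- band are usually flagged as significant
```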

(21) What Is A PACF Plot ?

(22) Difference Between ACF & PACF.

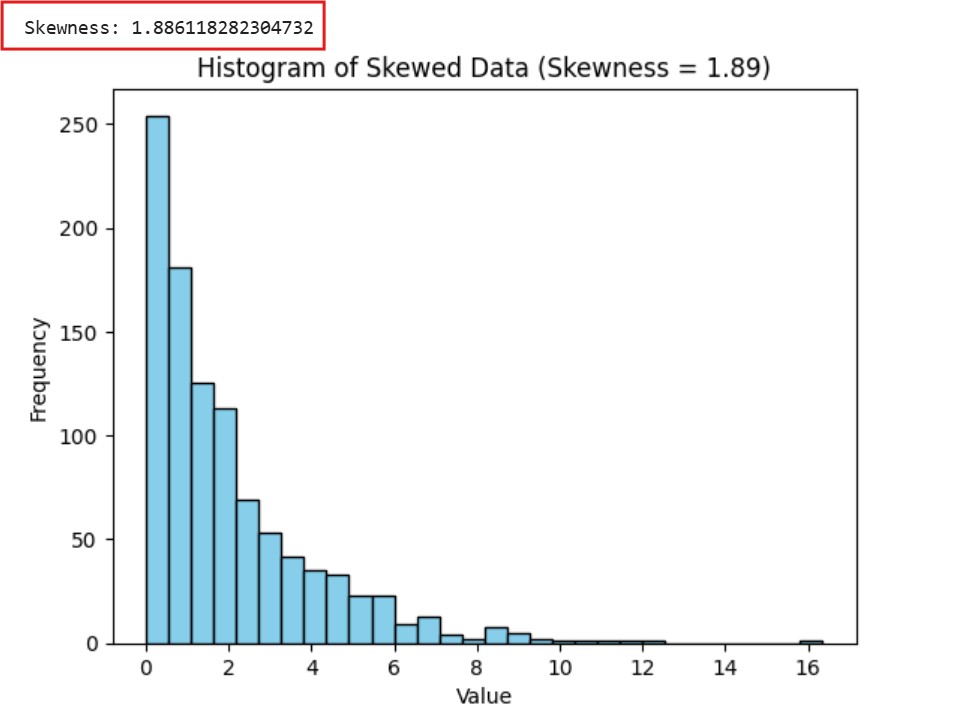

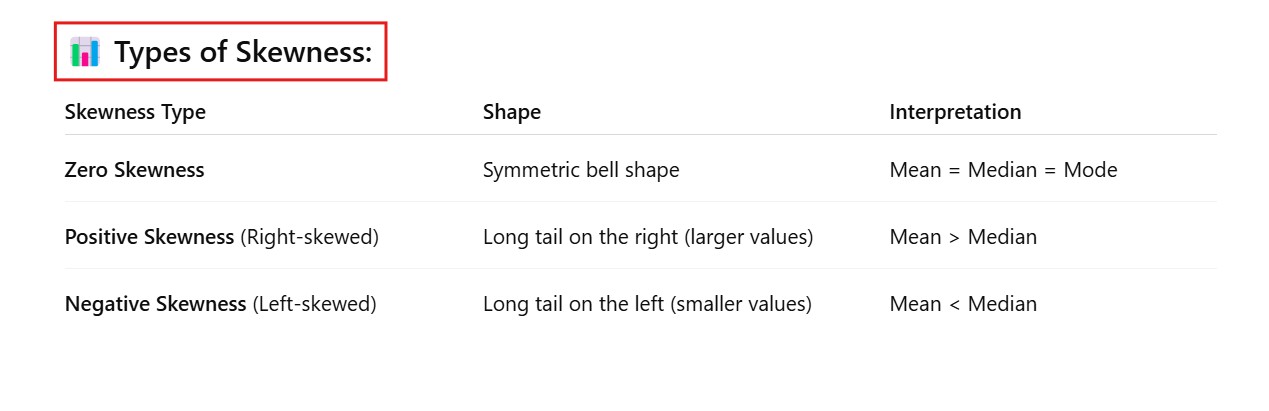

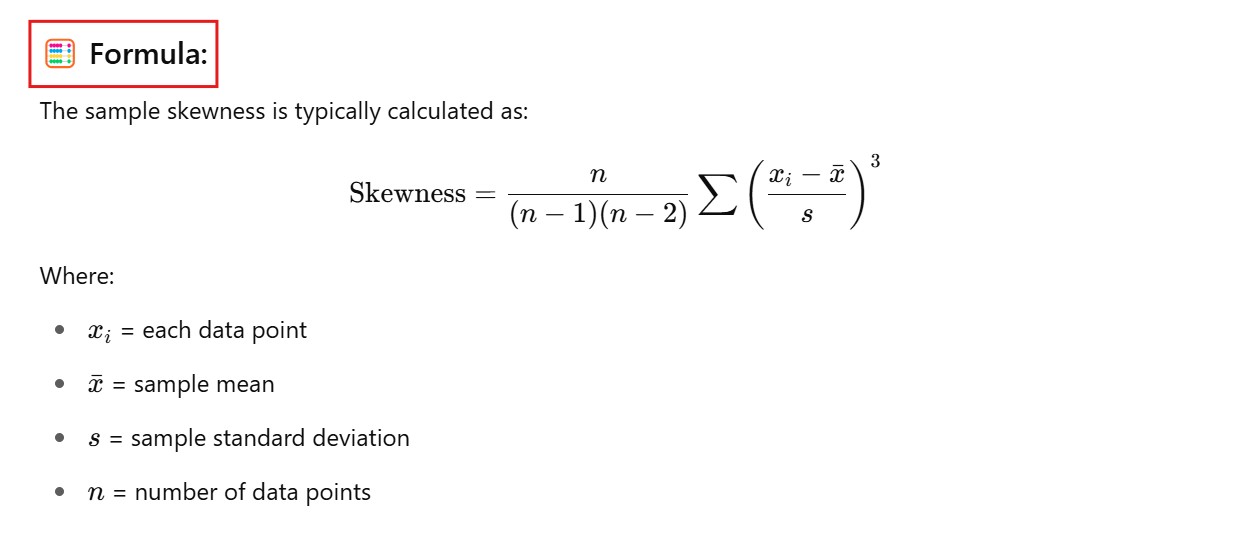

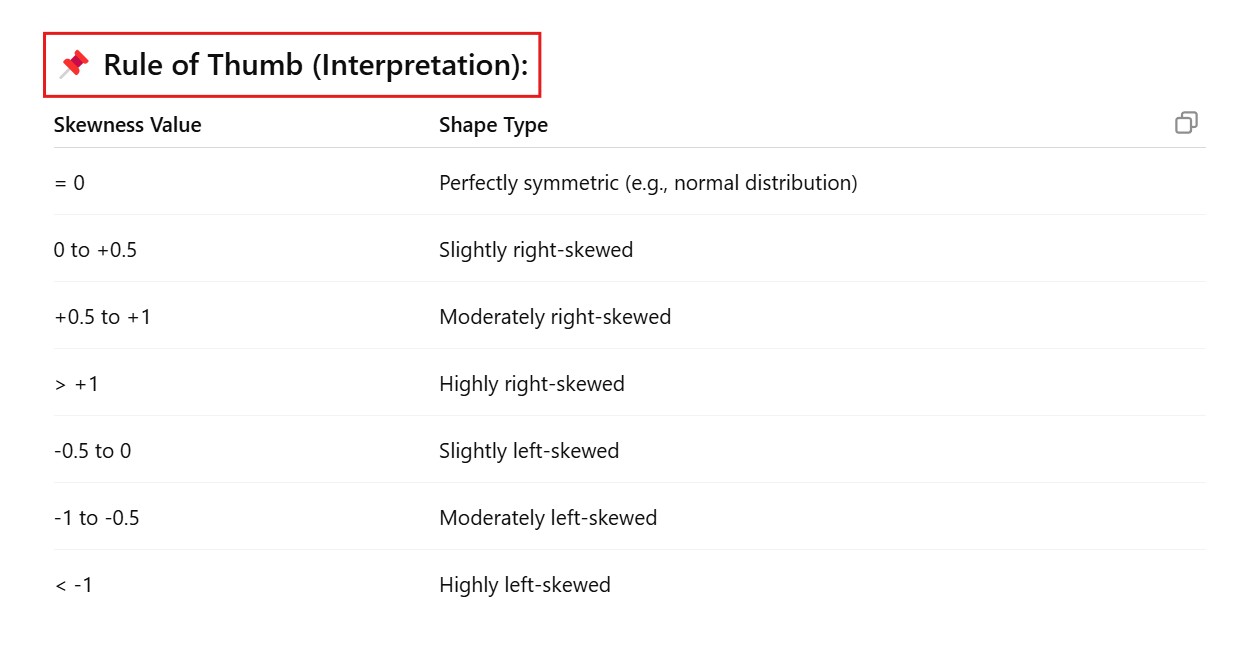
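One way to estimate the partial autocorrelation at lag k is to regress $x_t$ on $x_{t-1}, \dots, x_{t-k}$ and keep the coefficient on $x_{t-k}$. That coefficient is the lag-k correlation after the shorter lags have been accounted for. Other estimators, such as Yule-Walker, exist. This sketch applies the regression approach to a synthetic AR(1) series to show the classic contrast: the ACF decays gradually, while the PACF cuts off after lag 1. statsmodels offers `statsmodels.tsa.stattools.pacf` and `statsmodels.graphics.tsaplots.plot_pacf` for real use.

```python
import numpy as np


def pacf_ols(x, max_lag):
    """PACF at lag k = coefficient on x_{t-k} when regressing x_t on lags 1..k plus an intercept."""
    x = np.asarray(x, dtype=float)
    out = [1.0]
    for k in range(1, max_lag + 1):
        y = x[k:]  # x_t for t = k .. n-1
        lags = np.column_stack([x[k - j : len(x) - j] for j in range(1, k + 1)])  # x_{t-j}
        design = np.column_stack([np.ones(len(y)), lags])
        beta, *_ = np.linalg.lstsq(design, y, rcond=None)
        out.append(beta[-1])  # coefficient on the longest lag, x_{t-k}
    return np.array(out)


rng = np.random.default_rng(10)
n = 5000
series = np.empty(n)
series[0] = rng.normal()
for t in range(1, n):
    series[t] = 0.7 * series[t - 1] + rng.normal()  # synthetic AR(1), phi = 0.7

pacf_vals = pacf_ols(series, 5)                        # about 0.7 at lag 1, near 0 afterwards
acf_lag2 = np.corrcoef(series[:-2], series[2:])[0, 1]  # ACF at lag 2 is still about 0.49
```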

(23) What Is Skewness ?