Self-Attention: Geometric Intuition

Table Of Contents:

- What Is Self-Attention?

- Why Do We Need Self-Attention?

- How Does Self-Attention Work?

- Example Of Self-Attention

- Where Is Self-Attention Used?

- Geometric Intuition Of Self-Attention

(1) What Is Self-Attention?

- Self-attention is a mechanism in deep learning that allows a model to focus on different parts of an input sequence when computing word representations.

- It helps the model capture relationships between words, even when they are far apart in the sentence, by giving every word an attention weight that reflects how relevant it is to the word currently being represented.
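A minimal sketch in Python of how the attention weights for one word could be computed, assuming plain dot-product similarity followed by a softmax (no learned weight matrices, no positional information). The embeddings and the helper name `attention_weights` are made up for illustration. Because the score only compares two embeddings, moving ‘money’ further away from ‘bank’ does not change the weight it receives; real Transformer models also add positional information, so this holds only for the bare mechanism.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query_word, sentence, emb):
    """Weight that query_word gives to each word of sentence (assumes each word appears once).

    The score is a plain dot product of embeddings, so it depends only on
    which words they are, not on where they sit in the sentence.
    """
    q = emb[query_word]
    scores = [sum(a * b for a, b in zip(q, emb[w])) for w in sentence]
    return dict(zip(sentence, softmax(scores)))

# Made-up 2-D embeddings, for illustration only.
emb = {"money": [1.0, 0.2], "the": [0.1, 0.1], "bank": [0.8, 0.6]}

near = attention_weights("bank", ["the", "money", "bank"], emb)  # 'money' right next to 'bank'
far = attention_weights("bank", ["money", "the", "bank"], emb)   # 'money' two words away
print(near)
print(far)
```

Both calls give ‘money’ the same weight, and ‘bank’ attends most to itself, then to ‘money’, and least to ‘the’.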

(2) Why Do We Need Self-Attention?

(3) How Does Self-Attention Work?

- Given an input sentence, self-attention follows these steps:
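A common way to carry out these steps is the scaled dot-product attention used in Transformers, computed as softmax(Q·Kᵀ / sqrt(d_k))·V. The plain-Python sketch below assumes that formulation: project each word embedding into a query, a key and a value; score every pair of words; softmax the scores; and take the weighted sum of the values. The function names, the toy embeddings and the identity projection matrices are illustrative assumptions; in a trained model the projection matrices are learned.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    mx = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - mx) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over the rows of x (one row per word)."""
    q = matmul(x, w_q)  # step 1: project each embedding to a query...
    k = matmul(x, w_k)  # ...a key...
    v = matmul(x, w_v)  # ...and a value
    d_k = len(k[0])
    # step 2: score every word against every word, scaled by sqrt(d_k)
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # step 3: softmax each row so one word's weights over the sentence sum to 1
    weights = [softmax(row) for row in scores]
    # step 4: each output row is the weighted sum of the value vectors
    return matmul(weights, v), weights

# Toy input: two words ("money", "bank") with made-up 2-D embeddings.
x = [[1.0, 0.2],
     [0.8, 0.6]]
# Identity projections keep the numbers easy to follow; real models learn these.
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
print(out)
print(weights)
```

Each row of `out` is the new, context-aware representation of the corresponding word.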

(4) Example Of Self-Attention

(5) Where Is Self-Attention Used?

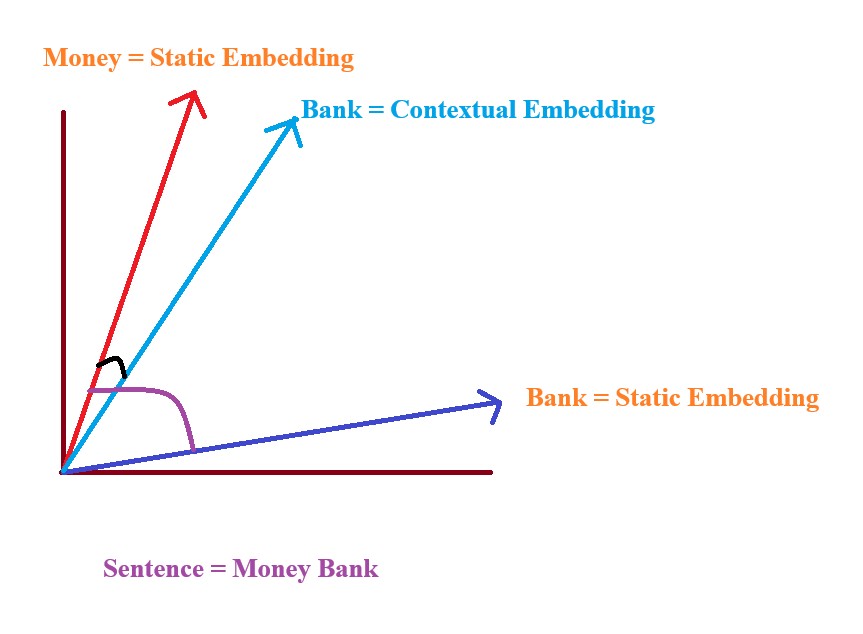

(6) Geometric Intuition Of Self-Attention

- Input sentence: “Money Bank”

- First, we plot the static word embeddings of the words ‘Money’ and ‘Bank’.

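- The two vectors are quite far apart, because a static embedding gives ‘Bank’ the same vector in every sentence, whether it refers to a financial institution or a river bank.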

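- Once we compute the contextual embedding of ‘Bank’ with self-attention, it lies closer to ‘Money’. In the simplest form of self-attention (no learned weight matrices), the contextual embedding of ‘Bank’ is a weighted average of the embeddings of ‘Money’ and ‘Bank’, so it is pulled toward ‘Money’, the word that supplies its context in this sentence.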
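A minimal sketch of this picture in Python, assuming the simplest form of self-attention with no learned query/key/value matrices: each word's contextual embedding is the softmax-weighted average of all embeddings in the sentence. The 2-D vectors for ‘Money’ and ‘Bank’ and the helper name `contextual_embedding` are made up for illustration. Because both softmax weights are positive, the contextual ‘Bank’ lands on the line segment between the two static vectors, so it is always strictly closer to ‘Money’ than the static ‘Bank’ was.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def contextual_embedding(target, sentence):
    """Simplest self-attention (no learned Q/K/V matrices): a weighted average of
    the sentence's embeddings, weighted by softmax of dot-product similarity."""
    weights = softmax([dot(target, e) for e in sentence])
    dim = len(target)
    vec = [sum(w * e[i] for w, e in zip(weights, sentence)) for i in range(dim)]
    return vec, weights

# Made-up 2-D static embeddings for the sentence "Money Bank".
money = [1.0, 0.2]
bank = [0.2, 1.0]

bank_ctx, weights = contextual_embedding(bank, [money, bank])
print("weights (money, bank):", [round(w, 3) for w in weights])
print("static bank     -> money distance:", round(math.dist(bank, money), 3))
print("contextual bank -> money distance:", round(math.dist(bank_ctx, money), 3))
```

With learned projections the average is taken over value vectors instead, so the same "pulled toward its context" picture applies in the projected space.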