What Is Batch Normalization?

Table Of Contents:

- What Is Batch Normalization?

- Why Is Batch Normalization Needed?

- Why Is Batch Normalization Needed?

- Example Of Batch Normalization.

- Why is Internal Covariate Shift (ICS) a Problem If Different Distributions Are Natural?

(1) What Is Batch Normalization?

- Batch Normalization is a technique used in Deep Learning to speed up training and improve stability. It normalizes the inputs to each layer using the mean and variance computed over the current mini-batch.

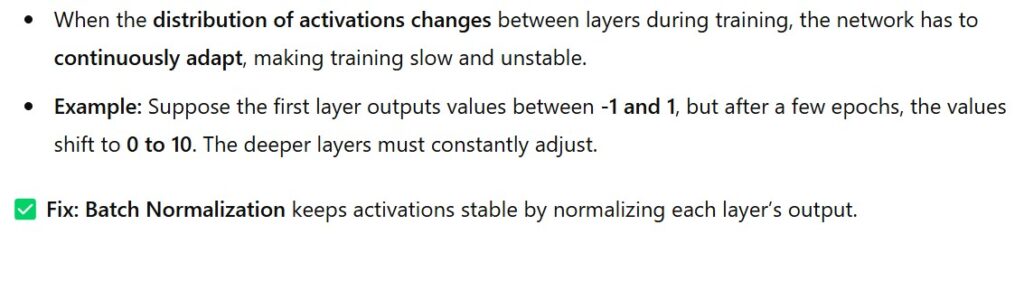

- Batch Normalization keeps activations stable by normalizing each layer’s output.

- Without Batch Normalization, the distribution of each layer's inputs can keep shifting during training. This can lead to unstable training, slow convergence, overfitting, or underfitting.
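The whole idea fits in a few lines of NumPy. This is a minimal sketch, not a reference implementation, and it rests on several assumptions:

- The layer's activations arrive as a 2-D array of shape (batch_size, num_features).
- Only training-time behavior is shown, meaning mini-batch statistics are used.
- The function name `batch_norm_forward`, the sample data and `eps=1e-5` are illustrative choices.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Batch-normalize a mini-batch x of shape (batch_size, num_features).

    Statistics are computed per feature, across the batch axis (axis 0).
    Hypothetical helper for illustration (training-time behavior only).
    """
    mean = x.mean(axis=0)                    # per-feature mean over the batch
    var = x.var(axis=0)                      # per-feature (biased) variance
    x_hat = (x - mean) / np.sqrt(var + eps)  # zero mean, ~unit variance
    return gamma * x_hat + beta              # learnable scale and shift

# Made-up activations: 4 samples, 2 features on very different scales
x = np.array([[1.0, 100.0],
              [2.0, 300.0],
              [3.0, 500.0],
              [4.0, 700.0]])
gamma = np.ones(2)   # typical initialization: scale of 1
beta = np.zeros(2)   # typical initialization: shift of 0

y = batch_norm_forward(x, gamma, beta)
print(y.mean(axis=0))  # close to 0 for both features
print(y.std(axis=0))   # close to 1 for both features
```

After normalization, both features have a mean close to 0 and a standard deviation close to 1. This holds even though the raw second feature is hundreds of times larger than the first.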

Special Note:

- Suppose the inputs to each layer change significantly from batch to batch. For example, in batch 1 the inputs range from 0 to 10, and in batch 2 the range suddenly grows to 0 to 100. The deeper layers then have to keep readapting their weights and biases.

- This slows down learning and makes the weights unstable.

- Batch Normalization was introduced to solve this issue.

- In this process, the inputs to each layer in the deep neural network are brought onto a common scale. Before the learnable scale and shift are applied, each feature is standardized to zero mean and (approximately) unit variance over the mini-batch. Its values end up centered around 0 with a typical spread of about 1; they are not clipped to a fixed range such as 0 to 1.

- This helps the neural network focus on the patterns in the data rather than on the scale of the data.
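A small NumPy sketch can illustrate the batch-to-batch range change described above. The two batches are made-up inputs to a single neuron, chosen to match the 0 to 10 and 0 to 100 ranges from the example. `standardize` is a hypothetical helper, not a library function.

```python
import numpy as np

def standardize(batch, eps=1e-5):
    """Hypothetical helper: shift a 1-D batch to zero mean and unit variance."""
    return (batch - batch.mean()) / np.sqrt(batch.var() + eps)

# Made-up inputs to one neuron in two consecutive batches
batch_1 = np.array([0.0, 2.5, 5.0, 7.5, 10.0])      # range 0 to 10
batch_2 = np.array([0.0, 25.0, 50.0, 75.0, 100.0])  # range 0 to 100

norm_1 = standardize(batch_1)
norm_2 = standardize(batch_2)
print(np.round(norm_1, 3))
print(np.round(norm_2, 3))
```

Both normalized batches come out as roughly [-1.414, -0.707, 0, 0.707, 1.414]. The next layer therefore sees the same scale even though the raw ranges differ by a factor of 10.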

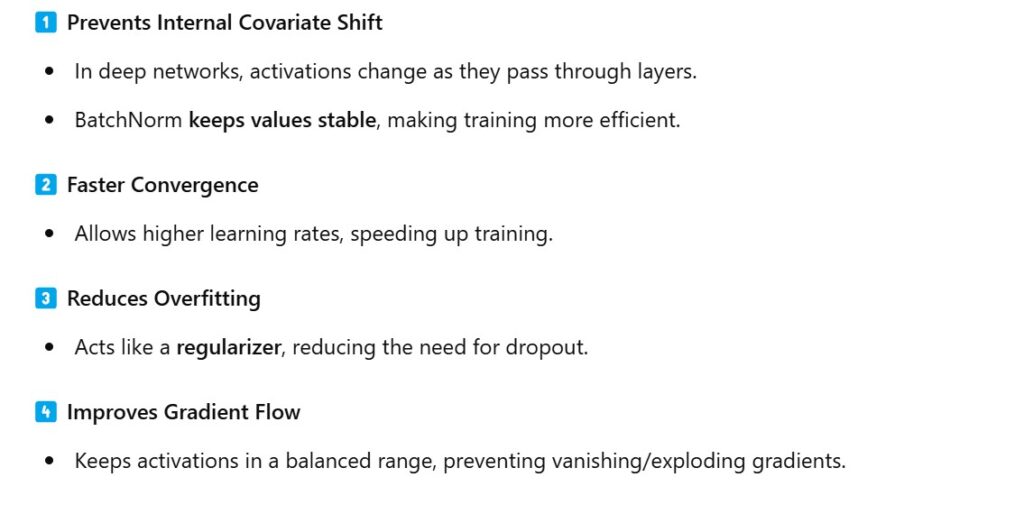

(2) Why Is Batch Normalization Needed?

(3) Why Is Batch Normalization Needed?

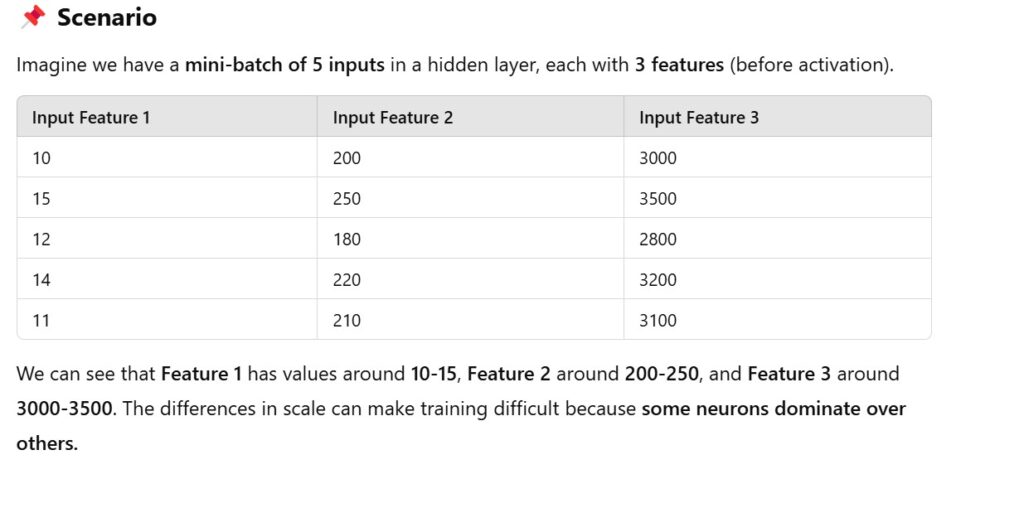

(4) Example Of Batch Normalization.

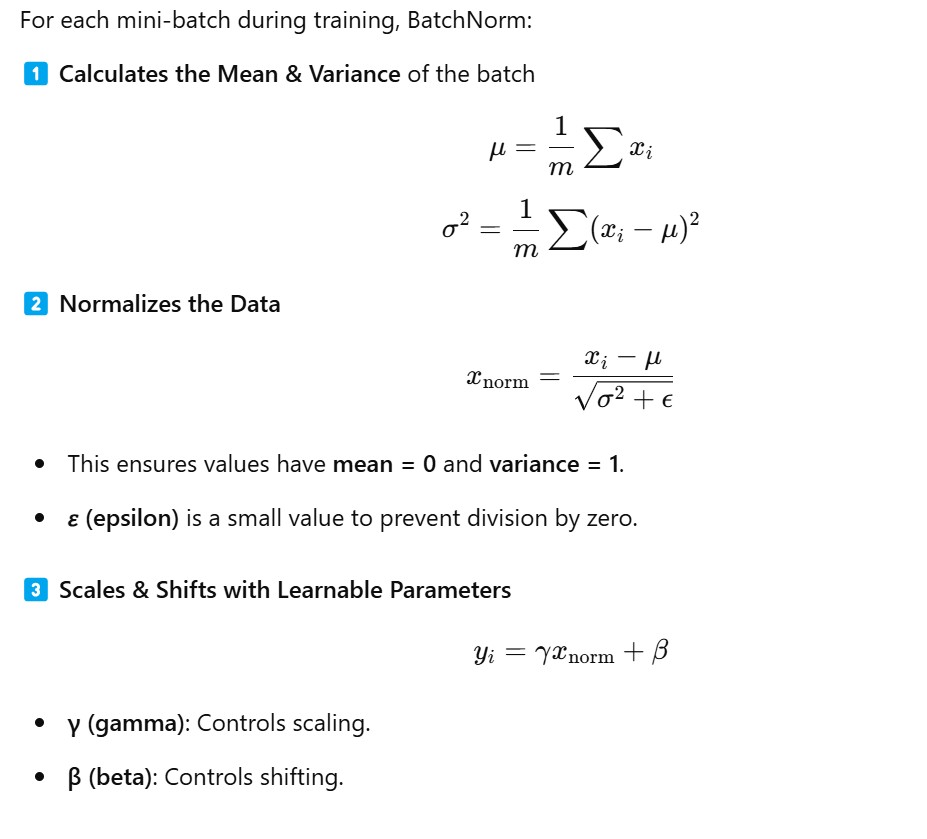

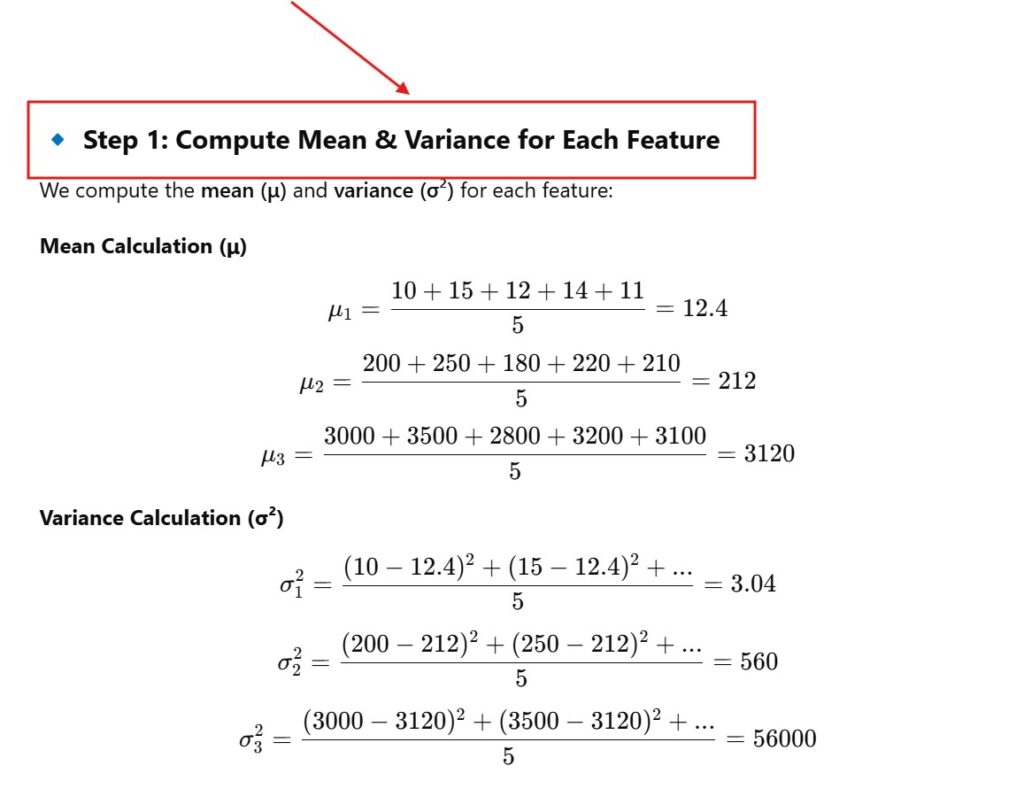

Step – 1: Compute The Mean & Variance Of Each Feature

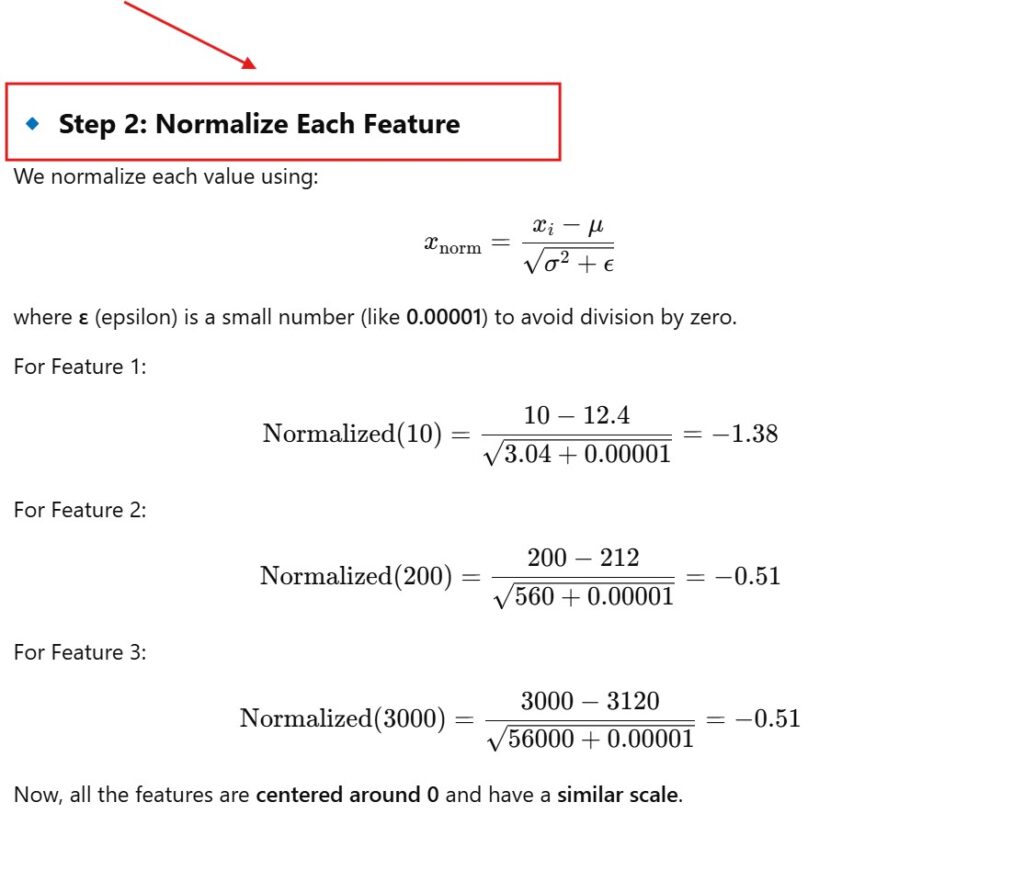
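Step 1 computes the mean and variance of every feature across the samples in the mini-batch:

- The mean is the average of the m values.
- The variance is the average squared deviation from that mean, dividing by m rather than m - 1.

Here is a minimal NumPy sketch, assuming a made-up mini-batch of 3 samples and 2 features. This is not the data shown in the figures.

```python
import numpy as np

# Made-up mini-batch: 3 samples (rows) x 2 features (columns)
x = np.array([[2.0, 10.0],
              [4.0, 20.0],
              [6.0, 30.0]])

mu = x.mean(axis=0)   # mean of each feature (column) over the batch
var = x.var(axis=0)   # variance of each feature: mean of (x - mu)**2, divided by m
print(mu)   # means: 4 and 20
print(var)  # variances: 8/3 and 200/3
```

NumPy's `var` divides by m by default (`ddof=0`), which matches the batch variance used here.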

Step – 2: Normalize Each Feature

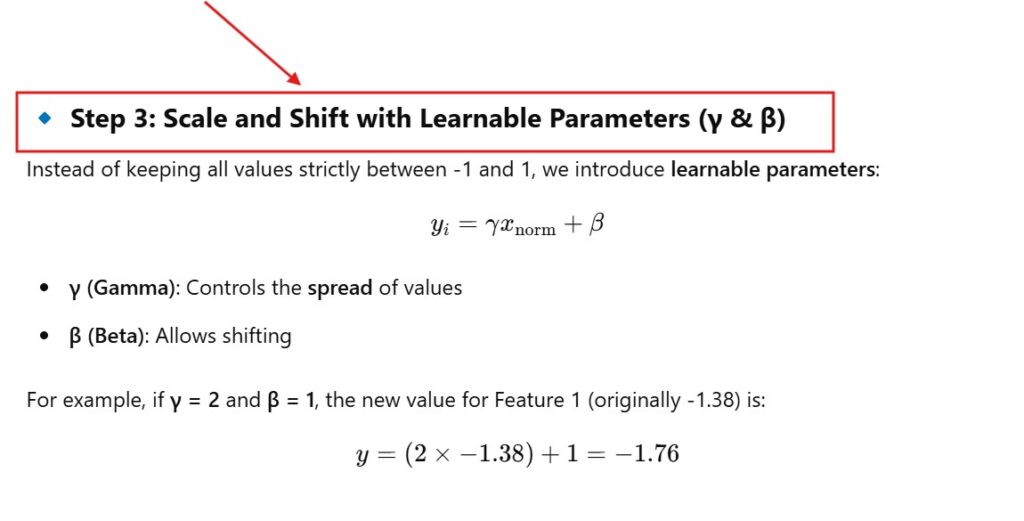

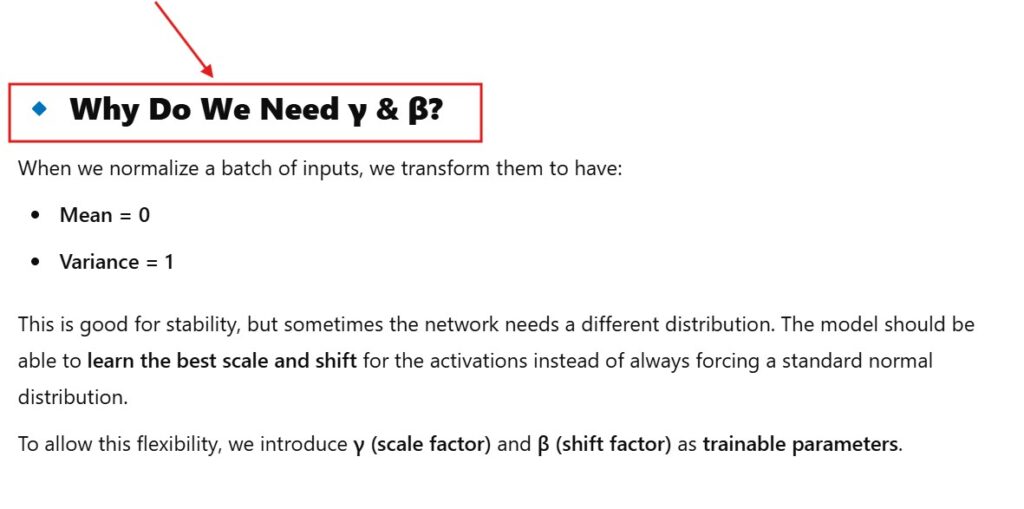

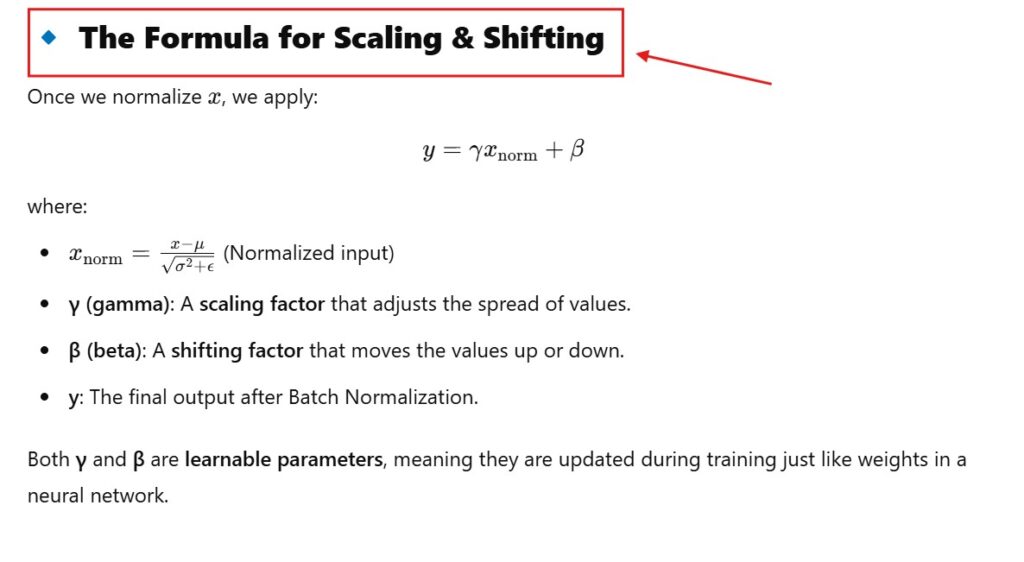
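Step 2 subtracts each feature's mean and divides by the square root of its variance plus a small constant ε. The ε keeps the division safe when a feature has zero variance. The sketch below uses a made-up mini-batch of 3 samples in which the third feature is constant, to show what ε does. The value 1e-5 is a common choice, assumed here.

```python
import numpy as np

# Made-up mini-batch: 3 samples x 3 features; the third feature is constant
x = np.array([[2.0, 10.0, 5.0],
              [4.0, 20.0, 5.0],
              [6.0, 30.0, 5.0]])
eps = 1e-5  # assumed small constant; avoids dividing by zero for the constant feature

mu = x.mean(axis=0)                    # per-feature mean (Step 1)
var = x.var(axis=0)                    # per-feature variance (Step 1)
x_hat = (x - mu) / np.sqrt(var + eps)  # per-feature standardization (Step 2)

print(np.round(x_hat, 3))
```

The first two columns both become about [-1.225, 0, 1.225] despite their different scales. The constant column becomes all zeros instead of NaN.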

Step – 3: Scale and Shift with Learnable Parameters (γ & β)
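Step 3 multiplies each normalized feature by a learnable scale γ and adds a learnable shift β. This lets the network choose whatever mean and spread work best for the next layer. In the sketch below, the γ and β values are hand-picked for illustration and the data is made up. In practice, γ and β are learned by gradient descent, typically starting from γ = 1 and β = 0.

```python
import numpy as np

# Made-up mini-batch: 3 samples x 2 features
x = np.array([[2.0, 10.0],
              [4.0, 20.0],
              [6.0, 30.0]])
eps = 1e-5
mu, var = x.mean(axis=0), x.var(axis=0)
x_hat = (x - mu) / np.sqrt(var + eps)   # output of Step 2

# Hand-picked values for illustration; during training these are learned per feature
gamma = np.array([2.0, 0.5])   # scale
beta = np.array([1.0, -3.0])   # shift
y = gamma * x_hat + beta

print(y.mean(axis=0))  # close to beta: 1.0 and -3.0
print(y.std(axis=0))   # close to gamma: 2.0 and 0.5

# Special case: gamma = sqrt(var + eps) and beta = mu undo the normalization
y_identity = np.sqrt(var + eps) * x_hat + mu
print(np.allclose(y_identity, x))  # True
```

The last check shows why γ and β matter. If the network learns γ = √(σ² + ε) and β = μ, the normalization is undone completely. Batch Normalization therefore does not limit what the layer can represent.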

Step – 4: Scale and Shift with Learnable Parameters (γ & β)

(5) Why is Internal Covariate Shift (ICS) a Problem If Different Distributions Are Natural?