GenAI – What Is QLoRA Fine-Tuning?

Table Of Contents:

- What Is the Quantization Process?

- What Is QLoRA?

- Benefits Of QLoRA

- Example Of QLoRA

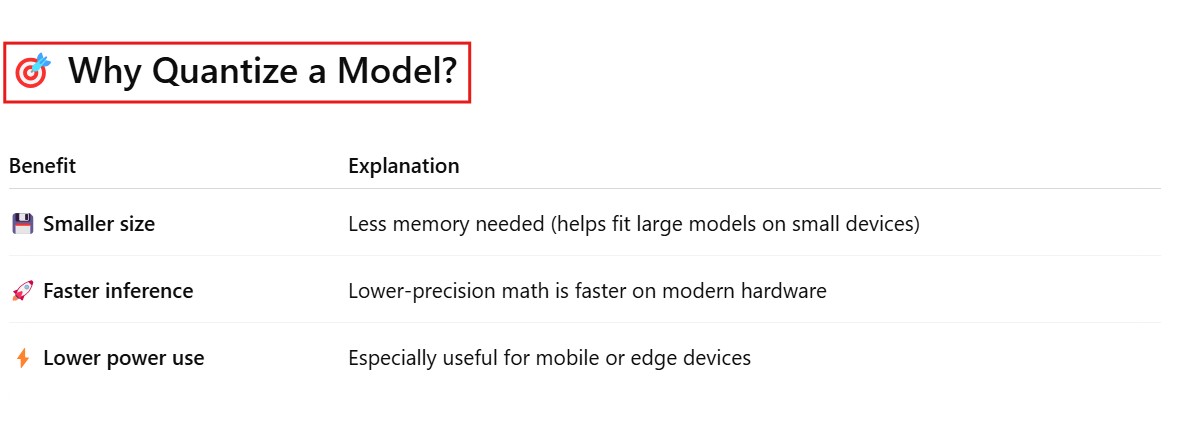

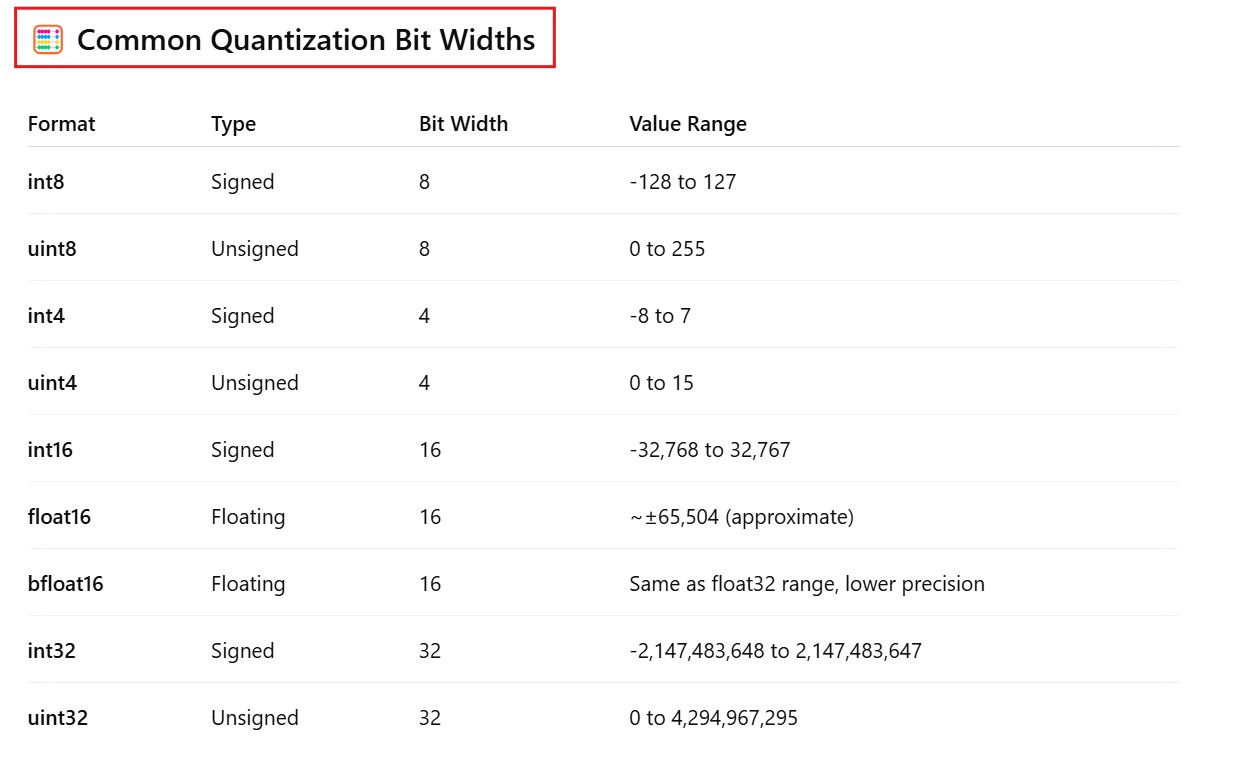

(1) What Is the Quantization Process?
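A minimal sketch of the idea, assuming plain symmetric absmax quantization in pure Python. Each block of weights gets its own scale (the block's largest absolute value divided by the largest representable integer), every weight is divided by that scale and rounded to a 4-bit signed integer, and dequantizing multiplies back by the scale. QLoRA itself uses the NF4 data type with 64-weight blocks instead of this evenly spaced integer grid, and the function names here are hypothetical.

```python
def quantize_blockwise(weights, block_size, bits=4):
    """Symmetric absmax quantization with one scale per block of weights."""
    qmax = 2 ** (bits - 1) - 1  # largest signed integer used: 7 for 4 bits
    q, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        absmax = max(abs(w) for w in block)
        scale = absmax / qmax if absmax > 0 else 1.0
        scales.append(scale)
        q.extend(round(w / scale) for w in block)
    return q, scales


def dequantize_blockwise(q, scales, block_size):
    """Recover approximate floats by multiplying each integer by its block's scale."""
    return [v * scales[i // block_size] for i, v in enumerate(q)]


weights = [0.12, -0.53, 0.97, -0.08, 0.33, -0.91]
q, scales = quantize_blockwise(weights, block_size=3)
restored = dequantize_blockwise(q, scales, block_size=3)

print(q)  # [1, -4, 7, -1, 3, -7]
print([round(w, 3) for w in restored])
```

Each restored value is off by at most half of its block's scale, so a large outlier only coarsens the grid inside its own block; keeping blocks small is what limits the damage outliers can do.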

(2) What Is QLoRA?

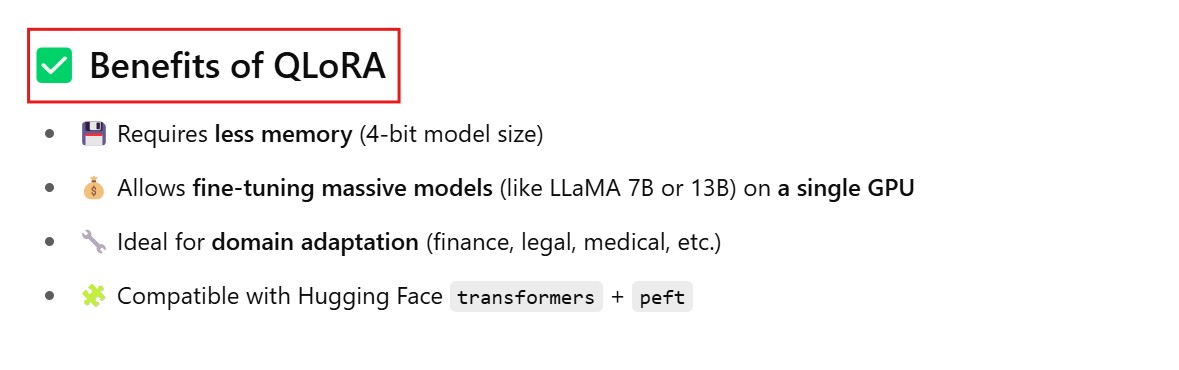
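A minimal pure-Python sketch of the adapter arithmetic, assuming the standard LoRA formulation: the frozen weight `W` (stored in 4-bit in QLoRA) is never updated, and a trainable low-rank product `A @ B`, scaled by `alpha / r`, is added to its output. `B` starts at zero, so the adapted layer initially reproduces the base model exactly. The helper names are hypothetical; in practice the PEFT library implements this.

```python
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def lora_forward(x, W, A, B, alpha, r):
    """Frozen path x @ W plus the scaled low-rank update (alpha / r) * x @ A @ B."""
    base = matmul(x, W)
    update = matmul(matmul(x, A), B)
    scale = alpha / r
    return [[b + scale * u for b, u in zip(b_row, u_row)] for b_row, u_row in zip(base, update)]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters in A (d_in x r) and B (r x d_out)."""
    return r * (d_in + d_out)


random.seed(0)
d_in, d_out, r, alpha = 6, 4, 2, 32
W = [[random.uniform(-1, 1) for _ in range(d_out)] for _ in range(d_in)]  # frozen base weight
A = [[random.gauss(0, 0.02) for _ in range(r)] for _ in range(d_in)]      # trainable, small random init
B = [[0.0] * d_out for _ in range(r)]                                      # trainable, zero init
x = [[random.uniform(-1, 1) for _ in range(d_in)]]                        # one input row

y = lora_forward(x, W, A, B, alpha, r)
print(y == matmul(x, W))  # True: with B = 0 the adapter adds nothing yet

# A 4096 x 4096 projection (the q_proj / v_proj shape in Llama-2-7B) with r = 8:
full = 4096 * 4096
lora = lora_param_count(4096, 4096, 8)
print(full, lora, lora / full)  # 16777216 65536 0.00390625
```

With `r=8` and `lora_alpha=32`, as in the example configuration later in this post, the update is scaled by 4, and each adapted projection trains under 0.4% of the parameters a full update would touch.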

(3) Benefits Of QLoRA
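A rough, weights-only estimate of the main benefit, memory. It assumes a nominal 7 billion parameters, one fp32 scale per 64-weight block for plain 4-bit storage, and, for double quantization, 8-bit scales plus one fp32 constant per 256 blocks. Activations, optimizer state, and framework overhead are ignored, so real usage is higher; the function name is hypothetical.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 7e9  # nominal "7B" size (assumption; the exact count differs slightly)

# Extra bits per weight spent on quantization constants:
plain_overhead = 32 / 64                     # one fp32 scale per 64-weight block
double_overhead = 8 / 64 + 32 / (64 * 256)   # 8-bit scales, plus one fp32 scale per 256 blocks

fp16_gb = weight_memory_gb(N_PARAMS, 16)
nf4_gb = weight_memory_gb(N_PARAMS, 4 + plain_overhead)
nf4_dq_gb = weight_memory_gb(N_PARAMS, 4 + double_overhead)

for label, gb in [("fp16", fp16_gb), ("4-bit", nf4_gb), ("4-bit + double quant", nf4_dq_gb)]:
    print(f"{label:>22}: {gb:5.2f} GB")

# Trainable LoRA parameters for r=8 on q_proj and v_proj across
# 32 decoder layers with 4096 x 4096 projections (Llama-2-7B shapes):
lora_params = 32 * 2 * 8 * (4096 + 4096)
print(f"LoRA params: {lora_params:,}")  # LoRA params: 4,194,304
```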

(4) Example Of QLoRA

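Install the required libraries (`accelerate` is needed for `device_map="auto"`):

```bash
pip install transformers peft bitsandbytes datasets accelerate
```

```python
import torch
from datasets import load_dataset
from peft import LoraConfig, TaskType, get_peft_model, prepare_model_for_kbit_training
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    DataCollatorForLanguageModeling,
    Trainer,
    TrainingArguments,
)

# Load the base model in 4-bit NF4 (the frozen, quantized part of QLoRA).
# Llama 2 is gated: accept its license on the Hugging Face Hub and log in first.
model_name = "meta-llama/Llama-2-7b-hf"

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",             # 4-bit NormalFloat data type
    bnb_4bit_use_double_quant=True,        # also quantize the quantization constants
    bnb_4bit_compute_dtype=torch.float16,  # dtype for matmuls on dequantized weights
)

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=bnb_config,
    device_map="auto",
)

# Load tokenizer; Llama 2 has no pad token, so reuse EOS for padding
tokenizer = AutoTokenizer.from_pretrained(model_name)
tokenizer.pad_token = tokenizer.eos_token

# Prepare for QLoRA training: freezes the base weights, upcasts non-quantized
# layers to fp32, and enables gradient checkpointing
model = prepare_model_for_kbit_training(model)

# Configure LoRA: rank-8 adapters on the attention query and value projections
lora_config = LoraConfig(
    r=8,
    lora_alpha=32,
    lora_dropout=0.1,
    bias="none",
    task_type=TaskType.CAUSAL_LM,
    target_modules=["q_proj", "v_proj"],
)

# Inject LoRA adapters; only these weights are trainable
model = get_peft_model(model, lora_config)
model.print_trainable_parameters()

# Load dataset and drop the empty lines WikiText contains
# (an all-padding batch would leave no tokens to compute the loss on)
dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
dataset = dataset.filter(lambda example: len(example["text"].strip()) > 0)

# Tokenize (batched=True passes lists of texts); drop the raw text column
def tokenize(batch):
    return tokenizer(batch["text"], truncation=True, padding="max_length", max_length=256)

tokenized_dataset = dataset.map(tokenize, batched=True, remove_columns=dataset.column_names)

# Data collator for causal LM: labels = input_ids, with padding masked to -100
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

# Training arguments
training_args = TrainingArguments(
    output_dir="./qlora-output",
    per_device_train_batch_size=4,
    num_train_epochs=3,
    learning_rate=2e-4,
    fp16=True,
    logging_steps=10,
    save_steps=50,
    report_to="none",
)

# Trainer (the data collator already carries the tokenizer)
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_dataset,
    data_collator=data_collator,
)

# Train!
trainer.train()
```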
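The `DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)` step builds causal-LM labels by copying `input_ids` and replacing padding positions with -100, which the loss ignores; the model shifts the labels internally so each position predicts the next token. Below is a minimal pure-Python sketch of that labeling rule, with a hypothetical function name and made-up token IDs, assuming the pad token shares its ID with EOS (a common choice for Llama 2, which ships without a pad token).

```python
IGNORE_INDEX = -100  # label value PyTorch's cross-entropy skips by default


def causal_lm_labels(input_ids, pad_token_id):
    """Copy input_ids and mask every pad-token position so it adds nothing to the loss."""
    return [[IGNORE_INDEX if tok == pad_token_id else tok for tok in seq] for seq in input_ids]


PAD = EOS = 2  # hypothetical IDs; padding reuses the EOS token
batch = [
    [1, 450, 4996, 17354, EOS],  # BOS, three text tokens, EOS
    [1, 450, PAD, PAD, PAD],     # shorter sequence padded to the same length
]
labels = causal_lm_labels(batch, PAD)
print(labels)  # [[1, 450, 4996, 17354, -100], [1, 450, -100, -100, -100]]

# After the internal shift, position 0 is never a target, so count labels[1:]:
contributing = sum(lab != IGNORE_INDEX for seq in labels for lab in seq[1:])
print(contributing)  # 4
```

The second row shows padding being ignored as intended. The first row shows a side effect of sharing the ID: the sequence's real EOS is masked too, so the model gets no signal to emit EOS. Using a pad token ID distinct from EOS avoids this when it matters.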