NLP – BERT Architecture

Table Of Contents:

- Introduction to BERT

- BERT Architecture

- Input Representation

- Pretraining Objectives

- Fine-Tuning BERT

- Variants of BERT

- BERT Evaluation and Benchmarks

- Advanced Concepts

- Implementation with Libraries

- Limitations and Challenges

- Applications of BERT

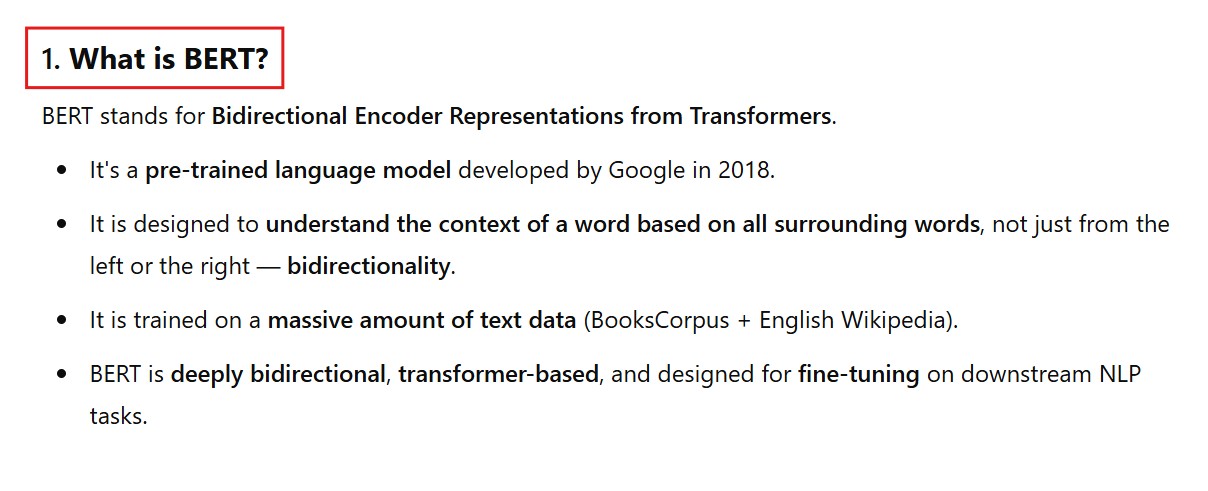

(1) Introduction To BERT.

(2) BERT – Questions

- What is BERT and the transformer, and why do I need to understand it? Models like BERT are already massively impacting academia and business, so we’ll outline some of the ways these models are used, and clarify some of the terminology around them.

- What did we do before these models? To understand these models, it’s important to look at the problems in this area and understand how we tackled them before models like BERT came on the scene. This way we can understand the limits of previous models and better appreciate the motivation behind the key design aspects of the Transformer architecture, which underpins most SOTA models like BERT.

- NLP’s “ImageNet moment”; pre-trained models: Originally, we each had to fully train our own model for every specific task. One of the key milestones which enabled the rapid evolution in performance was the creation of pre-trained models which could be used “off-the-shelf” and tuned to your specific task with little effort and data, in a process known as transfer learning. Understanding this is key to seeing why these models have performed, and continue to perform, well in a range of NLP tasks.

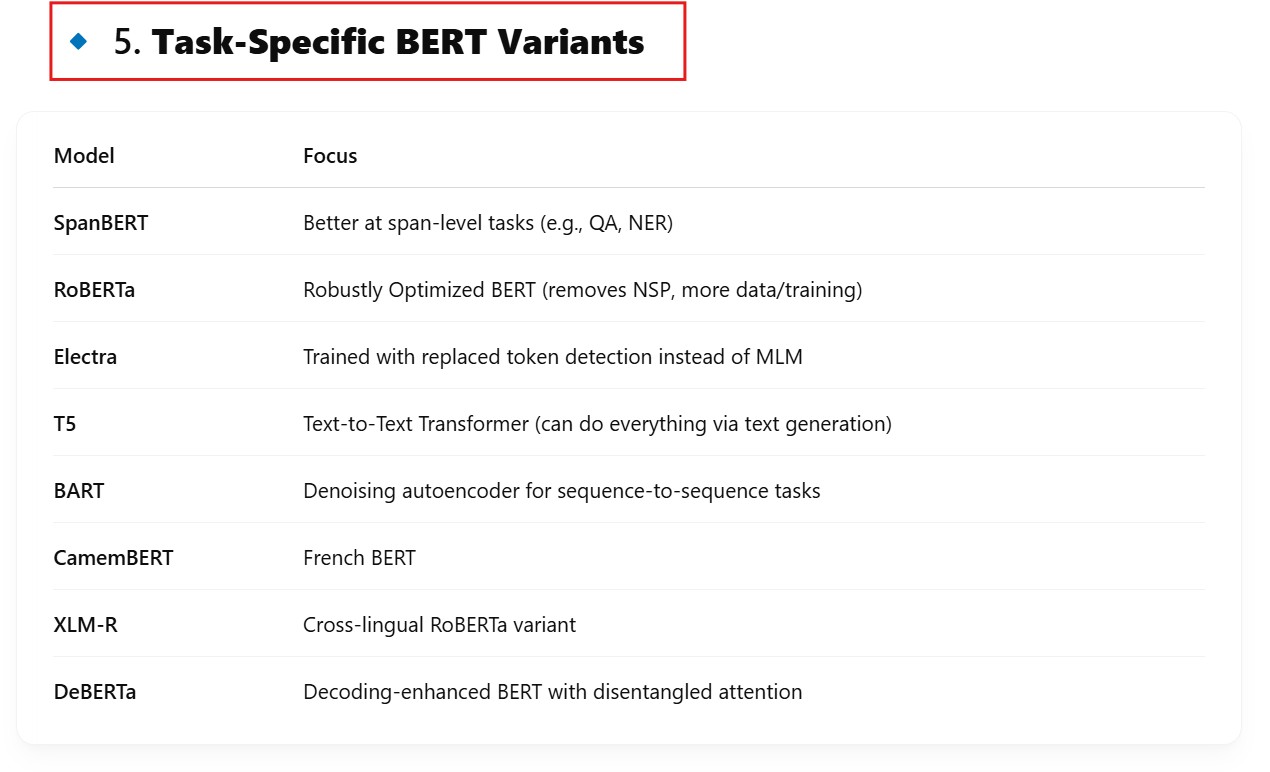

- Understanding the Transformer: You’ve probably heard of BERT and GPT-3, but what about RoBERTa, ALBERT, XLNet, or the LONGFORMER, REFORMER, or T5 Transformer? The number of new models seems overwhelming, but if you understand the Transformer architecture, you’ll have a window into the internal workings of all of these models. It’s the same as when you understand RDBMS technology, which gives you a good handle on software like MySQL, PostgreSQL, SQL Server, or Oracle. The relational model underpins all of those DBs in the same way that the Transformer architecture underpins our models. Understand that, and RoBERTa or XLNet becomes just the difference between using MySQL or PostgreSQL. It still takes time to learn the nuances of each model, but you have a solid foundation and you’re not starting from scratch.

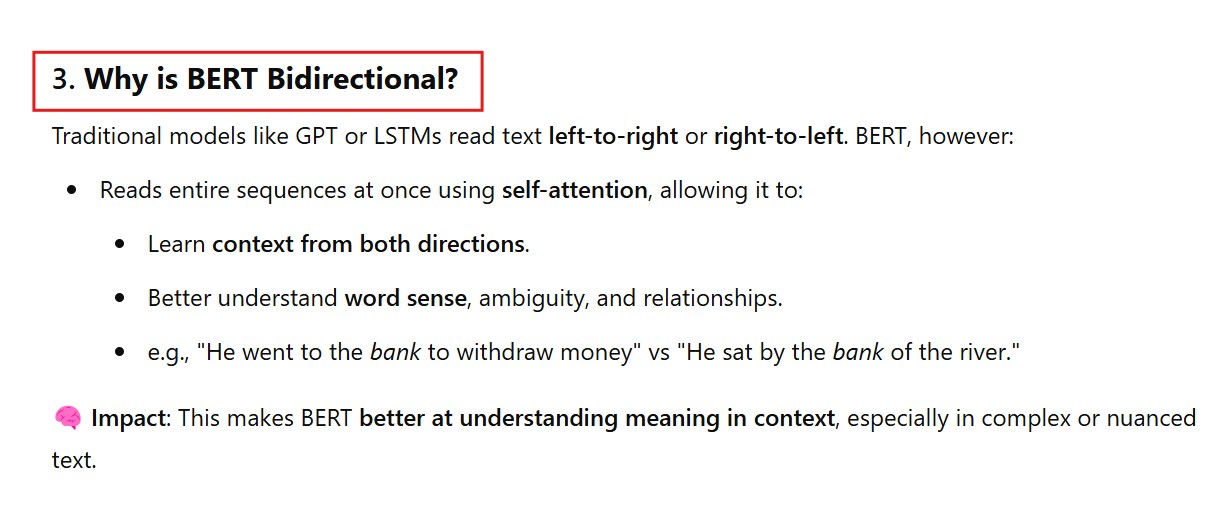

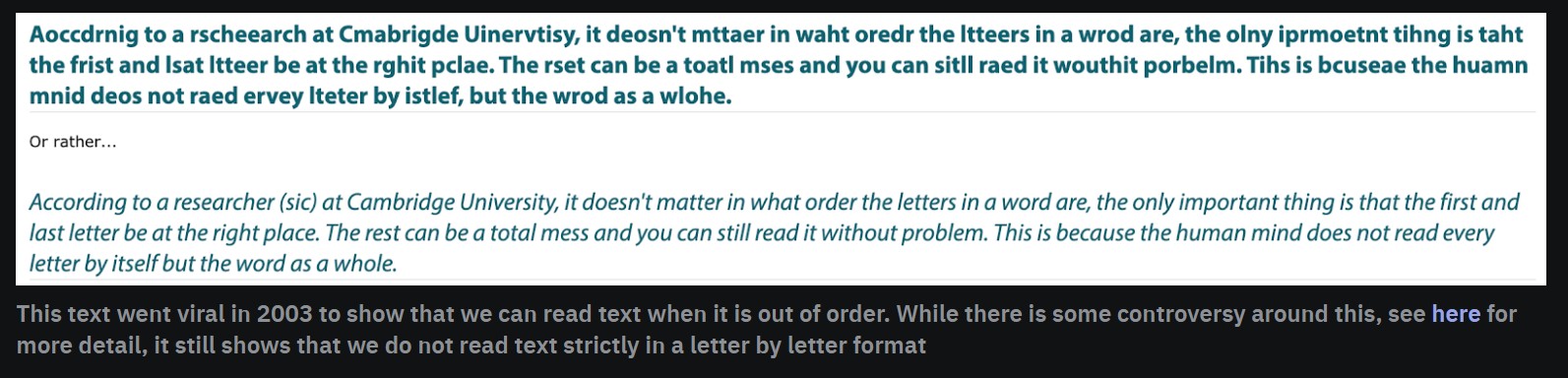

- The Importance Of Bidirectionality: As you’re reading this, you’re not strictly reading from one side to the other. You’re not reading this sentence letter by letter in a single direction. Instead, you’re jumping ahead and learning context from the words and letters ahead of where you are right now. It turns out this is a critical feature of the Transformer architecture. The Transformer architecture enables models to process text in a bidirectional manner, from start to finish and from finish to start. This addresses a central limitation of previous models, which could only process text from start to finish.

- How are BERT and the Transformer Different? BERT uses the Transformer architecture, but it’s different from it in a few critical ways. With all these models it’s important to understand how they’re different from the Transformer, as that will define which tasks they can do well and which they’ll struggle with.

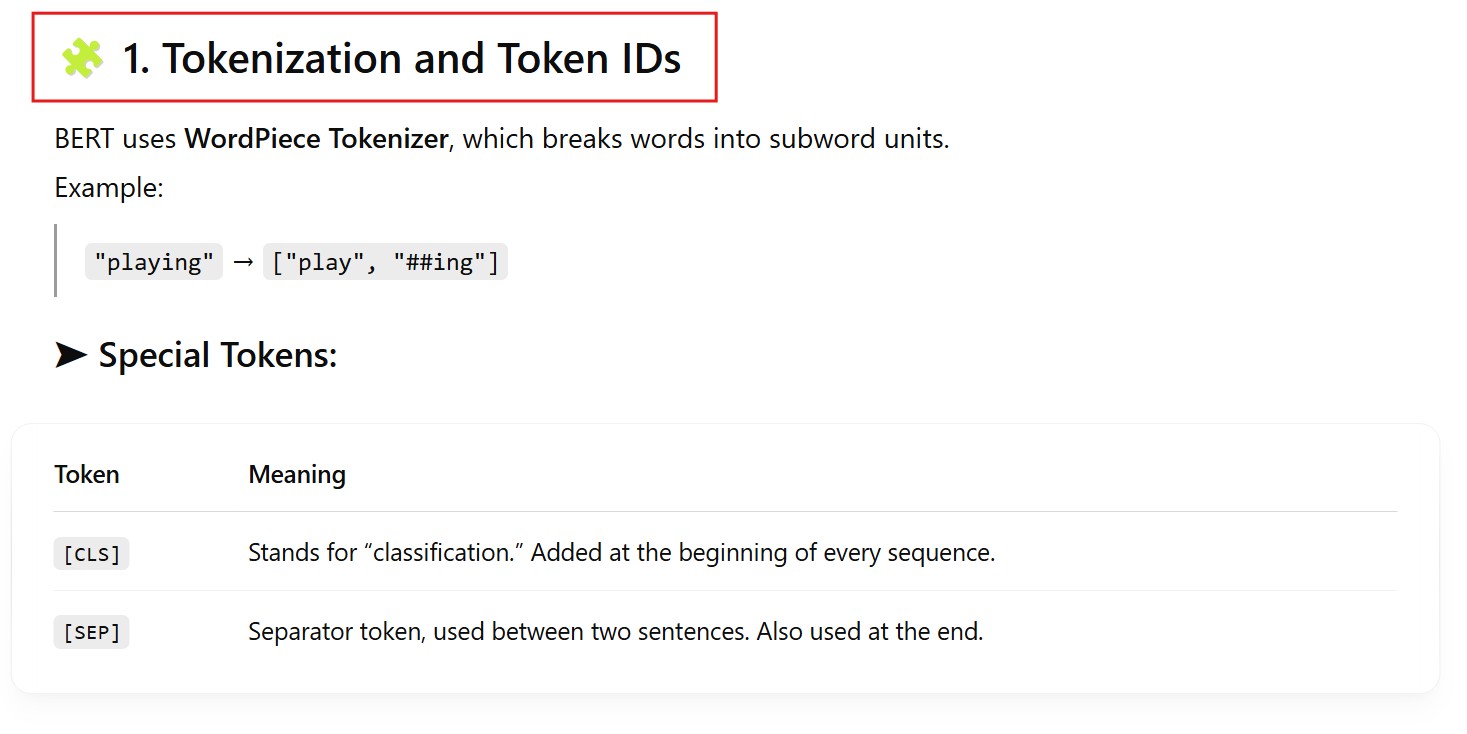

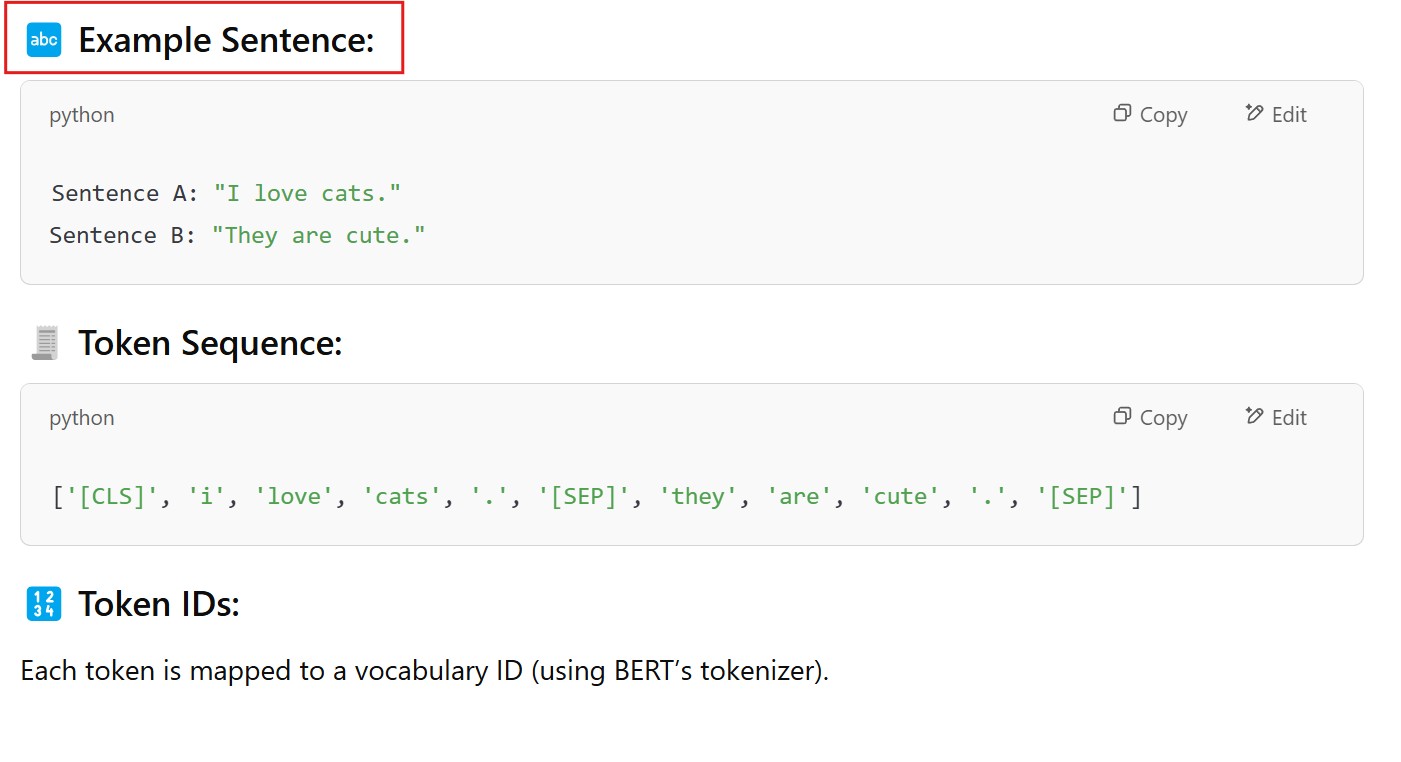

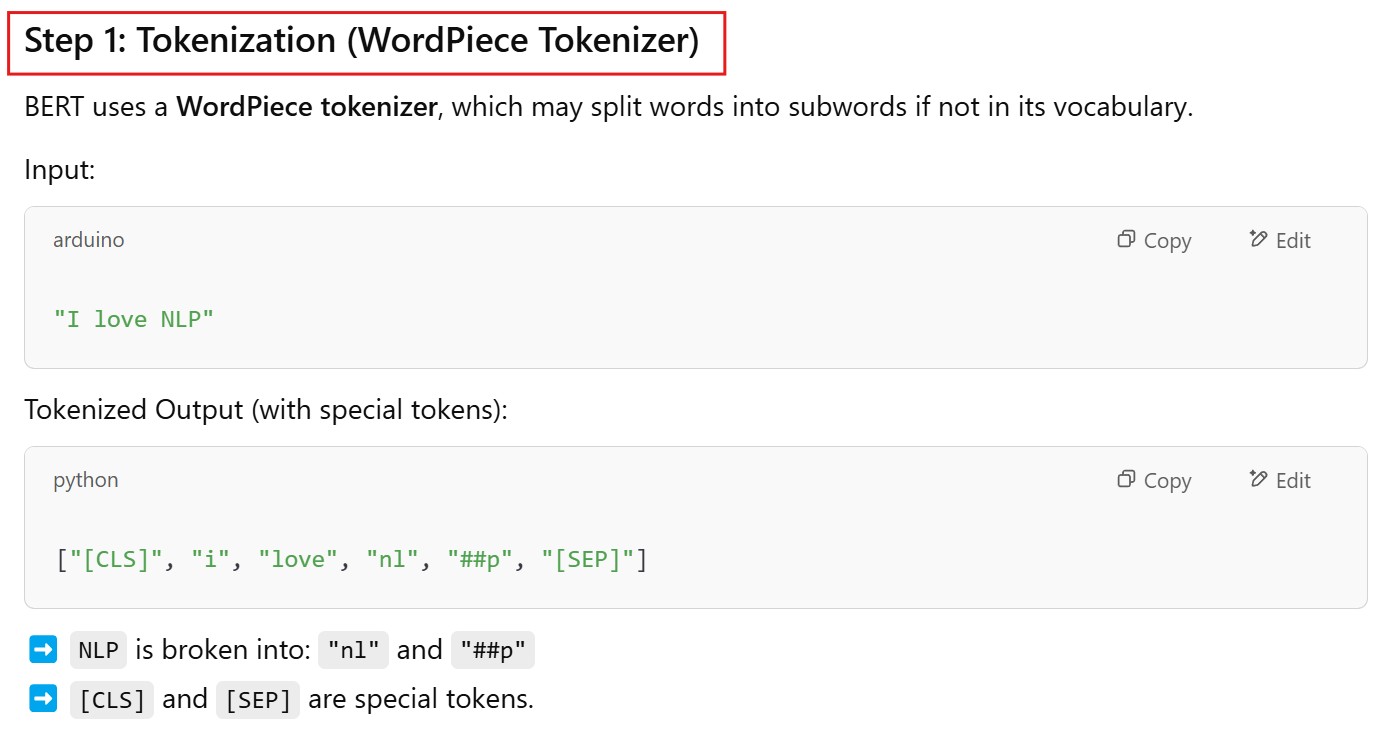

- Tokenizers – how these models process text: Models don’t read like you and me, so we need to encode the text so that it can be processed by a deep learning algorithm. How you encode the text has a massive impact on the performance of the model and there are tradeoffs to be made in each decision here. So, when you look at another model, you can first look at the tokenizer used and already understand something about that model.

- Masking – smart work versus hard work: You can work hard, or you can work smart. It’s no different with Deep Learning NLP models. Hard work here is just using a vanilla Transformer approach and throwing massive amounts of data at the model so it performs better. Models like GPT-3 have an incredible number of parameters, enabling them to work this way. Alternatively, you can try to tweak the training approach to “force” your model to learn more from less. This is what models like BERT try to do with masking. By understanding that approach you can again use it to look at how other models are trained. Are they employing innovative techniques to improve how much “knowledge” these models can extract from a given piece of data? Or are they taking a more brute force, scale-it-till-you-break-it approach?

- Fine-Tuning and Transfer Learning: One of the key benefits of BERT is that it can be fine-tuned to specific domains and trained on a number of different tasks. How do models like BERT and GPT-3 learn to perform different tasks?

- Avocado chairs – what’s next for BERT and other Transformer models? To bring our review of BERT and the Transformer architecture to a close, we’ll look forward to what the future holds for these models.

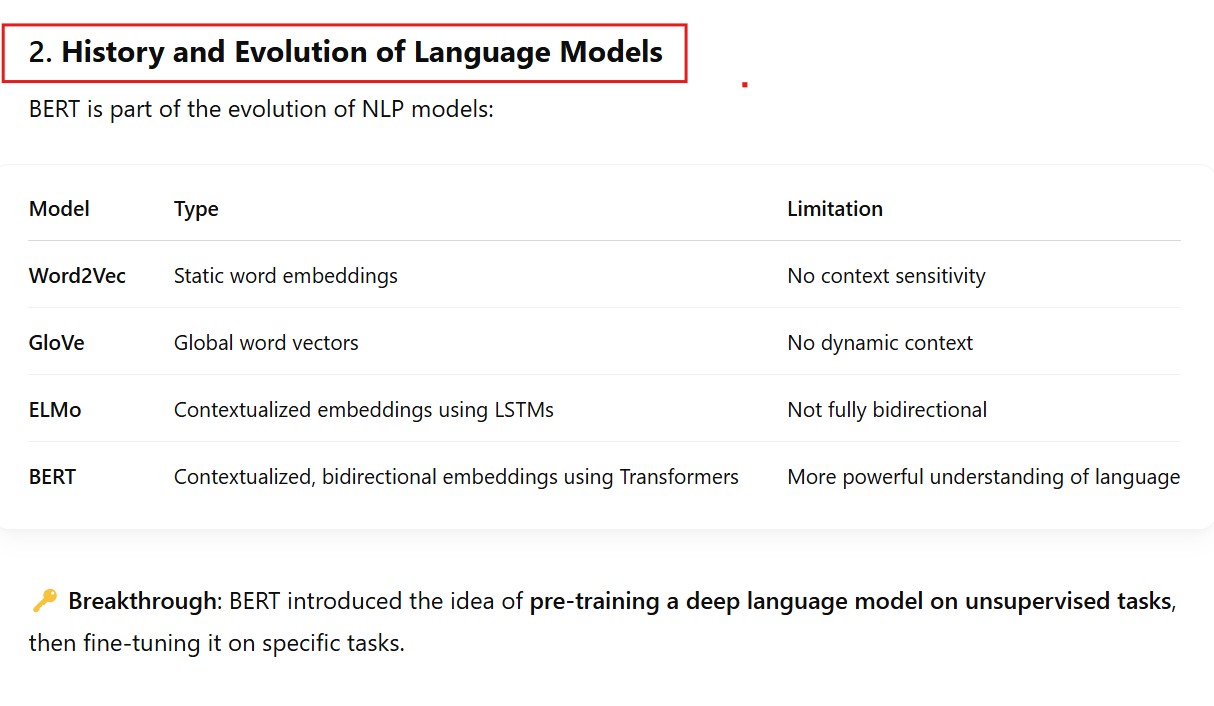

(3) What Existed Before BERT ?

- BERT, and the Transformer architecture itself, can both be seen in the context of the problem they were trying to solve.

- Like other business and academic domains, progress in machine learning and NLP can be seen as an evolution of technologies that attempt to address failings or shortcomings of the current technology.

- Henry Ford made automobiles more affordable and reliable, so they became a viable alternative to horses.

- The telegraph improved on previous technologies by being able to communicate with people without being physically present.

- Before BERT, the biggest breakthroughs in NLP were:

- 2013: Word2Vec paper, Efficient Estimation of Word Representations in Vector Space was published. Continuous word embeddings started being created to more accurately identify the semantic meaning and similarity of words.

- 2015: Sequence to sequence approach to text generation was released in the paper A Neural Conversation Model. It builds on some of the technology first showcased in Word2Vec, namely the potential power of Deep Learning neural networks to learn semantic and syntactic information from large amounts of unstructured text.

- 2018: The Embeddings from Language Models (ELMo) paper, Deep contextualized word representations, was released. ELMo (this is where the whole muppet naming thing started and, unfortunately, it hasn’t stopped, see ERNIE, Big Bird and KERMIT) was a leap forward in terms of word embeddings. It tried to account for the context in which a word was used rather than the static, one word, one meaning limits of Word2Vec.

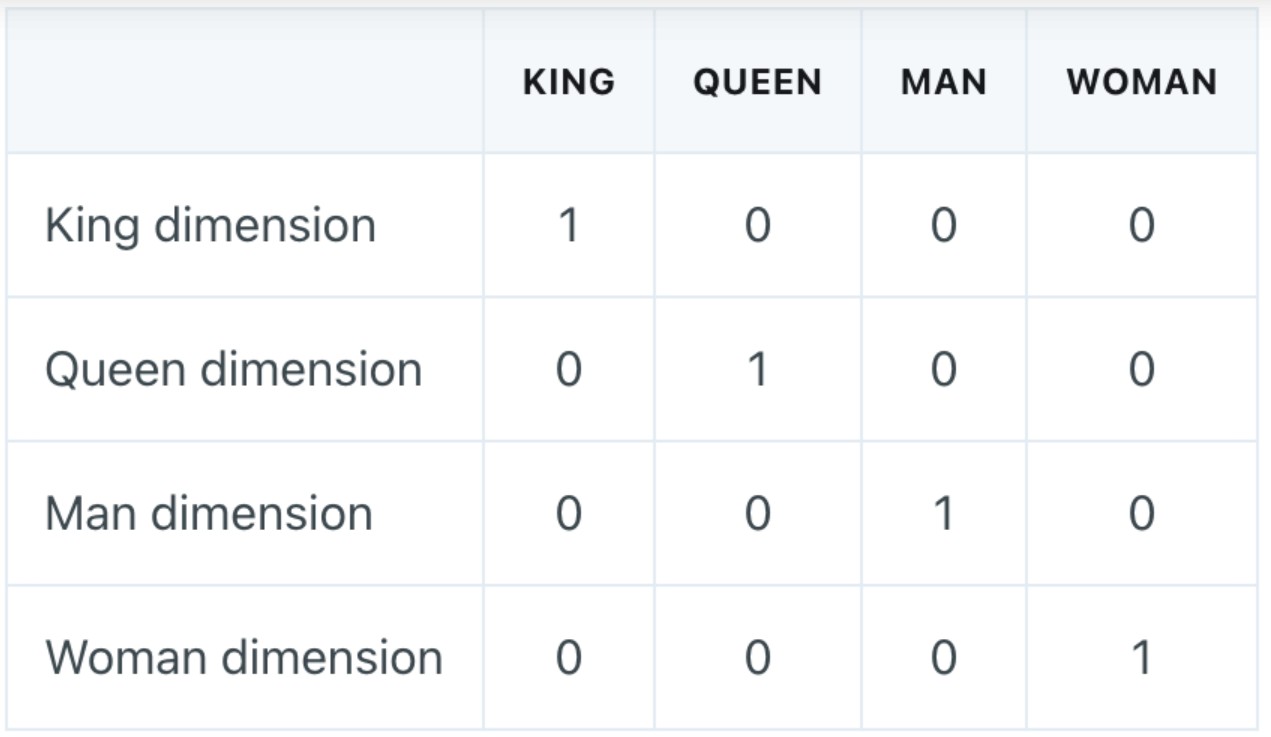

- Before Word2Vec, word representations were either massive sparse vectors built with a one-hot encoding technique, or TF-IDF representations that improved on these by down-weighting common, low-information words like “the”, “this”, “that”.

(4) The Importance Of Bidirectionality

- We noted earlier that RNNs were the architectures used to process text prior to the Transformer. RNNs use recurrence or looping to be able to process sequences of textual input. Processing text in this way creates two problems:

- It’s slow: Processing text sequentially, in one direction, is costly since it creates a bottleneck. It’s like a single lane road during peak time where there are long tailbacks, versus the same road with few cars on it off peak. We know that generally speaking, these models perform better if they’re trained on more data, so this bottleneck was a big problem if we wanted better models.

- It misses key information: We know that humans don’t read text in an absolutely pure sequential manner. As psychologist Daniel Willingham notes in his book “The Reading Mind”, “we don’t read letter by letter, we read in letter clumps, figuring out a few letters at a time”. The reason is we need to know a little about what is ahead to understand what we’re reading now. The same is true for NLP language models. Processing text in one direction limits their ability to learn from data.

We saw that ELMo attempted to address this via a method we referred to as “shallow” bidirectionality. It processed the text in one direction, then reversed the text, i.e. started from the end, and processed the text in that way. By concatenating both these embeddings, the hope was that this would help capture the different meaning in sentences like:

- The mouse was on the table near the laptop

- The mouse was on the table near the cat

The “mouse” in both these sentences refers to a very different entity depending on whether the last word in the sentence is “laptop” or “cat”. By reversing the sentence and starting with the word “cat”, ELMo attempts to learn the context so that it can encode the different meaning of the word “mouse”. By processing the word “cat” first, ELMo is able to incorporate the different meaning into the “reversed” embedding. This is how ELMo was able to improve on traditional, static, Word2Vec embeddings which could only encode one meaning per word.
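To make the “read it forwards, then read it backwards” idea concrete, here is a minimal toy sketch. It is not ELMo itself: the `step` function is a made-up stand-in for a recurrent cell (an assumption for illustration, not a real LSTM), and the “embedding” is just a pair of integers. What it does show is why the forward pass alone cannot tell the two mice apart, while the backward pass can.

```python
import hashlib

def step(state, word):
    """Toy recurrent update: mix the previous state with the current word."""
    digest = hashlib.sha256(f"{state}|{word}".encode()).hexdigest()
    return int(digest[:8], 16)

def run_states(words):
    """Return the running state after each word, reading in the given order."""
    states, state = [], 0
    for word in words:
        state = step(state, word)
        states.append(state)
    return states

def shallow_bidirectional(words):
    """Pair each word's forward state with its backward state (concatenation)."""
    forward = run_states(words)
    backward = run_states(words[::-1])[::-1]  # read right to left, then realign
    return list(zip(forward, backward))

laptop = "the mouse was on the table near the laptop".split()
cat = "the mouse was on the table near the cat".split()

# Index 1 is the position of "mouse" in both sentences
fwd_laptop, bwd_laptop = shallow_bidirectional(laptop)[1]
fwd_cat, bwd_cat = shallow_bidirectional(cat)[1]

# The forward state has only seen "the mouse", so it matches across sentences
print("forward states match: ", fwd_laptop == fwd_cat)
# The backward state started from "laptop" or "cat", so it can differ
print("backward states match:", bwd_laptop == bwd_cat)
```

The two directions never interact while they are being computed; they are only glued together at the end, which is what makes this form of bidirectionality “shallow”.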

Without understanding the nuance involved in how this process works, we can see that the ELMo approach is not ideal.

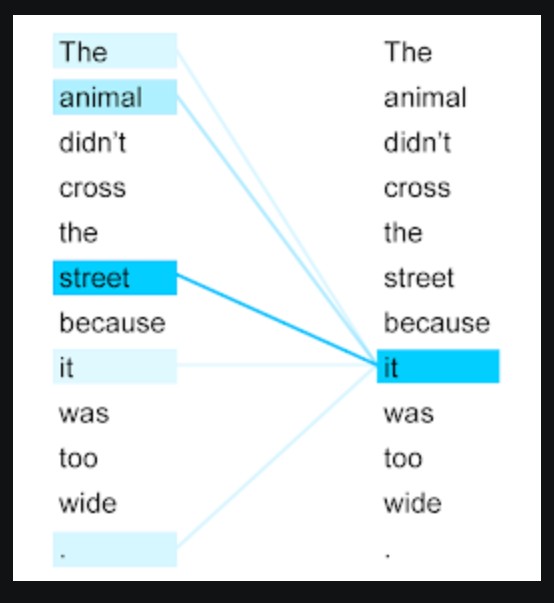

We would prefer a mechanism which enables the model to look at the other words in the sentence during the encoding, so it can know whether or not we should be worried that there is a mouse on the table! And this is exactly what the attention mechanism of the Transformer architecture enables these models to do.

Being able to read text bidirectionally is one of the key reasons that Transformer models like BERT can achieve such impressive results in traditional NLP tasks. As we see from the above example, knowing what “mouse” refers to is difficult when you read text in only one direction, and have to store all the state sequentially.

I guess it’s no surprise that this is a key feature of BERT, since the B in BERT stands for “Bidirectional”. The attention mechanism of the Transformer architecture allows models like BERT to process text bidirectionally by:

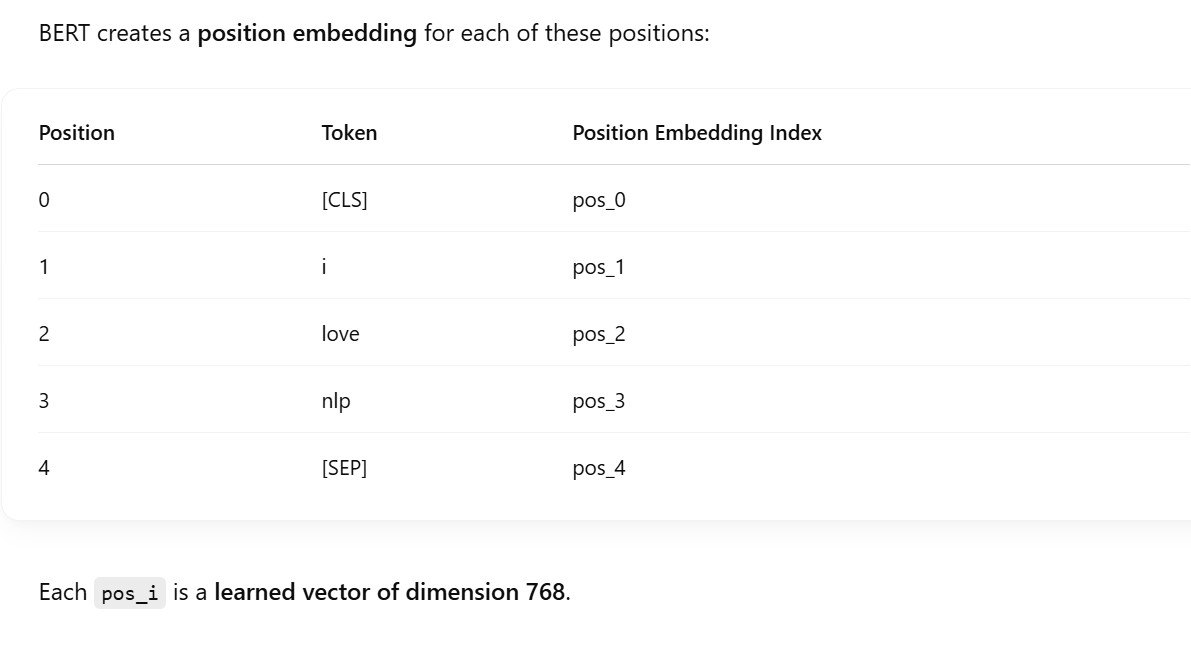

- Allowing parallel processing: Transformer-based models can process text in parallel, so they’re not limited by the bottleneck of having to process text sequentially like RNN-based models. This means that at any time the model is able to look at any word in the sentence it’s processing. But this introduces other problems. If you’re processing all the text in parallel, how do you know the order of the words in the original text? This is vital. If we don’t know the order, we’ll just have a bag of words-type model, unable to fully extract the meaning and context from the sentence.

- Storing the position of the input: To address ordering issues, the Transformer architecture encodes the position of the word directly into the embedding. This is a “marker” that lets attention layers in the model identify where the word or text sequence they’re looking at was located. This nifty little trick means that these models can keep processing sequences of text in parallel, in large volumes with different lengths, and still know exactly what order they occur in the sentence.

- Making lookup easy: We noted earlier that one of the issues with RNN type models is that when they need to process text sequentially, it makes retrieving earlier words difficult. So in our “mouse” example sentence, an RNN would like to understand the relevance of the last word in the sentence, i.e. “laptop” or “cat”, and how it relates to the earlier part of the sentence. To do this, it has to go from N-1 word, to N-2, to N-3 and so on, until it reaches the start of the sentence. This makes lookup difficult, and that’s why context is tricky for unidirectional models to discover. By contrast, the Transformer based models can simply look up any word in the sentence at any time. In this way, it has a “view” of all the words in the sequence at every step in the attention layer. So it can “look ahead” to the end of the sentence when processing the early part of the sentence, or vice versa. (There is some nuance to this depending on the way the attention layers are implemented, e.g. encoders can look at the location of any word while decoders are limited to only looking “back” at words they have already processed. But we don’t need to worry about that for now).

As a result of these factors, being able to process text in parallel, embedding the position of the input in the embedding and enabling easy lookup of each input, models like BERT can “read” text bidirectionally.
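The “marker” idea can be sketched in a few lines. The example below uses the sinusoidal position encoding from the original “Attention is all you need” paper and adds it to some hypothetical 4-dimensional word vectors (the `toy_embedding` values are made up for illustration). Note that BERT itself learns its position embeddings during training rather than using fixed sinusoids, so treat this as a sketch of the general trick rather than of BERT’s exact implementation.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position vector: sin on even dimensions, cos on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(token_vectors):
    """Add the position vector to each token vector, element by element."""
    d_model = len(token_vectors[0])
    return [
        [x + p for x, p in zip(vec, positional_encoding(pos, d_model))]
        for pos, vec in enumerate(token_vectors)
    ]

# Hypothetical embeddings: the same word always maps to the same vector
toy_embedding = {
    "dog": [0.1, 0.2, 0.3, 0.4],
    "bites": [0.5, 0.1, 0.0, 0.2],
    "man": [0.3, 0.3, 0.1, 0.0],
}

sentence_a = add_position([toy_embedding[w] for w in ["dog", "bites", "man"]])
sentence_b = add_position([toy_embedding[w] for w in ["man", "bites", "dog"]])

# "dog" is first in sentence_a and last in sentence_b, so its vectors now differ
print(sentence_a[0] != sentence_b[2])
```

Without the position term, both sentences would hand the model the same three vectors, which is the bag-of-words problem described above.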

Technically it’s not bidirectional, since these models are really looking at all the text at once, so it’s non-directional. But it’s better to understand it as a way to try and process text bidirectionally to improve the model’s ability to learn from the input.

Being able to process text this way introduces some problems that BERT needed to address with a clever technique called “masking”, which we’ll discuss in section 9. But, now that we understand a little bit more about the Transformer architecture, we can look at the differences between BERT and the vanilla Transformer architecture in the original “Attention is all you need” paper.

(5) How BERT & Transformer Are Different ?

- When you read about the latest models, you’ll see them called “Transformer” models. Sometimes the word will be used loosely, and models like BERT and GPT-3 will both be referred to as “Transformer” models. But, these models are very different in some important ways.

Understanding these differences will help you know which model to use for your own unique use case. The key to understanding the different models is knowing how and why they deviate from the original Transformer architecture. In general, the main things to look out for are:

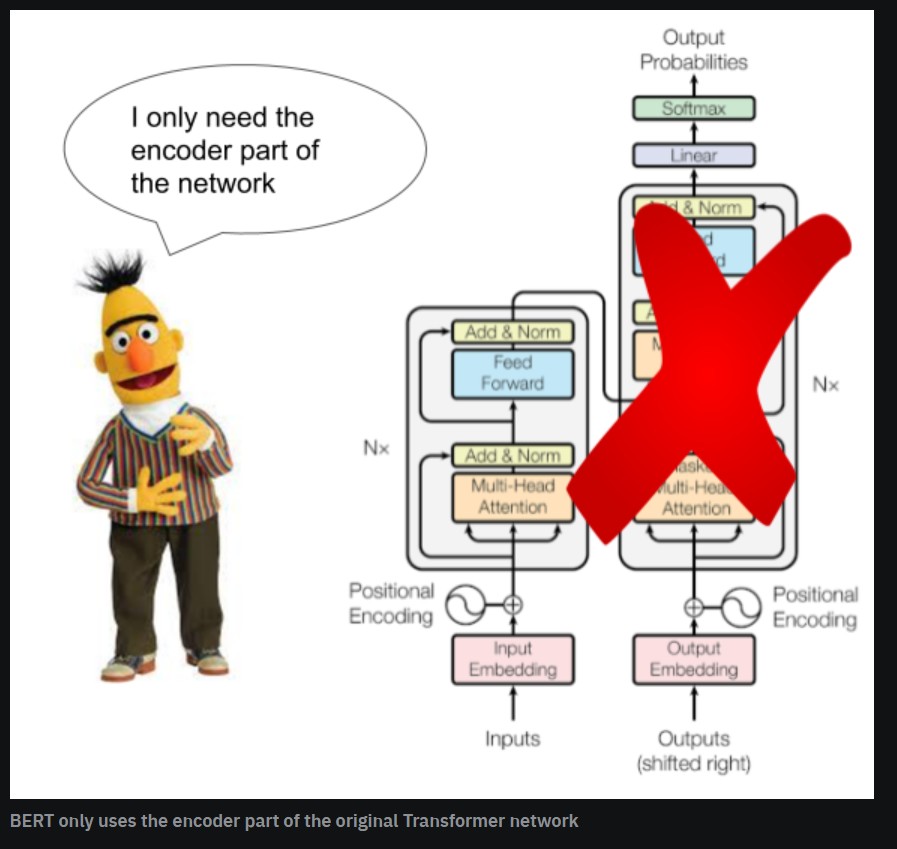

- Is the encoder used? The original Transformer architecture needed to translate text so it used the attention mechanism in two separate ways. One was to encode the source language, and the other was to decode the encoded embedding back into the destination language. When looking at a new model, check if it uses the encoder. This means it’s concerned with using the output in some way to perform another task, i.e. as an input to another layer for training a classifier, or something of that nature.

- Is the decoder used? Alternatively, a model might not use the encoder part, and only use the decoder. The decoder implements the attention mechanism slightly differently to the encoder. It works more like a traditional language model, and only looks at previous words when processing the text. This would be suitable for tasks like language generation, which is why the GPT models use the decoder part of the Transformer, since they’re mainly concerned with generating text in response to an input sequence of text.

- What new training layers are added? The last thing to look at is what extra layers, if any, the model adds to perform training. The attention mechanism opens up a whole range of possibilities by being able to process text in parallel and bidirectionally, as we mentioned earlier. Different layers can build on this, and train the model for different tasks like question and answering, or text summarization.
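The encoder versus decoder difference largely comes down to which positions each token is allowed to attend to. The sketch below computes scaled dot-product attention weights over three hypothetical 2-dimensional token vectors, once with no mask (encoder style) and once with a causal mask (decoder style). It skips the learned query, key and value projections and the multiple heads of a real model, so it is only meant to show the masking behaviour.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys, causal=False):
    """Scaled dot-product attention weights, one row per query token."""
    scale = math.sqrt(len(keys[0]))
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # decoder: hide tokens to the right
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / scale)
        weights.append(softmax(scores))
    return weights

# Hypothetical token vectors, used as both queries and keys (self-attention)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

encoder_view = attention_weights(tokens, tokens)               # sees every token
decoder_view = attention_weights(tokens, tokens, causal=True)  # sees only the past

for row in decoder_view:
    print([round(w, 3) for w in row])
```

In the decoder view the weights above the diagonal are zero, so the first token can only attend to itself. In the encoder view every row spreads weight over the whole sentence, which is the behaviour BERT relies on.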

Now that we know what to look for, how does BERT differ from the vanilla Transformer?

- BERT uses the encoder: BERT uses the encoder part of the Transformer, since its goal is to create a model that performs a number of different NLP tasks. As a result, using the encoder enables BERT to encode the semantic and syntactic information in the embedding, which is needed for a wide range of tasks. This already tells us a lot about BERT. First, it’s not designed for tasks like text generation or translation, because it uses the encoder. It can be trained on multiple languages, but it’s not a machine translation model itself. Similarly, it can still predict words, so it can be used as a text generating model, but that’s not what it’s optimized for.

- BERT doesn’t use the decoder: As noted, BERT doesn’t use the decoder part of the vanilla Transformer architecture. So, the output of BERT is an embedding, not a textual output. This is important – if the output is an embedding, it means that whatever you use BERT for you’ll need to do something with the embedding. You can use techniques like cosine similarity to compare embeddings and return a similarity score, for example. By contrast, if you used the decoder, the output would be text, so you could use that directly without needing to perform any further actions.

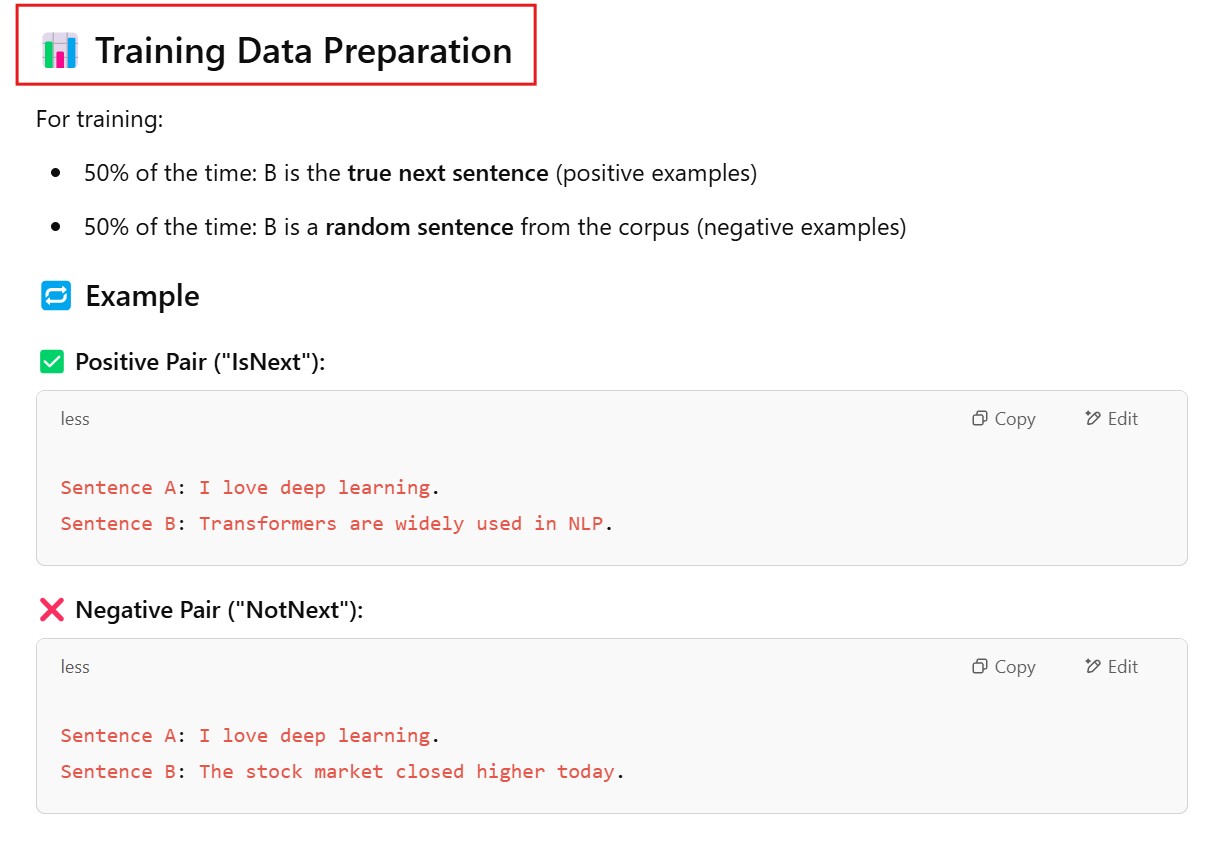

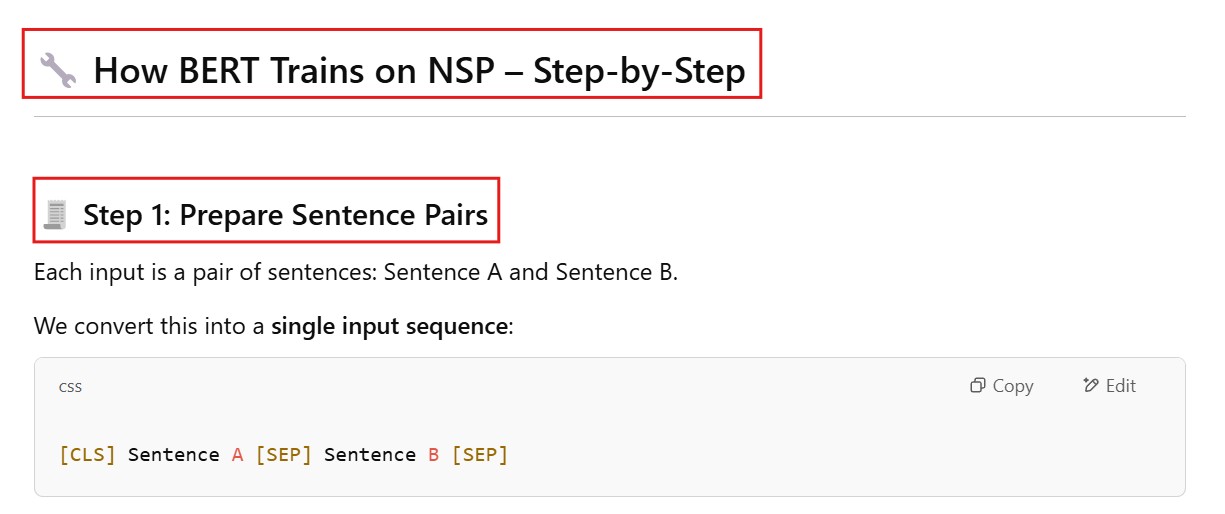

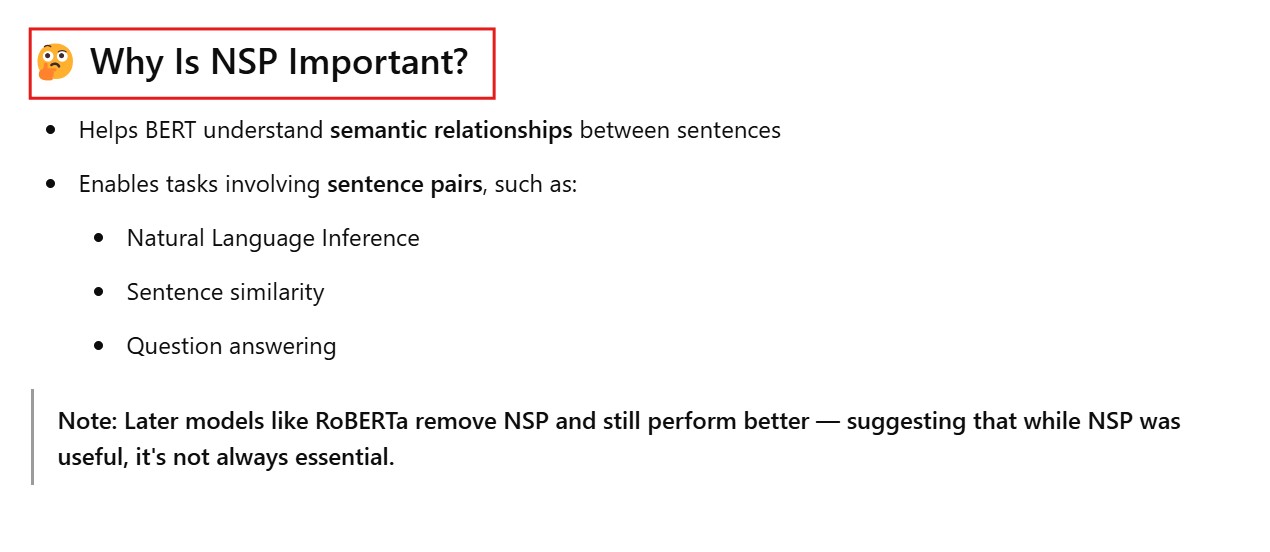

- BERT uses an innovative training layer: BERT takes the output of the encoder, and uses that with training layers which perform two innovative training techniques, masking and Next Sentence Prediction (NSP). These are ways to unlock the information contained in the BERT embeddings to get the models to learn more information from the input. We will discuss these techniques more in section 9, but the gist is that BERT gets the Transformer encoder to try and predict hidden or masked words. By doing this, it forces the encoder to try and “learn” more about the surrounding text and be better able to predict the hidden or “masked” word. Then, for the second training technique, it gets the encoder to predict whether one sentence actually follows another in the original text. BERT introduced these “tweaks” to take advantage of the Transformer, specifically the attention mechanism, and create a model that generated SOTA results for a range of NLP tasks. At the time, it surpassed anything that had been done before.
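Since the output of BERT is an embedding, “doing something with it” often starts with comparing vectors. Here is a minimal sketch of the cosine similarity comparison mentioned in the list above. The three vectors are hypothetical 4-dimensional stand-ins (real BERT embeddings have hundreds of dimensions), and the names `query`, `doc_similar` and `doc_unrelated` are made up for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical vectors standing in for BERT sentence embeddings
query = [0.9, 0.1, 0.3, 0.0]
doc_similar = [0.8, 0.2, 0.4, 0.1]
doc_unrelated = [0.0, 0.9, 0.0, 0.8]

scores = {
    "doc_similar": cosine_similarity(query, doc_similar),
    "doc_unrelated": cosine_similarity(query, doc_unrelated),
}
best_match = max(scores, key=scores.get)
print(best_match, {name: round(score, 3) for name, score in scores.items()})
```

A decoder-style model would hand you text directly; with an encoder-style model like BERT, a small step like this (or a trained classifier layer) sits between the embedding and the final answer.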

Now that we know how BERT differs from the vanilla Transformer architecture, we can look closely at these parts of the BERT model. But first, we need to understand how BERT “reads” text.

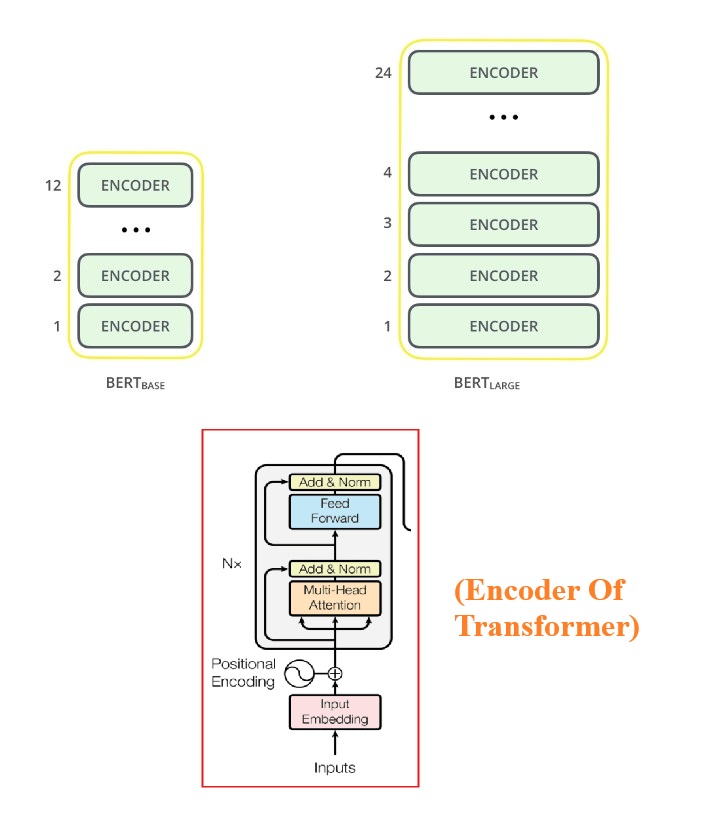

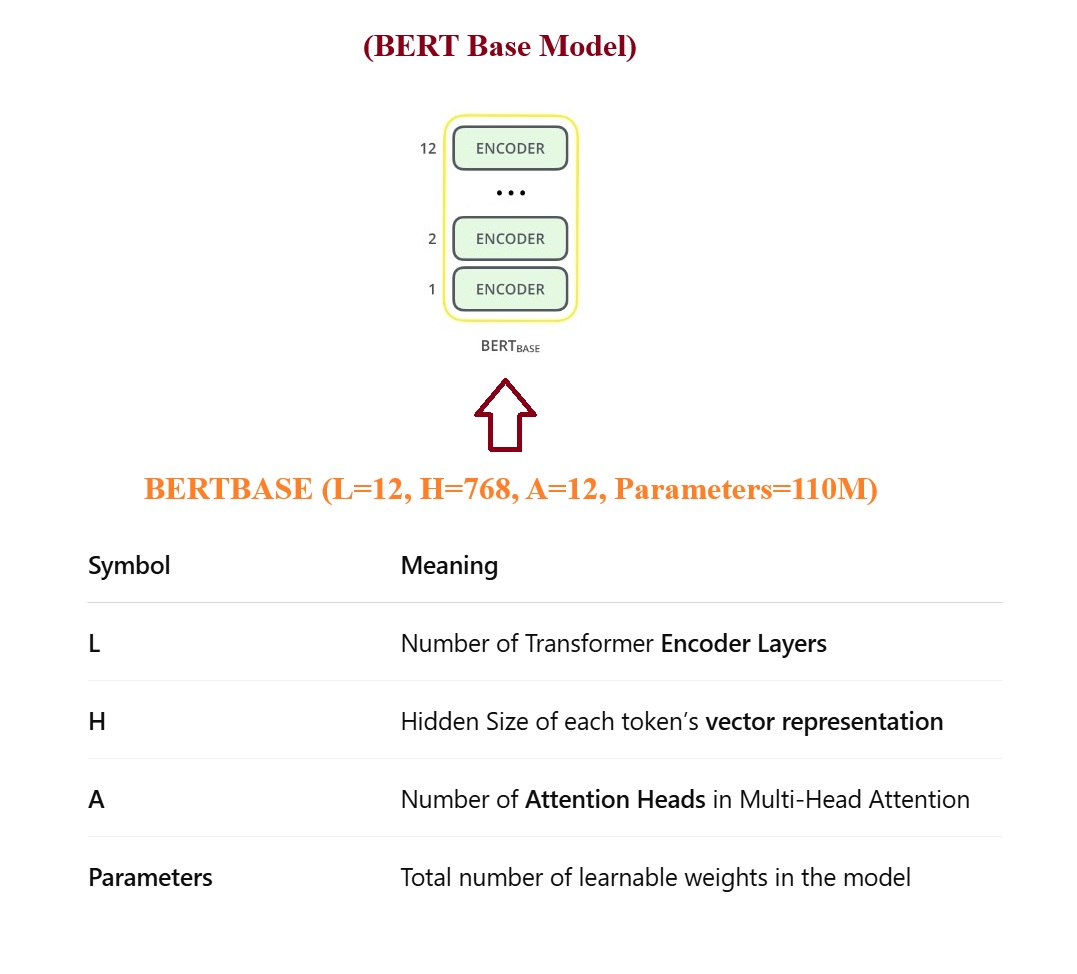

(6) BERT Architecture

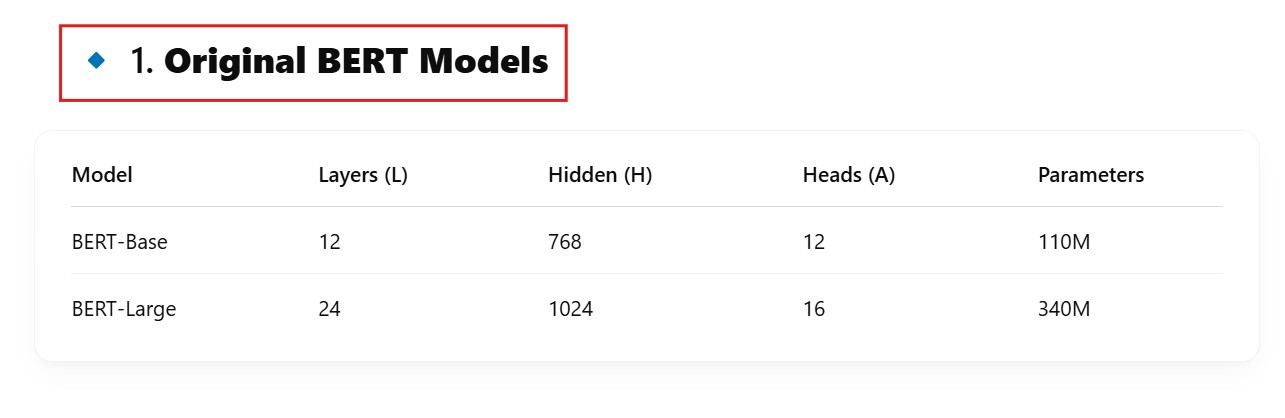

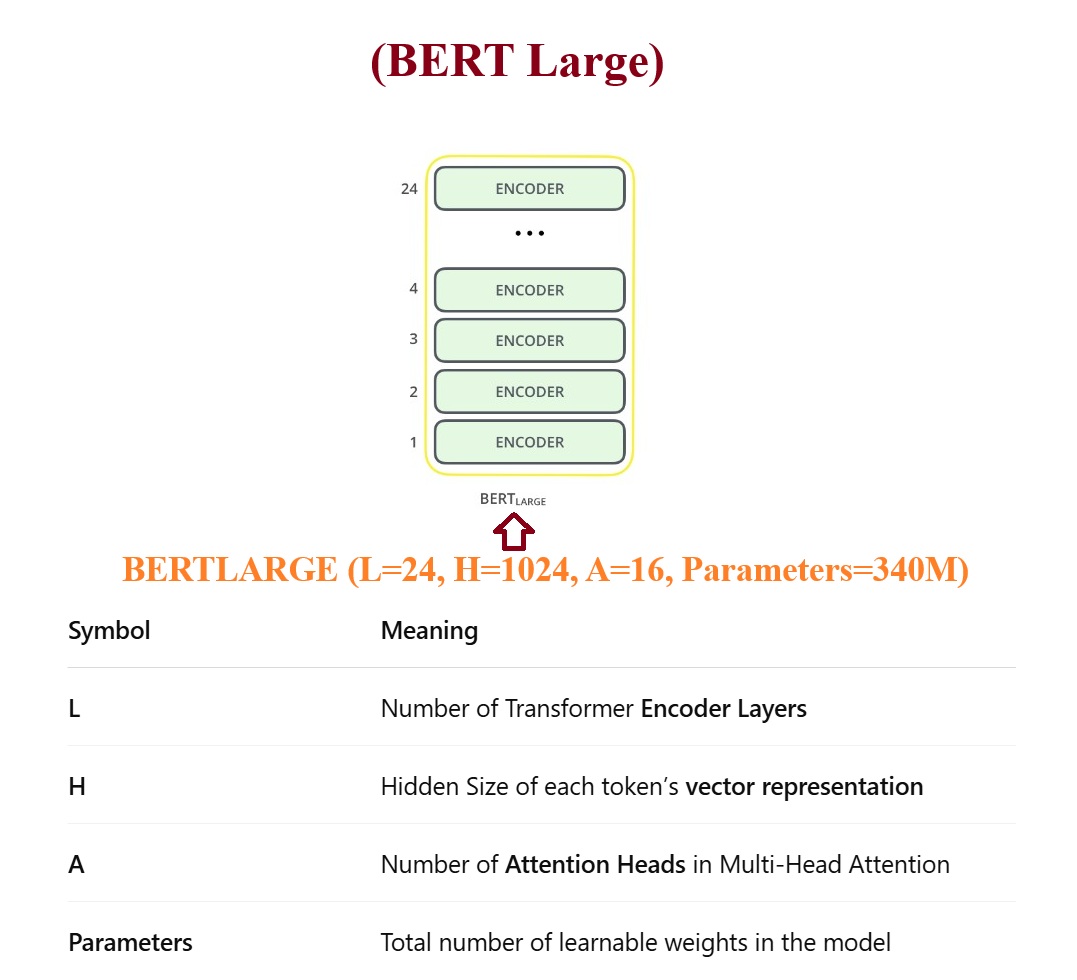

The BERT architecture builds on top of the Transformer. We currently have two variants available:

- BERT Base: 12 layers (transformer blocks), 12 attention heads, and 110 million parameters

- BERT Large: 24 layers (transformer blocks), 16 attention heads, and 340 million parameters

- All of these Transformer layers are Encoder-only blocks.
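The parameter counts above can be roughly reproduced with some arithmetic. The sketch below counts the weights and biases of an encoder-only stack. The hidden sizes (768 and 1024), feed-forward sizes (3072 and 4096), vocabulary size (30522) and maximum of 512 positions are not stated in this article; they are assumptions taken from the published BERT uncased configurations. The number of attention heads does not appear in the formula because the heads split the hidden dimension between them rather than adding to it.

```python
def bert_parameter_count(layers, hidden, feed_forward,
                         vocab=30522, max_positions=512, segments=2):
    """Count weights and biases: embeddings + encoder blocks + pooler."""
    layer_norm = 2 * hidden  # one scale and one bias per dimension
    embeddings = (vocab + max_positions + segments) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)  # query, key, value, output
    ffn = (hidden * feed_forward + feed_forward) + (feed_forward * hidden + hidden)
    block = attention + layer_norm + ffn + layer_norm
    pooler = hidden * hidden + hidden  # dense layer applied to the [CLS] vector
    return embeddings + layers * block + pooler

base = bert_parameter_count(layers=12, hidden=768, feed_forward=3072)
large = bert_parameter_count(layers=24, hidden=1024, feed_forward=4096)

print(f"BERT Base:  {base / 1e6:.1f}M parameters")
print(f"BERT Large: {large / 1e6:.1f}M parameters")
```

Under these assumptions the totals land close to the rounded 110 million and 340 million figures quoted above, and most of the difference between the two variants comes from the encoder blocks rather than the embeddings.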

(7) Input To BERT Model

- Once the input tokens are ready, they keep flowing up the stack. Each layer applies self-attention, passes its results through a feed-forward network, and then hands it off to the next encoder. In terms of architecture, this remains identical to the Transformer up until this point. It’s at the output that we first start seeing how things diverge.


(8) How Does BERT Process The Input ?

BERT follows these steps to process the input text:

- Embedding Layer

- Transformer Encoder Layers

- Final Hidden States

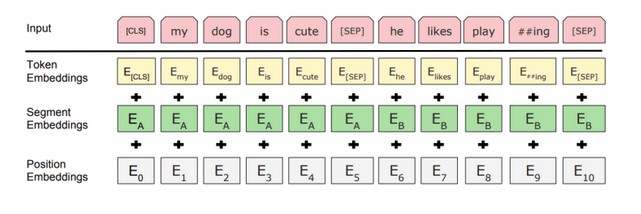

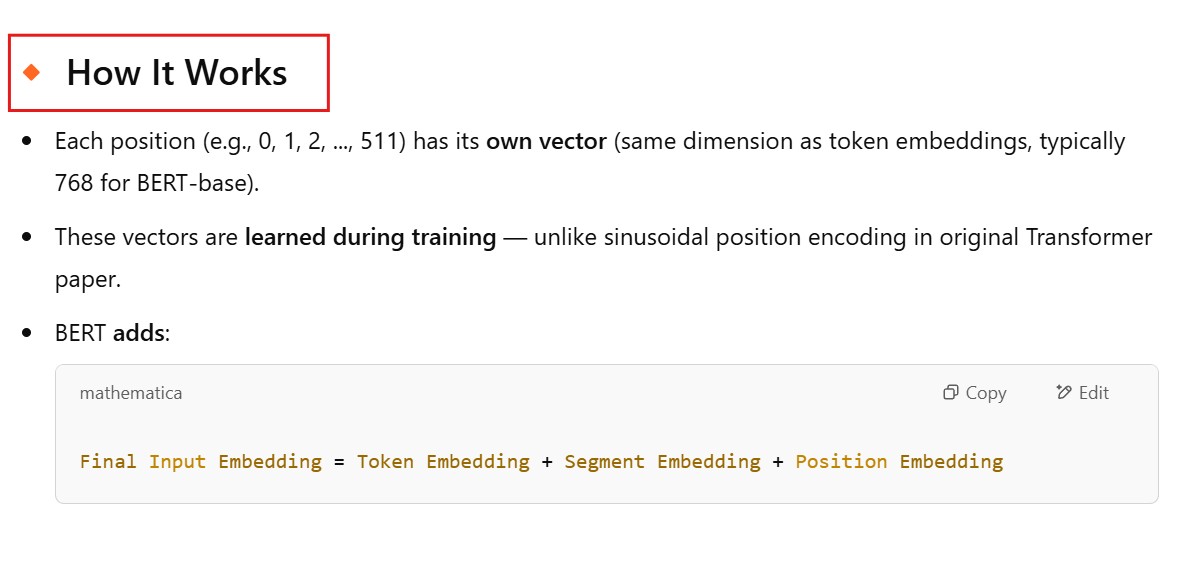

Step-1: Embedding Layer

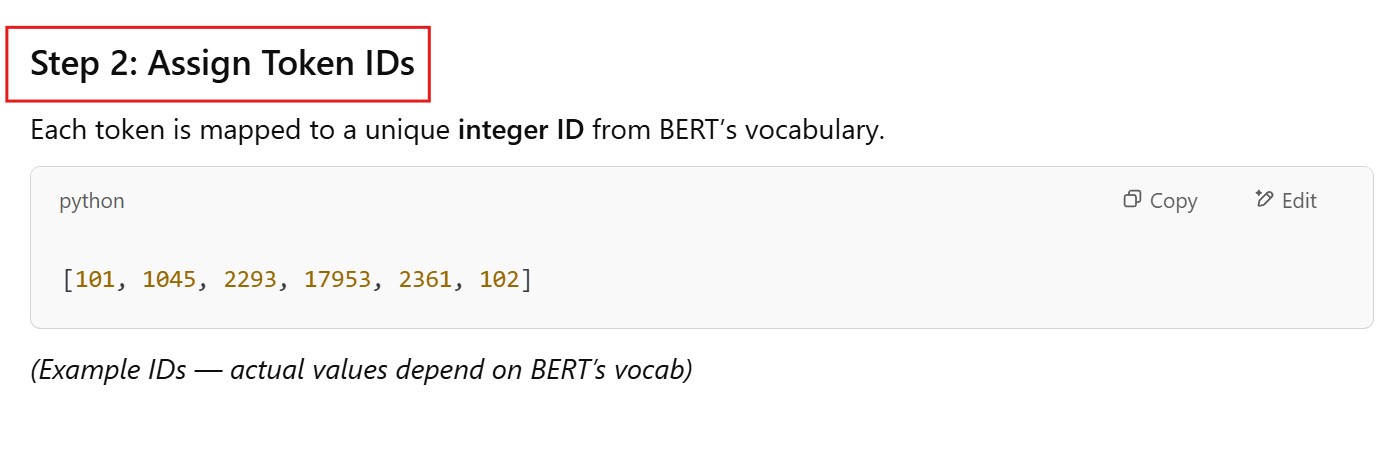

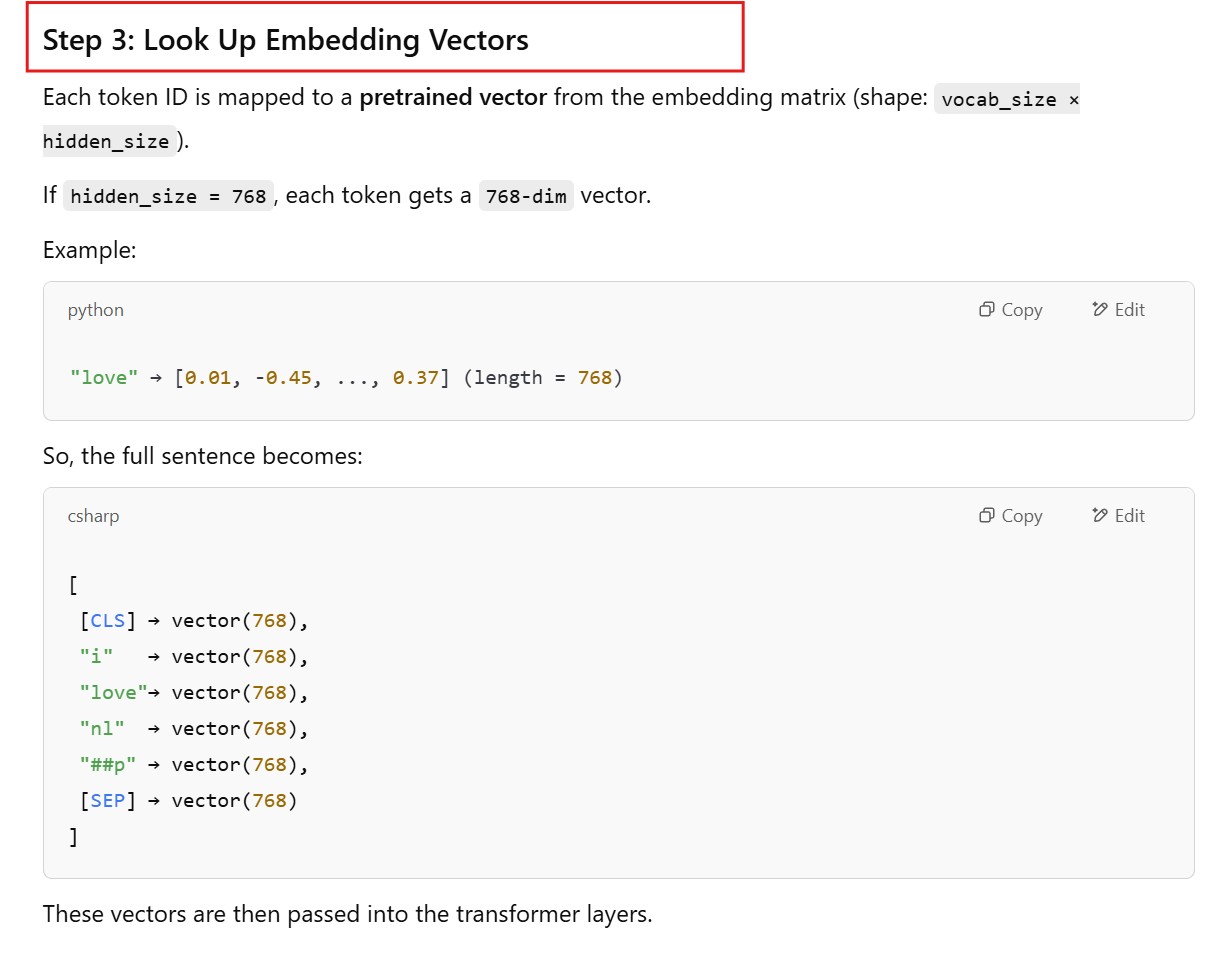

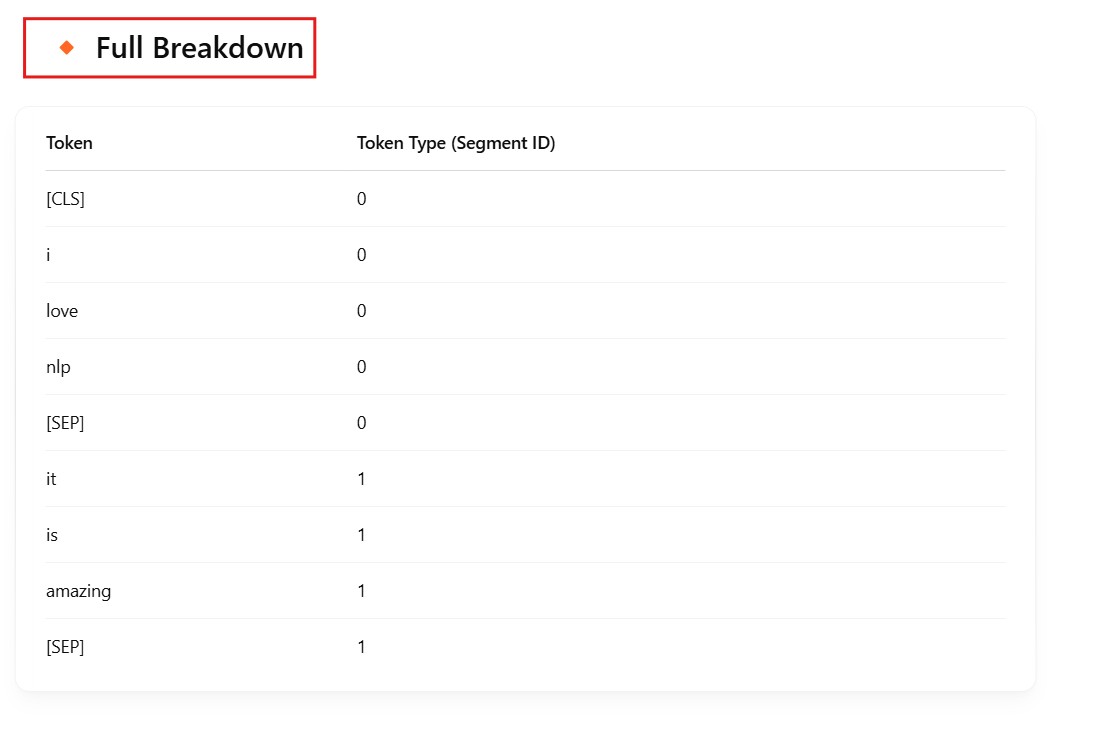

Token Embedding

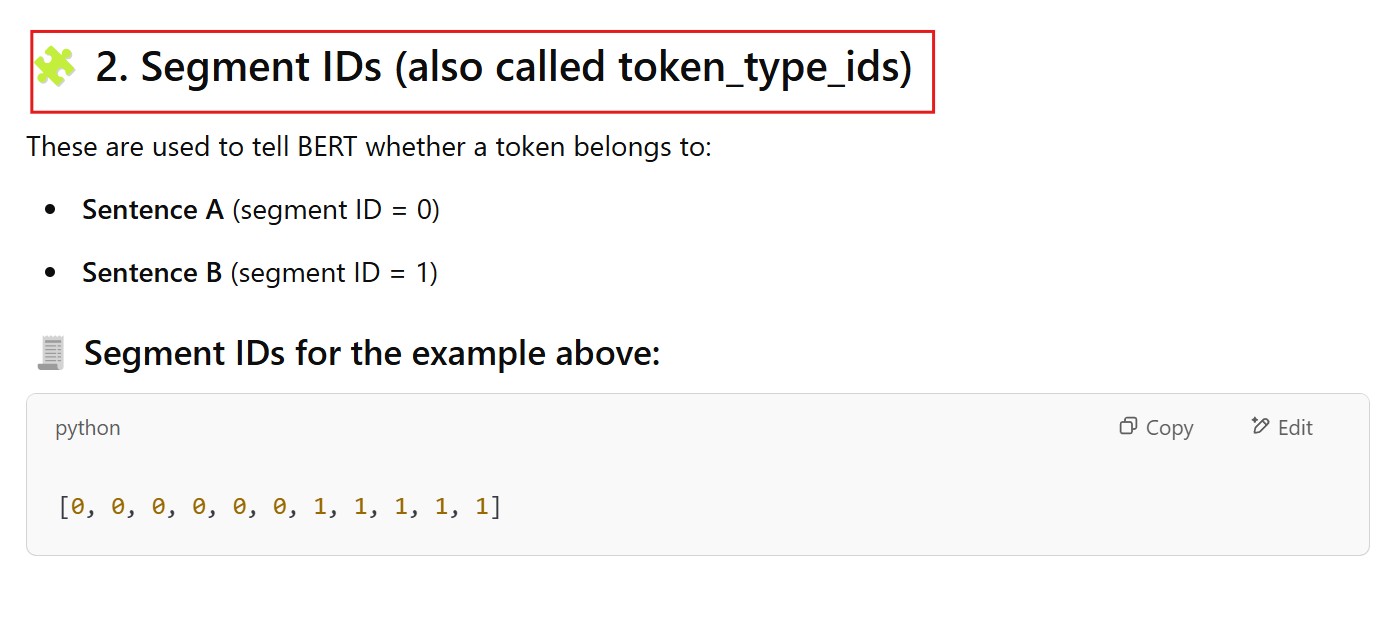

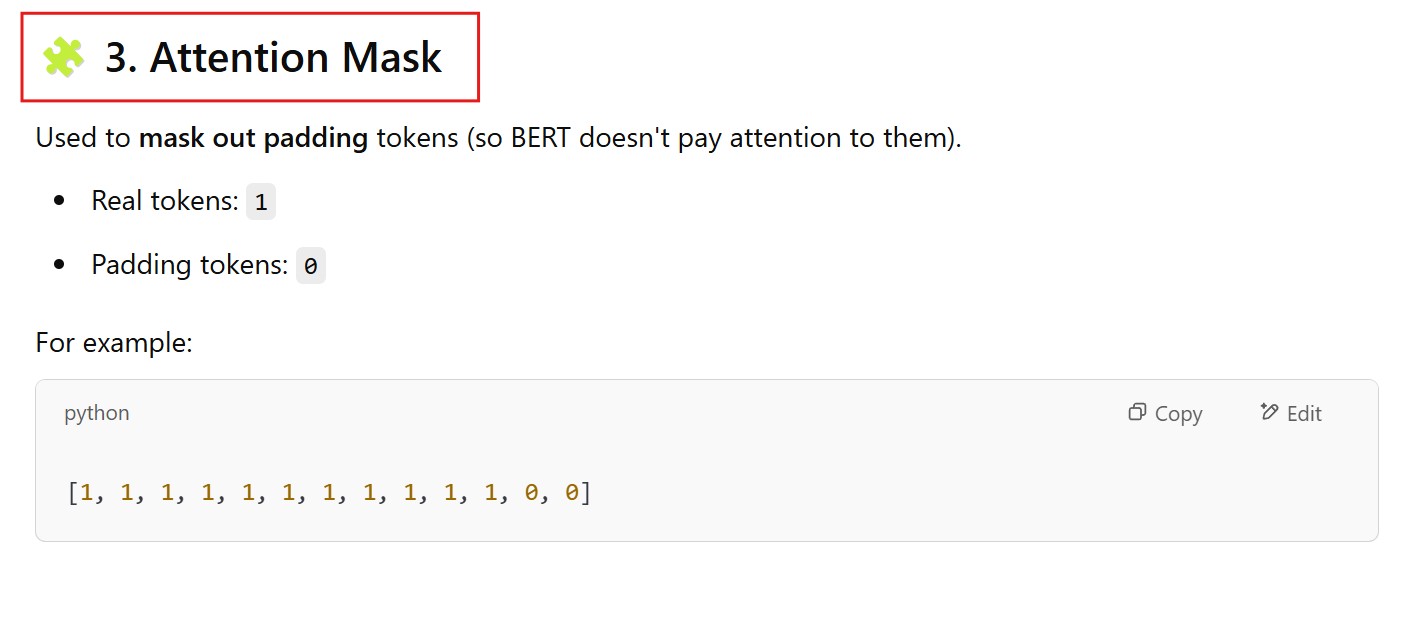

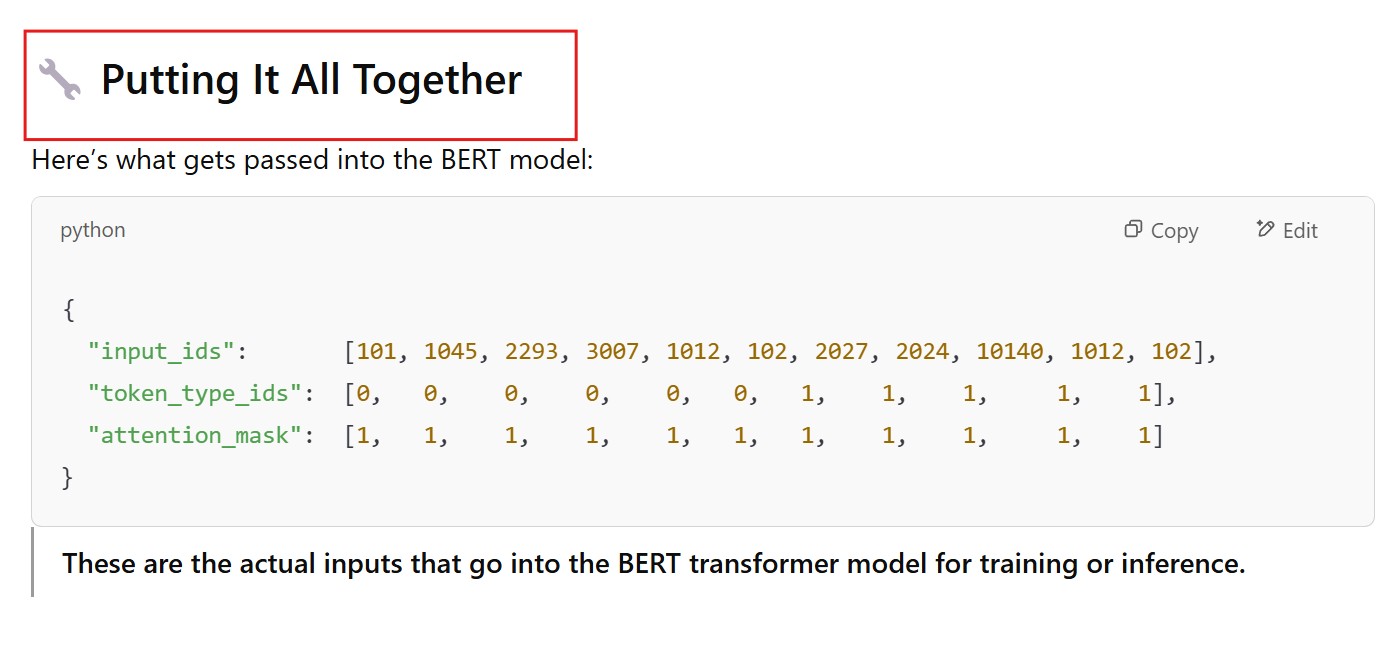

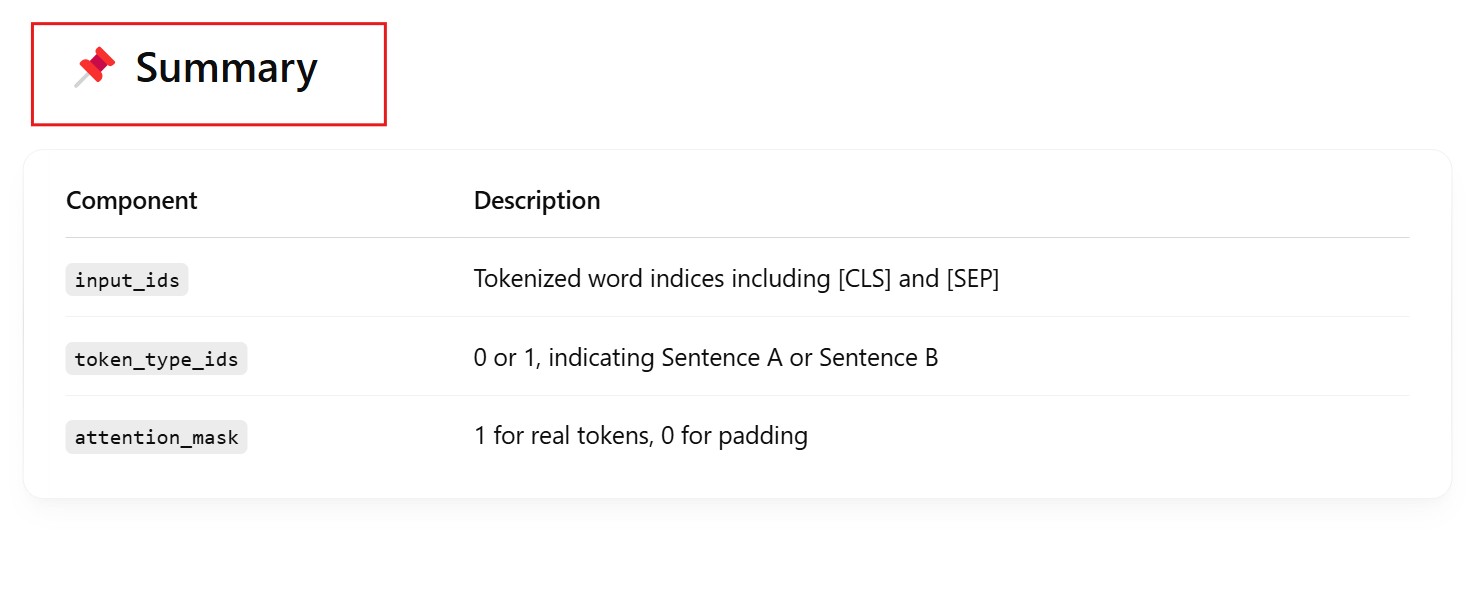

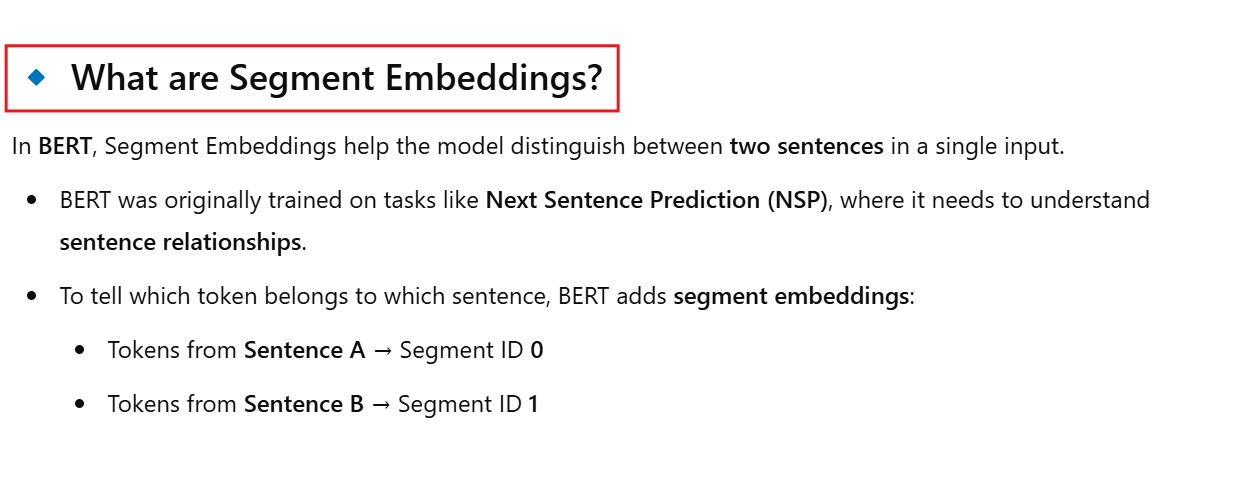

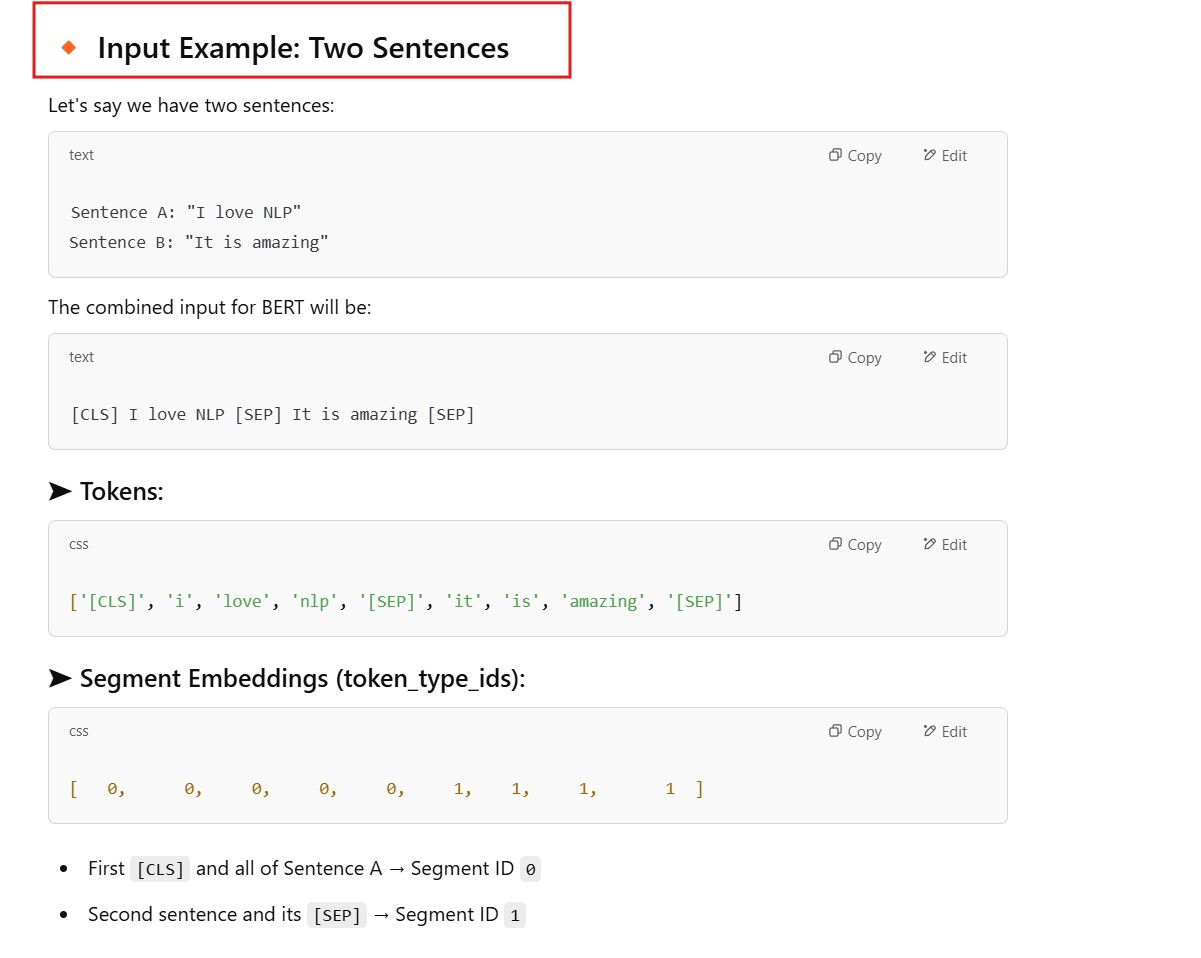
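Before a token can be embedded, the text has to be split into tokens. BERT uses a WordPiece vocabulary, where a word that is not in the vocabulary is broken into smaller known pieces, and continuation pieces are marked with a `##` prefix. The sketch below shows the greedy longest-match-first splitting of a single word. The `toy_vocab` is a made-up vocabulary for illustration (the real one has roughly 30,000 entries), and details such as lowercasing and punctuation splitting are left out.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first split of one word into sub-word pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry ##
            if candidate in vocab:
                match = candidate
                break
            end -= 1  # try a shorter piece
        if match is None:
            return [unk_token]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

# Hypothetical mini vocabulary
toy_vocab = {"the", "play", "##ing", "##ed", "un", "##believ", "##able"}

for word in ["playing", "unbelievable", "xyz"]:
    print(word, "->", wordpiece_tokenize(word, toy_vocab))
```

Each resulting piece is then looked up in the token embedding table, so a rare word still gets a meaningful representation built from its parts.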

Segment Embedding

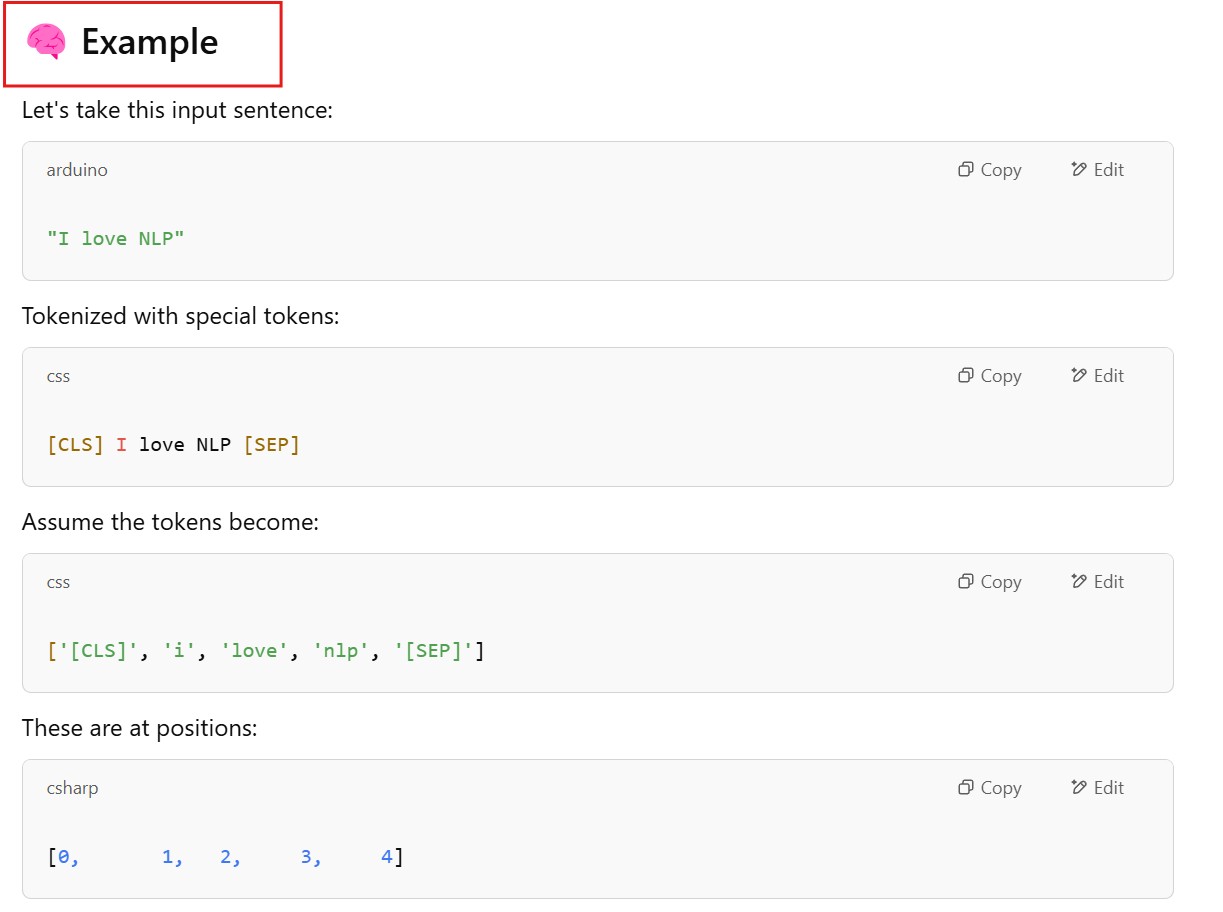

Positional Embedding
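Putting the three embeddings together: for every input token, BERT looks up a token vector, a segment vector (sentence A or sentence B) and a position vector, and adds them element by element. The sketch below does this with small random lookup tables. The hidden size of 8, the tiny vocabulary and the example sentence pair are assumptions for illustration, and the layer normalization and dropout that BERT applies after the sum are left out.

```python
import random

random.seed(0)
HIDDEN = 8  # toy size for readability; BERT Base uses 768

def make_table(rows):
    """A random lookup table with one HIDDEN-sized vector per row."""
    return [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(rows)]

vocab = {"[CLS]": 0, "[SEP]": 1, "my": 2, "dog": 3, "is": 4, "cute": 5,
         "he": 6, "likes": 7, "play": 8, "##ing": 9}
token_table = make_table(len(vocab))  # one vector per vocabulary entry
segment_table = make_table(2)         # sentence A (0) or sentence B (1)
position_table = make_table(16)       # one vector per position in the input

def embed(tokens, segment_ids):
    """Sum token, segment and position vectors for each input token."""
    vectors = []
    for position, (token, segment) in enumerate(zip(tokens, segment_ids)):
        tok = token_table[vocab[token]]
        seg = segment_table[segment]
        pos = position_table[position]
        vectors.append([t + s + p for t, s, p in zip(tok, seg, pos)])
    return vectors

tokens = ["[CLS]", "my", "dog", "is", "cute", "[SEP]",
          "he", "likes", "play", "##ing", "[SEP]"]
segment_ids = [0] * 6 + [1] * 5
input_vectors = embed(tokens, segment_ids)

print(len(input_vectors), "tokens,", len(input_vectors[0]), "dimensions each")
```

Note that the two `[SEP]` tokens share the same token vector but end up with different input vectors, because their segment and position differ.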

Step-2: Transformer Encoder Layer

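- BERT consists of L = 12 (base) or 24 (large) transformer blocks (or layers). Each block includes a multi-head self-attention sublayer followed by a feed-forward network, each wrapped in a residual connection and layer normalization.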

- Each token goes through all layers, getting increasingly rich, contextual representations.
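To show how a token’s representation flows through a stack of blocks, here is a heavily simplified encoder block in plain Python. It is a sketch under several stated assumptions, not BERT’s implementation: it uses a single attention head with no learned query, key or value projections, ReLU instead of BERT’s GELU, no biases, and it reuses the same random weights in every block (real BERT layers each have their own).

```python
import math
import random

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def layer_norm(vector, eps=1e-12):
    mean = sum(vector) / len(vector)
    var = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(var + eps) for x in vector]

def self_attention(tokens):
    """Each token becomes a weighted average of all tokens in the sequence."""
    scale = math.sqrt(len(tokens[0]))
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        weights = [e / sum(exps) for e in exps]
        outputs.append([sum(w * tok[d] for w, tok in zip(weights, tokens))
                        for d in range(len(query))])
    return outputs

def encoder_block(tokens, w_in, w_out):
    attended = self_attention(tokens)
    # Residual connection + layer norm around the attention sublayer
    hidden = [layer_norm([a + b for a, b in zip(t, att)])
              for t, att in zip(tokens, attended)]
    result = []
    for h in hidden:
        expanded = [max(0.0, x) for x in matvec(w_in, h)]  # ReLU; BERT uses GELU
        projected = matvec(w_out, expanded)
        # Residual connection + layer norm around the feed-forward sublayer
        result.append(layer_norm([a + b for a, b in zip(h, projected)]))
    return result

random.seed(0)
HIDDEN, FF = 4, 8  # toy sizes
w_in = [[random.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(FF)]
w_out = [[random.uniform(-0.5, 0.5) for _ in range(FF)] for _ in range(HIDDEN)]
tokens = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(3)]

states = tokens
for _ in range(2):  # two stacked blocks here; BERT Base stacks 12
    states = encoder_block(states, w_in, w_out)
print(len(states), "tokens in,", len(states), "contextual vectors out")
```

The shape never changes from block to block: three token vectors go in and three come out. What changes is that each output vector now mixes in information from every other token.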

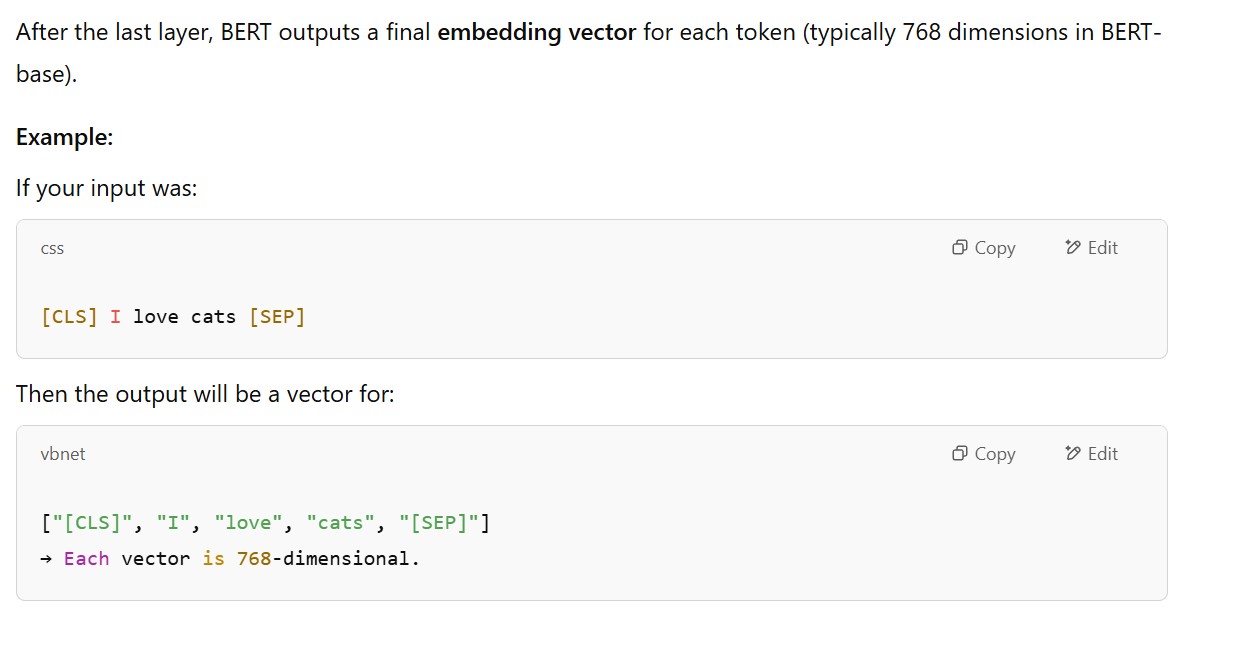

Step-3: Final Hidden States

(9) How BERT Is Pre-Trained ?

- Masked Language Modeling (MLM)

- Next Sentence Prediction (NSP)

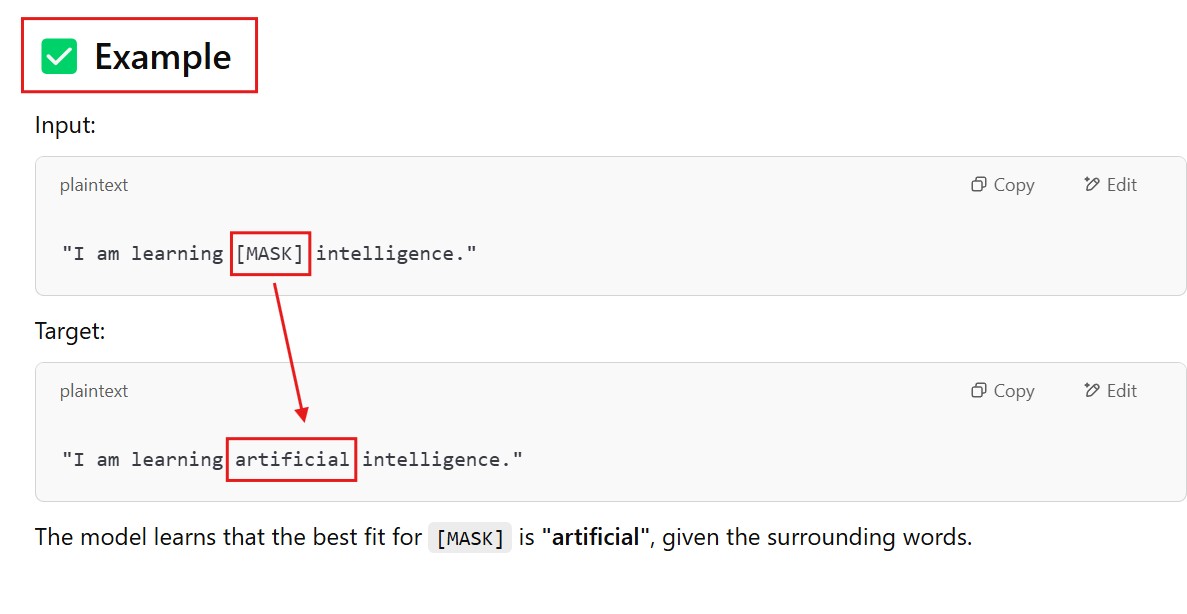

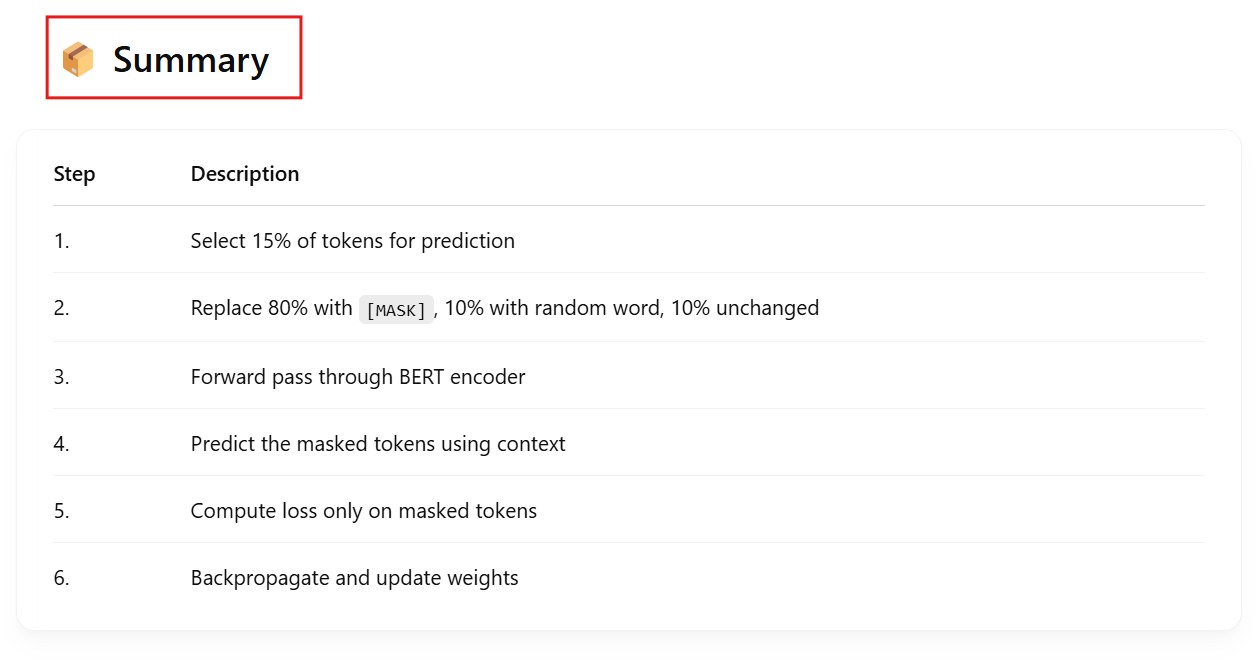

Masked Language Modeling (MLM)

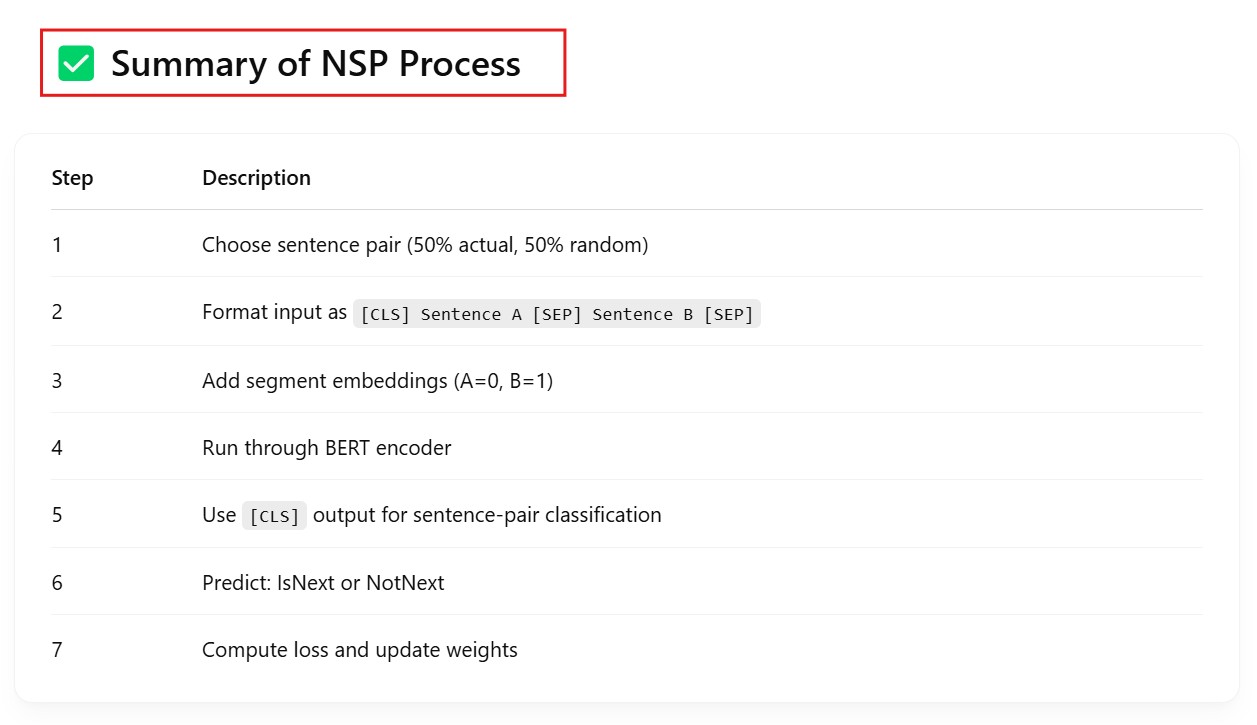
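The masking step can be sketched as a small data-preparation function. The numbers used here come from the original BERT paper rather than from this article: about 15% of tokens are selected as prediction targets, and of those 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The function name `mask_tokens`, the example sentence and the `toy_vocab` list are made up for illustration.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (masked_tokens, labels); labels are None where nothing is predicted."""
    rng = rng or random.Random()
    masked, labels = [], []
    for token in tokens:
        # Special tokens are never selected; others are selected with mask_prob
        if token in ("[CLS]", "[SEP]") or rng.random() >= mask_prob:
            masked.append(token)
            labels.append(None)
            continue
        labels.append(token)  # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            masked.append("[MASK]")            # 80%: hide the token
        elif roll < 0.9:
            masked.append(rng.choice(vocab))   # 10%: swap in a random token
        else:
            masked.append(token)               # 10%: keep it as it is
    return masked, labels

toy_vocab = ["my", "dog", "is", "cute", "cat", "table", "mouse"]
sentence = ["[CLS]", "the", "mouse", "was", "on", "the", "table", "[SEP]"]

masked, labels = mask_tokens(sentence, toy_vocab, rng=random.Random(0))
print(masked)
print(labels)
```

The loss is only computed at positions where the label is not `None`. Because the encoder can see the words on both sides of a hidden position, it has to use the full context to fill in the gap.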

Next Sentence Prediction (NSP):

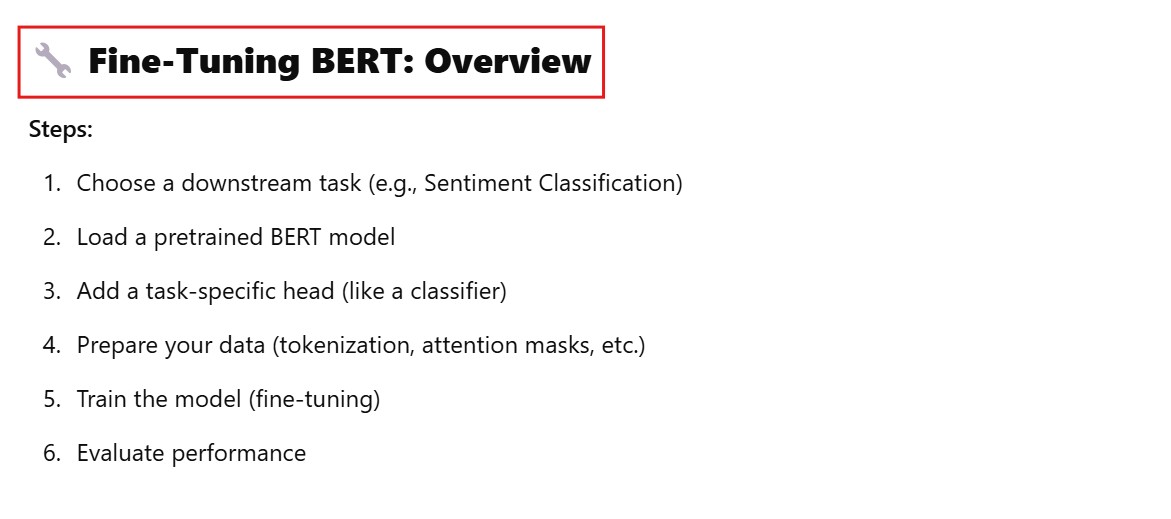

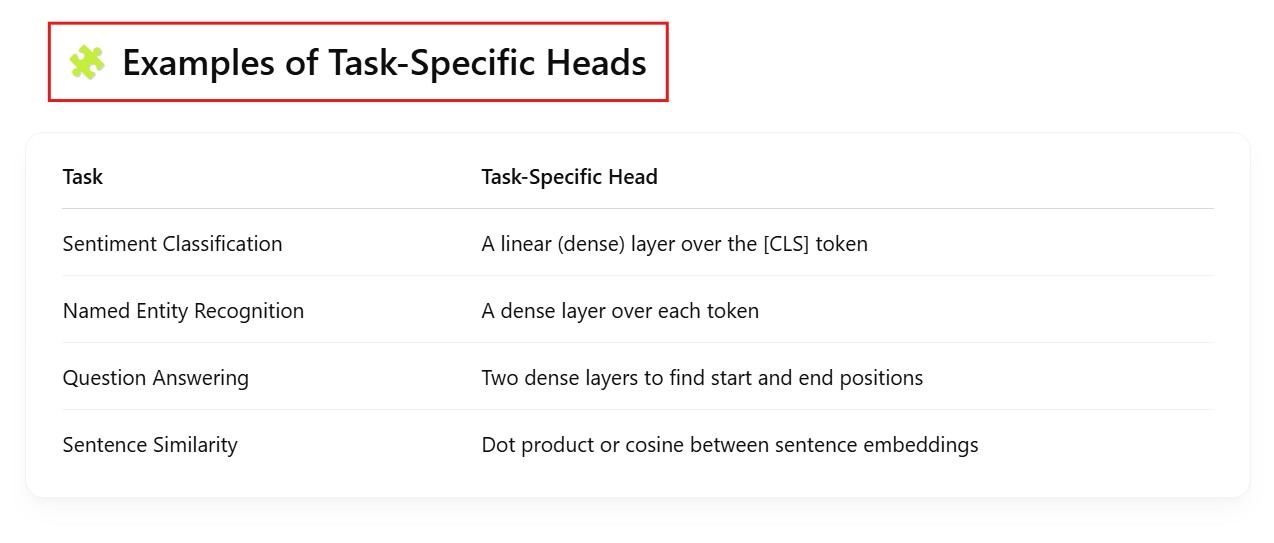
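NSP is a yes/no classification task, so the training data consists of sentence pairs with a label. As described in the original BERT paper, roughly half of the pairs use the sentence that really comes next, and the other half use a random sentence from elsewhere in the corpus. The sketch below builds such pairs; the function names, the label strings and the two tiny documents are assumptions for illustration.

```python
import random

def make_nsp_pairs(documents, rng=None):
    """documents: a list of at least two documents, each a list of sentences."""
    rng = rng or random.Random()
    pairs = []
    for doc_index, sentences in enumerate(documents):
        for i in range(len(sentences) - 1):
            if rng.random() < 0.5:
                second, label = sentences[i + 1], "IsNext"
            else:
                # Pick a random sentence from a different document
                other_docs = [d for j, d in enumerate(documents) if j != doc_index]
                second, label = rng.choice(rng.choice(other_docs)), "NotNext"
            pairs.append((sentences[i], second, label))
    return pairs

def format_pair(first, second):
    """Lay the pair out the way BERT expects its input."""
    return f"[CLS] {first} [SEP] {second} [SEP]"

documents = [
    ["The mouse was on the table.", "The cat saw it.", "The mouse ran away."],
    ["BERT uses the encoder.", "GPT uses the decoder."],
]

pairs = make_nsp_pairs(documents, rng=random.Random(0))
for first, second, label in pairs:
    print(label, "|", format_pair(first, second))
```

During pre-training, the final hidden state of the `[CLS]` token is fed to a small classifier that predicts the label for each pair.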

(10) Fine Tuning BERT

Fine-tuning a BERT model means taking the pretrained BERT and adapting it to a specific downstream NLP task like sentiment analysis, text classification, named entity recognition (NER), question answering, etc.

Let’s break it down step-by-step:

Add A Task-Specific Head

from transformers import BertForSequenceClassification

model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)

- num_labels=2 means the classifier distinguishes between 2 classes.

- Under the hood, this adds a linear layer on top of the [CLS] token embedding like:

output = BERT(input_ids) → shape: [batch_size, seq_len, hidden_size]

cls_output = output[:, 0, :] # take [CLS] token

logits = Linear(hidden_size, num_labels)(cls_output)

- Then apply softmax on the logits to get class probabilities.
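The softmax step itself is small enough to write out by hand. The sketch below turns a pair of hypothetical logits (made-up scores for the `num_labels=2` case) into probabilities and picks the most likely class. In practice you would call the framework’s own softmax on the model output; this version only shows what that call computes.

```python
import math

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for one input sentence: [class 0, class 1]
logits = [2.0, 0.5]

probabilities = softmax(logits)
predicted_class = probabilities.index(max(probabilities))

print([round(p, 3) for p in probabilities], "-> class", predicted_class)
```

The probabilities always sum to 1, and the class with the largest logit always gets the largest probability, so softmax changes the scale of the scores without changing the prediction.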

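# Token-level tasks such as NER: one prediction per token (here 9 possible tags)
from transformers import BertForTokenClassification

model = BertForTokenClassification.from_pretrained("bert-base-uncased", num_labels=9)

# Extractive question answering: predicts where the answer starts and ends in the context
from transformers import BertForQuestionAnswering

model = BertForQuestionAnswering.from_pretrained("bert-base-uncased")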

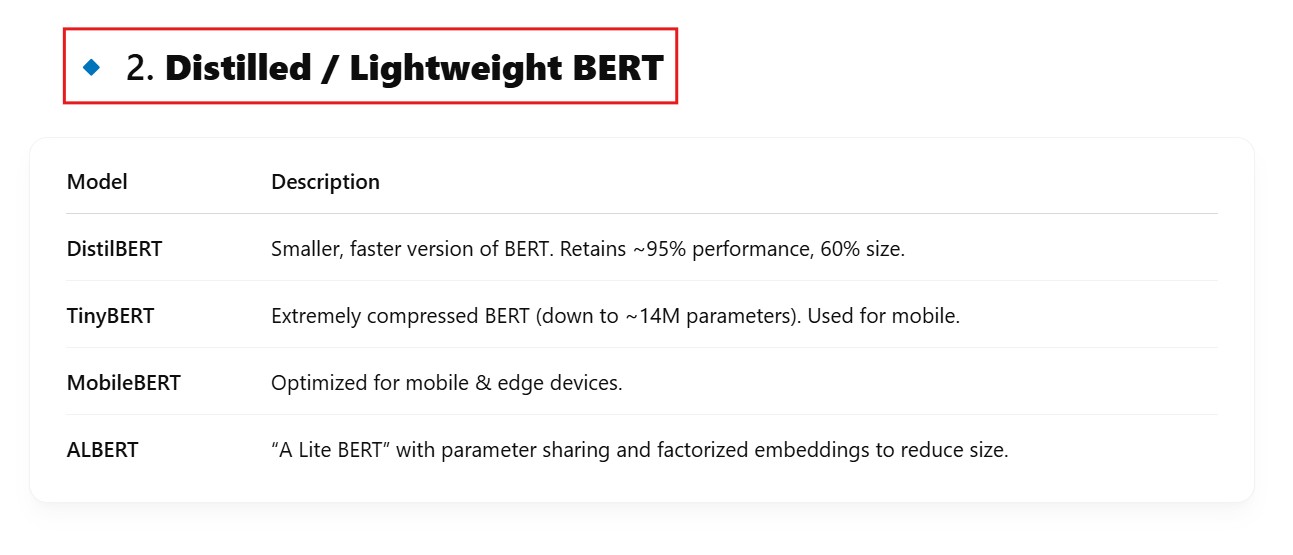

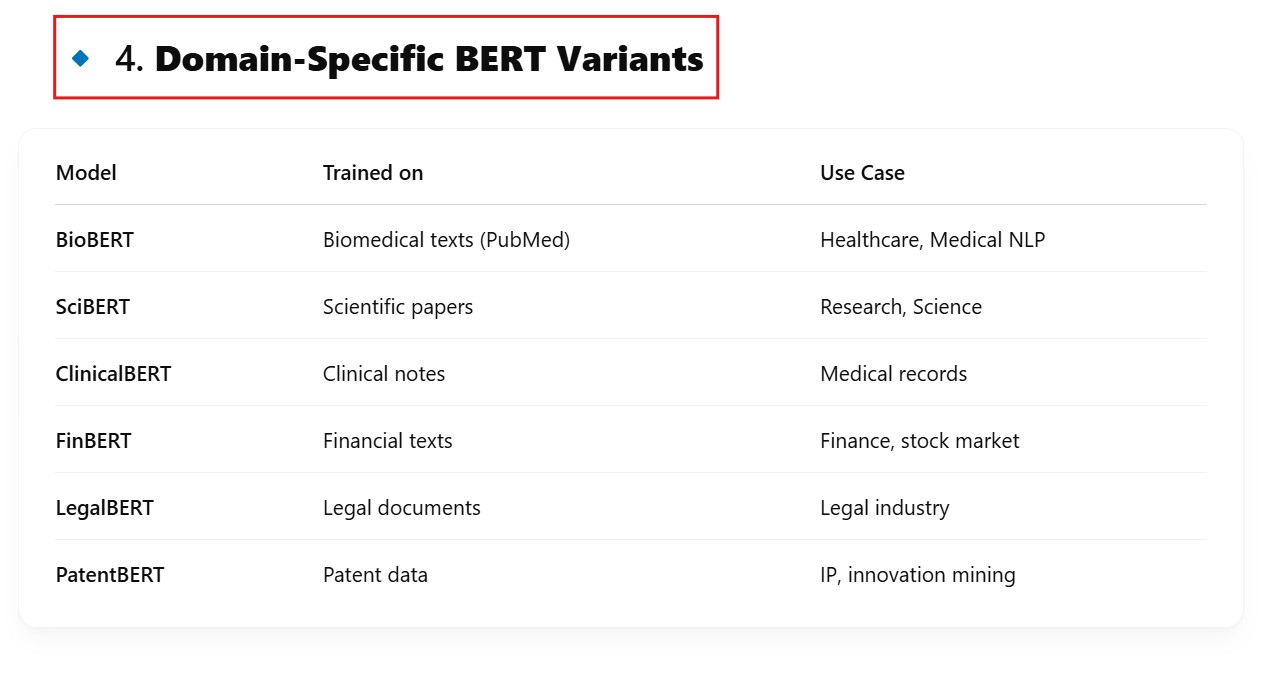

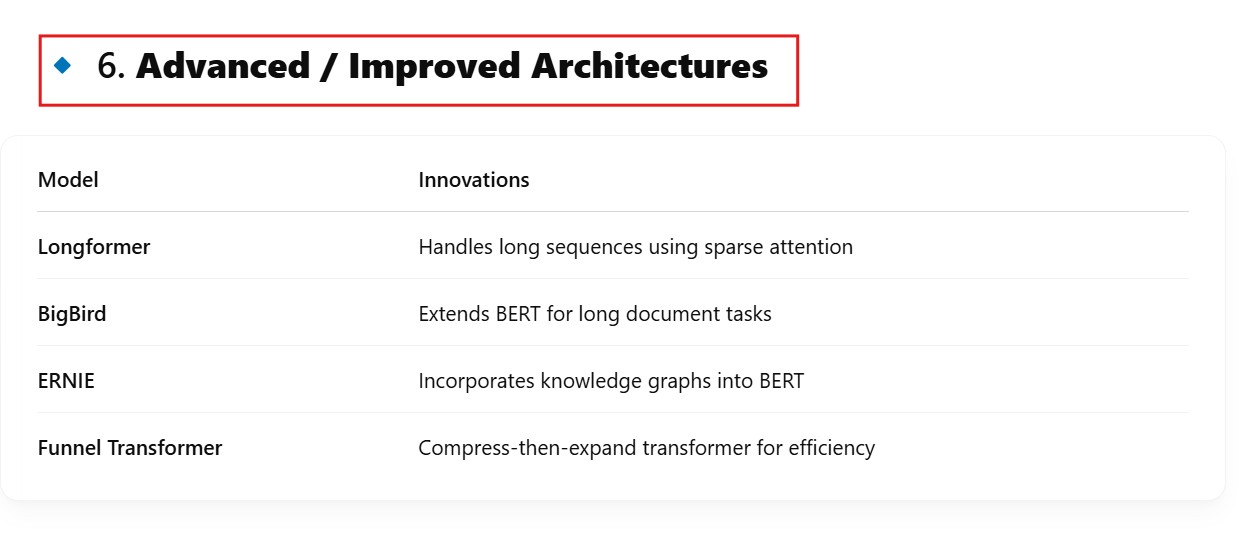

(11) Variant Of BERT Model