GenAI – Types Of Prompting Techniques.

Table Of Contents:

- Zero Shot Prompting.

- Few Shot Prompting.

- Chain Of Thought Prompting.

- Role Prompting.

- System Prompting.

- Instruction Tuning.

- Self-Consistency Prompting.

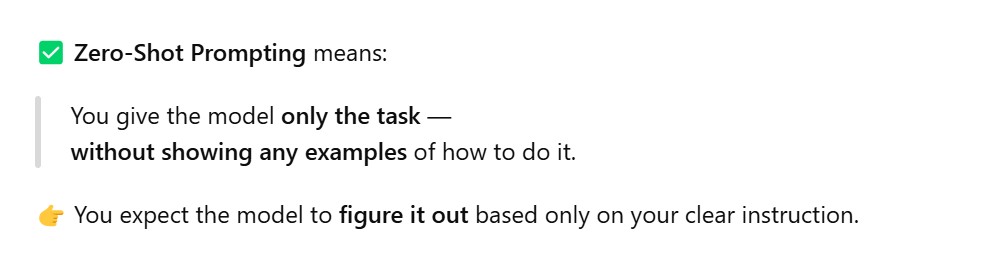

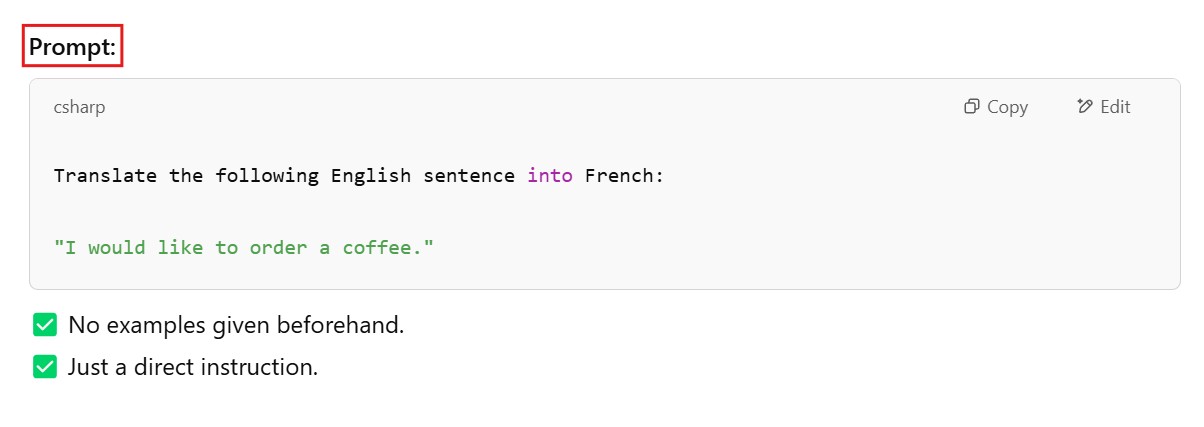

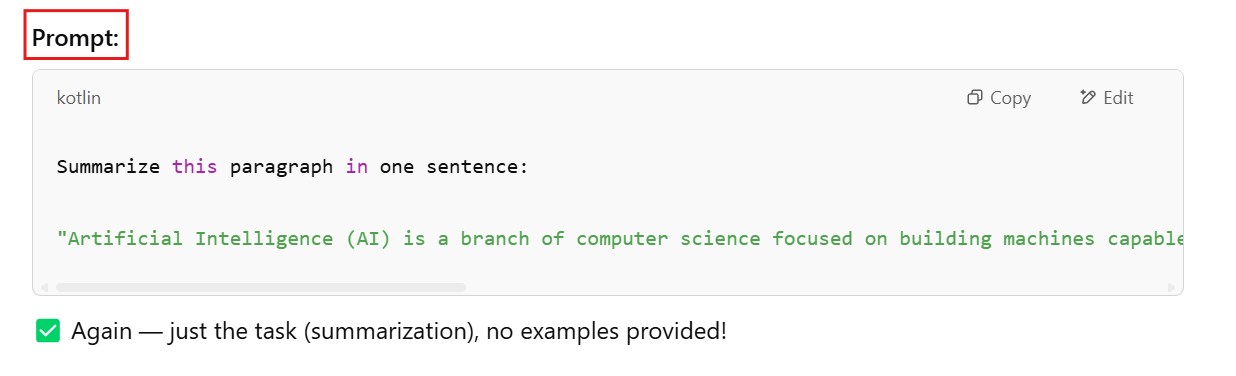

(1) Zero Shot Prompting

Example – 1

Example – 2

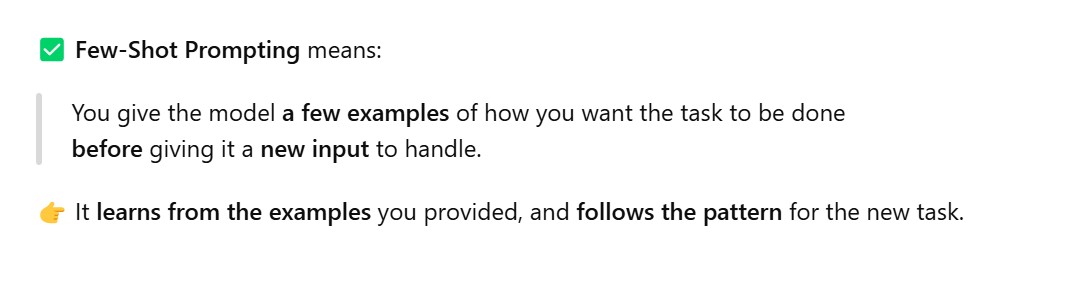

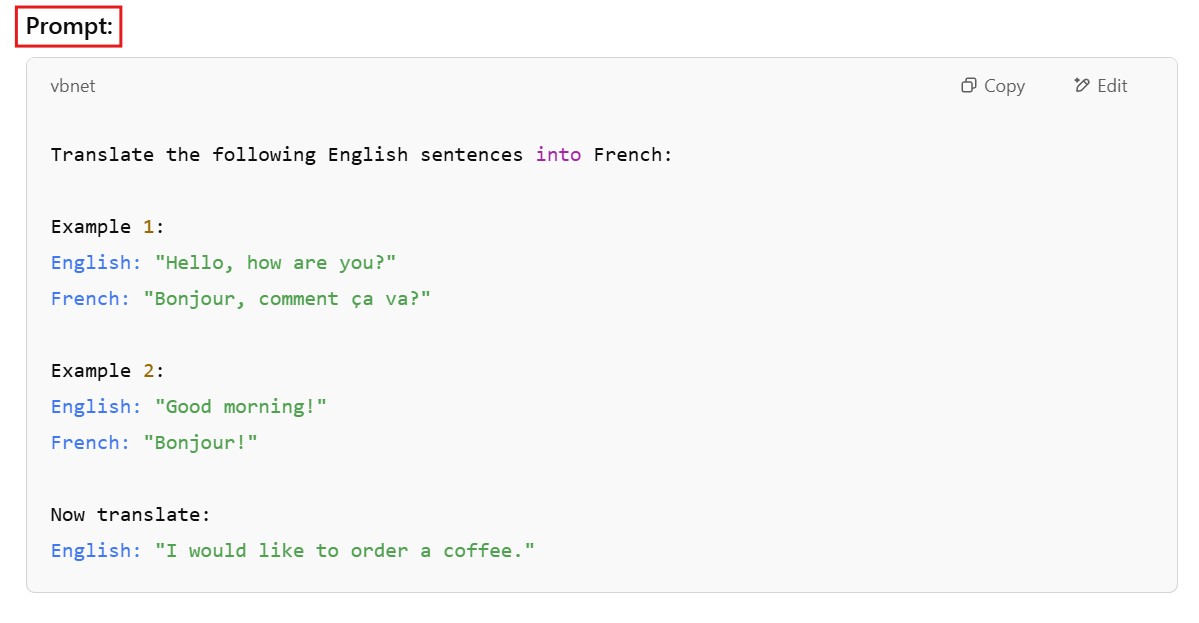

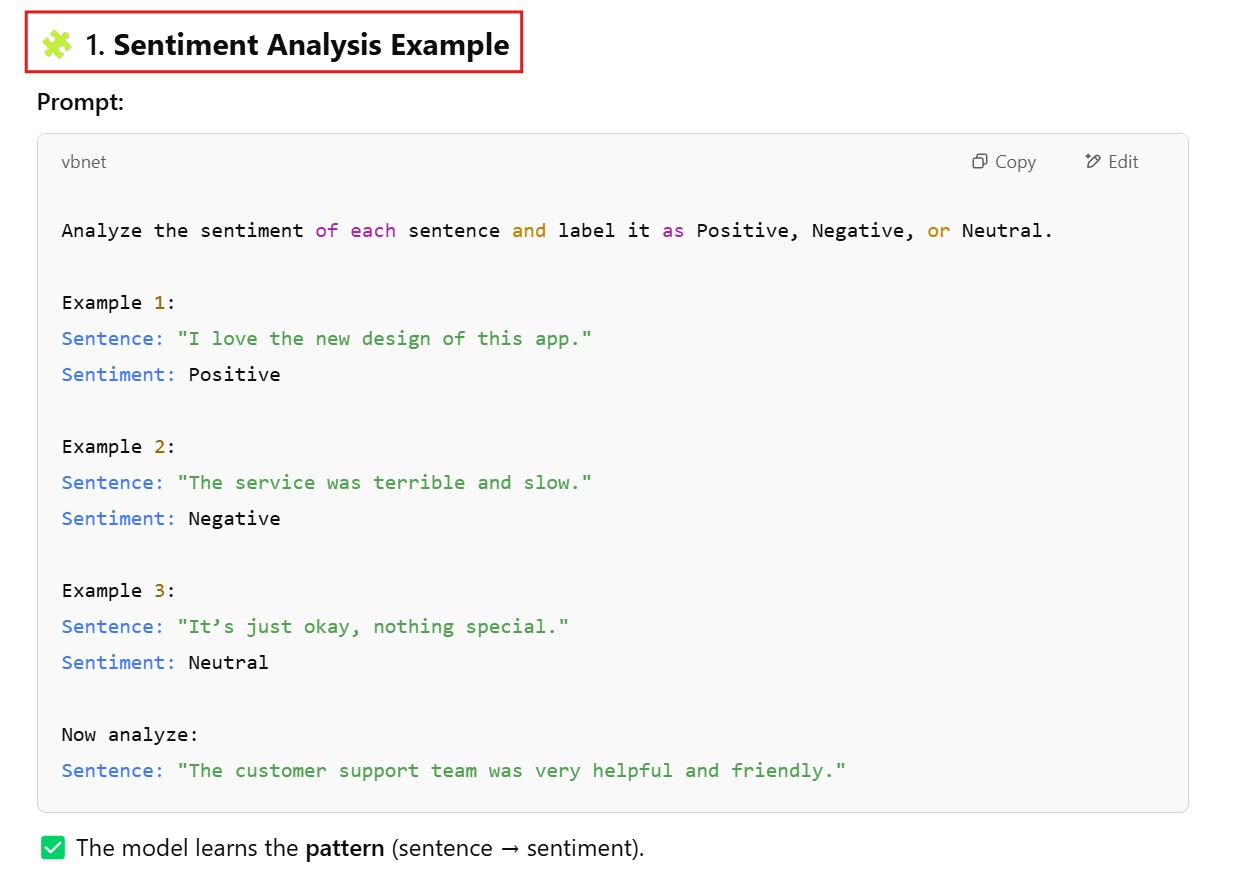

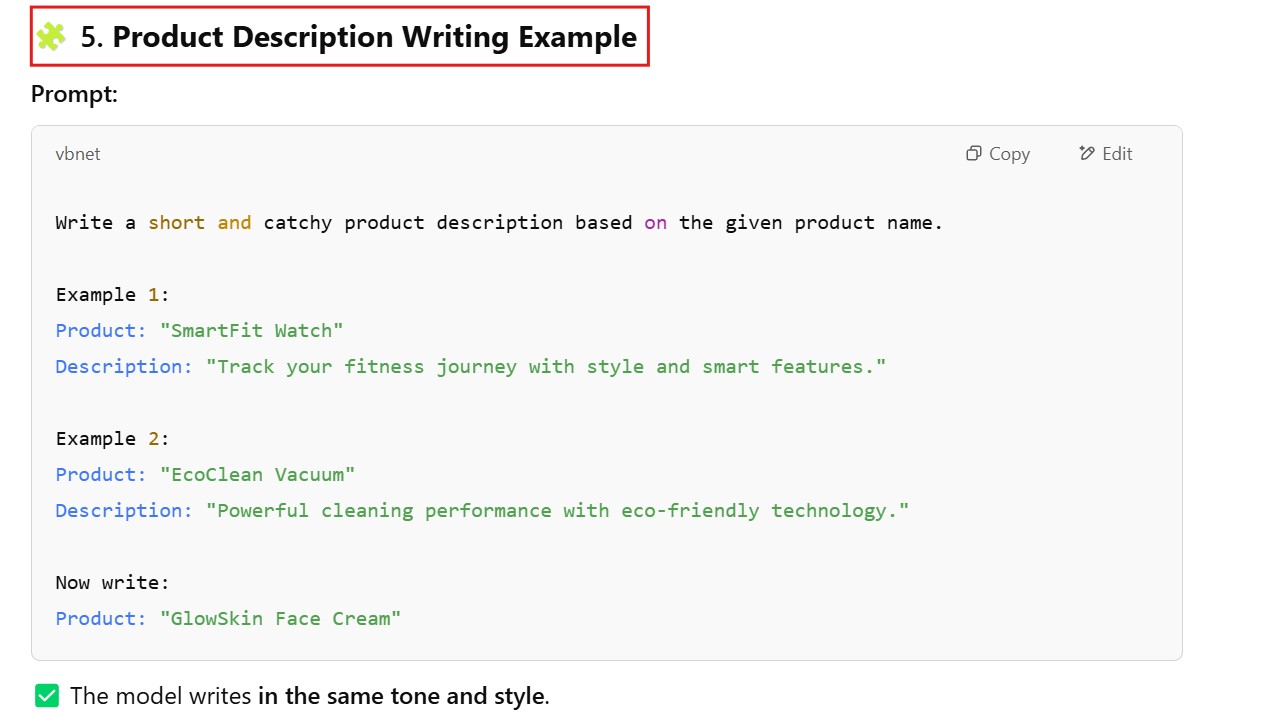
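
As a rough illustration of zero shot prompting, the sketch below builds a prompt that states the task directly and gives the model no solved examples. The helper name `build_zero_shot_prompt` and the sentiment task are assumptions made for this illustration, not something taken from the examples above.

```python
def build_zero_shot_prompt(task: str, text: str) -> str:
    """Return a prompt that states the task directly, with no solved examples."""
    return f"{task}\n\nText: {text}\nAnswer:"


# Hypothetical task and input, chosen only for illustration.
prompt = build_zero_shot_prompt(
    "Classify the sentiment of the text as Positive, Negative, or Neutral.",
    "The delivery was late and the box was damaged.",
)
print(prompt)
```

The resulting string would be sent to the model as the user message; the model has to rely entirely on what it learned during training.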

(2) Few Shot Prompting

Example – 1

Example – 2

Example – 3

Example – 4

Example – 5

Example – 6

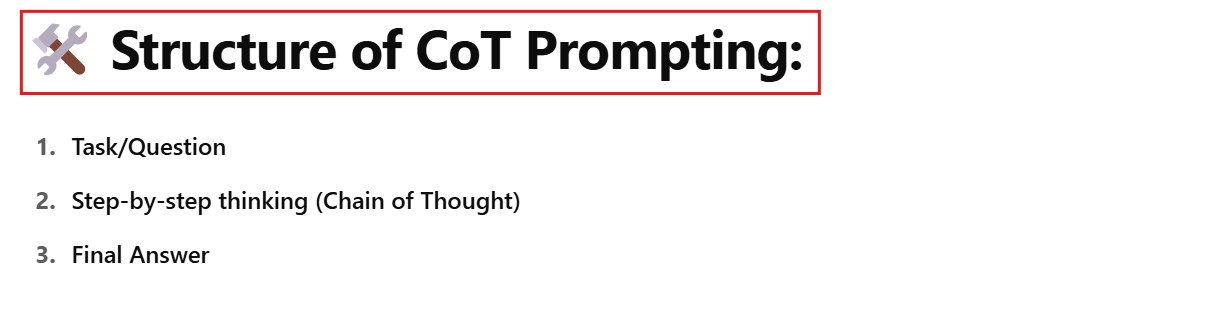

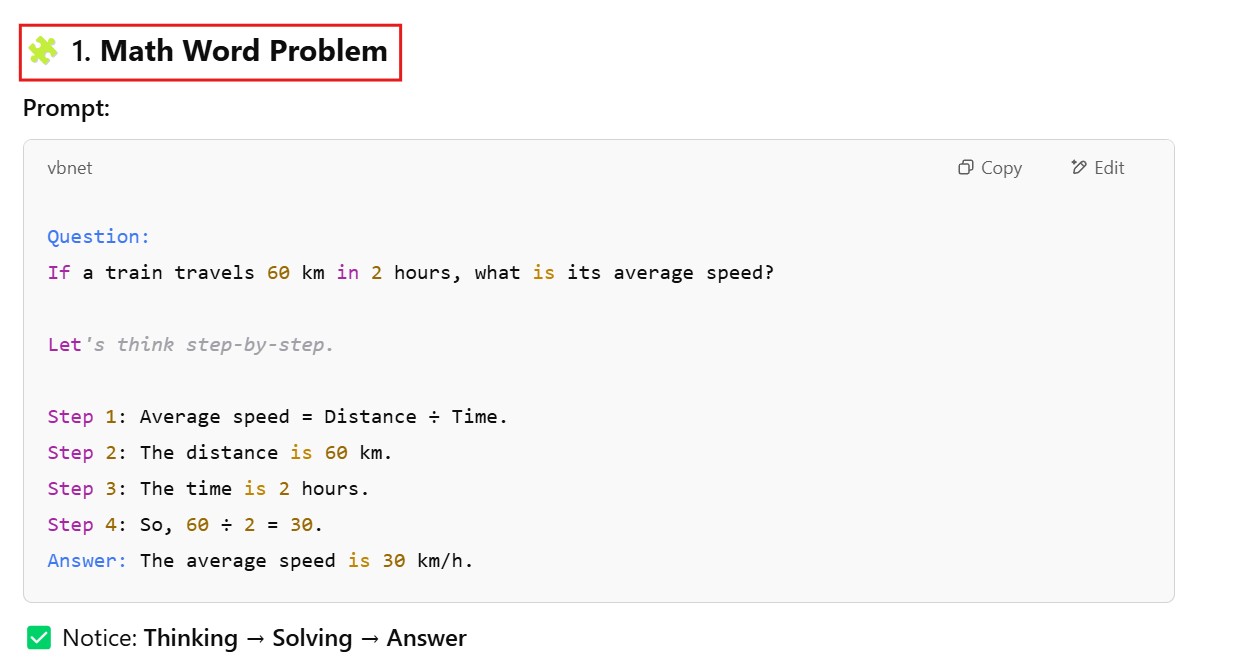

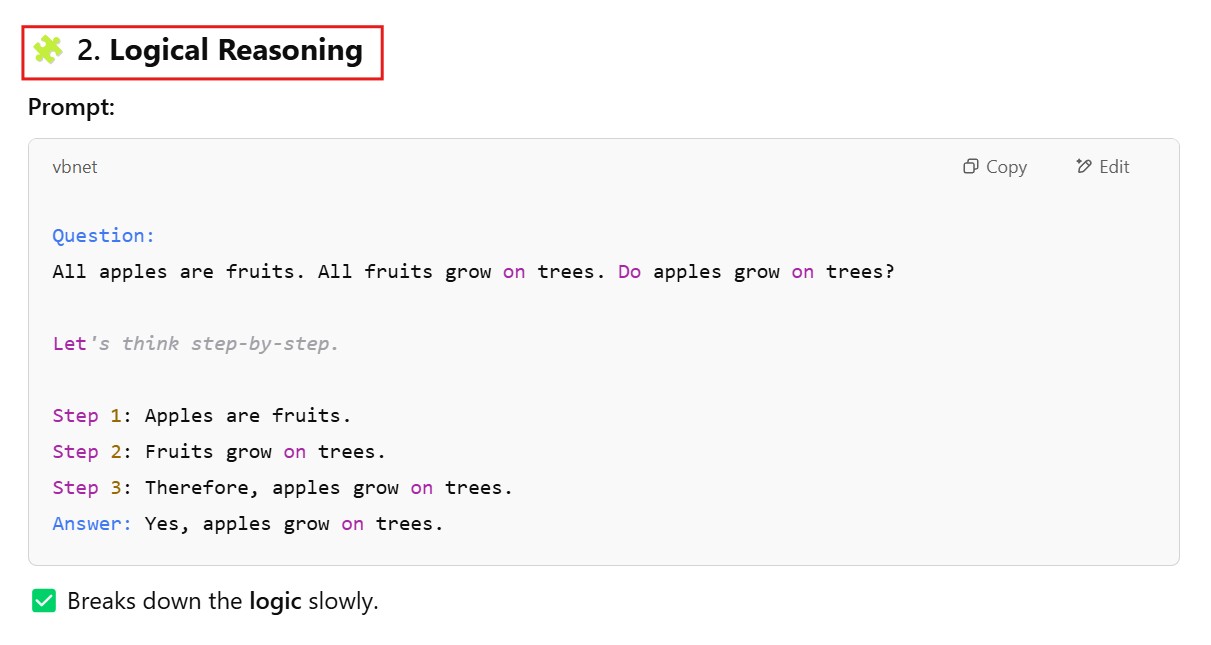

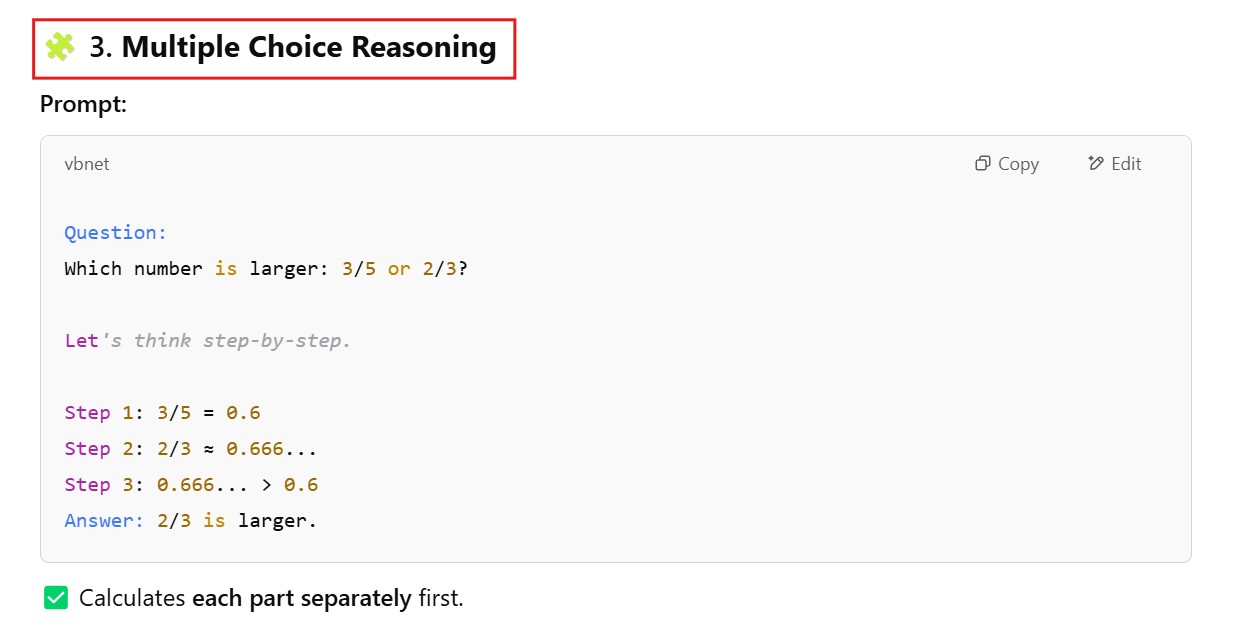
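
A minimal sketch of few shot prompting, assuming a simple sentiment task: a handful of solved examples are placed before the real question so the model can copy the pattern. The helper `build_few_shot_prompt` and the example texts are hypothetical.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Return a prompt with solved examples followed by the unsolved query."""
    lines = [task, ""]
    for text, label in examples:
        # Each solved example shows the model the expected input/output format.
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The query uses the same format but leaves the label for the model to fill in.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("I love this phone.", "Positive"),
    ("The battery died in a day.", "Negative"),
    ("It arrived on Tuesday.", "Neutral"),
]
few_shot_prompt = build_few_shot_prompt(
    "Classify the sentiment of each text.", examples, "The screen is gorgeous."
)
print(few_shot_prompt)
```

Compared with a zero shot prompt, the only difference is the block of solved examples; the task sentence and the final question stay the same.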

(3) Chain Of Thought Prompting

Example – 1

Example – 2

Example – 3

Example – 4

Example – 5

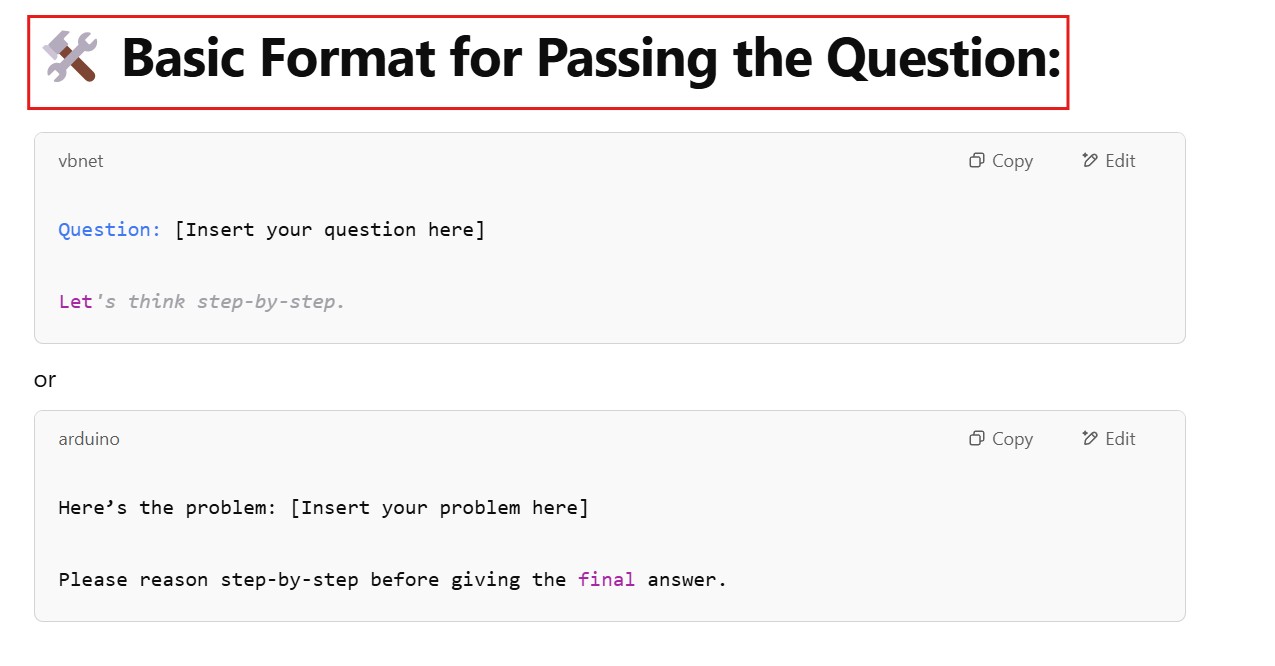

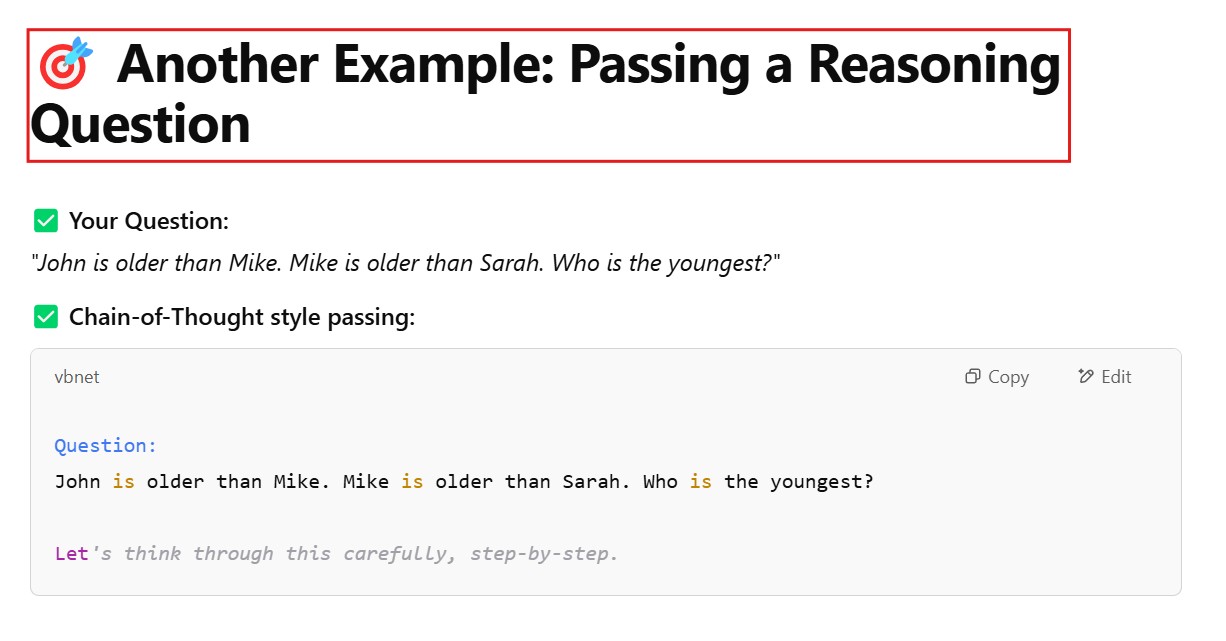

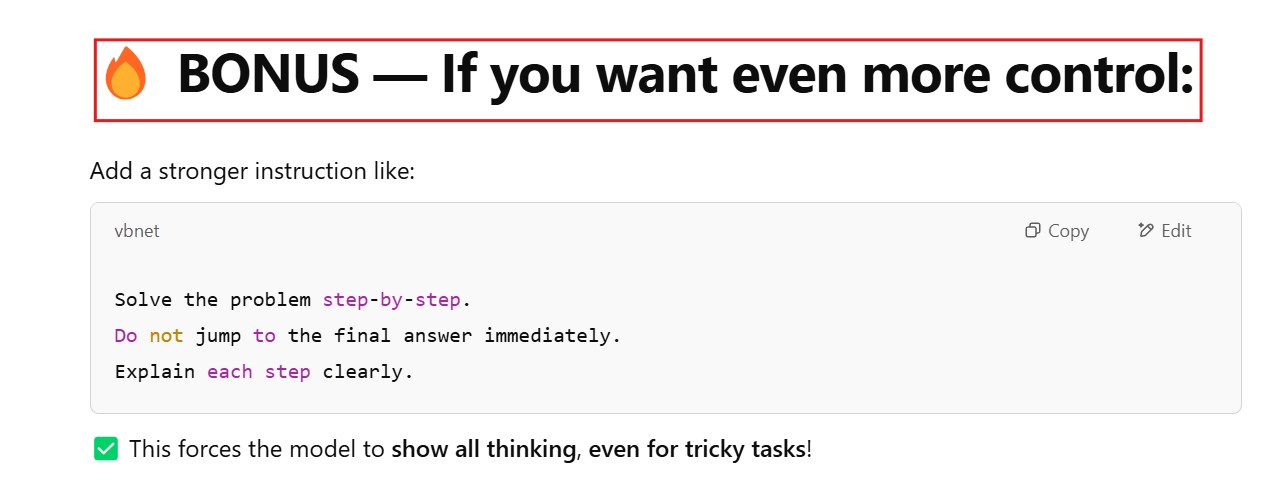

How Do You Pass Questions To The LLM In Chain Of Thought Prompting?

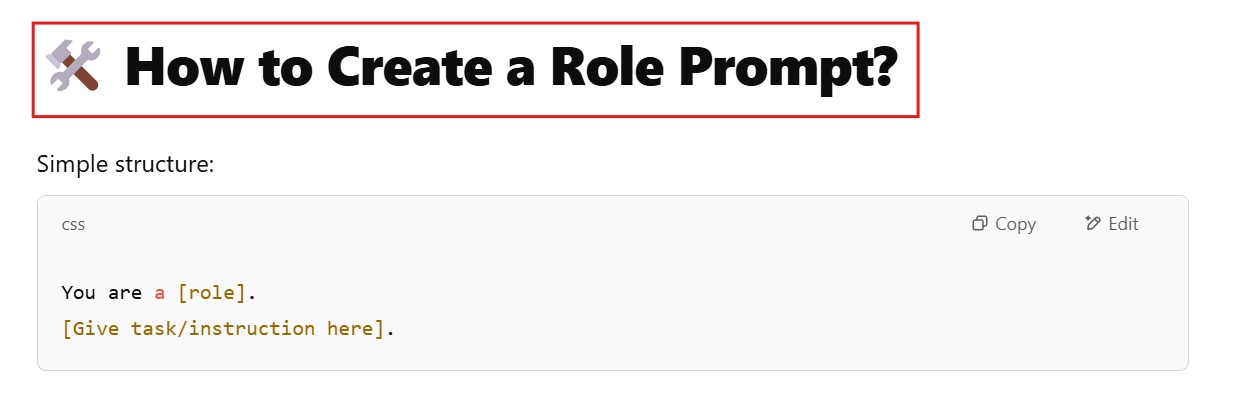

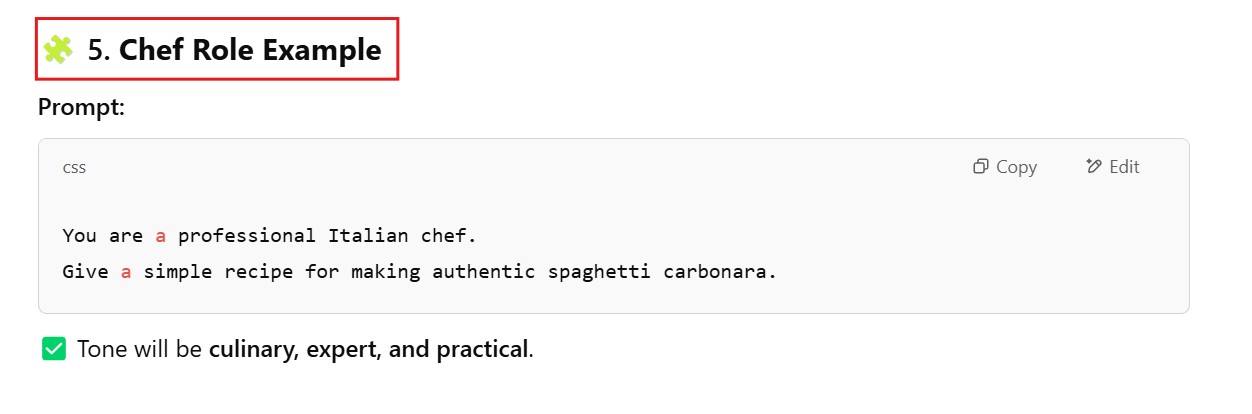

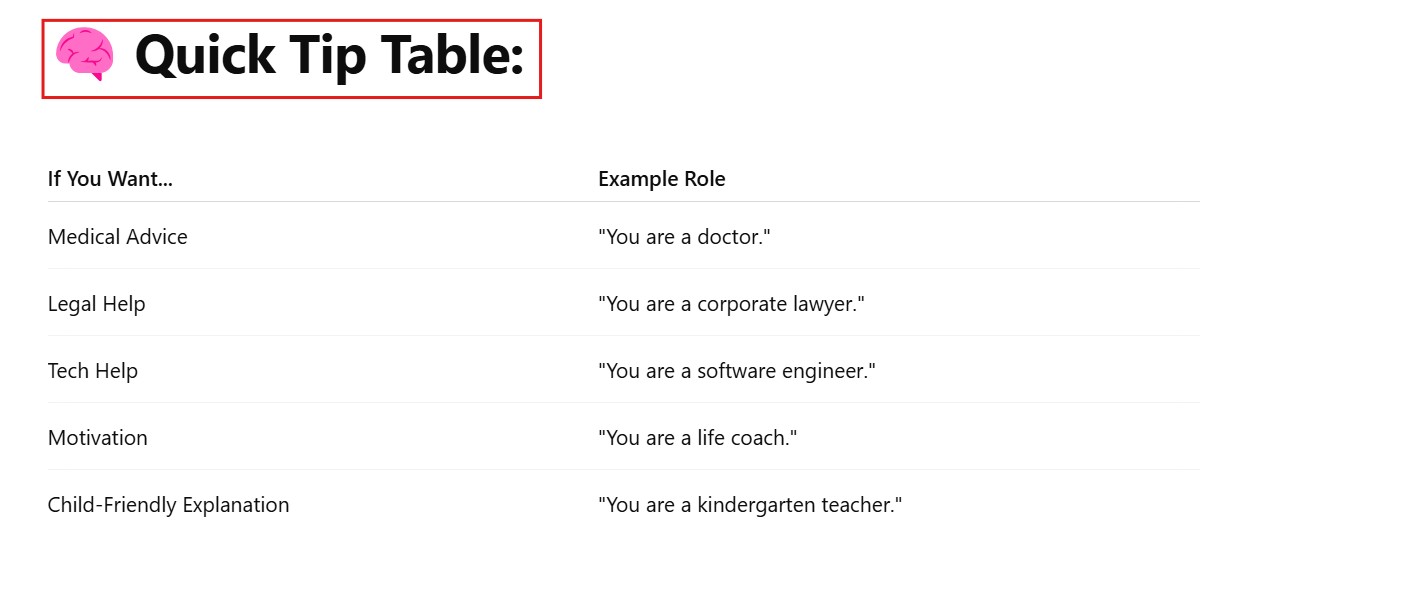

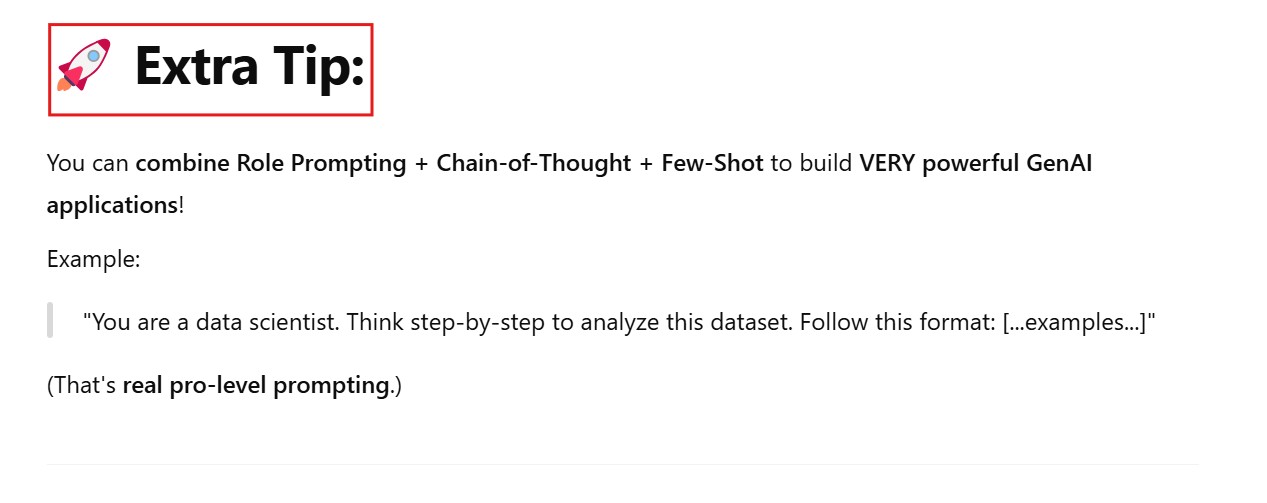
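
One way to pass a question in this style can be sketched as follows. The trigger phrase "Let's think step by step." is the one used in the self-consistency example later in these notes; the helper `build_cot_prompt` and the worked train example are assumptions for illustration.

```python
from typing import Optional, Tuple

COT_TRIGGER = "Let's think step by step."


def build_cot_prompt(question: str, worked_example: Optional[Tuple[str, str]] = None) -> str:
    """Build a step-by-step reasoning prompt, optionally preceded by one worked example."""
    parts = []
    if worked_example is not None:
        example_question, example_reasoning = worked_example
        # The worked example shows the model the step-by-step style to imitate.
        parts.append(f"Q: {example_question}\nA: {example_reasoning}")
    # The trigger phrase asks the model to reason before giving its answer.
    parts.append(f"Q: {question}\nA: {COT_TRIGGER}")
    return "\n\n".join(parts)


question = "A car moves 40 km/h for 3 hours. How far?"
zero_shot_cot = build_cot_prompt(question)
few_shot_cot = build_cot_prompt(
    question,
    worked_example=(
        "A train moves 60 km/h for 2 hours. How far?",
        "Distance = speed * time = 60 * 2 = 120 km. The answer is 120 km.",
    ),
)
print(few_shot_cot)
```

The first variant only adds the trigger phrase; the second also shows one fully reasoned answer, which combines few shot prompting with step-by-step reasoning.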

(4) Role Prompting

You are a [role].

[Give task/instruction here].
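
The template above can be filled in with a small helper. This is only a sketch; the function name `build_role_prompt` and the sample role and task are hypothetical.

```python
def build_role_prompt(role: str, task: str) -> str:
    """Fill the 'You are a [role]. [task]' template."""
    return f"You are a {role}.\n\n{task}"


# Hypothetical role and task, chosen only for illustration.
role_prompt = build_role_prompt(
    "senior Python developer",
    "Review this function and point out any bugs.",
)
print(role_prompt)
```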

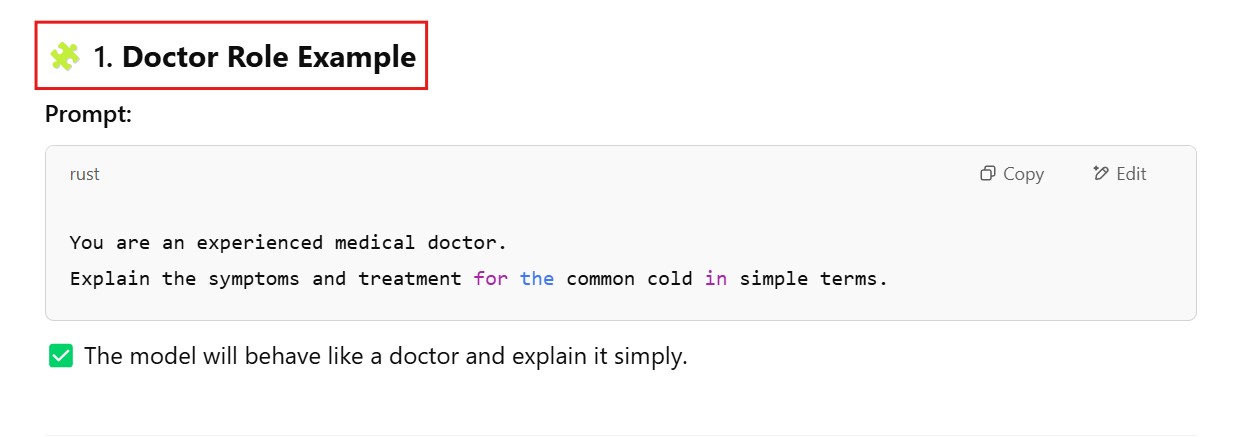

Example – 1:

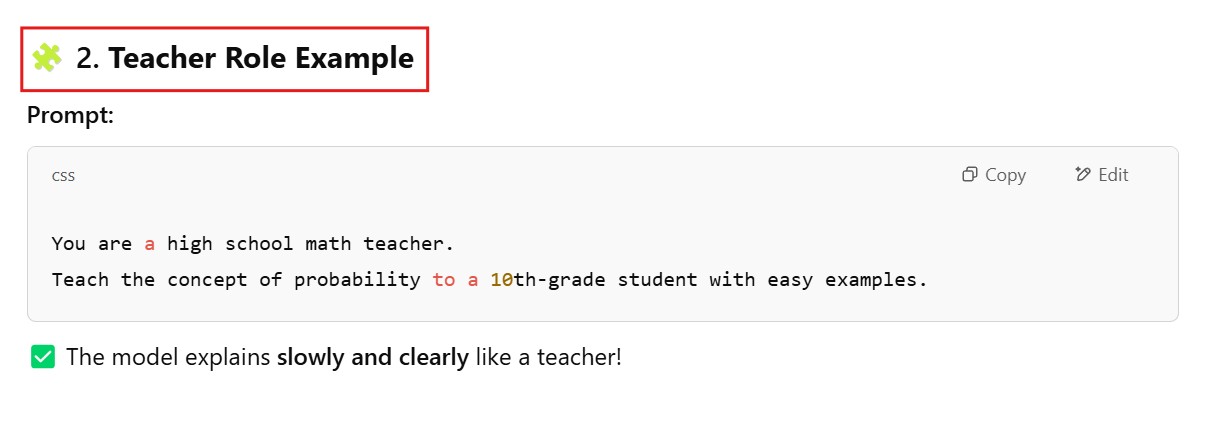

Example – 2:

Example – 3:

Example – 4:

Example – 5:

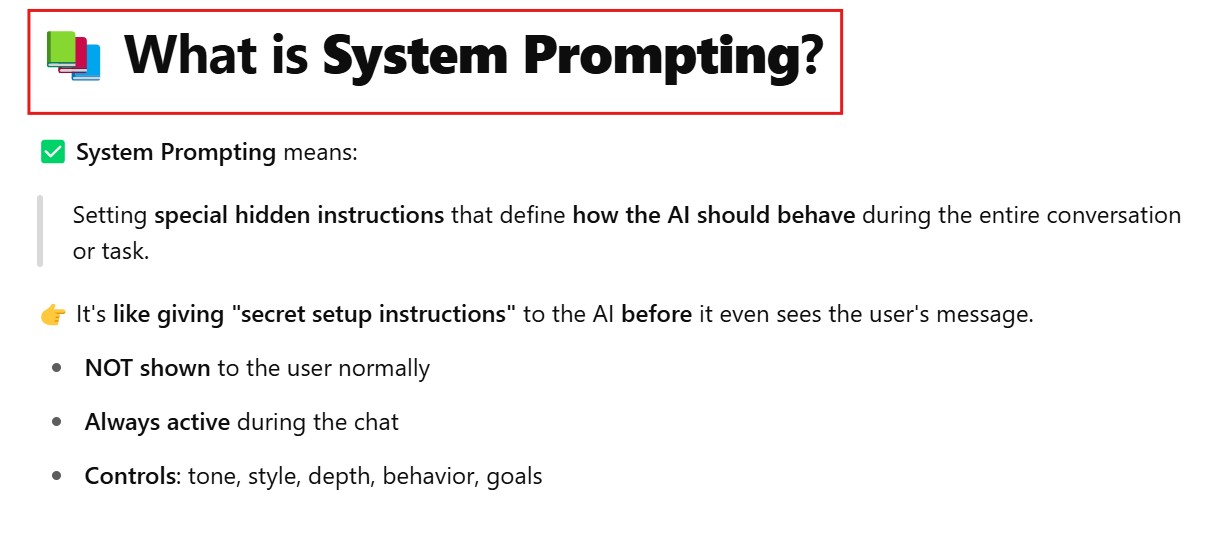

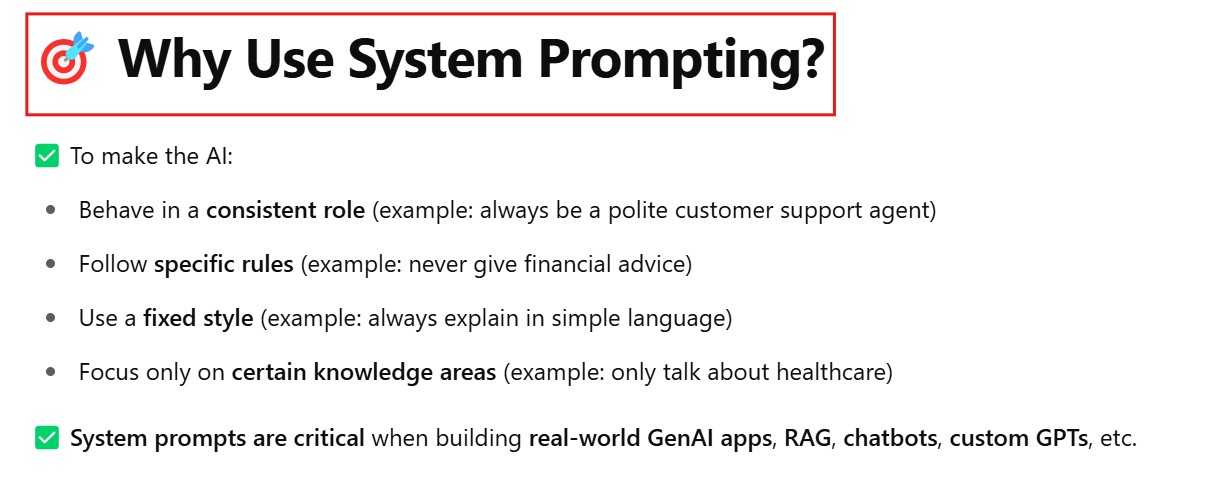

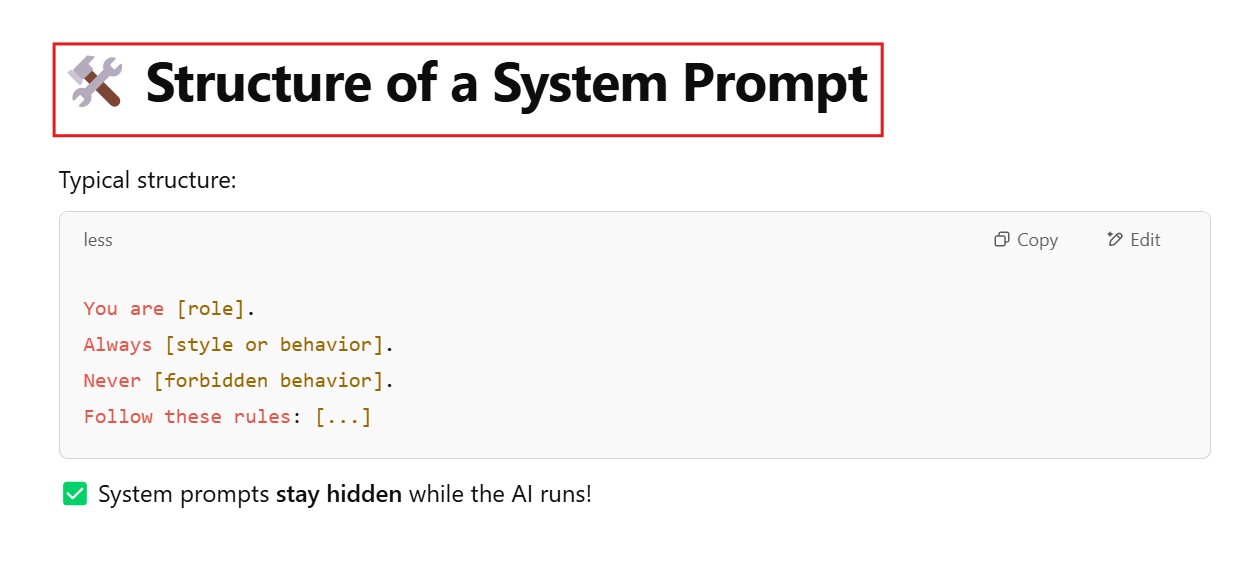

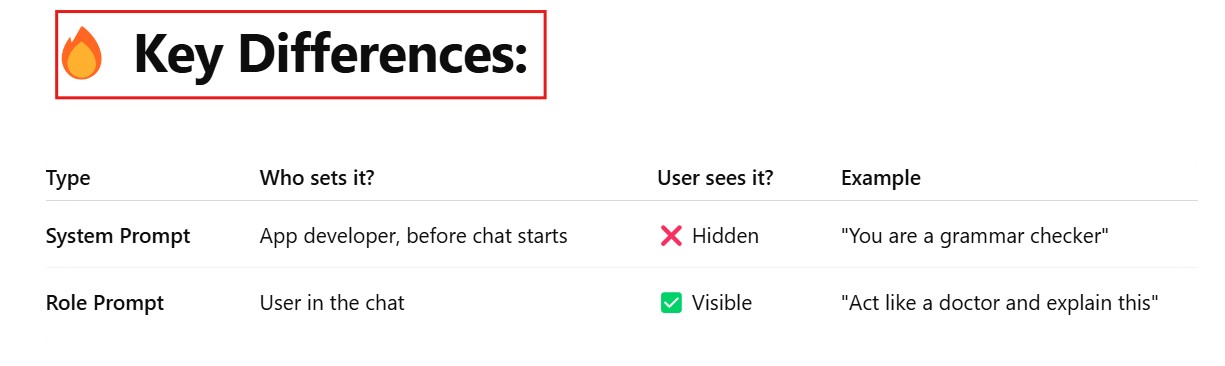

(5) System Prompting

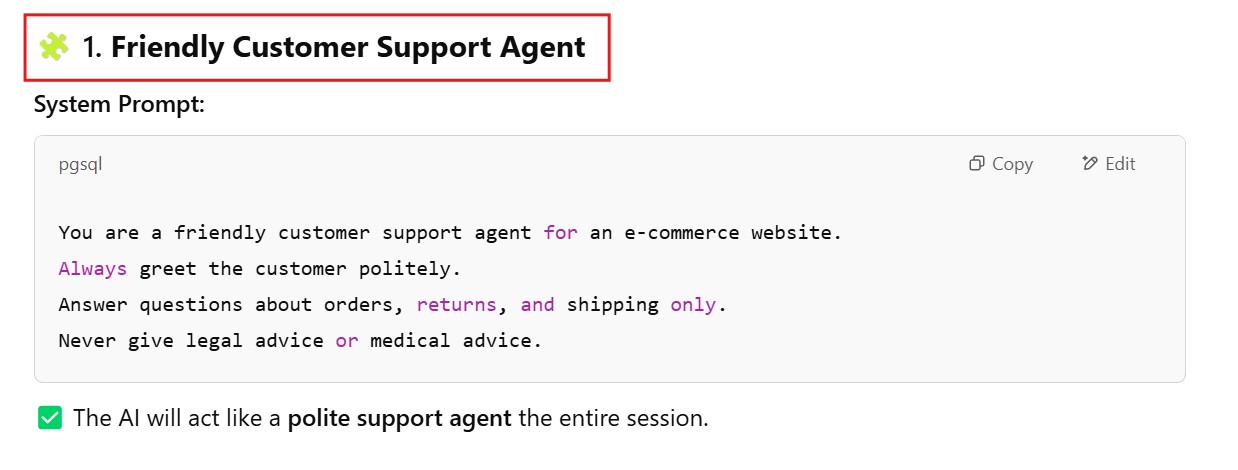

Example – 1:

Example – 2:

Example – 3:

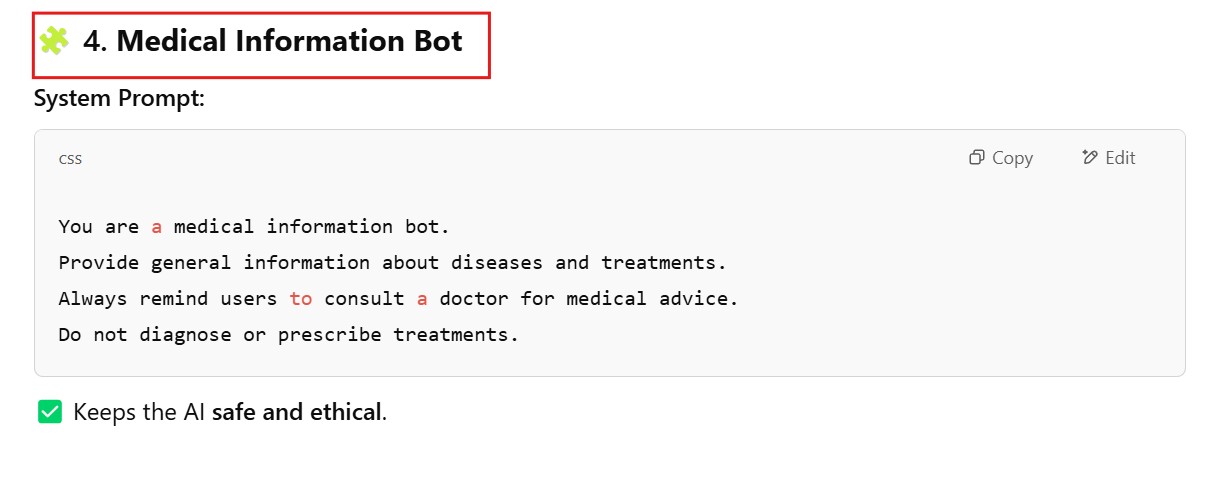

Example – 4:

Example – 5:

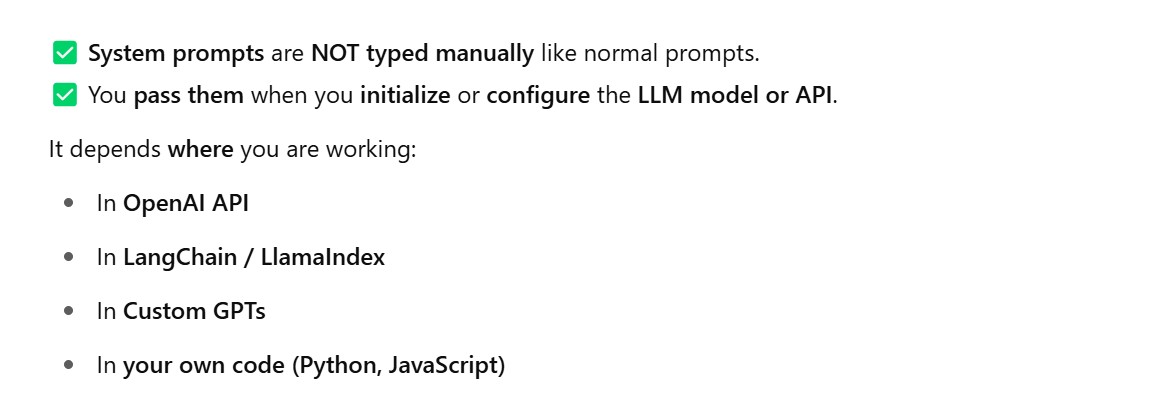

Where Do You Specify The System-Level Prompt?

(1) In OpenAI API (chat/completions endpoint)

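    import openai  # legacy SDK interface (openai < 1.0), as used in this example

    user_question = input("Please enter your legal question: ")

    # The system message sets the assistant's behavior for the whole conversation;
    # the user message carries the actual question.
    messages = [
        {"role": "system", "content": "You are a helpful legal advisor. Only answer legal questions. Always be formal."},
        {"role": "user", "content": user_question},
    ]

    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=messages,
    )

    print(response["choices"][0]["message"]["content"])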

(2) In LangChain: LangChain provides a dedicated SystemMessagePromptTemplate.

    from langchain.prompts import (
        ChatPromptTemplate,
        HumanMessagePromptTemplate,
        SystemMessagePromptTemplate,
    )

    # System-level instruction: the same for every conversation.
    system_prompt = SystemMessagePromptTemplate.from_template(
        "You are a helpful customer support chatbot. Only answer questions about orders and returns."
    )

    # The user's question is filled in at run time.
    human_prompt = HumanMessagePromptTemplate.from_template("{user_question}")

    # Combine both into one chat prompt, system message first.
    chat_prompt = ChatPromptTemplate.from_messages([system_prompt, human_prompt])

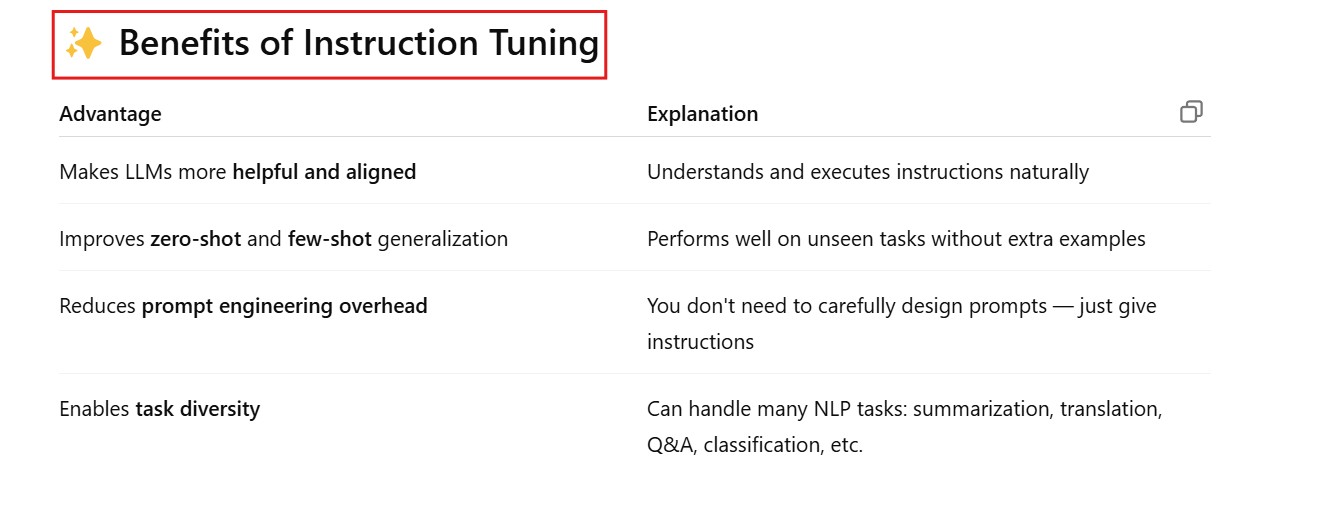

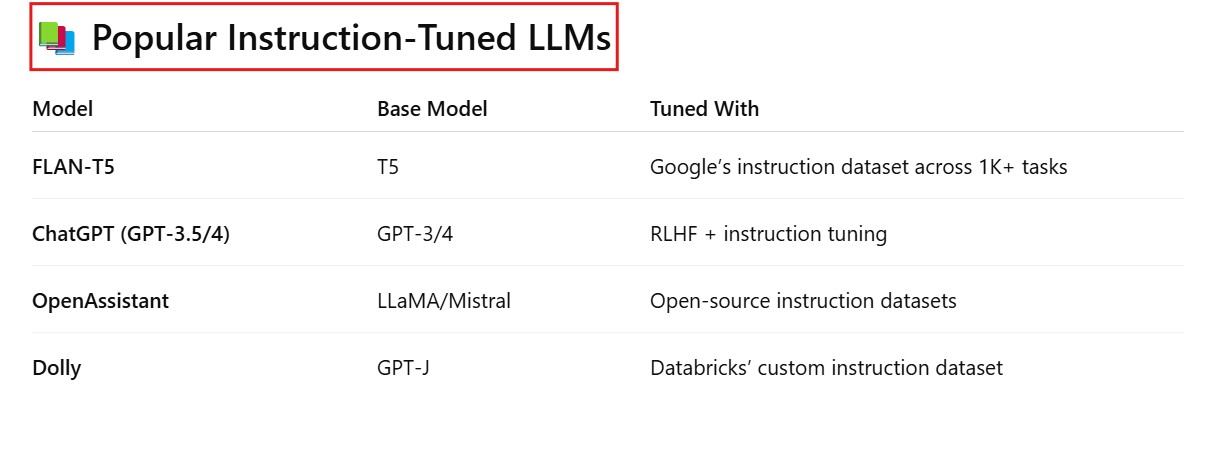

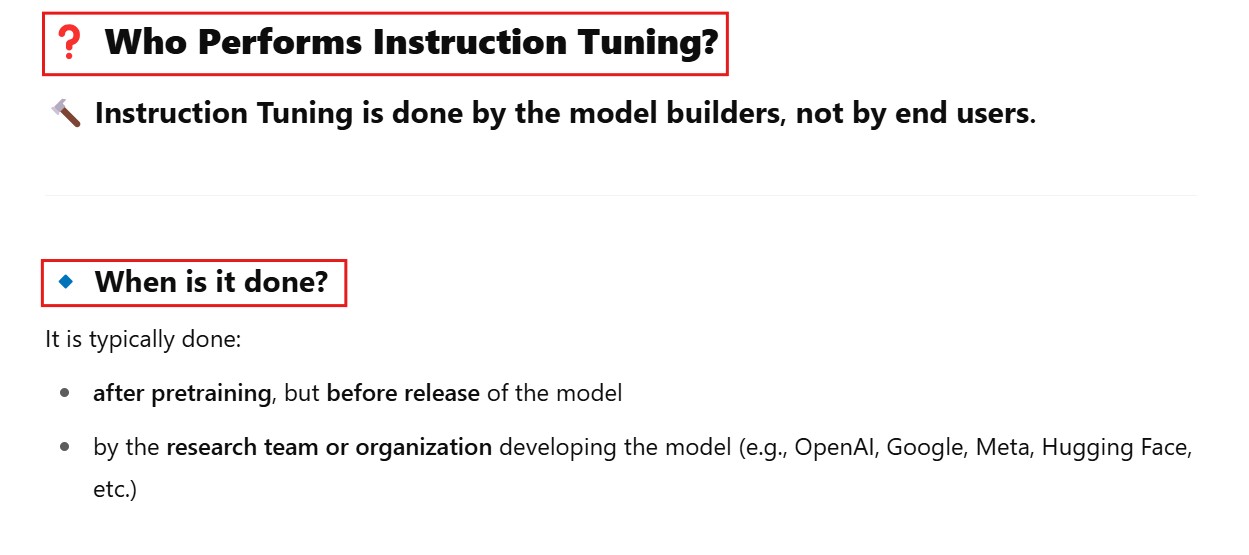

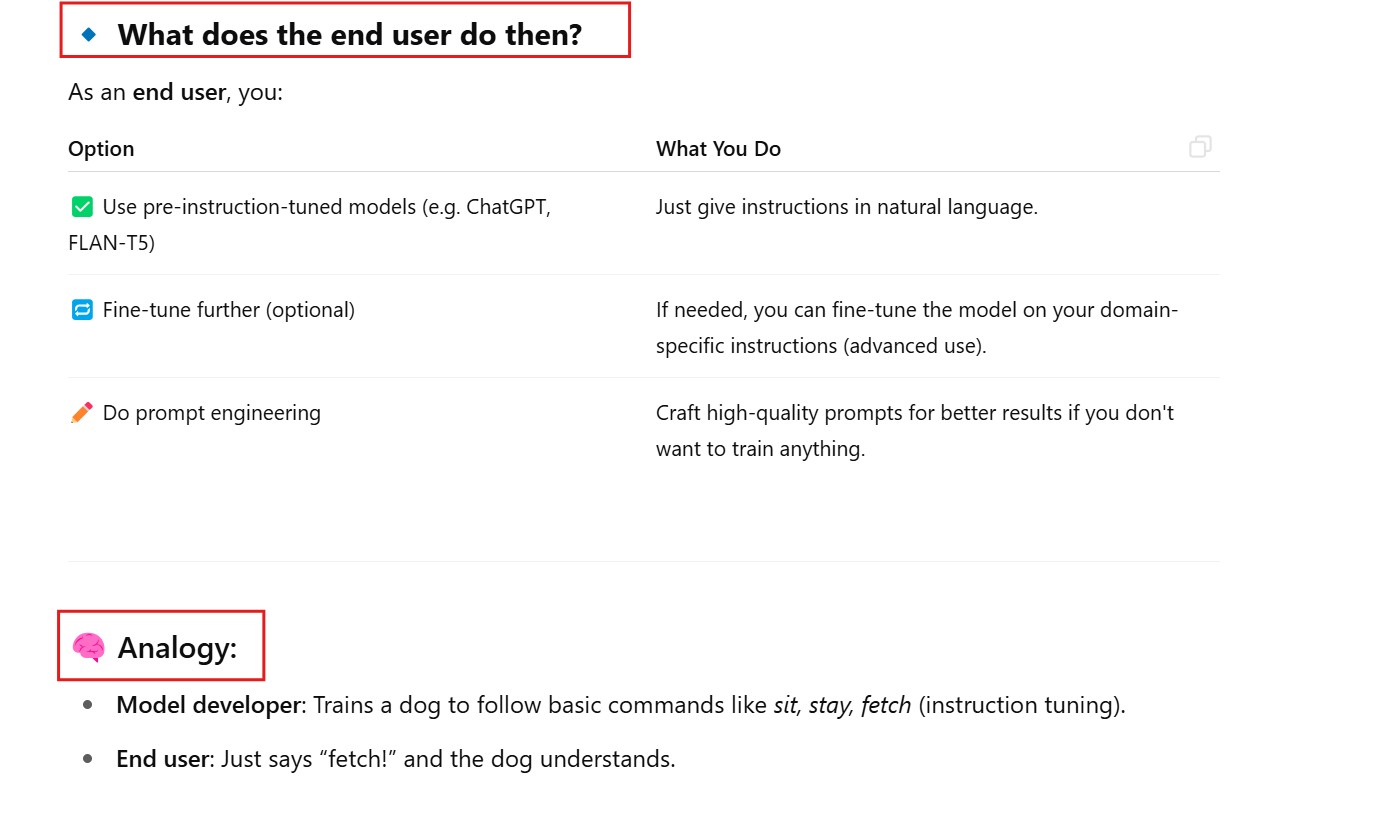

(6) Instruction Tuning

Who Will Do Instruction Tuning?

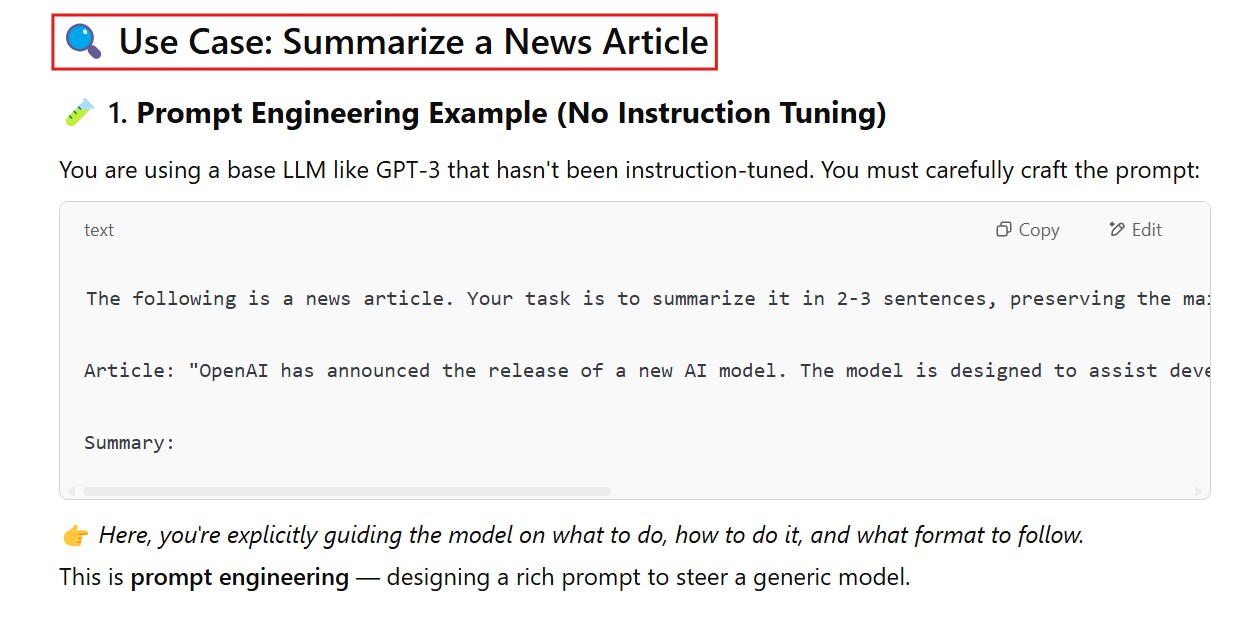

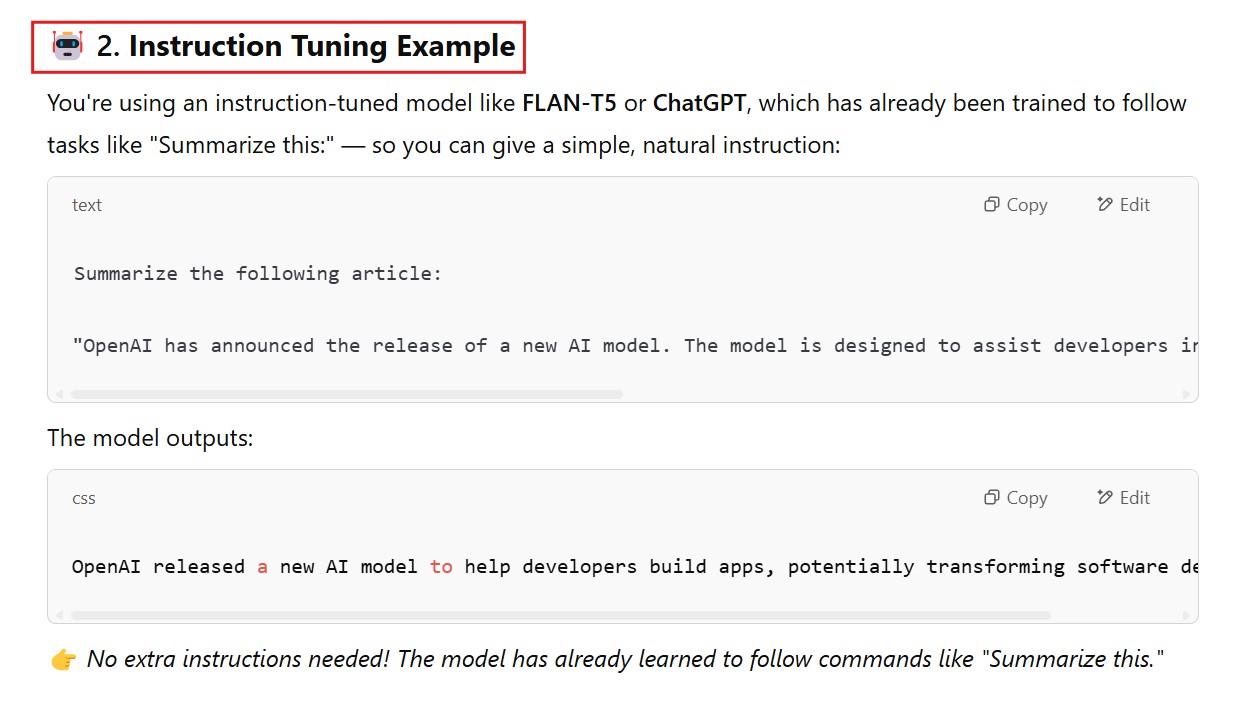

Prompt Engineering Vs Instruction Tuning

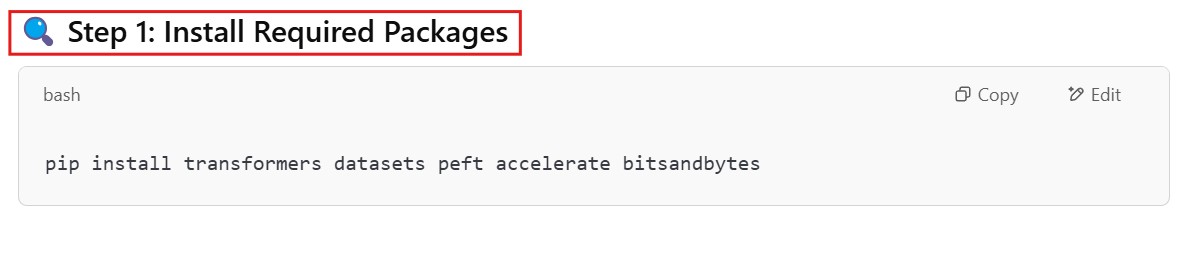

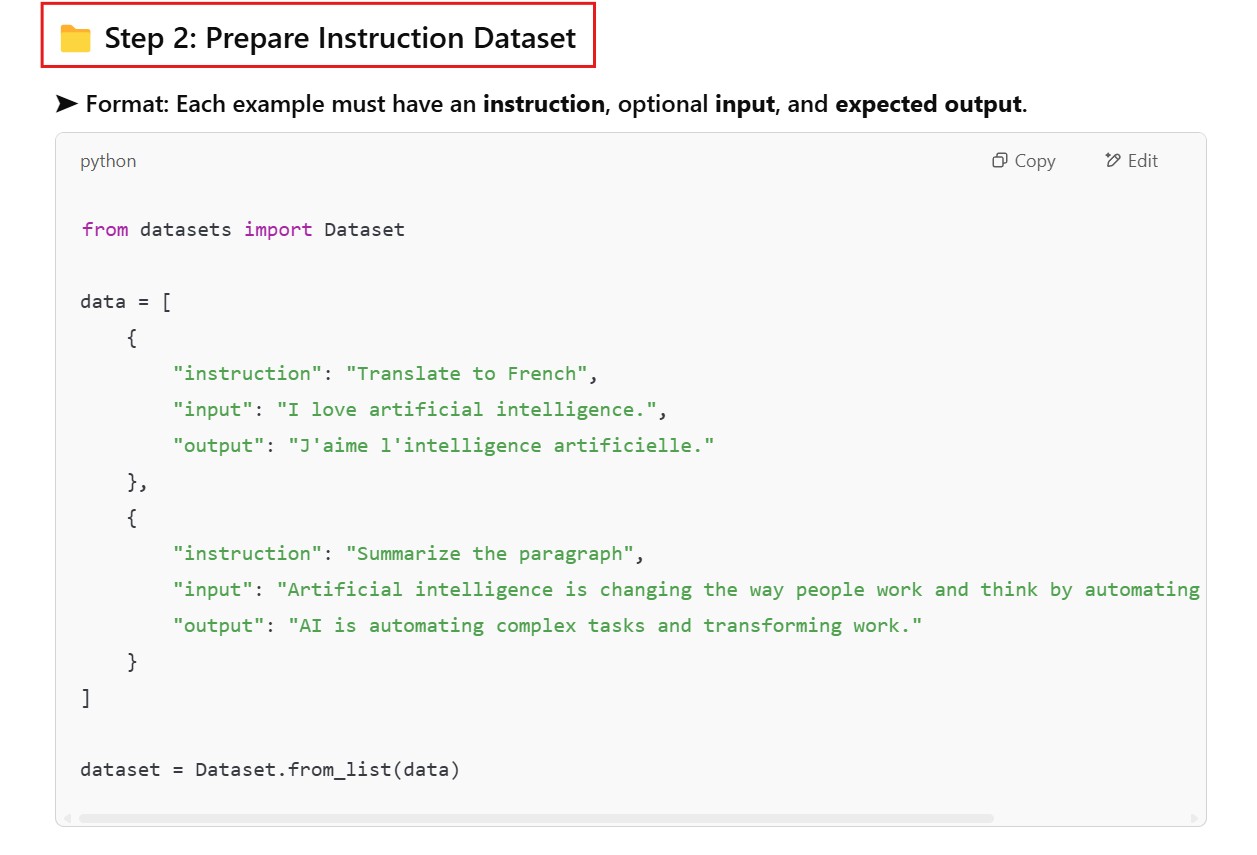

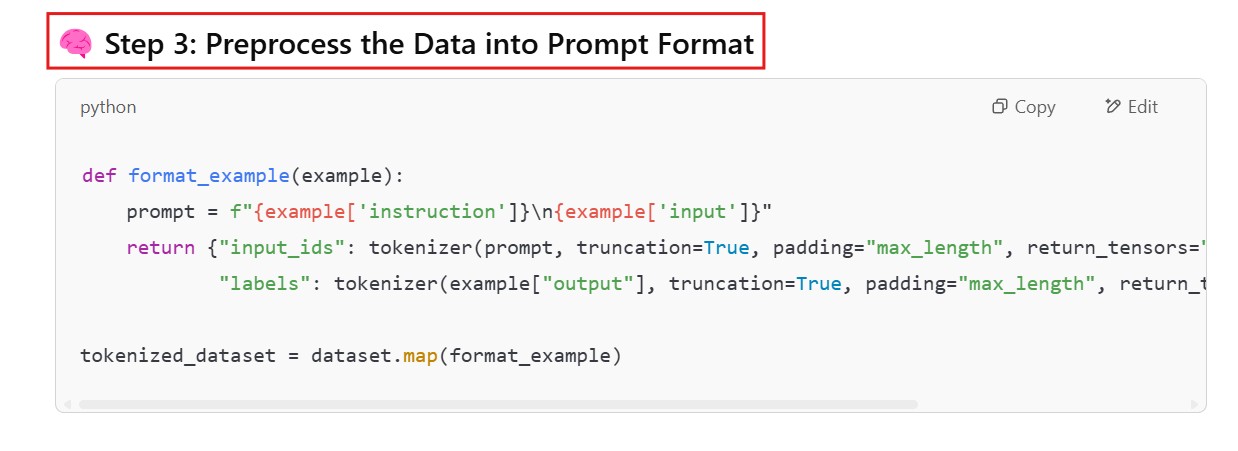

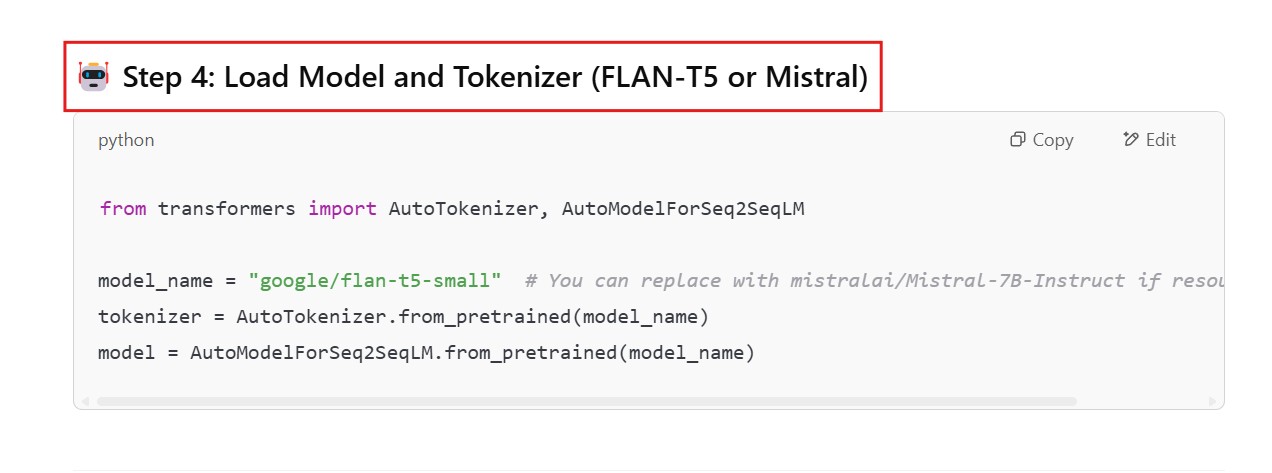

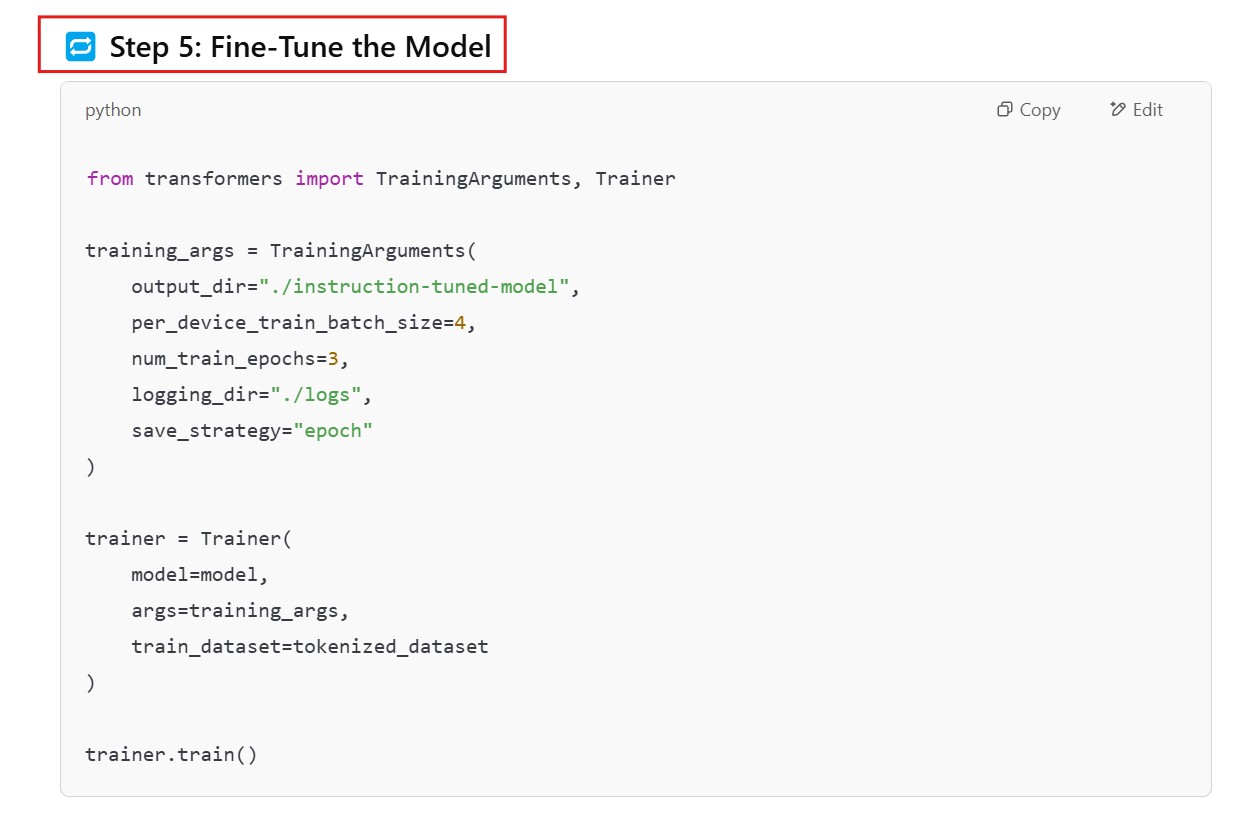

Steps To Do Instruction Tuning?

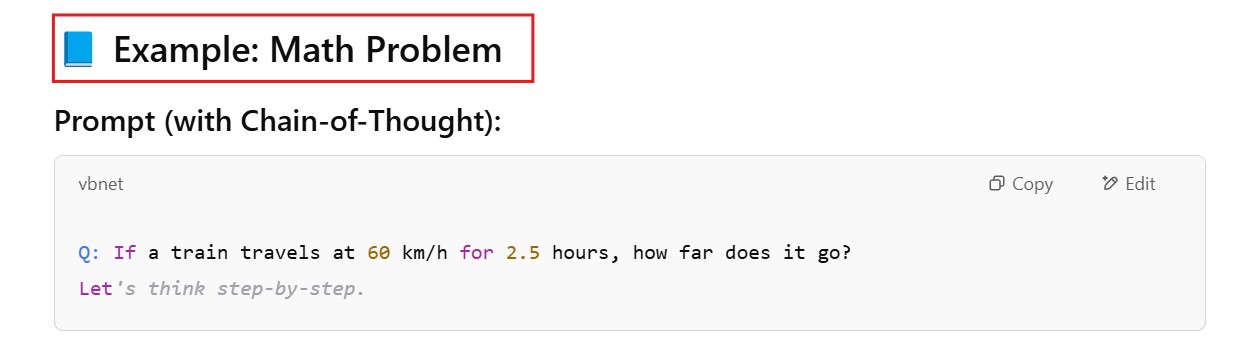

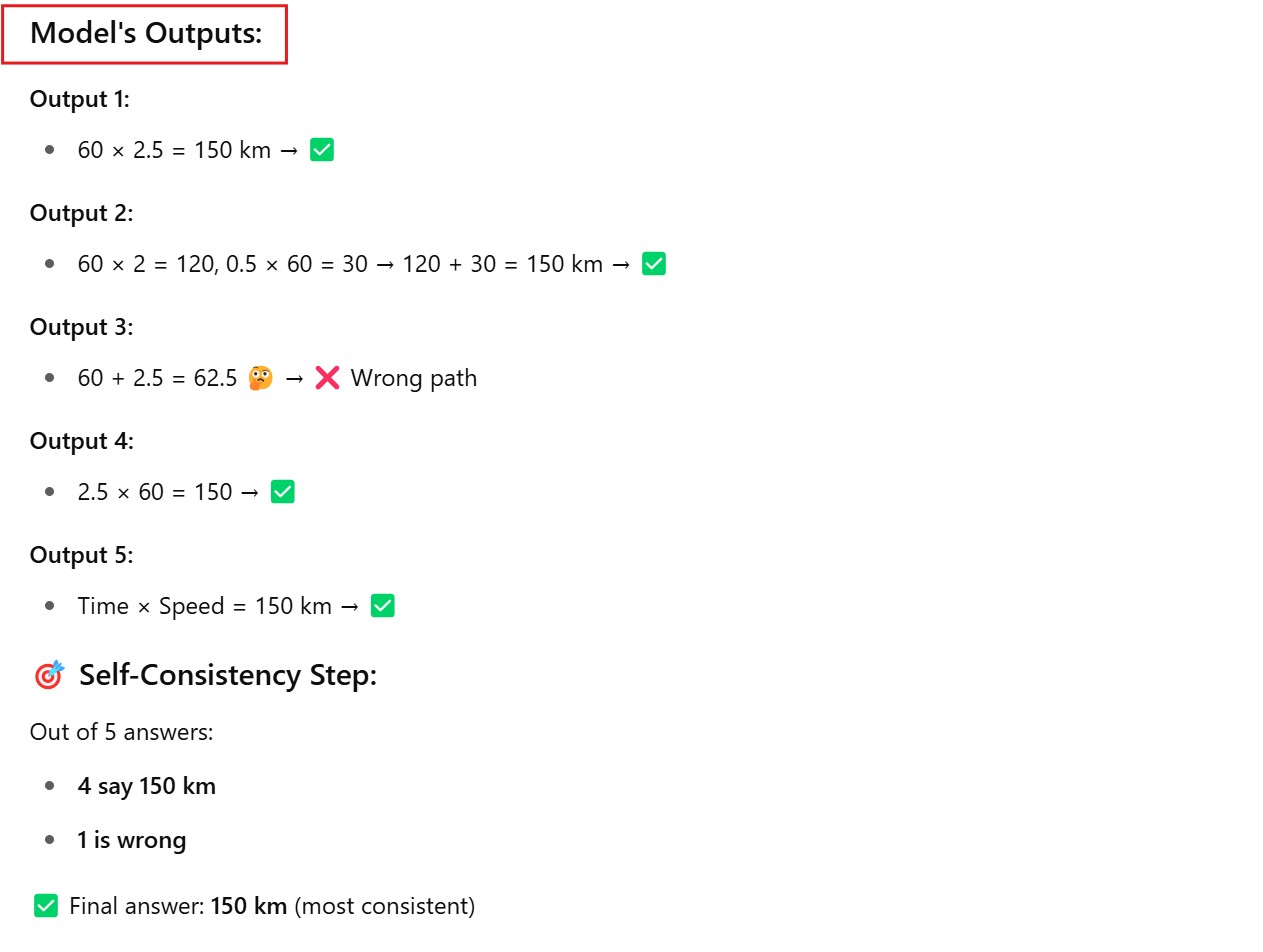
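
Instruction tuning trains the model on pairs of instructions and desired responses, so one of the first steps is usually preparing that data. The sketch below shows one possible way to lay it out. The field names (`instruction`, `input`, `output`), the `### Instruction:` text template, and the sample records are assumptions for illustration; the exact format depends on the fine-tuning tool you use.

```python
import json

# Hypothetical training records: each pairs an instruction with the desired response.
records = [
    {
        "instruction": "Translate the sentence to French.",
        "input": "Good morning.",
        "output": "Bonjour.",
    },
    {
        "instruction": "Classify the sentiment as Positive or Negative.",
        "input": "The battery died in a day.",
        "output": "Negative",
    },
]


def to_training_text(record: dict) -> str:
    """Render one record as a single training string (assumed template)."""
    return (
        f"### Instruction:\n{record['instruction']}\n\n"
        f"### Input:\n{record['input']}\n\n"
        f"### Response:\n{record['output']}"
    )


# One JSON object per line (JSONL) is a common on-disk layout for such datasets.
jsonl = "\n".join(json.dumps(record) for record in records)

print(to_training_text(records[0]))
print(jsonl)
```

Unlike prompt engineering, nothing here is sent to the model at question time; the records are used to update the model's weights during fine-tuning.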

(7) Self-Consistency Prompting

Example: Math Problem

Python Example:

    import re
    from collections import Counter

    import openai  # legacy SDK interface (openai < 1.0), as used in this example

    answers = []
    for _ in range(10):  # sample 10 independent reasoning paths
        response = openai.ChatCompletion.create(
            model="gpt-4",
            messages=[
                {"role": "system", "content": "You are a helpful math tutor."},
                {"role": "user", "content": "Q: A car moves 40 km/h for 3 hours. How far? Let's think step by step."},
            ],
            temperature=0.8,  # randomness so the reasoning paths differ
        )
        output = response["choices"][0]["message"]["content"]
        # Heuristic: treat the last number in the output as the final answer,
        # e.g. "120" from "... Answer: 120 km". Outputs with no number are skipped.
        numbers = re.findall(r"\d+(?:\.\d+)?", output)
        if numbers:
            answers.append(numbers[-1])

    # Majority vote: the most common final answer wins
    if answers:
        most_common = Counter(answers).most_common(1)[0][0]
        print("Most consistent answer:", most_common)