-

Linear Regression: OLS Technique

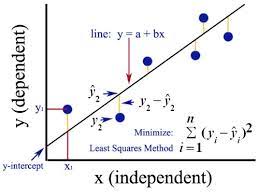

(1) Introduction. The main objective of a linear regression model is to find the best-fit line: the line that passes as close as possible to all the data points and so minimizes the loss. The question is how to find that line, since we need a mathematical justification that a given line really is the best fit. There are two techniques for solving this problem: the Ordinary Least Squares (OLS) technique and the Gradient Descent technique.

(2) Ordinary Least Squares Technique. With OLS, we directly use the closed-form formulas for ‘m’ and ‘b’ to derive the best-fit line equation. Here in this blog we will
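As a minimal sketch of the closed-form idea, the snippet below computes the slope ‘m’ and intercept ‘b’ with the standard least-squares formulas (covariance of x and y divided by the variance of x, then the intercept from the two means). The function name `ols_fit` and the toy data are assumptions made for illustration and do not come from the post.

```python
def ols_fit(xs, ys):
    """Return (m, b) for the least-squares line y = m*x + b."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n

    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    m = sxy / sxx
    # Intercept: the fitted line always passes through (x_mean, y_mean).
    b = y_mean - m * x_mean
    return m, b


# Hypothetical toy data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
m, b = ols_fit(xs, ys)
print(f"m = {m}, b = {b}")
```

Because the toy points are perfectly linear, the recovered line matches the one that generated them; on noisy data the same formulas give the line with the smallest sum of squared vertical errors.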

-

Extreme Gradient Boosting – Regression Algorithm

Table of contents: Example of Extreme Gradient Boosting Regression.

Problem statement: predict the package of the students based on their CGPA value.

Step-1: Build the first model. In a boosting algorithm, the first model is a simple one. For regression, we take the mean of the target values as the first model: Mean = (4.5 + 11 + 6 + 8)/4 = 29.5/4 = 7.375. Model 1 will therefore always output 7.375 for all the records.

Step-3: Calculate the error made by the first model. To calculate the error we do a simple subtraction. We will subtract the
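The arithmetic in Step-1 can be reproduced with a few lines of Python. This is only a sketch: the four package values are the ones shown in the excerpt, while the sign convention for the error (actual minus predicted) is an assumption, since the excerpt is cut off before it states the subtraction.

```python
# Package values taken from the excerpt above.
packages = [4.5, 11, 6, 8]

# First model: predict the mean of the target for every record.
first_prediction = sum(packages) / len(packages)

# Assumed convention: error = actual value - value predicted by the first model.
residuals = [actual - first_prediction for actual in packages]

print(first_prediction)
print(residuals)
```

The residuals sum to zero, which is always the case when the first model is the mean of the targets.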

-

Gradient Boosting – Classification Algorithm

Table of contents: Example of Gradient Boosting Classification.

Problem statement: predict whether a student will get a placement, based on CGPA and IQ.

Step-1: Build the first model by calculating the log of odds. In a boosting algorithm, the first model is a simple one. For regression, we used the mean value as the first model, but that does not make sense for classification. Hence we use log(odds) as the mathematical function for the first model.

Log(Odds). The odds of an event happening
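A minimal sketch of the log(odds) starting point follows. It assumes the usual definition, odds = (number of positive cases) / (number of negative cases), and converts the result back to a probability with the logistic function. The placement labels and the helper names `initial_log_odds` and `sigmoid` are hypothetical and chosen only for illustration.

```python
import math


def initial_log_odds(labels):
    """Log of odds for binary labels (1 = placed, 0 = not placed)."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("log(odds) is undefined when only one class is present")
    return math.log(n_pos / n_neg)


def sigmoid(z):
    """Logistic function: maps log(odds) back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical labels: 3 students placed, 2 not placed.
placed = [1, 1, 0, 1, 0]
log_odds = initial_log_odds(placed)
probability = sigmoid(log_odds)
print(log_odds, probability)
```

Under these assumptions the first model predicts the same probability for every record, namely the share of positive cases in the training data.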

-

Cat Boost Algorithm

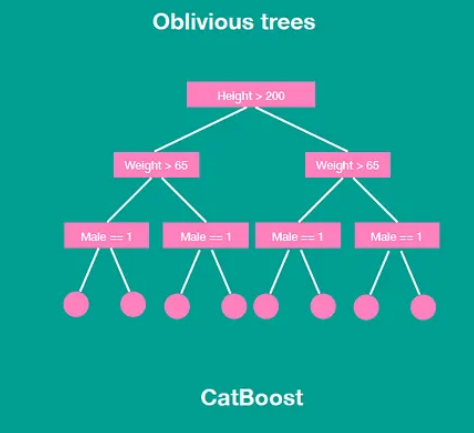

Table of contents: What Is the CatBoost Algorithm? Features of the CatBoost Algorithm. Is Tuning Required in CatBoost? When and When Not to Use CatBoost.

(1) What Is the CatBoost Algorithm? The name CatBoost is a combination of the words “Category” and “Boosting.” Does the “Category” in CatBoost mean it only works for categorical features? The answer is “No.” According to the CatBoost documentation, CatBoost supports numerical, categorical, and text features, but it handles categorical data particularly well. The CatBoost algorithm has quite a number of parameters to tune the features in the processing stage. “Boosting” in CatBoost refers to

-

Light Gradient Boosting

Table of contents: What Is a Light Gradient Boosting Algorithm? Key Features of LightGBM.

(1) Light Gradient Boosting. LightGBM is another popular gradient-boosting framework, known for its performance and efficiency. It is designed to be faster and more memory-efficient than other gradient-boosting implementations such as XGBoost, and it introduces several key optimizations to achieve this.

(2) Key Features of LightGBM. Gradient-based One-Side Sampling (GOSS): LightGBM incorporates a technique called GOSS to reduce the number of data instances used for gradient-based decision-making. GOSS focuses on keeping the instances
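The sampling idea behind GOSS can be sketched in plain Python. This is an illustration under stated assumptions, not LightGBM's actual implementation: it assumes the commonly described scheme of keeping the instances with the largest absolute gradients, drawing a random sample from the remainder, and up-weighting that sample by (1 - a) / b so the gradient statistics stay roughly unbiased. The function name `goss_sample` and the parameters `top_rate`, `other_rate`, and `seed` belong to this sketch only.

```python
import random


def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return {instance_index: weight} for the instances kept by the sketch."""
    n = len(gradients)
    # Rank instances from largest to smallest absolute gradient.
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)

    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    top, rest = order[:n_top], order[n_top:]

    # Randomly keep a small share of the low-gradient instances.
    rng = random.Random(seed)
    sampled = rng.sample(rest, min(n_other, len(rest)))

    # Up-weight the sampled instances to compensate for the ones dropped.
    amplify = (1.0 - top_rate) / other_rate
    weights = {i: 1.0 for i in top}
    weights.update({i: amplify for i in sampled})
    return weights


# Hypothetical gradients for 10 training instances.
grads = [0.1, -2.0, 0.05, 1.5, -0.2, 0.3, 0.01, -0.4, 0.02, 0.6]
kept = goss_sample(grads)
print(kept)
```

With these settings only 3 of the 10 instances are used, and the two with the largest absolute gradients are always among them.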

-

Extreme Gradient Boosting

Table of contents: Evolution of Tree Algorithms. What Is XGBoost, and Why Is It So Popular? What Are the Features Supported by XGBoost? Installation of XGBoost. Should We Use XGBoost All the Time? Hyper-Parameters Involved in XGBoost.

(1) Evolution of Tree Algorithms. Artificial neural networks and deep learning lead the market for unstructured data like images, audio, and text. For small or medium-sized structured data, however, tree-based algorithms dominate. And when we say tree, it all starts with the basic building block, i.e., decision trees (DTs). DTs were able to

-

Gradient Boosting Algorithm

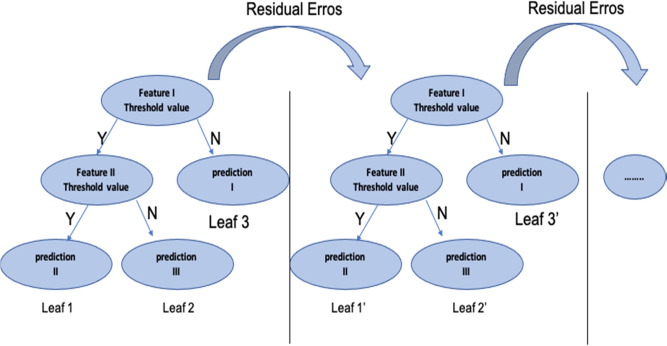

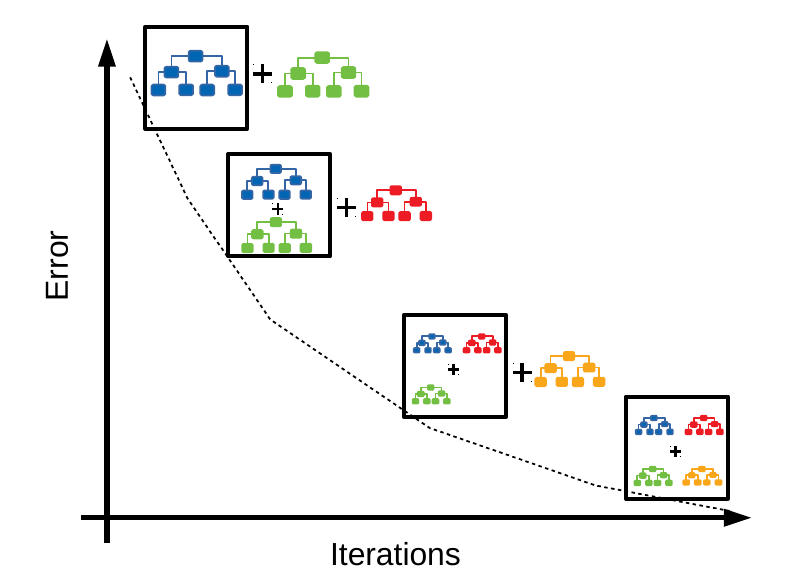

Table of contents: Introduction. What Is the Gradient Boosting Machine Algorithm? How Does the Gradient Boosting Machine Algorithm Work? Example of the Gradient Boosting Algorithm.

(1) Introduction. The principle behind boosting algorithms is that we first build a model on the training dataset, and then build a second model to rectify the errors made by the first. Let me explain what exactly this means and how it works. Suppose you have n data points and 2 output classes (0 and 1), and you want to create a model to predict the class of the test data. Now what

-

AdaBoost Algorithm

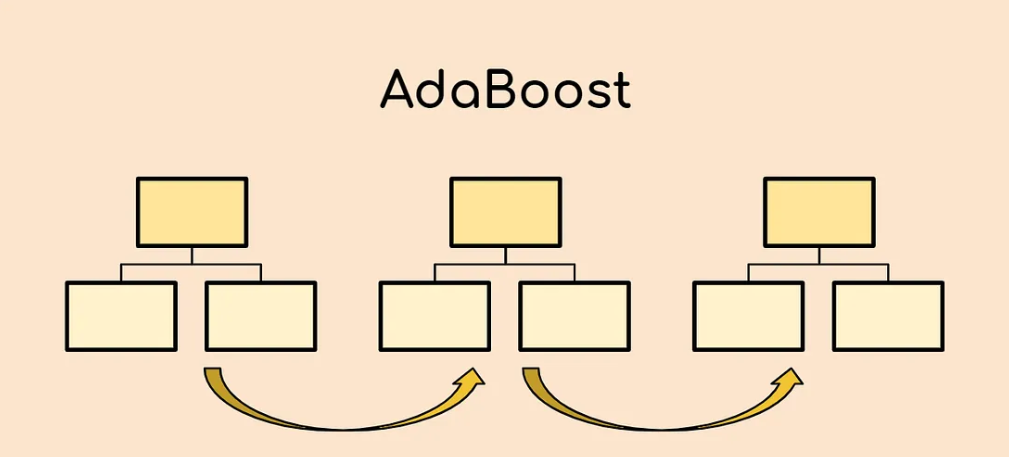

Table of contents: Introduction. What Is the AdaBoost Algorithm? Understanding the Working of the AdaBoost Algorithm.

(1) Introduction. Boosting is a machine learning ensemble technique that combines multiple weak learners to create a strong learner. The term “boosting” refers to the idea of boosting the performance of weak models by iteratively training them on different subsets of the data. The main steps involved in a boosting algorithm are as follows: Initialize the ensemble: initially, each instance in the training data is given equal weight, and a weak learner (e.g., a decision tree) is trained on the data. Iteratively
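The "equal weights first, then iterate" step can be made concrete with a small sketch of a single reweighting round. It assumes the classic AdaBoost update for labels in {-1, +1}, which the excerpt does not spell out: the learner's weight is alpha = 0.5 * ln((1 - err) / err), misclassified instances are scaled up by exp(alpha), correctly classified ones are scaled down by exp(-alpha), and the weights are renormalized. The function name `adaboost_round` and the labels and predictions are hypothetical.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """One reweighting round; `weights` is assumed to sum to 1."""
    # Weighted error: total weight of the misclassified instances.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    # Clamp to avoid division by zero for a perfect or useless learner.
    err = min(max(err, 1e-10), 1 - 1e-10)

    # Say of the weak learner in the final vote.
    alpha = 0.5 * math.log((1 - err) / err)

    # Increase weights of mistakes, decrease weights of correct predictions.
    updated = [
        w * math.exp(alpha if t != p else -alpha)
        for w, t, p in zip(weights, y_true, y_pred)
    ]
    total = sum(updated)
    return alpha, [w / total for w in updated]


# Initialize: every instance starts with equal weight.
n = 5
weights = [1.0 / n] * n

# Hypothetical labels and weak-learner predictions (one mistake, at index 3).
y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, 1, 1]

alpha, new_weights = adaboost_round(weights, y_true, y_pred)
print(alpha, new_weights)
```

After the round, the single misclassified instance carries half of the total weight, so the next weak learner is pushed to get it right.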

-

Boosting Algorithms

Table of contents: What Is Boosting in Machine Learning? Types of Boosting Algorithms.

(1) What Is Boosting? Boosting is a machine learning ensemble technique that combines multiple weak learners (typically decision trees) to create a strong learner. The main idea is to train weak models sequentially, with each subsequent model focusing on correcting the mistakes made by the previous ones. This iterative process gradually improves the overall predictive performance of the ensemble.

(2) Types of Boosting Algorithms. AdaBoost (Adaptive Boosting): AdaBoost assigns weights to each training instance and adjusts them based on

-

Under Fitting Vs Over Fitting

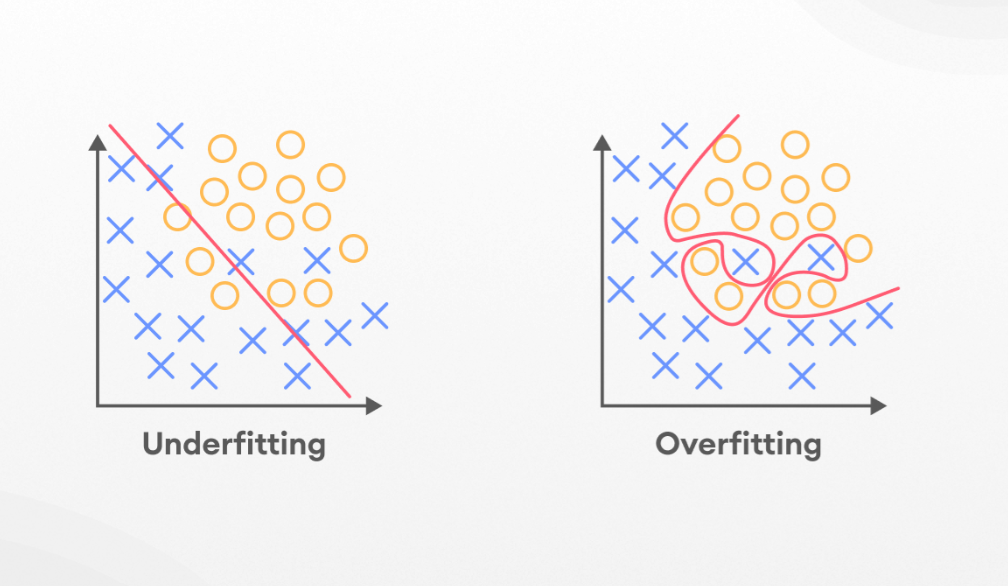

Table of contents: What Is Generalization? What Is Underfitting? What Is Overfitting? How to Detect Underfitting. How to Avoid Underfitting. How to Detect Overfitting. How to Prevent Overfitting. Model Prone to Underfitting.

(1) What Is Generalization? In supervised learning, the main goal is to use training data to build a model that can make accurate predictions on new, unseen data that has the same characteristics as the initial training set. This is known as generalization. Generalization relates to how effectively the concepts learned by a machine learning model apply to particular examples that were