-

Activation Functions.

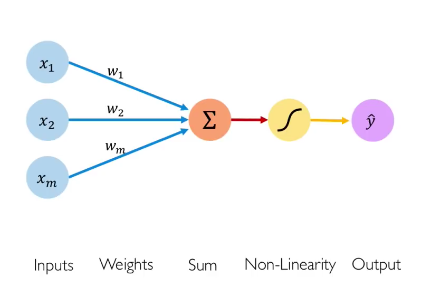

Table Of Contents: What Is An Activation Function? Why Use An Activation Function? Why Should An Activation Function Be Differentiable? Types Of Activation Functions. Sigmoid (Logistic) Activation Function. Hyperbolic Tangent (tanh) Activation. Rectified Linear Unit (ReLU) Activation. Leaky ReLU Activation. Softmax Activation.

(1) What Is An Activation Function? An activation function is a mathematical function that determines the output of a neuron or a layer of neurons in a neural network. It introduces non-linearity into the network, allowing it to model complex relationships and apply non-linear transformations to the input data.

(2) Why Use An Activation Function? Activation functions…
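The activation functions listed in the contents can be sketched in NumPy as shown below. This is a minimal illustration that assumes NumPy is available. The function names are our own. The leaky ReLU slope `alpha=0.01` is a common default, not a value taken from the article. `sigmoid_grad` is included because backpropagation needs each activation's derivative, which is why differentiability matters.

```python
import numpy as np

def sigmoid(x):
    # Squashes any real input into the range (0, 1).
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    # Derivative of the sigmoid, used by backpropagation.
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh(x):
    # Squashes input into (-1, 1); zero-centred, unlike the sigmoid.
    return np.tanh(x)

def relu(x):
    # Passes positive values through unchanged and zeroes out negatives.
    return np.maximum(0.0, x)

def leaky_relu(x, alpha=0.01):
    # Like ReLU, but negative inputs keep a small slope alpha (assumed 0.01).
    return np.where(x > 0, x, alpha * x)

def softmax(z):
    # Turns a vector of scores into probabilities that sum to 1.
    # Subtracting the max first avoids overflow in np.exp.
    shifted = z - np.max(z, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z), tanh(z), relu(z), leaky_relu(z), softmax(z), sep="\n")
```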

-

What Is A Perceptron?

Table Of Contents: What Is A Perceptron? Structure Of A Perceptron. Activation Function For A Perceptron. Learning Algorithm For A Perceptron. Single-Layer Perceptron. Limitations Of The Perceptron. Multi-Layer Perceptron.

(1) What Is A Perceptron? Perceptrons are the building blocks of artificial neural networks (ANNs) and serve as the simplest form of artificial neuron. They were introduced by Frank Rosenblatt in the late 1950s and played a crucial role in the development of neural networks. A perceptron is a single-layer neural network, while a multi-layer perceptron is what is usually called a neural network. The perceptron is a binary linear classifier. It is also used in supervised…
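Below is a minimal sketch of the classic perceptron learning rule, with updates w ← w + η(y − ŷ)x and b ← b + η(y − ŷ). It assumes a step activation that outputs 1 when the weighted sum is positive, a learning rate of 1.0, and the AND gate as a hypothetical toy dataset. The names `step`, `train_perceptron` and `predict` are illustrative.

```python
import numpy as np

def step(z):
    # Step activation: fire (1) only if the weighted sum is positive.
    return 1 if z > 0 else 0

def train_perceptron(X, y, lr=1.0, max_epochs=100):
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x_i, target in zip(X, y):
            pred = step(np.dot(w, x_i) + b)
            update = lr * (target - pred)  # zero when the prediction is right
            if update != 0:
                w += update * x_i
                b += update
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return w, b

def predict(X, w, b):
    return [step(np.dot(w, x_i) + b) for x_i in X]

# Hypothetical toy data: the logical AND gate.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
w, b = train_perceptron(X, y)
print(predict(X, w, b))  # → [0, 0, 0, 1]
```

AND is linearly separable, so the loop converges. If you swap in XOR labels, it never reaches zero errors, because no single line separates XOR's classes. That is the classic limitation that motivates multi-layer perceptrons.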

-

Working Of A Single Neuron.

Table Of Contents: The Linear Unit. Example: The Linear Unit As A Model. Multiple Inputs.

(1) The Linear Unit. Let’s begin with the fundamental component of a neural network: the individual neuron. Drawn as a diagram, a neuron (or unit) with one input has an input x, and its connection to the neuron carries a weight w. Whenever a value flows through a connection, it is multiplied by the connection’s weight, so what reaches the neuron from the input is w * x. A neural network “learns” by modifying its weights. The b is a special kind of weight we call…
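Here is a minimal sketch of the linear unit in plain Python. The input x is multiplied by its weight w, and the extra weight b is added. The multiple-input version, listed in the contents as "Multiple Inputs", sums one w * x term per input. The function names and example numbers are hypothetical.

```python
def linear_unit(x, w, b):
    # One input: the neuron's output is w * x plus the extra weight b.
    return w * x + b

def linear_unit_multi(xs, ws, b):
    # Several inputs: each input is multiplied by its own weight, then summed.
    return sum(w * x for w, x in zip(ws, xs)) + b

print(linear_unit(3.0, w=2.0, b=1.0))                          # → 7.0
print(linear_unit_multi([1.0, 2.0, 3.0], [0.5, 0.5, 0.5], 1.0))  # → 4.0
```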

-

How Do Neural Networks Work?

Table Of Contents: How Do Neural Networks Work? Input Data. Feedforward. Activation Functions. Output Layer. Loss Function. Backpropagation and Training. Training Iterations. Prediction and Inference.

(1) How Do Neural Networks Work? Neural networks work by processing input data through a network of interconnected artificial neurons, also known as nodes or units. The network learns from the input data and adjusts the connections (weights) between neurons so that it can make accurate predictions or perform specific tasks. Here’s a general overview of how neural networks work:

(2) Input Data. The neural network receives input data, which can be in…
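All the stages in the contents appear together in one small NumPy sketch: input data, feedforward, activation, output layer, loss, backpropagation, training iterations and prediction. The sketch assumes a hypothetical toy task (XOR) and one hidden layer of sigmoid units. It also assumes mean binary cross-entropy as the loss and plain full-batch gradient descent. These are illustrative choices, not the article's.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(epochs=5000, lr=0.5, hidden=4, seed=0):
    rng = np.random.default_rng(seed)
    # Input data: the four XOR patterns and their labels (toy example).
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Weights (random) and biases (zero) for one hidden layer and an output layer.
    W1 = rng.normal(0.0, 1.0, size=(2, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(0.0, 1.0, size=(hidden, 1))
    b2 = np.zeros((1, 1))

    losses = []
    for _ in range(epochs):  # training iterations
        # Feedforward: weighted sums followed by activations, layer by layer.
        h = sigmoid(X @ W1 + b1)
        p = sigmoid(h @ W2 + b2)  # output layer: probability of class 1

        # Loss function: mean binary cross-entropy.
        eps = 1e-12
        loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        losses.append(loss)

        # Backpropagation: apply the chain rule from the loss back to each weight.
        n = X.shape[0]
        dz2 = (p - y) / n                 # gradient at the output pre-activation
        dW2 = h.T @ dz2
        db2 = dz2.sum(axis=0, keepdims=True)
        dz1 = (dz2 @ W2.T) * h * (1 - h)  # sigmoid derivative is h * (1 - h)
        dW1 = X.T @ dz1
        db1 = dz1.sum(axis=0, keepdims=True)

        # Gradient-descent update of every weight and bias.
        W2 -= lr * dW2
        b2 -= lr * db2
        W1 -= lr * dW1
        b1 -= lr * db1

    # Prediction / inference with the trained weights.
    preds = (sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2) > 0.5).astype(int)
    return losses, preds

losses, preds = train_xor()
print(f"loss at start: {losses[0]:.3f}, loss at end: {losses[-1]:.3f}")
print("predictions:", preds.ravel())
```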

-

What Are Neural Networks?

Table Of Contents: What Are Neural Networks? What Are Neurons? What Are Layers? Weights & Biases. Activation Functions. Feedforward and Backpropagation. Deep Neural Networks.

(1) What Are Neural Networks? Neural networks, also known as artificial neural networks (ANNs), are computational models inspired by the structure and functioning of the biological neural networks in the human brain. They consist of interconnected artificial neurons, also called nodes or units, organized in layers.

(2) What Are Neurons? Neurons are the basic building blocks of neural networks. Artificial neurons receive input signals, perform computations, and produce an output signal. Each neuron applies an activation function to the…
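A layer of neurons can be computed all at once as a matrix product. Each row of `W` holds one neuron's weights and `b` holds the biases, and an activation function is then applied to the result. Below is a minimal NumPy sketch with hand-picked hypothetical weights, so the numbers are easy to check by hand. The name `dense` and the choice of ReLU for the hidden layer are assumptions made for illustration.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def dense(x, W, b, activation=None):
    # Each neuron (row of W) computes a weighted sum of the inputs plus its bias.
    z = W @ x + b
    return activation(z) if activation is not None else z

x = np.array([1.0, 2.0, 3.0])                   # one input example, 3 features

W1 = np.array([[1.0, 0.0, -1.0],                # hidden layer: 2 neurons
               [0.5, 0.5, 0.5]])
b1 = np.array([0.0, -1.0])
W2 = np.array([[1.0, 1.0]])                     # output layer: 1 neuron
b2 = np.array([0.5])

h = dense(x, W1, b1, activation=relu)           # → [0., 2.]
out = dense(h, W2, b2)                          # → [2.5]
print(h, out)
```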

-

Introduction To Deep Learning.

Table Of Contents: What Is Deep Learning? Applications Of Deep Learning. Importance Of Deep Learning. History Of Deep Learning. What Are Neural Networks? How Do Neural Networks Work?

(1) What Is Deep Learning? Deep learning is a subfield of machine learning and artificial intelligence (AI) that focuses on training artificial neural networks to learn and make intelligent decisions. It is characterized by the use of deep neural networks, that is, neural networks with multiple layers of interconnected artificial neurons. In deep learning, the term “deep” refers to the depth of the neural networks, meaning they…

-

Deep Learning Syllabus

(1) Introduction To Deep Learning:
- Overview of deep learning and its applications.
- Historical development and milestones in deep learning.
- Basics of neural networks and their components.

(2) Artificial Neural Networks:
- Perceptrons and activation functions.
- Feedforward neural networks.
- Training algorithms: gradient descent, backpropagation.
- Regularization techniques: dropout, weight decay.
- Optimization algorithms: stochastic gradient descent, Adam.
- Initialization strategies.

(3) Convolutional Neural Networks (CNNs):
- Introduction to CNNs and their architecture.
- Convolutional layers, pooling layers, and fully connected layers.
- CNN training and optimization.
- CNN applications in computer vision tasks (e.g., image classification, object detection).

(4) Recurrent Neural Networks (RNNs):
- Introduction to RNNs…

-

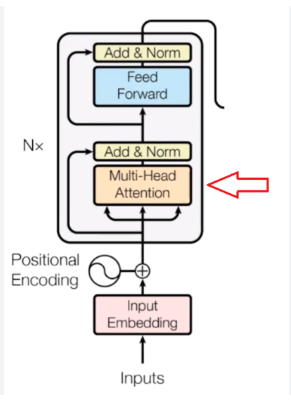

Transformer Neural Networks

Table Of Contents: What Is A Transformer? Key Features Of Transformers. Applications Of Transformers. How Do Transformers Handle The Issue Of Vanishing Gradients In Long-Term Dependencies?

(1) What Is A Transformer? Transformers are a type of deep learning model architecture that has gained significant attention and popularity, particularly in natural language processing (NLP) tasks. Unlike traditional recurrent neural networks (RNNs), transformers rely on a self-attention mechanism to capture dependencies between different elements of the input sequence.

(2) Key Features Of Transformers. Self-Attention Mechanism: The self-attention mechanism is the core component of transformers. It allows the…
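Below is a minimal NumPy sketch of single-head scaled dot-product self-attention, the form used in the original Transformer paper. Queries, keys and values are linear projections of the same sequence. Each position's output is a softmax-weighted average of all the value vectors. The random projection matrices, the sizes, and the absence of masking and multiple heads are simplifying assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    # X has shape (seq_len, d_model); each row is one element of the sequence.
    Q = X @ Wq                          # queries
    K = X @ Wk                          # keys
    V = X @ Wv                          # values
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (seq_len, seq_len) pairwise relevance
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
X = rng.normal(size=(seq_len, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, Wq, Wk, Wv)
print(out.shape, attn.shape)
```

Every position attends to every other position in a single matrix product. The path between two distant elements is therefore one step rather than a chain of recurrent updates. This is the usual explanation for why transformers suffer less from vanishing gradients over long ranges.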

-

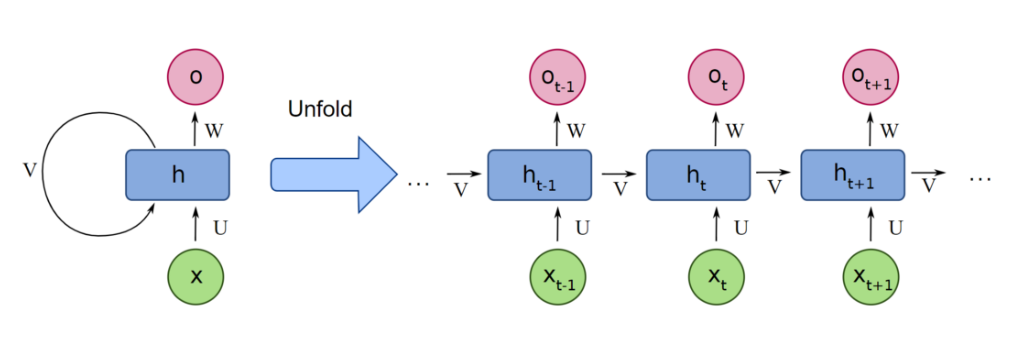

Recurrent Neural Networks (RNNs)

Table Of Contents: What Are Recurrent Neural Networks?

(1) What Are Recurrent Neural Networks? Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to process sequential and time-dependent data. They are particularly effective in tasks involving sequential data, such as natural language processing, speech recognition, time series analysis, and handwriting recognition. The key feature of RNNs is their ability to maintain a hidden state that captures information from previous time steps and carries it forward to later steps. This recurrent connectivity allows RNNs to capture temporal dependencies and patterns in the data.

(2) Components Of…
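The hidden-state recurrence can be sketched in NumPy as shown below. The sketch assumes the common "vanilla" RNN update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The weight names, sizes and random initialisation are hypothetical, and a trained RNN would learn these weights.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h, h0=None):
    # xs: (seq_len, input_size). Returns the hidden state at every time step.
    hidden_size = W_hh.shape[0]
    h = np.zeros(hidden_size) if h0 is None else h0
    states = []
    for x_t in xs:
        # The new state mixes the current input with the previous state,
        # so information from earlier steps is carried forward.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 6
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
xs = rng.normal(size=(seq_len, input_size))

states = rnn_forward(xs, W_xh, W_hh, b_h)
print(states.shape)  # (seq_len, hidden_size)
```

Because the same weights are reused at every step, changing only the first input still changes the final hidden state. This is the "temporal dependency" the paragraph describes.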

-

Multi-Horizon Forecasting

Table Of Contents: What Is Multi-Horizon Forecasting?

(1) What Is Multi-Horizon Forecasting? Multi-horizon forecasting in time series refers to predicting the values of a time series over multiple future time steps. Instead of making a single-step forecast, where you predict only the next value in the series, multi-horizon forecasting predicts several future values at once, typically for a predefined range of future time steps. For example, in a daily sales forecasting scenario, a single-step forecast would predict tomorrow’s sales based on today’s data. In contrast, a multi-horizon forecast might predict the sales…
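One way to produce several future values at once is the "direct multi-output" strategy. First, turn the series into windows of `n_lags` past values, each paired with the next `horizon` values. Then fit one model that maps a window to the whole horizon vector, and forecast every step in a single call. The sketch below assumes a linear least-squares model and a hypothetical, noise-free daily sales series with a linear trend. The helper names are illustrative. Real forecasting would use a richer model and held-out evaluation.

```python
import numpy as np

def make_windows(series, n_lags, horizon):
    # Pair each window of n_lags past values with the next `horizon` values.
    X, Y = [], []
    for t in range(n_lags, len(series) - horizon + 1):
        X.append(series[t - n_lags:t])
        Y.append(series[t:t + horizon])
    return np.array(X), np.array(Y)

def fit_direct_multi_output(series, n_lags, horizon):
    # One linear model whose output is the whole horizon vector.
    X, Y = make_windows(series, n_lags, horizon)
    X1 = np.hstack([np.ones((len(X), 1)), X])      # add an intercept column
    coef, *_ = np.linalg.lstsq(X1, Y, rcond=None)  # shape (n_lags + 1, horizon)
    return coef

def forecast(series, coef, n_lags):
    # Predict all `horizon` future values from the latest window in one shot.
    x = np.concatenate([[1.0], series[-n_lags:]])
    return x @ coef

# Hypothetical daily sales: 60 days of a noise-free upward trend.
daily_sales = 100.0 + 2.0 * np.arange(60)
coef = fit_direct_multi_output(daily_sales, n_lags=7, horizon=7)
next_week = forecast(daily_sales, coef, n_lags=7)
print(next_week)
```

The toy series is an exact linear trend, so the fitted model extends it exactly. On real data, the point is that all seven days come from one prediction. The alternative recursive strategy instead feeds each one-step forecast back in as input.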