-

CNN – What Is A CNN?

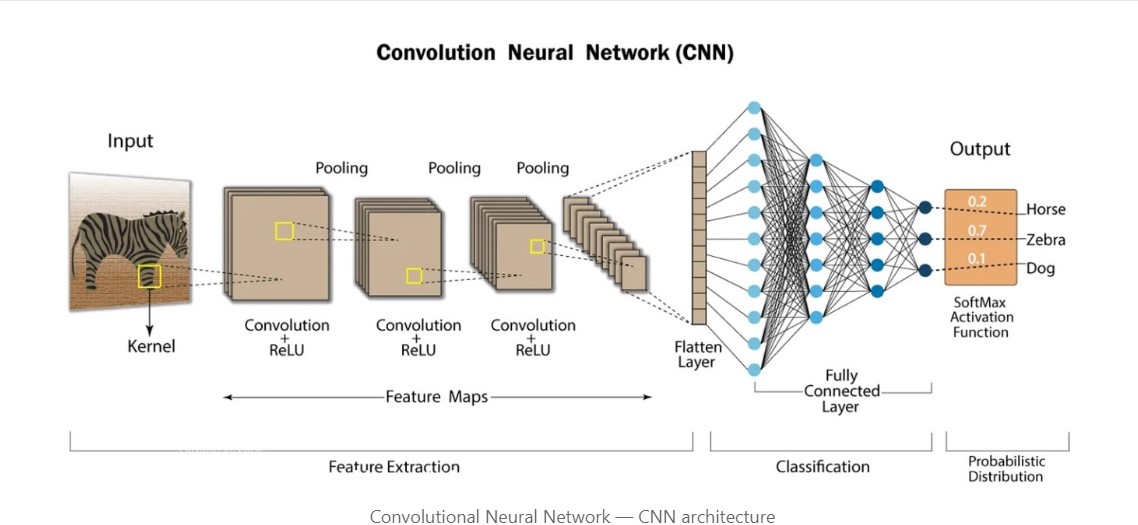

What Is A CNN? Syllabus: What is a CNN? Differences between CNNs and fully connected networks. Applications of CNNs. (1) What Is A CNN? A Convolutional Neural Network (CNN) is a type of deep learning model specifically designed to process and analyze grid-like data, such as images. It is widely used for tasks such as image recognition, object detection, and video analysis. (2) What Is The Difference Between A CNN And An ANN? The main difference between Convolutional Neural Networks (CNNs) and Artificial Neural Networks (ANNs, here meaning fully connected networks) lies in their structure and the types of tasks they excel at. Here’s a simple breakdown: (3) What
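To make the "grid-like data" point concrete, here is a minimal NumPy sketch of a single convolution filter sliding over an image, compared with the weight count a fully connected layer would need for the same input and output sizes. The random 28×28 image, the 3×3 edge-detecting kernel, and the helper name `conv2d_valid` are hypothetical choices for illustration; the comparison ignores biases and assumes the dense layer connects every pixel to every output position.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like most deep learning libraries, this is technically cross-correlation
    (the kernel is not flipped).
    """
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # The same kernel weights are reused at every position (weight sharing).
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

image = np.random.default_rng(0).random((28, 28))  # hypothetical 28x28 grayscale image
kernel = np.array([[1.0, 0.0, -1.0]] * 3)         # simple vertical-edge detector

feature_map = conv2d_valid(image, kernel)
conv_params = kernel.size                          # 9 weights, independent of image size
dense_params = image.size * feature_map.size       # one weight per (input pixel, output) pair

print(feature_map.shape)  # (26, 26)
print(conv_params)        # 9
print(dense_params)       # 784 * 676 = 529984
```

The gap in parameter counts comes from two properties of convolution: each output looks only at a small local patch, and the same kernel is shared across all positions.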

-

CNN – Convolutional Neural Networks.

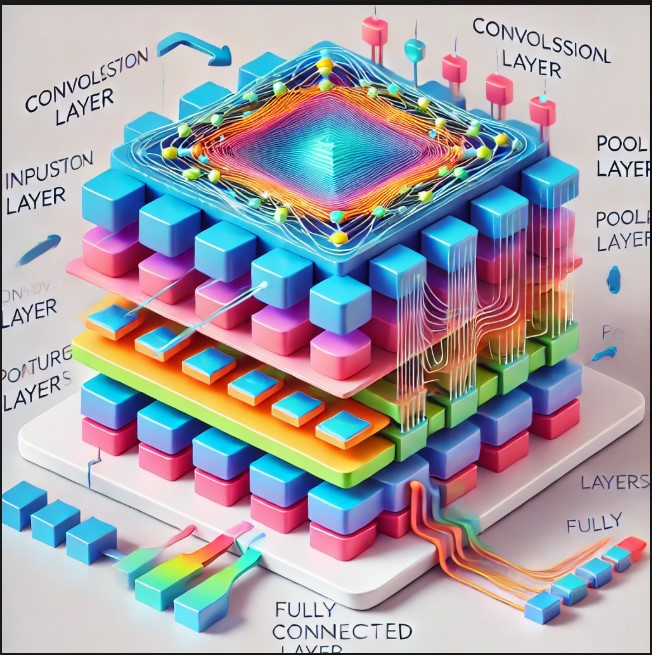

Convolutional Neural Networks Syllabus:

1. Fundamentals of CNNs
   - What are CNNs? Differences between CNNs and fully connected networks. Applications of CNNs.
   - Components of CNNs: convolution layers; filters (kernels) and their role; stride, padding, and their effects.
   - Activation Functions: ReLU, Sigmoid, Tanh, etc.
   - Pooling Layers: max pooling, average pooling, global pooling.
   - Fully Connected Layers: how they integrate features learned from convolutional layers.
2. Advanced Architectures and Concepts
   - Popular CNN Architectures: LeNet, AlexNet, VGG, ResNet, Inception, MobileNet, EfficientNet.
   - Residual Networks (ResNets): skip connections and their significance.
   - Inception Networks: factorized convolutions and mixed architecture.
   - Depthwise Separable Convolutions: efficiency in MobileNet and EfficientNet.
   - Dilated
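The effect of stride and padding listed in the syllabus follows the standard output-size formula, output = ⌊(n + 2p − k) / s⌋ + 1, for input size n, kernel size k, stride s, and padding p. The sketch below applies it and reuses it for a plain 2×2 max-pooling layer; the function names `conv_output_size` and `max_pool2d` are hypothetical helpers, not a library API.

```python
import numpy as np

def conv_output_size(n, k, s=1, p=0):
    """Spatial output size of a convolution or pooling layer along one dimension.

    n: input size, k: kernel (filter) size, s: stride, p: zero padding per side.
    """
    return (n + 2 * p - k) // s + 1

def max_pool2d(x, size=2, stride=2):
    """Max pooling over a 2D array (no padding): keep the largest value per window."""
    out_h = conv_output_size(x.shape[0], size, stride)
    out_w = conv_output_size(x.shape[1], size, stride)
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            window = x[r * stride:r * stride + size, c * stride:c * stride + size]
            out[r, c] = window.max()
    return out

print(conv_output_size(32, k=3))            # 30: no padding shrinks the map
print(conv_output_size(32, k=3, p=1))       # 32: padding of 1 keeps the size ("same")
print(conv_output_size(32, k=3, s=2, p=1))  # 16: stride 2 roughly halves it
print(max_pool2d(np.arange(16.0).reshape(4, 4)))  # values [[5, 7], [13, 15]]
```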

-

For A Batch Of 100 Records, Are The Records Passed All At Once Or One By One?

For A Batch Of 100 Records, Are The Records Passed All At Once Or One By One? Answer: For a batch of 100 records, all 100 records are passed through the neural network at the same time (in parallel), not one by one. This is made possible by vectorized operations: the batch is stacked into a single matrix, so each layer's computation becomes one matrix multiplication that modern hardware such as GPUs executes efficiently. Why Are Records Processed Simultaneously? How It Works Internally: Summary: How Individual Neuron Operations Happen: Yes, the operations we discussed (matrix multiplication, bias addition, and activation) apply to individual neurons as part of the
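A small NumPy sketch of the idea: one dense layer applied to a whole batch of 100 records in a single matrix multiplication gives exactly the same result as looping over the records one at a time. The layer size (4 inputs, 3 neurons), the random data, and the ReLU activation are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
X = rng.normal(size=(100, 4))  # hypothetical batch: 100 records, 4 features each
W = rng.normal(size=(4, 3))    # weights of a dense layer with 3 neurons
b = rng.normal(size=3)         # one bias per neuron

def relu(z):
    return np.maximum(z, 0.0)

# Whole batch at once: one matrix multiplication, bias broadcast to every row.
batch_out = relu(X @ W + b)    # shape (100, 3)

# The same computation, one record at a time.
loop_out = np.stack([relu(x @ W + b) for x in X])

# A single neuron j on a single record i: weighted sum + bias + activation.
i, j = 0, 1
neuron = max(float(np.dot(X[i], W[:, j]) + b[j]), 0.0)

print(batch_out.shape)                   # (100, 3)
print(np.allclose(batch_out, loop_out))  # True
print(np.isclose(neuron, batch_out[i, j]))  # True
```

The results match; the batched version is faster in practice because the hardware can process all rows of the matrix product in parallel.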

-

When Are The Weights Of A Neural Network Updated?

When Are The Weights Of A Neural Network Updated? Answer: The weights in a neural network are typically updated after each batch during training (mini-batch gradient descent), not once per epoch. Exactly when depends on the type of gradient descent being used. Types Of Gradient Descent And Weight Updates:
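With N records and batch size B, batch gradient descent makes 1 update per epoch, mini-batch gradient descent makes ⌈N / B⌉, and stochastic gradient descent (B = 1) makes N. The sketch below shows where the update happens in a mini-batch loop, using a one-feature linear regression trained with mean squared error; the synthetic data (y = 3x + 2 plus noise), learning rate, and batch size are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N, batch_size, epochs, lr = 1000, 100, 20, 0.1

# Hypothetical data from y = 3x + 2 plus a little noise.
X = rng.uniform(-1, 1, size=N)
y = 3 * X + 2 + rng.normal(scale=0.1, size=N)

w, b = 0.0, 0.0
updates = 0
for epoch in range(epochs):
    order = rng.permutation(N)              # reshuffle the records every epoch
    for start in range(0, N, batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]
        err = (w * xb + b) - yb             # prediction error on this batch
        grad_w = 2 * np.mean(err * xb)      # d(MSE)/dw
        grad_b = 2 * np.mean(err)           # d(MSE)/db
        w -= lr * grad_w                    # weights change after EVERY batch...
        b -= lr * grad_b
        updates += 1
    # ...not only once at the end of the epoch.

print(updates)                   # 20 epochs * 10 batches = 200
print(round(w, 2), round(b, 2))  # close to 3 and 2
```

Setting `batch_size = N` would turn this into batch gradient descent (one update per epoch), and `batch_size = 1` into stochastic gradient descent.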

-

What Is An Epoch?

What Is An Epoch? (1) What Is An Epoch? An epoch in deep learning is one complete pass through the entire training dataset by the neural network during training. Understanding epochs is key to understanding how data is processed and how the model learns when training machine learning models, especially neural networks. (2) Key Points About Epochs: (3) Examples Of Epochs (4) Why Are Multiple Epochs Needed? (5) Choosing The Right Number Of Epochs (6) Visualization
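The relationship between epochs, batches, and iterations is simple arithmetic: one epoch takes ⌈N / B⌉ iterations for N records and batch size B, so the total is that number times the epoch count. The sketch below computes this (the helper name `training_schedule` is hypothetical) and checks that a shuffled epoch visits every record exactly once.

```python
import math
import random

def training_schedule(num_samples, batch_size, epochs):
    """Return (iterations per epoch, total iterations) for mini-batch training."""
    iters_per_epoch = math.ceil(num_samples / batch_size)  # the last batch may be smaller
    return iters_per_epoch, iters_per_epoch * epochs

print(training_schedule(1000, 100, 5))  # (10, 50)
print(training_schedule(1050, 100, 5))  # (11, 55): the 11th batch holds 50 records

# Within one epoch, every record is visited exactly once (in shuffled order).
records = list(range(1050))
random.seed(0)
random.shuffle(records)
batches = [records[i:i + 100] for i in range(0, len(records), 100)]
seen = sorted(r for batch in batches for r in batch)
print(len(batches), seen == list(range(1050)))  # 11 True
```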

-

What Are Hierarchical Representations In Deep Learning?

What Are Hierarchical Representations In Deep Learning? Table Of Contents: What Is Hierarchical Feature Representation? Key Concepts Of Hierarchical Representations. (1) What Is Hierarchical Feature Representation? Hierarchical representations are the layered structure of features or patterns that a machine learning model, particularly a deep learning model, learns from input data. These representations progress from simple, low-level features (such as edges) in early layers to more complex, high-level abstractions in deeper layers of a neural network. (2) Key Concepts Of Hierarchical Representation. (3) Benefits Of Hierarchical Representation.
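One way to see why deeper layers can capture higher-level features is the receptive field: the region of the input that a single unit can "see" grows with every layer, so early units cover small patches (edge-sized) while deeper units cover larger regions (part- or object-sized). This is a sketch under the standard recurrence r_l = r_{l−1} + (k_l − 1) · j_{l−1}, j_l = j_{l−1} · s_l, for kernel size k and stride s; the layer stack below is a hypothetical example, and a large receptive field alone does not guarantee that a layer learns high-level features.

```python
def receptive_fields(layers):
    """Receptive field (in input pixels) of one unit after each conv/pool layer.

    layers: list of (kernel_size, stride) pairs, from input to output.
    r is the receptive field; j is the cumulative stride ("jump") between
    adjacent units of the current layer, measured in input pixels.
    """
    r, j = 1, 1
    sizes = []
    for k, s in layers:
        r = r + (k - 1) * j
        j = j * s
        sizes.append(r)
    return sizes

# Hypothetical stack: three 3x3 convs (stride 1), each followed by 2x2 max pooling (stride 2).
stack = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1), (2, 2)]
print(receptive_fields(stack))  # [3, 4, 8, 10, 18, 22]
```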

-

Probability Theory

Probability Theory Table Of Contents: Probability Of ‘A’ And ‘B’ Happening Together. Probability Of ‘A’ Given ‘B’ Has Already Happened. (1) Probability Of ‘A’ And ‘B’ Happening Together. For independent events, P(A∩B) = P(A) × P(B). Why can we simply multiply the individual probabilities when there is no relationship between A and B? Because we are considering two events, we must count all possible outcomes of both events together. If A occurs in a of the m equally likely outcomes of the first experiment and B occurs in b of the n outcomes of the second, then A and B occur together in a × b of the m × n combined outcomes, so P(A∩B) = (a × b)/(m × n) = (a/m) × (b/n) = P(A) × P(B). For example, rolling two dice together gives 6 × 6 = 36 possible outcomes, and tossing two coins together gives 2 × 2 = 4 possible outcomes. (2) Probability Of ‘A’ Given ‘B’ Has Already Happened. (3)
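The multiplication rule can be checked by brute force: enumerate the 36 outcomes of two dice, count the outcomes where each event holds, and compare. The events chosen here (first die shows 6, second die is even) are hypothetical examples; the last line also applies the standard conditional-probability formula P(A | B) = P(A∩B) / P(B), which for independent events equals P(A).

```python
from fractions import Fraction
from itertools import product

# Sample space for rolling two fair dice: 6 * 6 = 36 equally likely outcomes.
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event (a predicate on outcomes), all outcomes equally likely."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

A = lambda o: o[0] == 6       # first die shows 6
B = lambda o: o[1] % 2 == 0   # second die shows an even number
A_and_B = lambda o: A(o) and B(o)

print(len(outcomes))                       # 36
print(len(list(product("HT", repeat=2))))  # 4 outcomes for two coins
print(prob(A), prob(B), prob(A_and_B))     # 1/6 1/2 1/12
print(prob(A_and_B) == prob(A) * prob(B))  # True: A and B are independent

# Conditional probability: P(A | B) = P(A ∩ B) / P(B)
print(prob(A_and_B) / prob(B))             # 1/6, equal to P(A) because of independence
```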

-

Parametric & Non-Parametric Models

Parametric Vs Non-Parametric Models Table Of Contents: Parametric Models (Key Characteristics, Examples, Advantages, Disadvantages); Non-Parametric Models (Key Characteristics, Examples, Advantages, Disadvantages). Parametric Model: A parametric model assumes a specific functional form for the relationship between the input features and the output. These models have a fixed number of parameters, whose values are learned during training. Key Features – Parametric Model. Examples – Parametric Model. Advantages & Disadvantages – Parametric Model. Non-Parametric Model: A non-parametric model makes no strong assumptions about the form of the mapping function. Instead, it learns the structure directly from the data,
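A plain-Python sketch of the contrast: a least-squares line (parametric) is fully described by two numbers no matter how much data it sees, while k-nearest-neighbours regression (non-parametric) has to keep every training point to make a prediction. The data on the line y = 2x + 1, the value k = 3, and the helper names `fit_line` and `knn_predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Parametric: ordinary least squares for y = a*x + b. Always 2 parameters."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx

def knn_predict(train, x, k=3):
    """Non-parametric: k-nearest-neighbours regression keeps ALL training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

xs = [float(i) for i in range(10)]
ys = [2 * x + 1 for x in xs]  # hypothetical data on the line y = 2x + 1
train = list(zip(xs, ys))

a, b = fit_line(xs, ys)
print(a, b)                     # 2.0 1.0: the whole model is 2 numbers
print(knn_predict(train, 4.0))  # 9.0: average of the 3 nearest stored points
print(len(train))               # 10: the k-NN "model" grows with the data
```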

-

Topics To Learn In Support Vector Machines.

Topics To Learn In SVM

Table Of Contents:

- Introduction to SVM
  - What is SVM?
  - Use cases and applications of SVM
  - Strengths and weaknesses of SVM
- Mathematical Foundations
  - Linear separability
  - Concept of a hyperplane
  - Margin and margin maximization
  - Support vectors and their role
  - Functional and geometric margins
- SVM for Linearly Separable Data
  - Objective function for linear SVM
  - Hard margin SVM
  - Optimization problem formulation
  - Lagrange multipliers and the dual problem
- SVM for Non-Linearly Separable Data
  - Soft margin SVM
  - Slack variables and their significance
  - Trade-off parameter C: bias-variance tradeoff
  - Practical scenarios for soft margin SVM
- Kernel Trick
  - What is the kernel trick?
  - Common
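To connect slack variables and the trade-off parameter C, here is a plain-Python sketch that evaluates the standard primal soft-margin objective ½‖w‖² + C Σ ξᵢ, with slack ξᵢ = max(0, 1 − yᵢ(w·xᵢ + b)), for a fixed candidate hyperplane. It does not train an SVM; the four points, the hyperplane (w, b), and the C values are hypothetical. A large C makes every unit of slack expensive (pushing toward fewer margin violations and lower bias, at the risk of higher variance), while a small C tolerates violations in exchange for a wider margin.

```python
def soft_margin_objective(w, b, X, y, C):
    """Primal soft-margin SVM objective: 0.5*||w||^2 + C * sum(slack_i).

    Slack (hinge loss) for point i: xi_i = max(0, 1 - y_i * (w . x_i + b)).
    xi_i = 0: on or outside the margin; 0 < xi_i <= 1: inside the margin;
    xi_i > 1: misclassified.
    """
    slacks = []
    for xi, yi in zip(X, y):
        score = sum(wj * xj for wj, xj in zip(w, xi)) + b
        slacks.append(max(0.0, 1.0 - yi * score))
    reg = 0.5 * sum(wj * wj for wj in w)
    return reg + C * sum(slacks), slacks

# Hypothetical 2-D points with labels in {-1, +1} and a candidate hyperplane.
X = [(2.0, 2.0), (0.5, 0.5), (-2.0, -1.0), (0.2, 0.0)]
y = [1, 1, -1, -1]
w, b = (1.0, 1.0), -0.5

for C in (0.1, 1.0, 10.0):
    obj, slacks = soft_margin_objective(w, b, X, y, C)
    # slacks are [0.0, 0.5, 0.0, 0.7] (rounded) for every C; only their weight changes
    print(C, round(obj, 2), [round(s, 2) for s in slacks])
```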

-

Hyperparameters In Decision Trees.

Hyperparameters In Decision Trees

Table Of Contents:

- Maximum Depth (max_depth)
- Minimum Samples Split (min_samples_split)
- Minimum Samples per Leaf (min_samples_leaf)
- Maximum Features (max_features)
- Maximum Leaf Nodes (max_leaf_nodes)
- Minimum Impurity Decrease (min_impurity_decrease)
- Split Criterion (criterion)
- Random State (random_state)
- Class Weight (class_weight)
- Presort (presort; deprecated in scikit-learn 0.22 and removed in 0.24)
- Splitter (splitter)

(1) Maximum Depth (max_depth)

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text
from sklearn.tree import plot_tree
import matplotlib.pyplot as plt

# Load the Iris dataset
iris = load_iris()
X, y = iris.data, iris.target

# Build a decision tree with max_depth=3
clf = DecisionTreeClassifier(max_depth=3, random_state=42)
clf.fit(X, y)

# Plot the decision tree
plt.figure(figsize=(12, 8))
plot_tree(clf, feature_names=iris.feature_names)
plt.show()
```
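Several of the hyperparameters above limit how large the tree can grow. As a rough sketch, the snippet below fits the same Iris data with a few of them and reports the resulting depth and number of leaves via `get_depth()` and `get_n_leaves()` (available in scikit-learn 0.21 and later). The specific values (`max_depth=3`, `min_samples_leaf=10`, `max_leaf_nodes=4`) are arbitrary illustrative choices, not recommended settings.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Same data, different limits on tree growth (illustrative values).
configs = {
    "unrestricted": {},
    "max_depth=3": {"max_depth": 3},
    "min_samples_leaf=10": {"min_samples_leaf": 10},
    "max_leaf_nodes=4": {"max_leaf_nodes": 4},
}

results = {}
for name, params in configs.items():
    clf = DecisionTreeClassifier(random_state=42, **params).fit(X, y)
    results[name] = (clf.get_depth(), clf.get_n_leaves())
    print(f"{name:22s} depth={clf.get_depth()} leaves={clf.get_n_leaves()}")
```

Each constraint trades some training-set fit for a smaller, usually less overfit tree; which one to use is typically decided with cross-validation.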